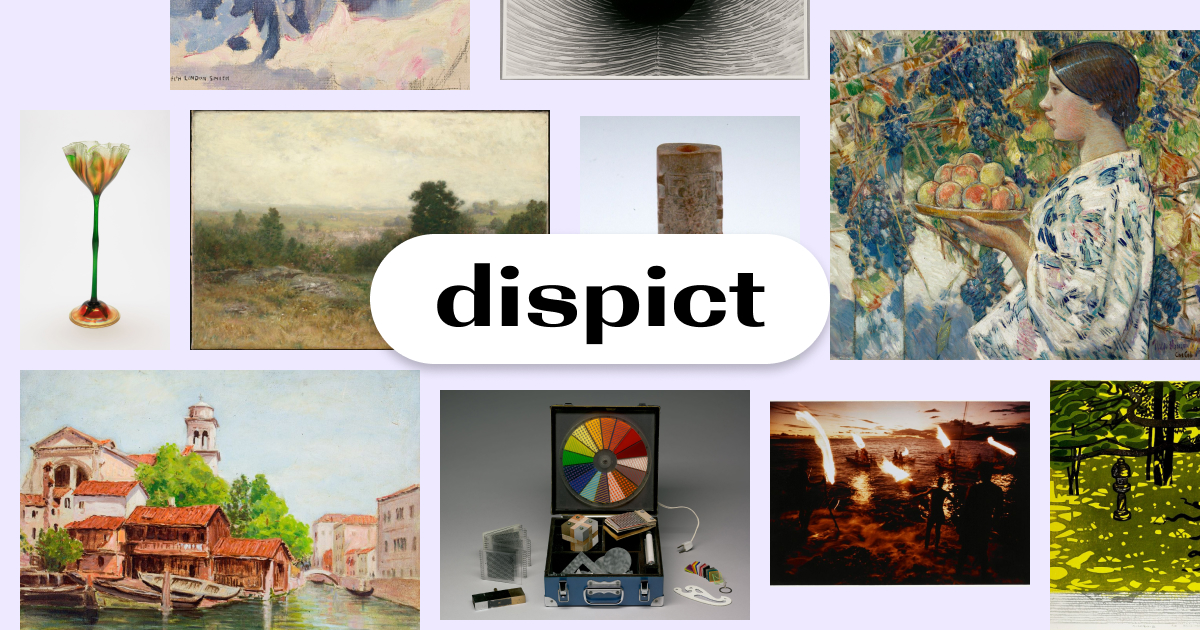

Design a growing artistic exhibit of your own making, with semantic search powered by OpenAI CLIP. Bring your own labels and context.

dispict.com greets you with a blank canvas. You begin typing. Your writing becomes a label, and related artworks appear spatially around the text you wrote. As you pan and zoom around the gallery, you can try other labels to see how the artwork shifts in aesthetic quality.

Focus on a single work to see its context: artist, setting, history, and narrative descriptions. Crucially, this lets you learn the story behind the art being presented.

There's currently a lot of excitement about computers helping creatives find inspiration by generating original art pieces from text prompts (1, 2, 3). But these lose the unique, genuine part of walking through an art museum where every work has been lovingly created by humans, and the viewer is surrounded by insight and intention. What if computers could connect us with masterpieces made by artists of the past?

The Harvard Art Museums' online collection is huge, containing over 200,000 digitized works. This is far more than can be easily taken in by a single person. So instead, we apply computation to what it's good at: finding patterns and connections.

Creativity and curiosity require associative thinking. Just as the technological innovations of past centuries changed the aesthetic character of fine art from literal portraiture to more flexible modes of self-expression, Dispict aims to be a technology that explores the honest, intimate relationship between the creative process and artistic discovery.

Dispict uses real-time machine learning. It's built on contrastive language-image pretraining (CLIP) and nearest-neighbor search, served from Python (on a Modal endpoint) with a handcrafted Svelte frontend.
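To illustrate the search step, here is a minimal brute-force sketch in plain Python. It assumes CLIP has already mapped each artwork image and the typed label into the same embedding space, and it ranks artworks by cosine similarity to the label. The function names and the `embeddings` dictionary are hypothetical. This is not Dispict's actual backend code, which isn't reproduced in this README.

```python
import heapq
import math
from typing import Mapping, Sequence


def normalize(vec: Sequence[float]) -> list[float]:
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def nearest_neighbors(
    query: Sequence[float],
    embeddings: Mapping[str, Sequence[float]],
    k: int = 5,
) -> list[tuple[str, float]]:
    """Return up to k (artwork_id, similarity) pairs, most similar first.

    `query` stands in for the CLIP embedding of the typed label and
    `embeddings` maps hypothetical artwork IDs to CLIP image embeddings.
    This is an exhaustive (brute-force) scan, not an approximate index.
    """
    q = normalize(query)
    scored = (
        (artwork_id, sum(a * b for a, b in zip(q, normalize(vec))))
        for artwork_id, vec in embeddings.items()
    )
    # heapq.nlargest keeps only the k best scores instead of sorting everything.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy 3-dimensional vectors for illustration; real CLIP embeddings are much larger.
    toy_embeddings = {
        "still-life": [0.9, 0.1, 0.0],
        "portrait": [0.1, 0.9, 0.2],
        "landscape": [0.0, 0.3, 0.95],
    }
    for artwork_id, score in nearest_neighbors([1.0, 0.2, 0.0], toy_embeddings, k=2):
        print(artwork_id, round(score, 3))
    # Prints "still-life" first, then "portrait".
```

At the scale of the Harvard collection, a real deployment would more likely vectorize this (for example with matrix products over a precomputed, normalized embedding array) or use an approximate nearest-neighbor index instead of looping in pure Python.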

If you want to hack on dispict yourself, you can run the frontend development server locally using Node v16 or higher:

```shell
npm install
npm run dev
```

This will automatically connect to the serverless backend recommendation system hosted on Modal. If you also want to modify the backend, you need to create a Modal account, install Python 3.10+, and follow these steps:

- Run the Jupyter notebooks `notebooks/load_data.ipynb` and `notebooks/data_cleaning.ipynb` to download data from the Harvard Art Museums. This will produce two files named `data/artmuseums[-clean].json`.
- Run `SKIP_WEB=1 modal run main.py` to spawn a parallel Modal job that downloads and embeds all images in the dataset using CLIP, saving the results to `data/embeddings.hdf5`.
- Run `modal deploy main.py` to create the web endpoint, which then gives you a public URL such as `https://ekzhang--dispict-suggestions.modal.run`.
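The embedding step fans work out across many parallel Modal workers. The sketch below mimics that shape locally with a standard-library thread pool; it is a stand-in, not Modal's API and not the code in `main.py`. `chunked`, `map_in_batches`, and the `process_batch` callback are hypothetical names. In a pipeline like the one described, the callback would download a batch of images and return their CLIP embeddings.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def chunked(items: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def map_in_batches(
    items: Sequence[T],
    process_batch: Callable[[Sequence[T]], list[R]],
    batch_size: int = 64,
    max_workers: int = 8,
) -> list[R]:
    """Run `process_batch` on batches in parallel and flatten results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in submission order, so outputs line up with inputs.
        results = pool.map(process_batch, chunked(items, batch_size))
        return [r for batch in results for r in batch]
```

Batching amortizes per-call overhead (model loading, network round trips) over many images, while preserving order makes it easy to store each embedding alongside its artwork record.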

You can start sending requests to the URL to get recommendations. For example, `GET /?text=apple` will find artwork related to apples, such as the image shown below.
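The same request can be made from a script. This is a minimal sketch using only Python's standard library; `build_query_url` and `fetch_suggestions` are hypothetical helper names, and the JSON decoding assumes the endpoint responds with JSON, which this README doesn't specify.

```python
import json
import urllib.parse
import urllib.request

# Example URL from the deployment step; replace it with your own endpoint.
DEFAULT_BASE_URL = "https://ekzhang--dispict-suggestions.modal.run"


def build_query_url(base_url: str, text: str) -> str:
    """Build a URL like `<base_url>/?text=apple`, percent-encoding the label."""
    query = urllib.parse.urlencode({"text": text})
    return f"{base_url.rstrip('/')}/?{query}"


def fetch_suggestions(text: str, base_url: str = DEFAULT_BASE_URL, timeout: float = 30.0):
    """Send the GET request and decode the body, assuming it is JSON."""
    url = build_query_url(base_url, text)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Spaces are encoded as "+", e.g. ".../?text=still+life".
    print(build_query_url(DEFAULT_BASE_URL, "still life"))
    # fetch_suggestions("apple")  # needs network access and a live endpoint
```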

To point the web application at your new backend URL, you can set an environment variable to override the default backend.

```shell
VITE_APP_API_URL=https://[your-app-endpoint].modal.run npm run dev
```

Created by Eric Zhang (@ekzhang1) for Neuroaesthetics at Harvard. All code is licensed under MIT, and data is generously provided by the Harvard Art Museums public access collection.

I learned a lot from Jono Brandel's Curaturae when designing this.