This article is part of a series that discusses disaster recovery (DR) in Google Cloud. This part discusses the process for architecting workloads using Google Cloud and building blocks that are resilient to cloud infrastructure outages.

The series consists of these parts:

- Disaster recovery planning guide

- Disaster recovery building blocks

- Disaster recovery scenarios for data

- Disaster recovery scenarios for applications

- Architecting disaster recovery for locality-restricted workloads

- Disaster recovery use cases: locality-restricted data analytic applications

- Architecting disaster recovery for cloud infrastructure outages (this document)

Introduction

As enterprises move workloads onto the public cloud, they need to translate their understanding of building resilient on-premises systems to the hyperscale infrastructure of cloud providers like Google Cloud. This article maps industry-standard disaster recovery concepts, such as RTO (Recovery Time Objective) and RPO (Recovery Point Objective), to the Google Cloud infrastructure.

The guidance in this document follows one of Google's key principles for achieving extremely high service availability: plan for failure. While Google Cloud provides extremely reliable service, disasters will strike (natural disasters, fiber cuts, and complex, unpredictable infrastructure failures), and these disasters cause outages. Planning for outages enables Google Cloud customers to build applications that perform predictably through these inevitable events, by making use of Google Cloud products with "built-in" DR mechanisms.

Disaster recovery is a broad topic that covers much more than infrastructure failures, including software bugs and data corruption, and you should have a comprehensive end-to-end plan. However, this article focuses on one part of an overall DR plan: how to design applications that are resilient to cloud infrastructure outages. Specifically, this article walks through:

- The Google Cloud infrastructure, how disaster events manifest as Google Cloud outages, and how Google Cloud is architected to minimize the frequency and scope of outages.

- An architecture planning guide that provides a framework for categorizing and designing applications based on the desired reliability outcomes.

- A detailed list of select Google Cloud products that offer built-in DR capabilities which you may want to use in your application.

For further details on general DR planning and on using Google Cloud as a component in your on-premises DR strategy, see the disaster recovery planning guide. Also, while high availability is closely related to disaster recovery, it is not covered in this article. For further details on architecting for high availability, see the Google Cloud architecture framework.

A note on terminology: this article uses availability to mean the ability of a product to be meaningfully accessed and used over time, while reliability refers to a set of attributes that includes availability but also durability and correctness.

How Google Cloud is designed for resilience

Google data centers

Traditional data centers rely on maximizing availability of individual components. In the cloud, scale allows operators like Google to spread services across many components using virtualization technologies and thus exceed traditional component reliability. This means you can shift your reliability architecture mindset away from the myriad details you once worried about on-premises. Rather than worrying about the various failure modes of components, such as cooling and power delivery, you can plan around Google Cloud products and their stated reliability metrics. These metrics reflect the aggregate outage risk of the entire underlying infrastructure. This frees you to focus much more on application design, deployment, and operations rather than infrastructure management.

Google designs its infrastructure to meet aggressive availability targets based on our extensive experience building and running modern data centers. Google is a world leader in data center design. From power to cooling to networks, each data center technology has its own redundancies and mitigations, including failure mode and effects analysis (FMEA) plans. Google's data centers are built in a way that balances these many different risks and presents to customers a consistent expected level of availability for Google Cloud products. Google uses its experience to model the availability of the overall physical and logical system architecture to ensure that the data center design meets expectations. Google's engineers go to great lengths operationally to help ensure those expectations are met. Actual measured availability normally exceeds our design targets by a comfortable margin.

By distilling all of these data center risks and mitigations into user-facing products, Google Cloud relieves you from those design and operational responsibilities. Instead, you can focus on the reliability designed into Google Cloud regions and zones.

Regions and zones

Google Cloud products are provided across a large number of regions and zones. Regions are physically independent geographic areas that contain three or more zones. Zones represent groups of physical computing resources within a region that have a high degree of independence from one another in terms of physical and logical infrastructure. They provide high-bandwidth, low-latency network connections to other zones in the same region. For example, the asia-northeast1 region in Japan contains three zones: asia-northeast1-a, asia-northeast1-b, and asia-northeast1-c.

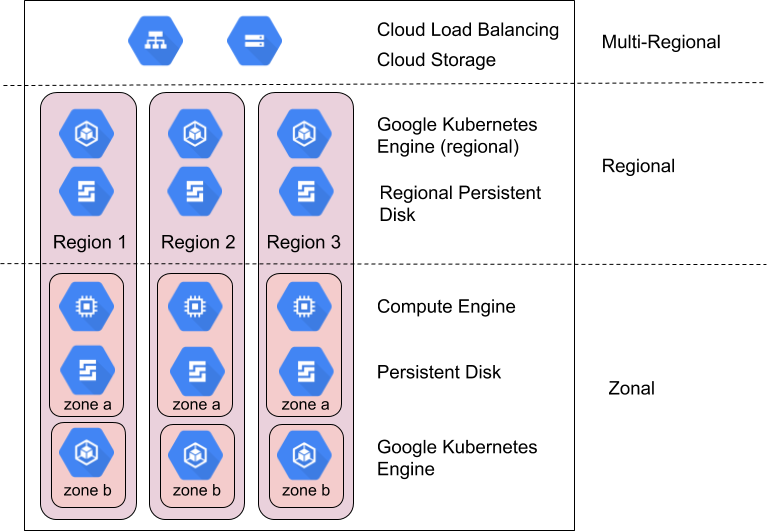

Google Cloud products are divided into zonal resources, regional resources, or multi-regional resources.

Zonal resources are hosted within a single zone. A service interruption in that zone can affect all of the resources in that zone. For example, a Compute Engine instance runs in a single, specified zone; if a hardware failure interrupts service in that zone, that Compute Engine instance is unavailable for the duration of the interruption.

Regional resources are redundantly deployed across multiple zones within a region. This gives them higher reliability relative to zonal resources.

Multi-regional resources are distributed within and across regions. In general, multi-regional resources have higher reliability than regional resources. However, at this level, products must balance availability, performance, and resource efficiency. As a result, it is important to understand the tradeoffs made by each multi-regional product you decide to use. These tradeoffs are documented on a product-specific basis later in this document.

How to leverage zones and regions to achieve reliability

Google SREs manage and scale highly reliable, global user products like Gmail and Search through a variety of techniques and technologies that seamlessly leverage computing infrastructure around the world. This includes redirecting traffic away from unavailable locations using global load balancing, running multiple replicas in many locations around the planet, and replicating data across locations. These same capabilities are available to Google Cloud customers through products like Cloud Load Balancing, Google Kubernetes Engine (GKE), and Spanner.

Google Cloud generally designs products to deliver the following levels of availability for zones and regions:

| Resource | Examples | Availability design goal | Implied downtime |
|---|---|---|---|
| Zonal | Compute Engine, Persistent Disk | 99.9% | 8.75 hours / year |
| Regional | Regional Cloud Storage, Replicated Persistent Disk, Regional GKE | 99.99% | 52 minutes / year |
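The "Implied downtime" column follows directly from the design goal. The following minimal Python sketch assumes a 365-day year with no leap days. It reproduces the figures: the exact values are about 8.76 hours and 52.56 minutes, and the table shows them approximately.

```python
HOURS_PER_YEAR = 365 * 24  # ignores leap years


def implied_downtime_hours(availability_pct: float) -> float:
    """Yearly downtime permitted by an availability design goal given in percent."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return (1.0 - availability_pct / 100.0) * HOURS_PER_YEAR


# Design goals taken from the zonal and regional rows of the table.
for resource, goal in [("Zonal", 99.9), ("Regional", 99.99)]:
    hours = implied_downtime_hours(goal)
    print(f"{resource}: {goal}% allows {hours:.2f} hours ({hours * 60:.1f} minutes) per year")
```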

Compare the Google Cloud availability design goals against your acceptable level of downtime to identify the appropriate Google Cloud resources. While traditional designs focus on improving component-level availability to improve the resulting application availability, cloud models focus instead on composition of components to achieve this goal. Many products within Google Cloud use this technique. For example, Spanner offers a multi-region database that composes multiple regions in order to deliver 99.999% availability.

Composition is important because without it, your application availability cannot exceed that of the Google Cloud products you use; in fact, unless your application never fails, it will have lower availability than the underlying Google Cloud products. The remainder of this section shows generally how you can use a composition of zonal and regional products to achieve higher application availability than a single zone or region would provide. The next section gives a practical guide for applying these principles to your applications.
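The following minimal Python sketch makes the composition argument concrete. It applies the standard reliability-block formulas:

- Components that must all be up (serial dependencies) multiply their availabilities.
- Redundant replicas (parallel composition) fail only when every replica fails.

The sketch assumes that zone failures are independent. The zone-outage discussion that follows explains that this is only approximately true. The 99.95% application availability is a hypothetical figure.

```python
from functools import reduce


def serial(*availabilities: float) -> float:
    """Every component must be up (hard dependencies): multiply availabilities."""
    return reduce(lambda acc, a: acc * a, availabilities, 1.0)


def parallel(*availabilities: float) -> float:
    """Any one replica suffices: the system is down only if every replica is down.

    Assumes replica failures are independent, which real zones only approximate.
    """
    all_down = reduce(lambda acc, a: acc * (1.0 - a), availabilities, 1.0)
    return 1.0 - all_down


zone = 0.999   # zonal design goal from the table above
app = 0.9995   # hypothetical availability of your own application code

single_zone = serial(zone, app)
two_zones = serial(parallel(zone, zone), app)
print(f"single zone: {single_zone:.7f}")
print(f"two zones:   {two_zones:.7f}")
```

Running in two zones raises the infrastructure term from 99.9% to 99.9999%. The composed result still stays below the application's own 99.95%. Composition raises the ceiling, but your own code still caps it.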

Planning for zone outage scopes

Infrastructure failures usually cause service outages in a single zone. Within a region, zones are designed to minimize the risk of correlated failures with other zones, and a service interruption in one zone would usually not affect service from another zone in the same region. An outage scoped to a zone doesn't necessarily mean that the entire zone is unavailable; it only defines the boundary of the incident. It is possible for a zone outage to have no tangible effect on your particular resources in that zone.

Although it is rarer, it's also critical to note that multiple zones within a single region will eventually experience a correlated outage. When two or more zones experience an outage, the regional outage scope strategy below applies.

Regional resources are designed to be resistant to zone outages by delivering service from a composition of multiple zones. If one of the zones backing a regional resource is interrupted, the resource automatically makes itself available from another zone. Carefully check the product capability description in the appendix for further details.

Google Cloud offers only a few zonal resources, namely Compute Engine virtual machines (VMs) and Persistent Disk. If you plan to use zonal resources, you'll need to perform your own resource composition by designing, building, and testing failover and recovery between zonal resources located in multiple zones. Some strategies include:

- Use Cloud Load Balancing to route traffic quickly to VMs in another zone when a health check determines that a zone is experiencing issues.

- Use Compute Engine instance templates and/or managed instance groups to run and scale identical VM instances in multiple zones.

- Use a regional Persistent Disk to synchronously replicate data to another zone in a region. See High availability options using regional PDs for more details.
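The following minimal Python sketch shows the health-check-driven routing strategy in miniature. It is not the Cloud Load Balancing API. `ZoneBackend` and `route_weights` are hypothetical names. They model how traffic moves away from a zone once health checks mark it unhealthy, with traffic split across the remaining zones in proportion to each zone's serving capacity.

```python
from dataclasses import dataclass


@dataclass
class ZoneBackend:
    zone: str
    healthy: bool   # outcome of recent health checks (hypothetical field)
    capacity: int   # requests per second this zone's VMs can serve (hypothetical)


def route_weights(backends: list[ZoneBackend]) -> dict[str, float]:
    """Split traffic across healthy zones in proportion to their capacity.

    Unhealthy zones get a weight of 0, so their share moves to the others.
    """
    usable = [b for b in backends if b.healthy and b.capacity > 0]
    total = sum(b.capacity for b in usable)
    if total == 0:
        raise RuntimeError("no healthy zone left: treat this as a regional outage")
    return {
        b.zone: (b.capacity / total if b.healthy and b.capacity > 0 else 0.0)
        for b in backends
    }


backends = [
    ZoneBackend("asia-northeast1-a", healthy=False, capacity=100),
    ZoneBackend("asia-northeast1-b", healthy=True, capacity=100),
    ZoneBackend("asia-northeast1-c", healthy=True, capacity=100),
]
print(route_weights(backends))
# → {'asia-northeast1-a': 0.0, 'asia-northeast1-b': 0.5, 'asia-northeast1-c': 0.5}
```

Weighting by capacity highlights a design point: the surviving zones help only if they can absorb the failed zone's share. Size managed instance groups with that headroom in mind.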

Planning for regional outage scopes

A regional outage is a service interruption affecting more than one zone in a single region. These are larger scale, less frequent outages and can be caused by natural disasters or large scale infrastructure failures.

Even for a regional product that is designed to provide 99.99% availability, that design goal still allows nearly an hour of downtime per year. Therefore, your critical applications may need a multi-region DR plan if that much downtime is unacceptable.

Multi-regional resources are designed to be resistant to region outages by delivering service from multiple regions. As described above, multi-region products make tradeoffs among latency, consistency, and cost. The most common tradeoff is between synchronous and asynchronous data replication. Asynchronous replication offers lower latency but risks data loss during an outage. So, it is important to check the product capability description in the appendix for further details.

If you want to use regional resources and remain resilient to regional outages, then you must perform your own resource composition by designing, building, and testing failover and recovery between regional resources located in multiple regions. In addition to the zonal strategies above, which you can apply across regions as well, consider:

- Regional resources should replicate data to a secondary region, to a multi-regional storage option such as Cloud Storage, or a hybrid cloud option such as GKE Enterprise.

- After you have a regional outage mitigation in place, test it regularly. There are few things worse than thinking you're resistant to a single-region outage, only to find that this isn't the case when it happens for real.

Google Cloud resilience and availability approach

Google Cloud regularly beats its availability design targets, but you should not assume that this strong past performance is the minimum availability you can design for. Instead, you should select Google Cloud dependencies whose designed-for targets exceed your application's intended reliability, such that your application downtime plus the Google Cloud downtime delivers the outcome you are seeking.
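One way to apply this guidance is to treat your availability target as a yearly downtime budget. Subtract what your dependencies' design goals already consume, and the rest is left for your application. The following Python sketch assumes, conservatively, that dependency outages never overlap, so their downtimes add up. The 99.95% target in the example is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_minutes(availability: float) -> float:
    """Yearly downtime implied by an availability expressed as a fraction."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def remaining_app_budget(target: float, dependency_goals: list[float]) -> float:
    """Minutes per year left for your own application's downtime.

    Conservatively assumes dependency outages never overlap, so their
    downtimes simply add up. A negative result means the chosen dependencies
    cannot meet the target even if your application never fails.
    """
    consumed = sum(downtime_minutes(goal) for goal in dependency_goals)
    return downtime_minutes(target) - consumed


# Hypothetical 99.95% target on two regional dependencies (99.99% each).
print(f"{remaining_app_budget(0.9995, [0.9999, 0.9999]):.1f} minutes left")
# The same target on a single zonal (99.9%) dependency leaves no budget at all.
print(f"{remaining_app_budget(0.9995, [0.999]):.1f} minutes left")
```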

A well-designed system can answer the question: "What happens when a zone or region has a 1-, 5-, 10-, or 30-minute outage?" This should be considered at many layers, including:

- What will my customers experience during an outage?

- How will I detect that an outage is happening?

- What happens to my application during an outage?

- What happens to my data during an outage?

- What happens to my other applications during an outage, because of cross-dependencies?

- What do I need to do in order to recover after an outage is resolved? Who does it?

- Who do I need to notify about an outage, within what time period?

Step-by-step guide to designing disaster recovery for applications in Google Cloud

The previous sections covered how Google builds cloud infrastructure, and some approaches for dealing with zonal and regional outages.

This section helps you develop a framework for applying the principle of composition to your applications based on your desired reliability outcomes.

Customer applications in Google Cloud that target disaster recovery objectives such as RTO and RPO must be architected so that business-critical operations subject to RTO/RPO depend only on data plane components, which are responsible for the continuous processing of operations for the service. In other words, such business-critical operations must not depend on management plane operations, which manage configuration state and push configuration to the control plane and the data plane.

For example, Google Cloud customers who intend to achieve RTO for business-critical operations should not depend on a VM-creation API or on the update of an IAM permission.

Step 1: Gather existing requirements

The first step is to define the availability requirements for your applications. Most companies already have some level of design guidance in this space, which may be internally developed or derived from regulations or other legal requirements. This design guidance is normally codified in two key metrics: Recovery Time Objective (RTO) and Recovery Point Objective (RPO). In business terms, RTO answers "How long after a disaster until I'm up and running?" and RPO answers "How much data can I afford to lose in the event of a disaster?"

Historically, enterprises have defined RTO and RPO requirements for a wide range of disaster events, from component failures to earthquakes. This made sense in the on-premises world, where planners had to map the RTO/RPO requirements through the entire software and hardware stack. In the cloud, you no longer need to define your requirements in such detail because the provider takes care of that. Instead, you can define your RTO and RPO requirements in terms of the scope of loss (entire zones or regions) without being specific about the underlying reasons. For Google Cloud, this simplifies your requirements gathering to three scenarios: a zonal outage, a regional outage, or the extremely unlikely outage of multiple regions.

Recognizing that not every application has equal criticality, most customers categorize their applications into criticality tiers against which a specific RTO/RPO requirement can be applied. When taken together, RTO/RPO and application criticality streamline the process of architecting a given application by answering:

- Does the application need to run in multiple zones in the same region, or in multiple zones in multiple regions?

- On which Google Cloud products can the application depend?

This is an example of the output of the requirements gathering exercise:

RTO and RPO by Application Criticality for Example Organization Co:

| Application criticality | % of apps | Example apps | Zone outage | Region outage |
|---|---|---|---|---|
| Tier 1 (most important) | 5% | Typically global or external customer-facing applications, such as real-time payments and eCommerce storefronts. | RTO zero, RPO zero | RTO zero, RPO zero |
| Tier 2 | 35% | Typically regional applications or important internal applications, such as CRM or ERP. | RTO 15 minutes, RPO 15 minutes | RTO 1 hour, RPO 1 hour |
| Tier 3 (least important) | 60% | Typically team or departmental applications, such as back office, leave booking, internal travel, accounting, and HR. | RTO 1 hour, RPO 1 hour | RTO 12 hours, RPO 12 hours |
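Requirements like these are easier to apply consistently when they are also captured as data. The following Python sketch encodes the example table. It then checks whether a design's achievable RTO and RPO for a given outage scope fit a tier. The dictionary layout, the tier keys, and the `meets` function are illustrative assumptions, not a prescribed format.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class Objective:
    rto: timedelta
    rpo: timedelta


# Values copied from the example table for Example Organization Co.
REQUIREMENTS = {
    "tier1": {"zone": Objective(timedelta(0), timedelta(0)),
              "region": Objective(timedelta(0), timedelta(0))},
    "tier2": {"zone": Objective(timedelta(minutes=15), timedelta(minutes=15)),
              "region": Objective(timedelta(hours=1), timedelta(hours=1))},
    "tier3": {"zone": Objective(timedelta(hours=1), timedelta(hours=1)),
              "region": Objective(timedelta(hours=12), timedelta(hours=12))},
}


def meets(tier: str, scope: str, achieved: Objective) -> bool:
    """True if a design's achievable RTO and RPO are within the tier's limits."""
    required = REQUIREMENTS[tier][scope]
    return achieved.rto <= required.rto and achieved.rpo <= required.rpo


# A hypothetical design that recovers from a zone outage in 10 minutes with no data loss.
design = Objective(rto=timedelta(minutes=10), rpo=timedelta(0))
print(meets("tier2", "zone", design))  # True
print(meets("tier1", "zone", design))  # False
```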

Step 2: Capability mapping to available products

The second step is to understand the resilience capabilities of Google Cloud products that your applications will be using. Most companies review the relevant product information and then add guidance on how to modify their architectures to accommodate any gaps between the product capabilities and their resilience requirements. This section covers some common areas and recommendations around data and application limitations in this space.

As mentioned previously, Google's DR-enabled products broadly cater to two types of outage scopes: regional and zonal. For DR purposes, plan for partial outages the same way as for full outages. This gives an initial high-level matrix of which products are suitable for each scenario by default:

Google Cloud Product General Capabilities

(see Appendix for specific product capabilities)

| Outage scope | All Google Cloud products | Regional Google Cloud products with automatic replication across zones | Multi-regional or global Google Cloud products with automatic replication across regions |
|---|---|---|---|
| Failure of a component within a zone | Covered* | Covered | Covered |
| Zone outage | Not covered | Covered | Covered |
| Region outage | Not covered | Not covered | Covered |

* All Google Cloud products are resilient to component failure, except in specific cases noted in product documentation. These are typically scenarios where the product offers direct access or static mapping to a piece of specialty hardware such as memory or solid-state drives (SSDs).
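The default coverage in this matrix can be expressed as an ordering of outage scopes, as in the following Python sketch. It treats zonal products as the "All Google Cloud products" column and ignores the asterisked exceptions. All names are hypothetical.

```python
from enum import IntEnum


class Scope(IntEnum):
    """Outage scopes ordered from smallest to largest blast radius."""
    COMPONENT = 1
    ZONE = 2
    REGION = 3


# Largest outage scope each deployment type survives by default.
DEFAULT_COVERAGE = {
    "zonal": Scope.COMPONENT,
    "regional": Scope.ZONE,
    "multi-regional": Scope.REGION,
}


def covered(deployment: str, outage: Scope) -> bool:
    """True if the deployment type is resilient to this outage scope by default."""
    return outage <= DEFAULT_COVERAGE[deployment]


for deployment in DEFAULT_COVERAGE:
    row = {scope.name: covered(deployment, scope) for scope in Scope}
    print(deployment, row)
```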

How RPO limits product choices

In most cloud deployments, data integrity is the most architecturally significant aspect to be considered for a service. At least some applications have an RPO requirement of zero, meaning there should be no data loss in the event of an outage. This typically requires data to be synchronously replicated to another zone or region. Synchronous replication has cost and latency tradeoffs, so while many Google Cloud products provide synchronous replication across zones, only a few provide it across regions. This cost and complexity tradeoff means that it's not unusual for different types of data within an application to have different RPO values.

For data with an RPO greater than zero, applications can take advantage of asynchronous replication. Asynchronous replication is acceptable when lost data can either be recreated easily, or can be recovered from a golden source of data if needed. It can also be a reasonable choice when a small amount of data loss is an acceptable tradeoff in the context of zonal and regional expected outage durations. It is also relevant that during a transient outage, data written to the affected location but not yet replicated to another location generally becomes available after the outage is resolved. This means that the risk of permanent data loss is lower than the risk of losing data access during an outage.
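With asynchronous replication, the effective RPO is bounded by how far the replica lags behind the primary. The following minimal Python sketch assumes you already collect replication-lag measurements from your own monitoring. The sample lags and the write rate are hypothetical. The sketch estimates the worst-case RPO. It also estimates how many writes would be unavailable, and possibly lost, if the primary location failed at that moment.

```python
def worst_case_rpo_seconds(lag_samples_s: list[float]) -> float:
    """The largest observed replication lag bounds the effective RPO."""
    if not lag_samples_s:
        raise ValueError("need at least one lag measurement")
    return max(lag_samples_s)


def writes_at_risk(write_rate_per_s: float, lag_s: float) -> float:
    """Writes not yet replicated when the primary location fails."""
    return write_rate_per_s * lag_s


lags = [2.0, 5.0, 30.0]  # hypothetical lag samples in seconds
rpo = worst_case_rpo_seconds(lags)
print(f"worst-case RPO: {rpo:.0f} s, writes at risk: {writes_at_risk(200, rpo):.0f}")
```

As the paragraph above notes, writes that were not yet replicated typically become available again once a transient outage ends. The estimate therefore describes data that is inaccessible during the outage, which is usually more than what is permanently lost.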

Key actions: Establish whether you definitely need RPO zero, and if so, whether you can limit it to a subset of your data; doing so dramatically increases the range of DR-enabled services available to you. In Google Cloud, achieving RPO zero means using predominantly regional products for your application, which by default are resilient to zone-scale, but not region-scale, outages.

How RTO limits product choices

One of the primary benefits of cloud computing is the ability to deploy infrastructure on demand; however, this isn't the same as instantaneous deployment. The RTO value for your application needs to accommodate the combined RTO of the Google Cloud products your application utilizes and any actions your engineers or SREs must take to restart your VMs or application components. An RTO measured in minutes means designing an application which recovers automatically from a disaster without human intervention, or with minimal steps such as pushing a button to failover. The cost and complexity of this kind of system historically has been very high, but Google Cloud products like load balancers and instance groups make this design both much more affordable and simpler. Therefore, you should consider automated failover and recovery for most applications. Be aware that designing a system for this kind of hot failover across regions is both complicated and expensive; only a very small fraction of critical services warrant this capability.

Most applications will have an RTO of between an hour and a day, which allows for a warm failover in a disaster scenario. In a warm failover, some components of the application, such as databases, run all the time in standby mode. Others, such as web servers, are scaled out only in the event of an actual disaster. For these applications, you should strongly consider automating the scale-out events. Services with an RTO over a day are the lowest criticality and can often be recovered from a backup or recreated from scratch.
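The following Python sketch turns this guidance into arithmetic. It adds the products' own recovery time to the manual steps your team performs, assuming those steps run one after another. It also maps a required RTO onto the hot, warm, and cold patterns described above. The thresholds mirror the text's "minutes", "between an hour and a day", and "over a day" bands. The step durations and the 30-minute requirement are hypothetical.

```python
def combined_rto_minutes(product_rto_min: float, manual_steps_min: list[float]) -> float:
    """Product recovery time plus every human step, performed sequentially."""
    return product_rto_min + sum(manual_steps_min)


def failover_pattern(required_rto_min: float) -> str:
    """Rough mapping from a required RTO to the failover pattern it calls for."""
    if required_rto_min < 60:
        return "hot: automated failover, no human in the loop"
    if required_rto_min <= 24 * 60:
        return "warm: standby data tier, automated scale-out of stateless tiers"
    return "cold: restore from backup or recreate"


required = 30  # hypothetical RTO requirement in minutes
# Hypothetical: 10 minutes of product recovery, 15 minutes to page the on-call
# engineer, and 45 minutes for them to scale out and verify.
achieved = combined_rto_minutes(10, [15, 45])
print(failover_pattern(required))
print(achieved, achieved <= required)  # the manual steps alone break the budget
```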

Key actions: Establish whether you definitely need an RTO of (near) zero for regional failover, and if so whether you can do this for a subset of your services. This changes the cost of running and maintaining your service.

Step 3: Develop your own reference architectures and guides

The final recommended step is building your own company-specific architecture patterns to help your teams standardize their approach to disaster recovery. Most Google Cloud customers produce a guide for their development teams that matches their individual business resilience expectations to the two major categories of outage scenarios on Google Cloud. This allows teams to easily categorize which DR-enabled products are suitable for each criticality level.

Create product guidelines

Looking again at the example RTO/RPO table above, the following hypothetical guide lists which products would be allowed by default for each criticality tier. Note that where certain products have been identified as not suitable by default, you can always add your own replication and failover mechanisms to enable cross-zone or cross-region synchronization, but this exercise is beyond the scope of this article. The tables also link to more information about each product to help you understand their capabilities with respect to managing zone or region outages.

Sample Architecture Patterns for Example Organization Co: Zone Outage Resilience

Does the product meet zonal outage requirements for Example Organization (with appropriate product configuration)?

| Google Cloud product | Tier 1 | Tier 2 | Tier 3 |
|---|---|---|---|
| Compute Engine | No | No | No |
| Dataflow | No | No | No |
| BigQuery | No | No | Yes |
| GKE | Yes | Yes | Yes |
| Cloud Storage | Yes | Yes | Yes |
| Cloud SQL | No | Yes | Yes |
| Spanner | Yes | Yes | Yes |
| Cloud Load Balancing | Yes | Yes | Yes |

This table is an example only based on hypothetical tiers shown above.

Sample Architecture Patterns for Example Organization Co: Region Outage Resilience

Does the product meet region outage requirements for Example Organization (with appropriate product configuration)?

| Google Cloud product | Tier 1 | Tier 2 | Tier 3 |
|---|---|---|---|
| Compute Engine | Yes | Yes | Yes |
| Dataflow | No | No | No |
| BigQuery | No | No | Yes |
| GKE | Yes | Yes | Yes |
| Cloud Storage | No | No | No |
| Cloud SQL | No | Yes | Yes |
| Spanner | Yes | Yes | Yes |
| Cloud Load Balancing | Yes | Yes | Yes |

This table is an example only based on hypothetical tiers shown above.
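Encoding a guide like this as data lets design reviews flag gaps automatically. The following Python sketch copies the two sample tables. It lists the products in a proposed design that would need your own replication and failover mechanisms for a given tier. The function name and the data layout are hypothetical.

```python
# (Tier 1, Tier 2, Tier 3) columns from the two sample tables.
ZONE_OUTAGE = {
    "Compute Engine": (False, False, False),
    "Dataflow": (False, False, False),
    "BigQuery": (False, False, True),
    "GKE": (True, True, True),
    "Cloud Storage": (True, True, True),
    "Cloud SQL": (False, True, True),
    "Spanner": (True, True, True),
    "Cloud Load Balancing": (True, True, True),
}
REGION_OUTAGE = {
    "Compute Engine": (True, True, True),
    "Dataflow": (False, False, False),
    "BigQuery": (False, False, True),
    "GKE": (True, True, True),
    "Cloud Storage": (False, False, False),
    "Cloud SQL": (False, True, True),
    "Spanner": (True, True, True),
    "Cloud Load Balancing": (True, True, True),
}


def needs_custom_dr(products: list[str], tier: int) -> list[str]:
    """Products not allowed by default for `tier` under a zone or region outage."""
    if tier not in (1, 2, 3):
        raise ValueError("tier must be 1, 2, or 3")
    i = tier - 1
    return [p for p in products if not (ZONE_OUTAGE[p][i] and REGION_OUTAGE[p][i])]


print(needs_custom_dr(["GKE", "Cloud SQL", "BigQuery"], tier=2))  # ['BigQuery']
```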

To show how these products would be used, the following sections walk through some reference architectures for each of the hypothetical application criticality levels. These are deliberately high level descriptions to illustrate the key architectural decisions, and aren't representative of a complete solution design.

Example tier 3 architecture

| Application criticality | Zone outage | Region outage |
|---|---|---|
| Tier 3 (least important) | RTO 12 hours, RPO 24 hours | RTO 28 days, RPO 24 hours |

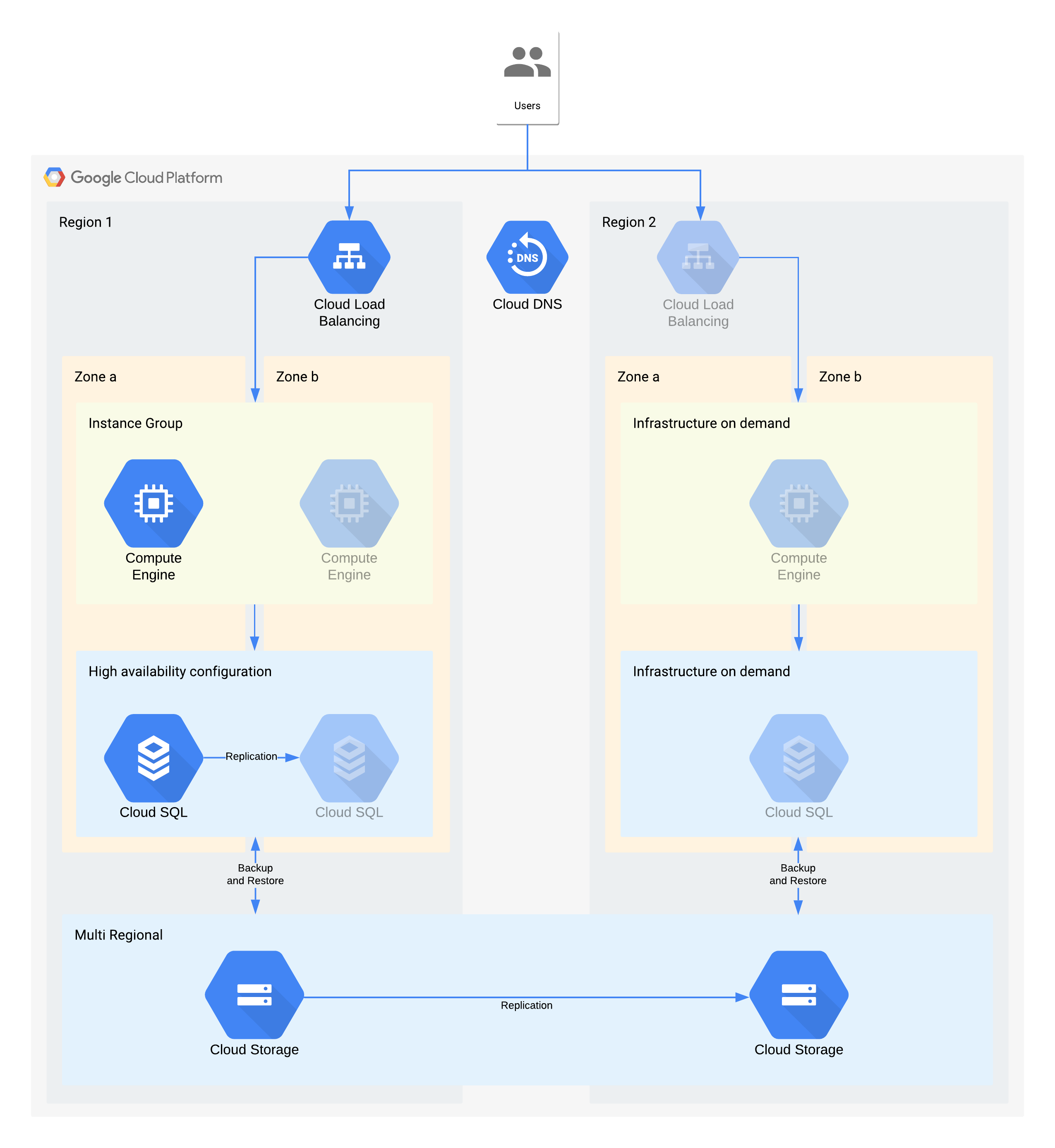

(Greyed-out icons indicate infrastructure to be enabled for recovery)

This architecture describes a traditional client/server application: internal users connect to an application running on a compute instance which is backed by a database for persistent storage.

Note that this architecture supports better RTO and RPO values than required. Beyond meeting the targets, you should also consider eliminating manual steps that could prove costly or unreliable. For example, recovering a database from a nightly backup could support the RPO of 24 hours. However, this would usually need a skilled individual such as a database administrator, who might be unavailable, especially if multiple services were impacted at the same time. With Google Cloud's on-demand infrastructure, you can build this capability without a major cost tradeoff. For that reason, this architecture uses Cloud SQL HA rather than a manual backup and restore for zonal outages.

Key architectural decisions for zone outage - RTO of 12 hours and RPO of 24 hours:

- An internal load balancer is used to provide a scalable access point for users, which allows for automatic failover to another zone. Even though the RTO is 12 hours, manual changes to IP addresses or even DNS updates can take longer than expected.

- A regional managed instance group is configured with multiple zones but minimal resources. This optimizes for cost but still allows for virtual machines to be quickly scaled out in the backup zone.

- A high availability Cloud SQL configuration provides for automatic failover to another zone. Databases are significantly harder to recreate and restore compared to the Compute Engine virtual machines.

Key architectural decisions for region outage - RTO of 28 days and RPO of 24 hours:

- A load balancer would be constructed in region 2 only in the event of a regional outage. Cloud DNS is used to provide an orchestrated but manual regional failover capability, since the infrastructure in region 2 would only be made available in the event of a region outage.

- A new managed instance group would be constructed only in the event of a region outage. This optimizes for cost and is unlikely to be invoked given the short length of most regional outages. Note that for simplicity the diagram doesn't show the tooling needed to redeploy, or the copying of the required Compute Engine images.

- A new Cloud SQL instance would be created and the data restored from a backup. Again, the risk of an extended regional outage is extremely low, so this is another cost optimization tradeoff.

- Multi-regional Cloud Storage is used to store these backups. This provides automatic zone and regional resilience within the RTO and RPO.
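Simple arithmetic can check whether a backup schedule supports an RPO. The following Python sketch assumes each backup captures the database as of the moment the backup starts. In the worst case, the outage strikes just before the next backup completes, so the loss window is the backup interval plus the backup's duration. The 30-minute backup duration is hypothetical.

```python
from datetime import timedelta


def worst_case_loss(interval: timedelta, backup_duration: timedelta) -> timedelta:
    """Data written since the start of the last completed backup is lost.

    Worst case: the outage hits just before the next backup completes.
    """
    return interval + backup_duration


def meets_rpo(interval: timedelta, backup_duration: timedelta, rpo: timedelta) -> bool:
    return worst_case_loss(interval, backup_duration) <= rpo


rpo = timedelta(hours=24)
nightly = meets_rpo(timedelta(hours=24), timedelta(minutes=30), rpo)
twice_daily = meets_rpo(timedelta(hours=12), timedelta(minutes=30), rpo)
print(nightly, twice_daily)  # False True
```

Under these assumptions, the worst case for a strictly nightly schedule slightly exceeds 24 hours. Confirm how your backups are timed relative to the RPO before relying on them.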

Example tier 2 architecture

| Application criticality | Zone outage | Region outage |
|---|---|---|
| Tier 2 | RTO 4 hours, RPO zero | RTO 24 hours, RPO 4 hours |

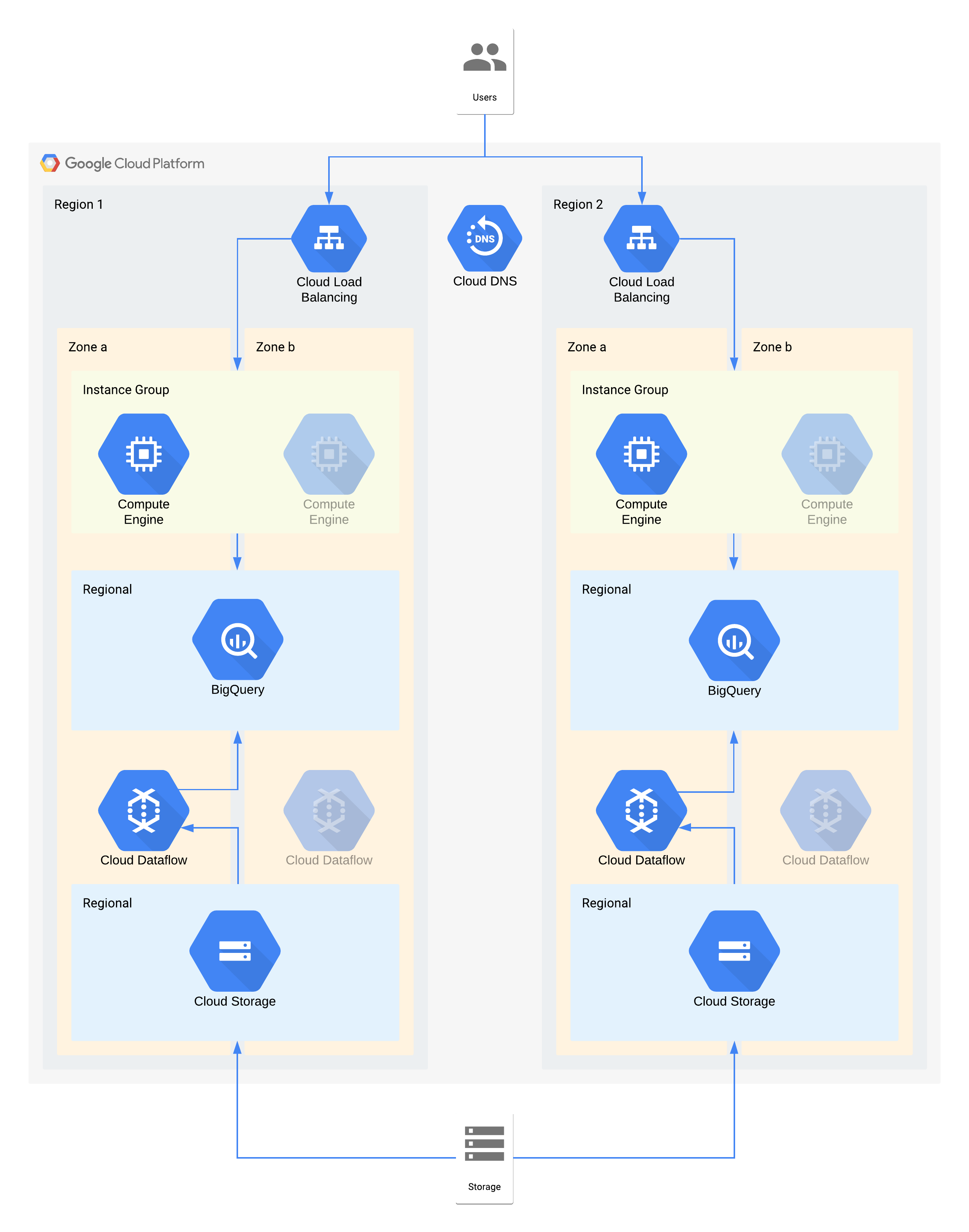

This architecture describes a data warehouse with internal users connecting to a compute instance visualization layer, and a data ingest and transformation layer which populates the backend data warehouse.

Some individual components of this architecture do not directly support the RPO required for their tier. However, because of how they are used together, the overall service does meet the RPO. In this case, because Dataflow is a zonal product, follow the recommendations for high availability design to help prevent data loss during an outage. Even if data is lost, the Cloud Storage layer is the golden source of this data and supports an RPO of zero. As a result, you can re-ingest any lost data into BigQuery by using zone b in the event of an outage in zone a.

Key architectural decisions for zone outage - RTO of 4 hours and RPO of zero:

- A load balancer is used to provide a scalable access point for users, which allows for automatic failover to another zone. Even though the RTO is 4 hours, manual changes to IP addresses or even DNS updates can take longer than expected.

- A regional managed instance group for the data visualization compute layer is configured with multiple zones but minimal resources. This optimizes for cost but still allows for virtual machines to be quickly scaled out.

- Regional Cloud Storage is used as a staging layer for the initial ingest of data, providing automatic zone resilience.

- Dataflow is used to extract data from Cloud Storage and transform it before loading it into BigQuery. In the event of a zone outage, this is a stateless process that can be restarted in another zone.

- BigQuery provides the data warehouse backend for the data visualization front end. In the event of a zone outage, any data lost would be re-ingested from Cloud Storage.

Key architectural decisions for region outage - RTO of 24 hours and RPO of 4 hours:

- A load balancer in each region is used to provide a scalable access point for users. Cloud DNS is used to provide an orchestrated but manual regional failover capability, since the infrastructure in region 2 would only be made available in the event of a region outage.

- A regional managed instance group for the data visualization compute layer is configured with multiple zones but minimal resources. This isn't accessible until the load balancer is reconfigured but doesn't require manual intervention otherwise.

- Regional Cloud Storage is used as a staging layer for the initial ingest of data. Data is loaded into both regions simultaneously to meet the RPO requirement.

- Dataflow is used to extract data from Cloud Storage and transform it before loading it into BigQuery. In the event of a region outage this would populate BigQuery with the latest data from Cloud Storage.

- BigQuery provides the data warehouse backend. Under normal operations, it is refreshed periodically. In the event of a region outage, the latest data would be re-ingested through Dataflow from Cloud Storage.
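Re-ingesting after an outage depends on knowing which staged files the warehouse has not yet loaded. The following Python sketch assumes the pipeline records a watermark: the creation time of the last file confirmed as loaded into BigQuery. It selects newer files from a listing of the staging bucket, in arrival order. `StagedObject`, the file names, the dates, and the watermark mechanism are all hypothetical. The sketch does not call Cloud Storage or Dataflow APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class StagedObject:
    name: str
    created: datetime  # when the file landed in the staging bucket


def objects_to_reingest(staged: list[StagedObject], watermark: datetime) -> list[str]:
    """Staged files newer than the last confirmed load, oldest first."""
    pending = [o for o in staged if o.created > watermark]
    return [o.name for o in sorted(pending, key=lambda o: o.created)]


def at(hour: int) -> datetime:
    return datetime(2024, 1, 1, hour, tzinfo=timezone.utc)


staged = [
    StagedObject("batch-03.csv", at(3)),
    StagedObject("batch-01.csv", at(1)),
    StagedObject("batch-02.csv", at(2)),
]
watermark = at(1)  # batch-01 is the last file confirmed in the warehouse
print(objects_to_reingest(staged, watermark))  # ['batch-02.csv', 'batch-03.csv']
```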

Example tier 1 architecture

| Application criticality | Zone outage | Region outage |
|---|---|---|
| Tier 1 (most important) | RTO zero, RPO zero | RTO 4 hours, RPO 1 hour |

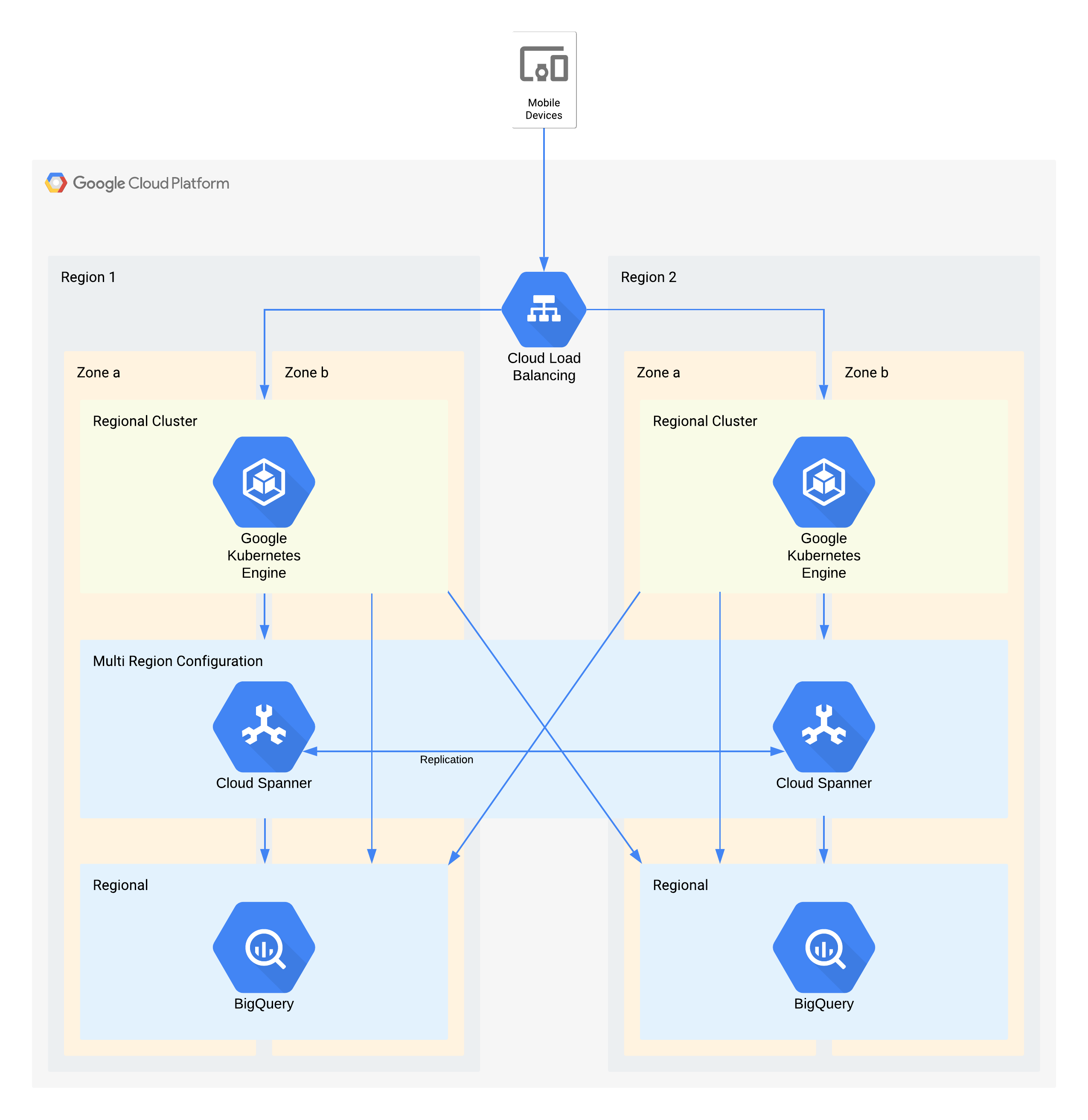

This architecture describes a mobile app backend infrastructure with external users connecting to a set of microservices running in GKE. Spanner provides the backend data storage layer for real time data, and historical data is streamed to a BigQuery data lake in each region.

Again, some individual components of this architecture do not directly support the RPO required for their tier, but because of how they are used together the overall service does. In this case BigQuery is being used for analytic queries. Each region is fed simultaneously from Spanner.

Key architectural decisions for zone outage - RTO of zero and RPO of zero:

- A load balancer is used to provide a scalable access point for users, which allows for automatic failover to another zone.

- A regional GKE cluster, configured with multiple zones, is used for the application layer. This accomplishes the RTO of zero within each region.

- Multi-region Spanner is used as a data persistence layer, providing automatic zone data resilience and transaction consistency.

- BigQuery provides the analytics capability for the application. Each region is independently fed data from Spanner, and independently accessed by the application.

Key architectural decisions for region outage - RTO of 4 hours and RPO of 1 hour:

- A load balancer is used to provide a scalable access point for users, which allows for automatic failover to another region.

- A regional GKE cluster, configured with multiple zones, is used for the application layer. In the event of a region outage, the cluster in the alternate region automatically scales to take on the additional processing load.

- Multi-region Spanner is used as a data persistence layer, providing automatic regional data resilience and transaction consistency. This is the key component in achieving the cross-region RPO of 1 hour.

- BigQuery provides the analytics capability for the application. Each region is independently fed data from Spanner, and independently accessed by the application. This architecture compensates for the BigQuery component's lack of protection against a region outage, allowing it to match the overall application requirements.

Appendix: Product reference

This section describes the architecture and DR capabilities of Google Cloud products that are most commonly used in customer applications and that can be easily leveraged to achieve your DR requirements.

Common themes

Many Google Cloud products offer regional or multi-regional configurations. Regional products are resilient to zone outages, and multi-region and global products are resilient to region outages. In general, this means that during an outage, your application experiences minimal disruption. Google achieves these outcomes through a few common architectural approaches, which mirror the architectural guidance above.

- Redundant deployment: The application backends and data storage are deployed across multiple zones within a region and multiple regions within a multi-region location.

- Data replication: Products use either synchronous or asynchronous replication across the redundant locations.

Synchronous replication means that when your application makes an API call to create or modify data stored by the product, it receives a successful response only after the product has written the data to multiple locations. Synchronous replication ensures that you don't lose access to any of your data during a Google Cloud infrastructure outage, because all of your data remains available in at least one of the remaining backend locations.

Although this technique provides maximum data protection, it can have tradeoffs in terms of latency and performance. Multi-region products that use synchronous replication experience this tradeoff most significantly, typically adding latency on the order of tens or hundreds of milliseconds.

Asynchronous replication means that when your application makes an API call to create or modify data stored by the product, it receives a successful response once the product has written the data to a single location. After the write is acknowledged, the product replicates your data to additional locations.

This technique provides lower latency and higher throughput at the API than synchronous replication, but at the expense of data protection. If the location in which you have written data suffers an outage before replication is complete, you lose access to that data until the location outage is resolved.

- Handling outages with load balancing: Google Cloud uses software load balancing to route requests to the appropriate application backends. Compared to other approaches like DNS load balancing, this approach reduces the system response time to an outage. When a Google Cloud location outage occurs, the load balancer quickly detects that the backend deployed in that location has become "unhealthy" and directs all requests to a backend in an alternate location. This enables the product to continue serving your application's requests during a location outage. When the location outage is resolved, the load balancer detects the availability of the product backends in that location, and resumes sending traffic there.
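To make the difference between synchronous and asynchronous replication concrete, here is a toy Python model. It isn't how any Google Cloud product is implemented: `ToyReplicatedStore` and the location names are hypothetical. The model only tracks when a write is acknowledged relative to when it reaches the other locations, and which data stays readable if the first location fails.

```python
from dataclasses import dataclass, field


@dataclass
class ToyReplicatedStore:
    """Toy model: the first location takes every write; the others are replicas."""
    locations: list[str]
    synchronous: bool
    data: dict[str, dict[str, str]] = field(default_factory=dict)
    pending: list[tuple[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        for loc in self.locations:
            self.data.setdefault(loc, {})

    def write(self, key: str, value: str) -> str:
        self.data[self.locations[0]][key] = value
        if self.synchronous:
            # Synchronous: copy to every replica before acknowledging.
            for loc in self.locations[1:]:
                self.data[loc][key] = value
        else:
            # Asynchronous: acknowledge now, copy later in replicate().
            self.pending.append((key, value))
        return "ok"  # the acknowledgement your application receives

    def replicate(self) -> None:
        for key, value in self.pending:
            for loc in self.locations[1:]:
                self.data[loc][key] = value
        self.pending.clear()

    def readable_during_outage(self, failed_location: str) -> set[str]:
        """Keys that are still readable from the locations that didn't fail."""
        keys: set[str] = set()
        for loc in self.locations:
            if loc != failed_location:
                keys.update(self.data[loc])
        return keys


sync_store = ToyReplicatedStore(["zone-a", "zone-b"], synchronous=True)
sync_store.write("order-1", "paid")
print(sync_store.readable_during_outage("zone-a"))   # {'order-1'}

async_store = ToyReplicatedStore(["zone-a", "zone-b"], synchronous=False)
async_store.write("order-1", "paid")
print(async_store.readable_during_outage("zone-a"))  # set(): not replicated yet
```

In the asynchronous case, the write was acknowledged but is unreachable until `zone-a` recovers, which is the data-protection cost described above. Calling `replicate()` before the outage closes that gap.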

Access Context Manager

Access Context Manager lets enterprises configure access levels that map to a policy that's defined on request attributes. Policies are mirrored regionally.

In the case of a zonal outage, requests to unavailable zones are automatically and transparently served from other available zones in the region.

In the case of a regional outage, policy calculations from the affected region are unavailable until the region becomes available again.

Access Transparency

Access Transparency lets Google Cloud organization administrators define fine-grained, attribute-based access control for projects and resources in Google Cloud. Occasionally, Google must access customer data for administrative purposes. When we access customer data, Access Transparency provides access logs to affected Google Cloud customers. These Access Transparency logs help ensure Google's commitment to data security and transparency in data handling.

Access Transparency is resilient against zonal and regional outages. If a zonal or regional outage happens, Access Transparency continues to process administrative access logs in another zone or region.

AlloyDB for PostgreSQL

AlloyDB for PostgreSQL is a fully managed, PostgreSQL-compatible database service. AlloyDB for PostgreSQL offers high availability in a region through its primary instance's redundant nodes that are located in two different zones of the region. The primary instance maintains regional availability by triggering an automatic failover to the standby zone if the active zone encounters an issue. Regional storage guarantees data durability in the event of a single-zone loss.

For disaster recovery across regions, AlloyDB for PostgreSQL offers cross-region replication, which asynchronously replicates your primary cluster's data into secondary clusters that are located in separate Google Cloud regions.

Zonal outage: During normal operation, only one of the two nodes of a high-availability primary instance is active, and it serves all data writes. This active node stores the data in the cluster's separate, regional storage layer.

AlloyDB for PostgreSQL automatically detects zone-level failures and triggers a failover to restore database availability. During failover, AlloyDB for PostgreSQL starts the database on the standby node, which is already provisioned in a different zone. New database connections automatically get routed to this zone.

From the perspective of a client application, a zonal outage resembles a temporary interruption of network connectivity. After the failover completes, a client can reconnect to the instance at the same address, using the same credentials, with no loss of data.

Regional Outage: Cross-region replication uses asynchronous replication, which allows the primary instance to commit transactions before they are committed on replicas. The time difference between when a transaction is committed on the primary instance and when it is committed on the replica is known as replication lag. The time difference between when the primary generates the write-ahead log (WAL) and when the WAL reaches the replica is known as flush lag. Replication lag and flush lag depend on database instance configuration and on the user-generated workload.

In the event of a regional outage, you can promote a secondary cluster in a different region to a writable, standalone primary cluster. The promoted cluster no longer replicates data from the original primary cluster that it was formerly associated with. Because of flush lag, some data loss might occur: transactions committed on the original primary might not have propagated to the secondary cluster.

Cross-region replication RPO is affected by both the CPU utilization of the primary cluster, and physical distance between the primary cluster's region and the secondary cluster's region. To optimize RPO, we recommend testing your workload with a configuration that includes a replica to establish a safe transactions per second (TPS) limit, which is the highest sustained TPS that doesn't accumulate flush lag. If your workload exceeds the safe TPS limit, flush lag accumulates, which can affect RPO. To limit network lag, pick region pairs within the same continent.
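The relationship between a safe TPS limit and accumulating flush lag can be sketched with a simple queueing model. This is an illustration under a loud assumption: the secondary cluster is treated as if it can absorb at most `safe_tps` transactions per second, and anything above that accumulates as backlog. The function names are hypothetical, and the model ignores network distance and instance configuration, which also affect RPO.

```python
def flush_backlog(tps_per_second: list[float], safe_tps: float) -> list[float]:
    """Return the backlog of unflushed transactions after each one-second interval.

    Simplifying assumption: the replica keeps up with at most `safe_tps`
    transactions per second; any excess queues up and drains later.
    """
    backlog = 0.0
    history = []
    for tps in tps_per_second:
        backlog = max(0.0, backlog + tps - safe_tps)
        history.append(backlog)
    return history


def approx_lag_seconds(backlog: float, safe_tps: float) -> float:
    """Rough time to apply the backlog at the safe rate (a proxy for RPO exposure)."""
    return backlog / safe_tps


# A short burst above a measured safe limit of 1,000 TPS (hypothetical numbers).
history = flush_backlog([800, 1200, 1500, 900, 700], safe_tps=1000)
print(history)                                 # [0.0, 200.0, 700.0, 600.0, 300.0]
print(approx_lag_seconds(max(history), 1000))  # 0.7
```

A workload that stays at or below the safe limit never builds a backlog, while even a brief burst above it leaves transactions that would be lost if the primary region failed at that moment.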

For more information about monitoring network lag and other AlloyDB for PostgreSQL metrics, see Monitor instances.

Anti Money Laundering AI

Anti Money Laundering AI (AML AI) provides an API to help global financial institutions more effectively and efficiently detect money laundering. Anti Money Laundering AI is a regional offering, meaning customers can choose the region, but not the zones that make up a region. Data and traffic are automatically load balanced across zones within a region. The operations (for example, to create a pipeline or run a prediction) are automatically scaled in the background and are load balanced across zones as necessary.

Zonal outage: AML AI stores data for its resources regionally, replicated in a synchronous manner. When a long-running operation finishes successfully, the resources can be relied on regardless of zonal failures. Processing is also replicated across zones, but this replication aims at load balancing and not high availability, so a zonal failure during an operation can result in an operation failure. If that happens, retrying the operation can address the issue. During a zonal outage, processing times might be affected.

Regional outage: Customers choose the Google Cloud region they want to create their AML AI resources in. Data is never replicated across regions. AML AI never routes customer traffic to a different region. In the case of a regional failure, AML AI becomes available again as soon as the outage is resolved.

API keys

API keys provides scalable API key resource management for a project. API keys is a global service, meaning that keys are visible and accessible from any Google Cloud location. Its data and metadata are stored redundantly across multiple zones and regions.

API keys is resilient to both zonal and regional outages. In the case of zonal outage or regional outage, API keys continues to serve requests from another zone in the same or different region.

For more information about API keys, see API keys API overview.

Apigee

Apigee provides a secure, scalable, and reliable platform for developing and managing APIs. Apigee offers both single-region and multi-region deployments.

Zonal outage: Customer runtime data is replicated across multiple availability zones. Therefore, a single-zone outage does not impact Apigee.

Regional outage: For single-region Apigee instances, if a region goes down, Apigee instances are unavailable in that region and can't be restored to different regions. For multi-region Apigee instances, data is replicated asynchronously across all of the regions. Therefore, the failure of one region doesn't stop traffic entirely. However, you might not be able to access uncommitted data in the failed region. You can divert traffic away from unhealthy regions. To achieve automatic traffic failover, you can configure network routing using managed instance groups (MIGs).

AutoML Translation

AutoML Translation is a machine translation service that lets you import your own data (sentence pairs) to train custom models for your domain-specific needs.

Zonal outage: AutoML Translation has active compute servers in multiple zones and regions. It also supports synchronous data replication across zones within regions. These features help AutoML Translation achieve instantaneous failover without any data loss for zonal failures, and without requiring any customer input or adjustments.

Regional outage: In the case of a regional failure, AutoML Translation is not available.

AutoML Vision

AutoML Vision is part of Vertex AI. It offers a unified framework to create datasets, import data, train models, and serve models for online prediction and batch prediction.

AutoML Vision is a regional offering. Customers can choose which region they want to launch a job from, but they can't choose the specific zones within that region. The service automatically load-balances workloads across different zones within the region.

Zonal outage: AutoML Vision stores metadata for the jobs regionally, and writes synchronously across zones within the region. The jobs are launched in a specific zone, as selected by Cloud Load Balancing.

AutoML Vision training jobs: A zonal outage causes any running jobs to fail, and the job status updates to failed. If a job fails, retry it immediately. The new job is routed to an available zone.

AutoML Vision batch prediction jobs: Batch prediction is built on top of Vertex AI Batch prediction. When a zonal outage occurs, the service automatically retries the job by routing it to available zones. If multiple retries fail, the job status updates to failed. Subsequent user requests to run the job are routed to an available zone.

Regional outage: Customers choose the Google Cloud region they want to run their jobs in. Data is never replicated across regions. In a regional failure, the AutoML Vision service is unavailable in that region. It becomes available again when the outage resolves. We recommend that customers use multiple regions to run their jobs so that, if a regional outage occurs, they can direct jobs to a different available region.
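Because AutoML Vision doesn't replicate data or route traffic across regions, failing over to another region is the client's responsibility. The following sketch assumes a hypothetical `submit(region)` callable that wraps whatever job-creation call you use and raises the hypothetical `RegionUnavailableError` when a region is down. It also assumes that the datasets your jobs need already exist in each fallback region, because nothing is copied for you.

```python
from typing import Callable


class RegionUnavailableError(Exception):
    """Hypothetical error that your submit function raises when a region is down."""


def submit_with_fallback(submit: Callable[[str], str], regions: list[str]) -> tuple[str, str]:
    """Try each region in priority order; return (region, job_id) from the first success."""
    failures = {}
    for region in regions:
        try:
            return region, submit(region)
        except RegionUnavailableError as exc:
            failures[region] = str(exc)  # record why, then try the next region
    raise RuntimeError(f"no region accepted the job: {failures}")


# Stand-in for a real job-submission call (hypothetical).
def fake_submit(region: str) -> str:
    if region == "us-central1":
        raise RegionUnavailableError("regional outage")
    return f"job-123@{region}"


print(submit_with_fallback(fake_submit, ["us-central1", "europe-west4"]))
# ('europe-west4', 'job-123@europe-west4')
```

Only errors that indicate an unavailable region trigger failover; any other exception propagates immediately, so a bad request isn't silently resubmitted to every region.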

Batch

Batch is a fully managed service to queue, schedule, and execute batch jobs on Google Cloud. Batch settings are defined at the region level. Customers must choose a region to submit their batch jobs, not a zone in a region. When a job is submitted, Batch synchronously writes customer data to multiple zones. However, customers can specify the zones where Batch VMs run jobs.

Zonal Failure: When a single zone fails, the tasks running in that zone also fail. If tasks have retry settings, Batch automatically fails over those tasks to other active zones in the same region. The automatic failover is subject to availability of resources in active zones in the same region. Jobs that require zonal resources (like VMs, GPUs, or zonal persistent disks) that are only available in the failed zone are queued until the failed zone recovers or until the queueing timeouts of the jobs are reached. When possible, we recommend that customers let Batch choose zonal resources to run their jobs. Doing so helps ensure that the jobs are resilient to a zonal outage.
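The zonal behavior described above can be summarized as a small decision function. This is a toy model with hypothetical names, not Batch's scheduler. It ignores resource availability in the remaining zones and queueing timeouts, and only shows why a job pinned to one zone stays queued during that zone's outage, while a job that can use any zone in the region can be retried elsewhere.

```python
def reschedule(task_zone: str, allowed_zones: list[str],
               failed_zones: set[str], has_retry: bool) -> str:
    """Return "running:<zone>", "failed", or "queued" for a task after zone failures.

    Toy model: assumes the remaining zones always have capacity, which the
    real service can't guarantee.
    """
    if task_zone not in failed_zones:
        return f"running:{task_zone}"
    if not has_retry:
        return "failed"  # no retry settings: the task fails along with its zone
    for zone in allowed_zones:
        if zone not in failed_zones:
            return f"running:{zone}"  # retried in another active zone
    return "queued"  # waits for the zone to recover or the queueing timeout


region_zones = ["us-central1-a", "us-central1-b", "us-central1-c"]
down = {"us-central1-a"}
print(reschedule("us-central1-a", region_zones, down, has_retry=True))       # running:us-central1-b
print(reschedule("us-central1-a", ["us-central1-a"], down, has_retry=True))  # queued
print(reschedule("us-central1-a", region_zones, down, has_retry=False))      # failed
```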

Regional Failure: In case of a regional failure, the service control plane is unavailable in the region. The service doesn't replicate data or redirect requests across regions. We recommend that customers use multiple regions to run their jobs and redirect jobs to a different region if a region fails.

Chrome Enterprise Premium threat and data protection

Chrome Enterprise Premium threat and data protection is part of the Chrome Enterprise Premium solution. It extends Chrome with a variety of security features, including malware and phishing protection, Data Loss Prevention (DLP), URL filtering rules and security reporting.

Chrome Enterprise Premium admins can opt in to storing customer content that violates DLP or malware policies in Google Workspace rule log events, in Cloud Storage, or in both, for future investigation. Google Workspace rule log events are powered by a multi-regional Spanner database. Chrome Enterprise Premium can take up to several hours to detect policy violations. During this time, any unprocessed data is subject to data loss from a zonal or regional outage. Once a violation is detected, the contents that violate your policies are written to Google Workspace rule log events, to Cloud Storage, or to both.

Zonal and regional outage: Because Chrome Enterprise Premium threat and data protection is multi-zonal and multi-regional, it can survive a complete, unplanned loss of a zone or a region without a loss in availability. It provides this level of reliability by redirecting traffic to its service in other active zones or regions. However, because it can take Chrome Enterprise Premium threat and data protection several hours to detect DLP and malware violations, any unprocessed data in a specific zone or region is subject to loss from a zonal or regional outage.

BigQuery

BigQuery is a serverless, highly scalable, and cost-effective cloud data warehouse designed for business agility. BigQuery supports the following location types for user datasets:

- A region: a specific geographical location, such as Iowa (us-central1) or Montréal (northamerica-northeast1).

- A multi-region: a large geographic area that contains two or more geographic places, such as the United States (US) or Europe (EU).

In either case, data is stored redundantly in two zones within a single region within the selected location. Data written to BigQuery is synchronously written to both the primary and secondary zones. This protects against unavailability of a single zone within the region, but not against a regional outage.

Binary Authorization

Binary Authorization is a software supply chain security product for GKE and Cloud Run.

All Binary Authorization policies are replicated across multiple zones within every region. Replication helps Binary Authorization policy read operations recover from failures of other regions. Replication also makes read operations tolerant of zonal failures within each region.

Binary Authorization enforcement operations are resilient against zonal outages, but they are not resilient against regional outages. Enforcement operations run in the same region as the GKE cluster or Cloud Run job that's making the request. Therefore, in the event of a regional outage, there is nothing running to make Binary Authorization enforcement requests.

Certificate Manager

Certificate Manager lets you acquire and manage Transport Layer Security (TLS) certificates for use with different types of Cloud Load Balancing.

In the case of a zonal outage, regional and global Certificate Manager are resilient to zonal failures because jobs and databases are redundant across multiple zones within a region. In the case of a regional outage, global Certificate Manager is resilient to regional failures because jobs and databases are redundant across multiple regions. Regional Certificate Manager is a regional product, so it cannot withstand a regional failure.

Cloud Intrusion Detection System

Cloud Intrusion Detection System (Cloud IDS) is a zonal service that provides zonally scoped IDS Endpoints, which process the traffic of VMs in one specific zone, and thus isn't tolerant of zonal or regional outages.

Zonal outage: Cloud IDS is tied to VM instances. If a customer plans to mitigate zonal outages by deploying VMs in multiple zones (manually or via Regional Managed Instance Groups), they will need to deploy Cloud IDS Endpoints in those zones as well.

Regional Outage: Cloud IDS is a regional product. It doesn't provide any cross-regional functionality. A regional failure will take down all Cloud IDS functionality in all zones in that region.

Google Security Operations SIEM

Google Security Operations SIEM (which is part of Google Security Operations) is a fully managed service that helps security teams detect, investigate, and respond to threats.

Google Security Operations SIEM has regional and multi-regional offerings.

In regional offerings, data and traffic are automatically load-balanced across zones within the chosen region, and data is stored redundantly across availability zones within the region.

Multi-regions are geo-redundant. That redundancy provides a broader set of protections than regional storage. It also helps to ensure that the service continues to function even if a full region is lost.

The majority of data ingestion paths replicate customer data synchronously across multiple locations. When data is replicated asynchronously, there is a time window (a recovery point objective, or RPO) during which the data isn't yet replicated across several locations. This is the case when ingesting with feeds in multi-regional deployments. After the RPO, the data is available in multiple locations.

Zonal outage:

Regional deployments: Requests are served from any zone within the region. Data is synchronously replicated across multiple zones. In case of a full-zone outage, the remaining zones continue to serve traffic and continue to process the data. Redundant provisioning and automated scaling for Google Security Operations SIEM helps to ensure that the service remains operational in the remaining zones during these load shifts.

Multi-regional deployments: Zonal outages are equivalent to regional outages.

Regional outage:

Regional deployments: Google Security Operations SIEM stores all customer data within a single region and traffic is never routed across regions. In the event of a regional outage, Google Security Operations SIEM is unavailable in the region until the outage is resolved.

Multi-regional deployments (without feeds): Requests are served from any region of the multi-regional deployment. Data is synchronously replicated across multiple regions. In case of a full-region outage, the remaining regions continue to serve traffic and continue to process the data. Redundant provisioning and automated scaling for Google Security Operations SIEM helps ensure that the service remains operational in the remaining regions during these load shifts.

Multi-regional deployments (with feeds): Requests are served from any region of the multi-regional deployment. Data is replicated asynchronously across multiple regions with the provided RPO. In case of a full-region outage, only data that was ingested before the RPO window is available in the remaining regions. Data ingested within the RPO window might not be replicated.
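To reason about which data is at risk in a multi-regional deployment that uses feeds, you can compare ingestion times against the RPO window. The sketch below uses a hypothetical 15-minute RPO purely for illustration (the actual RPO is provided by the service), and the function name is an assumption.

```python
from datetime import datetime, timedelta


def possibly_unreplicated(ingest_times: list[datetime], outage_start: datetime,
                          rpo: timedelta) -> list[datetime]:
    """Return ingestion times that fall inside the RPO window before the outage.

    Records ingested at or before (outage_start - rpo) are assumed to be
    replicated; later records might exist only in the failed region.
    """
    cutoff = outage_start - rpo
    return [t for t in ingest_times if t > cutoff]


outage = datetime(2024, 6, 1, 12, 0)
rpo = timedelta(minutes=15)  # hypothetical value for illustration only
ingested = [datetime(2024, 6, 1, 11, 30), datetime(2024, 6, 1, 11, 50),
            datetime(2024, 6, 1, 11, 58)]
at_risk = possibly_unreplicated(ingested, outage, rpo)
print([t.strftime("%H:%M") for t in at_risk])  # ['11:50', '11:58']
```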

Cloud Asset Inventory

Cloud Asset Inventory is a high-performance, resilient, global service that maintains a repository of Google Cloud resource and policy metadata. Cloud Asset Inventory provides search and analysis tools that help you track deployed assets across organizations, folders, and projects.

In the case of a zone outage, Cloud Asset Inventory continues to serve requests from another zone in the same or different region.

In the case of a regional outage, Cloud Asset Inventory continues to serve requests from other regions.

Bigtable

Bigtable is a fully managed high performance NoSQL database service for large analytical and operational workloads.

Bigtable replication overview

Bigtable offers a flexible and fully configurable replication feature, which you can use to increase the availability and durability of your data by copying it to clusters in multiple regions or multiple zones within the same region. Bigtable can also provide automatic failover for your requests when you use replication.

When using multi-zonal or multi-regional configurations with multi-cluster routing, in the case of a zonal or regional outage, Bigtable automatically reroutes traffic and serves requests from the nearest available cluster. Because Bigtable replication is asynchronous and eventually consistent, very recent changes to data in the location of the outage might be unavailable if they have not been replicated yet to other locations.
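With multi-cluster routing, Bigtable performs this rerouting on the server side, so application code doesn't need to implement it. The toy function below only illustrates the idea of "the nearest available cluster". The cluster IDs and distances are hypothetical, and this isn't Bigtable's actual routing algorithm.

```python
def pick_cluster(cluster_distances: dict[str, float], unavailable: set[str]) -> str:
    """Return the nearest cluster that isn't affected by an outage.

    cluster_distances maps a cluster ID to a hypothetical network distance
    (for example, round-trip time in milliseconds) from the client.
    """
    candidates = {c: d for c, d in cluster_distances.items() if c not in unavailable}
    if not candidates:
        raise RuntimeError("no available cluster")
    return min(candidates, key=candidates.get)


clusters = {"c-us-central1-a": 5.0, "c-us-central1-b": 6.0, "c-europe-west1-b": 95.0}
print(pick_cluster(clusters, set()))                                   # c-us-central1-a
print(pick_cluster(clusters, {"c-us-central1-a"}))                     # c-us-central1-b
print(pick_cluster(clusters, {"c-us-central1-a", "c-us-central1-b"}))  # c-europe-west1-b
```

Because replication is asynchronous, a read served by the fallback cluster might not yet reflect the latest writes made to the failed cluster.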

Performance considerations

When CPU resource demands exceed available node capacity, Bigtable always prioritizes serving incoming requests ahead of replication traffic.

For more information about how to use Bigtable replication with your workload, see Cloud Bigtable replication overview and examples of replication settings.

Bigtable nodes are used both for serving incoming requests and for performing replication of data from other clusters. In addition to maintaining sufficient node counts per cluster, you must also ensure that your applications use proper schema design to avoid hotspots, which can cause excessive or imbalanced CPU usage and increased replication latency.

For more information about designing your application schema to maximize Bigtable performance and efficiency, see Schema design best practices.

Monitoring

Bigtable provides several ways to visually monitor the replication latency of your instances and clusters using the charts for replication available in the Google Cloud console.

You can also programmatically monitor Bigtable replication metrics using the Cloud Monitoring API.

Certificate Authority Service

Certificate Authority Service (CA Service) lets customers simplify, automate, and customize the deployment, management, and security of private certificate authorities (CAs), and resiliently issue certificates at scale.

Zonal outage: CA Service is resilient to zonal failures because its control plane is redundant across multiple zones within a region. If there is a zonal outage, CA Service continues to serve requests from another zone in the same region without interruption. Because data is replicated synchronously, there is no data loss or corruption.

Regional outage: CA Service is a regional product, so it cannot withstand a regional failure. If you require resilience to regional failures, create issuing CAs in two different regions. Create the primary issuing CA in the region where you need certificates. Create a fallback CA in a different region. Use the fallback when the primary subordinate CA's region has an outage. If needed, both CAs can chain up to the same root CA.
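The primary and fallback pattern can be sketched as two small functions: one picks an issuing CA based on which regions are down, and one checks that a certificate chains to the shared root. This is a toy model with hypothetical CA and region names. `chains_to` only follows issuer names and performs no signature verification, so it illustrates why a client that trusts the shared root accepts certificates from either CA; it isn't a way to validate certificates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyCert:
    subject: str
    issuer: str


def pick_issuing_ca(primary: tuple[str, str], fallback: tuple[str, str],
                    down_regions: set[str]) -> str:
    """primary and fallback are (region, ca_name) pairs; use the fallback if needed."""
    region, name = primary
    if region not in down_regions:
        return name
    region, name = fallback
    if region in down_regions:
        raise RuntimeError("both issuing CA regions are unavailable")
    return name


def chains_to(leaf: ToyCert, cas: dict[str, ToyCert], trusted_root: str) -> bool:
    """Follow issuer names up to the trusted root (names only, no signature checks)."""
    current, seen = leaf, set()
    while current.issuer != trusted_root:
        if current.issuer in seen or current.issuer not in cas:
            return False  # loop or unknown issuer: no path to the root
        seen.add(current.issuer)
        current = cas[current.issuer]
    return True


cas = {
    "issuing-ca-east": ToyCert("issuing-ca-east", "root-ca"),
    "issuing-ca-west": ToyCert("issuing-ca-west", "root-ca"),
}
ca = pick_issuing_ca(("us-east1", "issuing-ca-east"), ("us-west1", "issuing-ca-west"), {"us-east1"})
leaf = ToyCert("service.example.internal", ca)
print(ca, chains_to(leaf, cas, "root-ca"))  # issuing-ca-west True
```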

Cloud Billing

The Cloud Billing API allows developers to manage billing for their Google Cloud projects programmatically. The Cloud Billing API is designed as a global system with updates synchronously written to multiple zones and regions.

Zonal or regional failure: The Cloud Billing API will automatically fail over to another zone or region. Individual requests may fail, but a retry policy should allow subsequent attempts to succeed.
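A retry policy for transient failures is commonly written as exponential backoff with jitter. The following is a generic sketch, not part of the Cloud Billing API: `TransientError` is a hypothetical stand-in for whatever retryable error your client surfaces. If your client library already provides configurable retries, prefer those.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a retryable failure, such as an HTTP 503."""


def call_with_retry(fn: Callable[[], T], max_attempts: int = 5, base_delay: float = 0.5,
                    max_delay: float = 30.0,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(); on TransientError, back off exponentially with full jitter and retry."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # random wait in [0, cap] spreads out retries
    raise AssertionError("unreachable")


calls = {"n": 0}


def flaky_call() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("failover in progress")
    return "ok"


print(call_with_retry(flaky_call, sleep=lambda seconds: None))  # ok
```

Jitter keeps many clients that failed at the same moment from retrying in lockstep against the zone or region that is taking over.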

Cloud Build

Cloud Build is a service that executes your builds on Google Cloud.

Cloud Build is composed of regionally isolated instances that synchronously replicate data across zones within the region. We recommend that you use specific Google Cloud regions instead of the global region, and ensure that the resources your build uses (including log buckets, Artifact Registry repositories, and so on) are aligned with the region that your build runs in.

In the case of a zonal outage, control plane operations are unaffected. However, currently executing builds within the failing zone will be delayed or permanently lost. Newly triggered builds will automatically be distributed to the remaining functioning zones.

In the case of a regional failure, the control plane will be offline, and currently executing builds will be delayed or permanently lost. Triggers, worker pools, and build data are never replicated across regions. We recommend that you prepare triggers and worker pools in multiple regions to make mitigation of an outage easier.

Cloud CDN

Cloud CDN distributes and caches content across many locations on Google's network to reduce serving latency for clients. Cached content is served on a best-effort basis: when a request can't be served by the Cloud CDN cache, the request is forwarded to origin servers, such as backend VMs or Cloud Storage buckets, where the original content is stored.

When a zone or a region fails, caches in the affected locations are unavailable. Inbound requests are routed to available Google edge locations and caches. If these alternate caches can't serve the request, they forward the request to an available origin server. Provided that the server can serve the request with up-to-date data, there is no loss of content. An increased rate of cache misses causes the origin servers to experience higher than normal traffic volumes as the caches are filled. Subsequent requests are served from the caches unaffected by the zone or region outage.
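The cache-miss behavior during an outage can be illustrated with a toy two-edge cache. The edge names and origin content are hypothetical, and real Cloud CDN routing and cache fill are far more involved. The sketch only counts how often requests fall through to the origin.

```python
class ToyCdn:
    """Toy cache hierarchy: edges in preference order, falling back to the origin."""

    def __init__(self, edges: list[str], origin: dict[str, str]) -> None:
        self.caches: dict[str, dict[str, str]] = {edge: {} for edge in edges}
        self.down: set[str] = set()
        self.origin = origin
        self.origin_requests = 0

    def get(self, path: str, edge_preference: list[str]) -> tuple[str, str]:
        for edge in edge_preference:
            if edge in self.down:
                continue  # skip caches in the failed location
            cache = self.caches[edge]
            if path not in cache:
                self.origin_requests += 1  # cache miss: fetch from origin and fill
                cache[path] = self.origin[path]
            return edge, cache[path]
        raise RuntimeError("no edge available")


cdn = ToyCdn(["edge-a", "edge-b"], origin={"/logo.png": "logo-bytes"})
prefs = ["edge-a", "edge-b"]
cdn.get("/logo.png", prefs)   # miss on edge-a: first origin request
cdn.get("/logo.png", prefs)   # hit on edge-a
cdn.down.add("edge-a")        # location outage
cdn.get("/logo.png", prefs)   # miss on edge-b: second origin request
cdn.get("/logo.png", prefs)   # hit on edge-b
print(cdn.origin_requests)    # 2
```

Multiplied across every cached object and every client that preferred the failed edge, this extra origin traffic is why origins should be sized for cache refill after an outage.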

For more information about Cloud CDN and cache behavior, see the Cloud CDN documentation.

Cloud Composer

Cloud Composer is a managed workflow orchestration service that lets you create, schedule, monitor, and manage workflows that span across clouds and on-premises data centers. Cloud Composer environments are built on the Apache Airflow open source project.

Cloud Composer API availability isn't affected by zonal unavailability. During a zonal outage, you retain access to the Cloud Composer API, including the ability to create new Cloud Composer environments.

A Cloud Composer environment has a GKE cluster as a part of its architecture. During a zonal outage, workflows on the cluster might be disrupted:

- In Cloud Composer 1, the environment's cluster is a zonal resource, thus a zonal outage might make the cluster unavailable. Workflows that are executing at the time of the outage might be stopped before completion.

- In Cloud Composer 2, the environment's cluster is a regional resource. However, workflows that are executed on nodes in the zones that are affected by a zonal outage might be stopped before completion.

In both versions of Cloud Composer, a zonal outage might cause partially executed workflows to stop executing, including any external actions that you configured the workflow to perform. Depending on the workflow, this can cause external inconsistencies, for example if the workflow stops in the middle of a multi-step execution that modifies external data stores. Therefore, you should consider the recovery process when you design your Airflow workflow, including how to detect partially executed workflow states and repair any partial data changes.
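One common way to make a multi-step workflow recoverable is to record each completed step in durable storage and skip completed steps on a rerun. The sketch below is a generic Python illustration with hypothetical names, not the Airflow API (Airflow tracks task state itself). The `checkpoints` set stands in for external durable storage that survives the outage.

```python
from typing import Callable


def run_workflow(run_id: str, steps: list[tuple[str, Callable[[], None]]],
                 checkpoints: set[str]) -> list[str]:
    """Run named steps in order, skipping any already recorded in `checkpoints`.

    An outage between action() and the checkpoint write reruns that step,
    so each action should be idempotent.
    """
    executed = []
    for name, action in steps:
        key = f"{run_id}:{name}"
        if key in checkpoints:
            continue  # finished during an earlier, interrupted attempt
        action()
        checkpoints.add(key)
        executed.append(name)
    return executed


store: set[str] = set()
outage = {"active": True}


def load() -> None:
    if outage["active"]:
        raise ConnectionError("worker lost in zonal outage")


steps = [("extract", lambda: None), ("load", load), ("publish", lambda: None)]
try:
    run_workflow("run-1", steps, store)
except ConnectionError:
    pass
print(sorted(store))                         # ['run-1:extract']
outage["active"] = False
print(run_workflow("run-1", steps, store))   # ['load', 'publish']
```

The checkpoint set also gives you a direct way to detect a partially executed run: any run ID with some, but not all, of its steps recorded.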

In Cloud Composer 1, during a zone outage, you can choose to start a new Cloud Composer environment in another zone. Because Airflow keeps the state of your workflows in its metadata database, transferring this information to a new Cloud Composer environment can take additional steps and preparation.

In Cloud Composer 2, you can address zonal outages by setting up disaster recovery with environment snapshots in advance. During a zone outage, you can switch to another environment by transferring the state of your workflows with an environment snapshot. Only Cloud Composer 2 supports disaster recovery with environment snapshots.

Cloud Data Fusion

Cloud Data Fusion is a fully managed enterprise data integration service for quickly building and managing data pipelines. It provides three editions.

Zonal outages impact Developer edition instances.

Regional outages impact Basic and Enterprise edition instances.

To control access to resources, you might design and run pipelines in separate environments. This separation lets you design a pipeline once, and then run it in multiple environments. You can recover pipelines in both environments. For more information, see Back up and restore instance data.

The following advice applies to both regional and zonal outages.

Outages in the pipeline design environment

In the design environment, save pipeline drafts in case of an outage. Depending on specific RTO and RPO requirements, you can use the saved drafts to restore the pipeline in a different Cloud Data Fusion instance during an outage.

Outages in the pipeline execution environment

In the execution environment, you start the pipeline internally with Cloud Data Fusion triggers or schedules, or externally with orchestration tools, such as Cloud Composer. To be able to recover runtime configurations of pipelines, back up the pipelines and configurations, such as plugins and schedules. In an outage, you can use the backup to replicate an instance in an unaffected region or zone.

Another way to prepare for outages is to run multiple instances across regions with the same configuration and pipeline set. If you use external orchestration, pipeline runs can be load balanced automatically between instances. Make sure that no resource used by all instances, such as a data source or an orchestration tool, is tied to a single region, because it could become a single point of failure during an outage. For example, you can run multiple instances in different regions and use Cloud Load Balancing and Cloud DNS to direct pipeline run requests to an instance that isn't affected by an outage (see the example tier one and tier three architectures).
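The following Python sketch models two parts of this multi-instance approach. It sends a pipeline run request to the first instance, in preference order, that sits outside an impaired region. It also flags dependencies that every instance shares and that live in a single region. The instance names, regions, and dependency labels are hypothetical. In a real deployment, Cloud Load Balancing and Cloud DNS make the routing decision based on health checks, not a hardcoded set of impaired regions.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Instance:
    name: str    # hypothetical Cloud Data Fusion instance name
    region: str


def route_run_request(instances: List[Instance], impaired_regions: Set[str]) -> Instance:
    """Return the first instance, in preference order, outside impaired regions."""
    for inst in instances:
        if inst.region not in impaired_regions:
            return inst
    raise RuntimeError("no instance available outside the impaired regions")


def single_region_shared_dependencies(deps: Dict[str, Dict[str, str]]) -> Set[str]:
    """Find dependencies that every instance uses and that all live in one region.

    deps maps instance name -> {dependency name: region of that dependency}.
    """
    if not deps:
        return set()
    shared = set.intersection(*(set(d) for d in deps.values()))
    return {dep for dep in shared if len({d[dep] for d in deps.values()}) == 1}


instances = [Instance("fusion-primary", "us-central1"), Instance("fusion-standby", "europe-west1")]
print(route_run_request(instances, {"us-central1"}).name)  # fusion-standby
```

Any dependency that `single_region_shared_dependencies` returns is a candidate single point of failure for the whole multi-instance setup.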

Outages for other Google Cloud data services in the pipeline

Your instance might use other Google Cloud services, such as Dataproc, Cloud Storage, or BigQuery, as data sources or pipeline execution environments. Those services can be in different regions. When cross-regional execution is required, a failure in either region leads to an outage. In this scenario, follow the standard disaster recovery steps, keeping in mind that a cross-regional setup with critical services in different regions is less resilient.
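The reason a cross-regional dependency chain is less resilient can be shown with simple arithmetic. If a pipeline needs every component to be up, its availability is the product of the component availabilities, assuming that failures are independent. The 99.9% figures in this Python sketch are hypothetical and aren't published SLAs.

```python
import math
from typing import List


def serial_availability(components: List[float]) -> float:
    """Availability of a pipeline that needs every component to be up.

    Assumes component failures are independent.
    """
    return math.prod(components)


# Hypothetical per-region availability figures, not published SLAs.
single_region = serial_availability([0.999])
cross_region = serial_availability([0.999, 0.999])
print(f"{single_region:.6f} {cross_region:.6f}")  # 0.999000 0.998001
```

Adding a second region that must also be healthy roughly doubles the expected unavailability (from 0.1% to about 0.2%), even though each region on its own is equally reliable.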

Cloud Deploy

Cloud Deploy provides continuous delivery of workloads into runtime services such as GKE and Cloud Run. The service is composed of regional instances that synchronously replicate data across zones within the region.

Zonal outage: Control plane operations are unaffected. However, Cloud Build builds (for example, render or deploy operations) that are running when a zone fails are delayed or permanently lost. During an outage, the Cloud Deploy resource that triggered the build (a release or rollout) displays a failure status that indicates the underlying operation failed. You can re-create the resource to start a new build in the remaining functioning zones. For example, create a new rollout by redeploying the release to a target.

Regional outage: Control plane operations are unavailable, as is data from Cloud Deploy, until the region is restored. To make it easier to restore service after a regional outage, we recommend that you store your delivery pipeline and target definitions in source control. You can use these configuration files to re-create your Cloud Deploy pipelines in a functioning region. During an outage, data about existing releases is lost. Create a new release to continue deploying software to your targets.

Cloud DNS

Cloud DNS is a high-performance, resilient, global Domain Name System (DNS) service that publishes your domain names to the global DNS in a cost-effective way.

In the case of a zonal outage, Cloud DNS continues to serve requests from another zone in the same or different region without interruption. Updates to Cloud DNS records are synchronously replicated across zones within the region where they are received. Therefore, there is no data loss.

In the case of a regional outage, Cloud DNS continues to serve requests from other regions. It is possible that very recent updates to Cloud DNS records will be unavailable because updates are first processed in a single region before being asynchronously replicated to other regions.
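The following Python sketch shows why very recent record updates might be missing after a regional outage. An update becomes visible in other regions only after it has been replicated out of the region that received it. The fixed `replication_lag`, the timestamps, and the `RecordUpdate` type are simplifying assumptions for illustration, and real replication lag varies. The record names use the reserved `example.com` domain and documentation IP addresses.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RecordUpdate:
    name: str          # DNS name, for example "www.example.com."
    value: str
    applied_at: float  # seconds; when the receiving region processed the update


def visible_elsewhere(updates: List[RecordUpdate], outage_at: float,
                      replication_lag: float) -> Dict[str, str]:
    """Latest value per name that replicated out before the receiving region failed.

    Assumes a single, fixed replication lag, which is a simplification.
    """
    visible: Dict[str, str] = {}
    for u in sorted(updates, key=lambda x: x.applied_at):
        if u.applied_at + replication_lag <= outage_at:
            visible[u.name] = u.value
    return visible


updates = [
    RecordUpdate("www.example.com.", "203.0.113.10", applied_at=0.0),
    RecordUpdate("www.example.com.", "203.0.113.20", applied_at=95.0),
]
print(visible_elsewhere(updates, outage_at=100.0, replication_lag=10.0))
# {'www.example.com.': '203.0.113.10'}
```

In this model, the update applied 5 seconds before the outage hadn't finished replicating, so other regions keep serving the previous value.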

Cloud Run functions

Cloud Run functions is a stateless computing environment where customers can run their function code on Google's infrastructure. Cloud Run functions is a regional offering, meaning customers can choose the region but not the zones within it. Data and traffic are automatically load balanced across zones within a region. Functions are automatically scaled to meet incoming traffic and are load balanced across zones as necessary. Each zone maintains a scheduler that provides this autoscaling per zone. Each scheduler is also aware of the load that other zones are receiving and provisions extra capacity in its own zone to absorb a zonal failure.

Zonal outage: Cloud Run functions stores metadata as well as the deployed function. This data is stored regionally and written synchronously. The Cloud Run functions Admin API returns a response to an API call only after the data has been committed to a quorum within the region. Because the data is stored regionally, zonal failures don't affect data plane operations either. Traffic is automatically routed to other zones in the event of a zonal failure.
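The preceding paragraph says that data is committed to a quorum within the region but doesn't specify the quorum's size. The following Python sketch assumes a majority quorum over three zonal replicas, which is a common design but isn't a documented detail of Cloud Run functions. The zone labels are hypothetical.

```python
from typing import Dict


def majority(n_replicas: int) -> int:
    """Smallest number of replicas that forms a majority quorum."""
    return n_replicas // 2 + 1


def write_committed(acks: Dict[str, bool]) -> bool:
    """True once a majority of zonal replicas have durably stored the write.

    acks maps a (hypothetical) zone label -> whether that zone acknowledged.
    """
    return sum(acks.values()) >= majority(len(acks))


print(write_committed({"zone-a": True, "zone-b": True, "zone-c": False}))   # True
print(write_committed({"zone-a": True, "zone-b": False, "zone-c": False}))  # False
```

With three replicas, losing one zone still leaves a majority, so writes can commit and no acknowledged data is lost. This matches the zonal-outage behavior described in the preceding paragraph.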

Regional outage: Customers choose the Google Cloud region they want to create their function in. Data is never replicated across regions. Customer traffic will never be routed to a different region by Cloud Run functions. In the case of a regional failure, Cloud Run functions will become available again as soon as the outage is resolved. Customers are encouraged to deploy to multiple regions and use Cloud Load Balancing to achieve higher availability if desired.
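The benefit of deploying to multiple regions behind a load balancer can be estimated with the complement rule. The service is down only if every regional deployment is down at the same time. This Python sketch assumes that regional failures are independent and that the load balancer always routes away from a failed region. The 99.9% inputs are hypothetical and aren't SLA figures.

```python
from typing import List


def any_region_up(per_region: List[float]) -> float:
    """Probability that at least one regional deployment is serving.

    Assumes independent regional failures and a load balancer that always
    routes requests away from a failed region.
    """
    p_all_down = 1.0
    for availability in per_region:
        p_all_down *= 1.0 - availability
    return 1.0 - p_all_down


print(f"{any_region_up([0.999]):.6f}")         # 0.999000
print(f"{any_region_up([0.999, 0.999]):.6f}")  # 0.999999
```

Real regional failures aren't perfectly independent, and failover isn't instantaneous, so treat this estimate as an upper bound rather than a prediction.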

Cloud Healthcare API

Cloud Healthcare API, a service for storing and managing healthcare data, is built to provide high availability and offers protection against zonal and regional failures, depending on a chosen configuration.

Regional configuration: in its default configuration, Cloud Healthcare API offers protection against zonal failure. The service is deployed in three zones within one region, and data is also triplicated across different zones within the region. If a zonal failure affects either the service layer or the data layer, the remaining zones take over without interruption. With the regional configuration, if the whole region where the service is located experiences an outage, the service is unavailable until the region comes back online. In the unforeseen event that a whole region is physically destroyed, data stored in that region will be lost.

Multi-regional configuration: in its multiregional configuration, Cloud Healthcare API is deployed in three zones belonging to three different regions. Data is also replicated across three regions. This guards against loss of service in case of a whole-region outage, since the remaining regions would automatically take over. Structured data, such as FHIR, is synchronously replicated across multiple regions, so it's protected against data loss in case of a whole-region outage. Data that is stored in Cloud Storage buckets, such as DICOM and Dictation or large HL7v2/FHIR objects, is asynchronously replicated across multiple regions.

Cloud Identity

Cloud Identity services are distributed across multiple regions and use dynamic load balancing. Cloud Identity does not allow users to select a resource scope. If a particular zone or region experiences an outage, traffic is automatically distributed to other zones or regions.

Persistent data is mirrored in multiple regions with synchronous replication in most cases. For performance reasons, a few systems, such as caches or changes affecting large numbers of entities, are asynchronously replicated across regions. If the primary region in which the most current data is stored experiences an outage, Cloud Identity serves stale data from another location until the primary region becomes available.

Cloud Interconnect

Cloud Interconnect offers customers RFC 1918 access to Google Cloud networks from their on-premises data centers, over physical cables connected to Google peering edge.

Cloud Interconnect provides customers with a 99.9% SLA if they provision connections to two EADs (Edge Availability Domains) in one metropolitan area. A 99.99% SLA is available if the customer provisions connections to two EADs in each of two metropolitan areas, connected to two regions with Global Routing. For more information, see Topology for non-critical applications overview and Topology for production-level applications overview.
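The following Python sketch encodes the topology rules from the preceding paragraph as a checklist. It reports which SLA tier a set of connections appears to qualify for. It's a simplified illustration, not the actual SLA terms. The `Connection` type and the metro, EAD, and region labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Connection:
    metro: str   # metropolitan area (hypothetical label)
    ead: str     # edge availability domain within the metro (hypothetical label)
    region: str  # region that the connection's routing attaches to


def sla_tier(connections: List[Connection], global_routing: bool) -> Optional[str]:
    """Rough SLA tier implied by a topology; a simplification for illustration."""
    eads_by_metro: Dict[str, Set[str]] = {}
    for c in connections:
        eads_by_metro.setdefault(c.metro, set()).add(c.ead)
    # A metro is redundant when it has connections in at least two EADs.
    redundant_metros = [m for m, eads in eads_by_metro.items() if len(eads) >= 2]
    regions = {c.region for c in connections if c.metro in redundant_metros}
    if global_routing and len(redundant_metros) >= 2 and len(regions) >= 2:
        return "99.99%"
    if redundant_metros:
        return "99.9%"
    return None


four = [
    Connection("metro-a", "ead-1", "region-1"),
    Connection("metro-a", "ead-2", "region-1"),
    Connection("metro-b", "ead-1", "region-2"),
    Connection("metro-b", "ead-2", "region-2"),
]
print(sla_tier(four, global_routing=True))      # 99.99%
print(sla_tier(four, global_routing=False))     # 99.9%
print(sla_tier(four[:1], global_routing=True))  # None
```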

Cloud Interconnect is compute-zone independent and provides high availability in the form of EADs. In the event of an EAD failure, the BGP session to that EAD breaks and traffic fails over to the other EAD.

In the event of a regional failure, BGP sessions to that region break. When Global Routing is enabled, traffic then fails over to resources in the working region.

Cloud Key Management Service

Cloud Key Management Service (Cloud KMS) provides scalable and highly durable cryptographic key resource management. Cloud KMS stores all of its data and metadata in Spanner databases, which provide high data durability and availability with synchronous replication.

Cloud KMS resources can be created in a single region, multiple regions, or globally.

In the case of zonal outage, Cloud KMS continues to serve requests from another zone in the same or different region without interruption. Because data is replicated synchronously, there is no data loss or corruption. When the zone outage is resolved, full redundancy is restored.

In the case of a regional outage, regional resources in that region are offline until the region becomes available again. Note that even within a region, at least three replicas are maintained in separate zones. When higher availability is required, store resources in a multi-region or global configuration. Multi-region and global configurations are designed to stay available through a regional outage by geo-redundantly storing and serving data in more than one region.

Cloud External Key Manager (Cloud EKM)

Cloud External Key Manager is integrated with Cloud Key Management Service to let you control and access external keys through supported third-party partners. You can use these external keys to encrypt data at rest to use for other Google Cloud services that support customer-managed encryption keys (CMEK) integration.

Zonal outage: Cloud External Key Manager is resilient to zonal outages because of the redundancy that's provided by multiple zones in a region. If a zonal outage occurs, traffic is rerouted to other zones within the region. While traffic is rerouting, you might see an increase in errors, but the service is still available.
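Because error rates can rise briefly while traffic is rerouted, clients that use Cloud EKM keys generally benefit from retrying transient failures. The following Python sketch shows generic capped exponential backoff with full jitter. `TransientError`, `call_with_backoff`, and the retry parameters are hypothetical placeholders, and the sketch doesn't use any Cloud KMS client library. If your client library already offers configurable retries, prefer those.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for whatever retryable error your client raises."""


def call_with_backoff(fn: Callable[[], T], attempts: int = 5, base_delay: float = 0.2,
                      max_delay: float = 5.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient failures with capped exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries; surface the last error
            # The wait ceiling doubles each attempt, up to max_delay.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Jitter spreads retries from many clients over time, so they don't all hit the remaining zones at the same moment.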

Regional outage: Cloud External Key Manager isn't available during a regional outage in the affected region. There is no failover mechanism that redirects requests across regions. We recommend that customers use multiple regions to run their jobs.

Cloud External Key Manager doesn't store any customer data persistently, so a regional outage causes no data loss within the Cloud External Key Manager system. However, Cloud External Key Manager depends on the availability of other services, like Cloud Key Management Service and external third-party vendors. If those systems fail during a regional outage, you could lose data. The RPO and RTO of these systems are outside the scope of Cloud External Key Manager commitments.

Cloud Load Balancing

Cloud Load Balancing is a fully distributed, software-defined managed service. With Cloud Load Balancing, a single anycast IP address can serve as the frontend for backends in regions around the world. It isn't hardware-based, so you don't need to manage a physical load-balancing infrastructure. Load balancers are a critical component of most highly available applications.

Cloud Load Balancing offers both regional and global load balancers. It also provides cross-region load balancing, including automatic multi-region failover, which moves traffic to failover backends if your primary backends become unhealthy.

The global load balancers are resilient to both zonal and regional outages. The regional load balancers are resilient to zonal outages but are affected by outages in their region. However, in either case, it is important to understand that the resilience of your overall application depends not just on which type of load balancer you deploy, but also on the redundancy of your backends.
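The following Python sketch shows why backend redundancy matters as much as the load balancer type. Even a global load balancer can't keep serving during a regional outage if all of its backends are in the failed region. The function name, its parameters, and the region names are hypothetical. The model ignores capacity limits and health-check timing.

```python
from typing import Optional, Set


def survives_regional_outage(lb_scope: str, lb_region: Optional[str],
                             backend_regions: Set[str], failed_region: str) -> bool:
    """Whether an application keeps serving when one region fails.

    lb_scope is "global" or "regional"; lb_region only applies to regional
    load balancers. Simplified: ignores capacity and health-check timing.
    """
    if lb_scope not in ("global", "regional"):
        raise ValueError(f"unknown load balancer scope: {lb_scope!r}")
    if lb_scope == "regional" and lb_region == failed_region:
        return False  # the load balancer itself is in the failed region
    return bool(backend_regions - {failed_region})


print(survives_regional_outage("global", None, {"us-central1"}, "us-central1"))  # False
print(survives_regional_outage("global", None, {"us-central1", "europe-west1"}, "us-central1"))  # True
print(survives_regional_outage("regional", "us-central1", {"us-central1"}, "us-central1"))  # False
```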

For more information about Cloud Load Balancing and its features, see Cloud Load Balancing overview.

Cloud Logging

Cloud Logging consists of two main parts: the Logs Router and Cloud Logging storage.

The Logs Router handles streaming log events and directs the logs to Cloud Storage, Pub/Sub, BigQuery, or Cloud Logging storage.

Cloud Logging storage is a service for storing, querying, and managing compliance for logs. It supports many users and workflows including development, compliance, troubleshooting, and proactive alerting.

Logs Router & incoming logs: During a zonal outage, the Cloud Logging API routes logs to other zones in the region. Normally, logs being routed by the Logs Router to Cloud Logging, BigQuery, or Pub/Sub are written to their end destination as soon as possible, while logs sent to Cloud Storage are buffered and written in batches hourly.

Log Entries: In the event of a zonal or regional outage, log entries that have been buffered in the affected zone or region and not yet written to the export destination become inaccessible. Logs-based metrics are also calculated in the Logs Router and are subject to the same constraints. Once delivered to the selected log export location, logs are replicated according to the destination service. Logs that are exported to Cloud Logging storage are synchronously replicated across two zones in a region. For the replication behavior of other destination types, see the relevant section in this article. Note that logs exported to Cloud Storage are batched and written every hour. Therefore, we recommend using Cloud Logging storage, BigQuery, or Pub/Sub to minimize the amount of data affected by an outage.
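The following Python sketch shows how the choice of destination affects exposure. It estimates the upper bound on logs that are buffered but not yet written when a zone or region fails. The intervals come from the text: Cloud Storage sinks are batched hourly, and the other destinations are written as soon as possible. Modeling "as soon as possible" as zero is an idealization. The dictionary keys are illustrative labels, not API values.

```python
from typing import Dict

# Approximate write delay per destination, in minutes. Cloud Storage is batched
# hourly; the others are written as soon as possible, idealized here as 0.
BATCH_INTERVAL_MINUTES: Dict[str, float] = {
    "cloud_storage": 60.0,
    "logging_storage": 0.0,
    "bigquery": 0.0,
    "pubsub": 0.0,
}


def minutes_of_logs_at_risk(destination: str, minutes_since_last_batch: float) -> float:
    """Upper bound on buffered, not-yet-written logs if the zone or region fails now."""
    return min(minutes_since_last_batch, BATCH_INTERVAL_MINUTES[destination])


print(minutes_of_logs_at_risk("cloud_storage", 45.0))  # 45.0
print(minutes_of_logs_at_risk("bigquery", 45.0))       # 0.0
```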

Log Metadata: Metadata such as sink and exclusion configurations is stored globally but cached regionally. During an outage, the regional Logs Router instances therefore continue to operate. A single-region outage has no impact outside that region.

Cloud Monitoring

Cloud Monitoring consists of a variety of interconnected features, such as dashboards (both built-in and user-defined), alerting, and uptime monitoring.