Abstract

Computational Pathology (CPath) is an interdisciplinary science that advances the development of computational approaches to analyze and model medical histopathology images. The main objective of CPath is to develop the infrastructure and workflows of digital diagnostics as an assistive CAD system for clinical pathology, facilitating transformational changes in the diagnosis and treatment of cancer, which CPath tools mainly address. With ever-growing developments in deep learning and computer vision algorithms, and the ease of data flow from digital pathology, CPath is currently witnessing a paradigm shift. Despite the sheer volume of engineering and scientific works being introduced for cancer image analysis, there is still a considerable gap in adopting and integrating these algorithms into clinical practice. This raises a significant question regarding the direction and trends of current work in CPath. In this article we provide a comprehensive review of more than 800 papers to address the challenges faced from problem design all the way to application and implementation. We have catalogued each paper into a model card by examining the key works and challenges faced, in order to lay out the current landscape in CPath. We hope this helps the community locate relevant works and facilitates understanding of the field’s future directions. In a nutshell, we view CPath developments as a cycle of stages that must be cohesively linked together to address the challenges associated with such a multidisciplinary science. We overview this cycle from the perspectives of data-centric, model-centric, and application-centric problems. We finally sketch remaining challenges and provide directions for future technical developments and clinical integration of CPath. For updated information on this survey and access to the original model card repository, please refer to GitHub. An updated version of this draft can also be found on arXiv.

Keywords: Digital pathology, Whole slide image (WSI), Deep learning, Computer aided diagnosis (CAD), Clinical pathology, Survey

1. Introduction

April 2017 marked a turning point for digital pathology when the Philips IntelliSite digital scanner received US Food and Drug Administration (FDA) approval (with limited use case) for diagnostic applications in clinical pathology.1,2 A subsequent validation guideline was created to help ensure the produced Whole Slide Image (WSI) scans could be used in clinical settings without compromising patient care, while maintaining similar results to the current gold standard of optical microscopy.3, 4, 5, 6 The use of WSIs offers significant advantages to the pathologist’s workflow: digitally captured images, compared to tissue slides, are immune from accidental physical damage and maintain their quality over time.7,8 Clinics and practices can share and store these high-resolution images digitally, enabling asynchronous viewing and collaboration worldwide.9,10 The development of digital pathology shows great promise as a framework to improve work efficiency in the practice of pathology.10,11 Adopting a digital workflow also opens immense opportunities for using computational methods to augment and expedite the pathologist’s workflow; the field of Computational Pathology (CPath) is dedicated to researching and developing these methods.12, 13, 14, 15, 16, 17

However, despite the aforementioned advantages, the adoption of digital pathology, and hence computational pathology, has been slow. Some pathologists consider the analysis of WSIs as opposed to glass slides an unnecessary change in their workflow9,18, 19, 20 and recent surveys indicate that the switch to digital pathology does not provide enough financial incentive.8,21, 22, 23, 24, 25 This is where advances from CPath can address or outweigh many of the concerns in adopting a digital workflow. For example, CPath models that identify morphological features correlating with breast cancer26 provide substantial benefits to clinical accuracy. Further, CPath models that identify lymph node metastases with better sensitivity while reducing diagnostic time27 can streamline workflows to increase pathologist throughput and generate more revenue.28,29

Similar to digital pathology, the adoption of CPath methods has also lagged despite the many benefits it offers to improve efficiency and accuracy in pathology.2,30, 31, 32 This lack of adoption and integration into clinical practice raises a significant question regarding the direction and trends of current work in CPath. This survey looks to review the field of CPath in a systematic fashion by breaking down the various steps involved in a CPath workflow and categorizing CPath works to both determine trends in the field and provide a resource for the community to reference when creating new works.

Existing survey papers in the field of CPath can be clustered into a few groups. The first focuses on the design and applications of smart diagnosis tools.15, 16, 17,33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43 These works focus on designing novel architectures for artificial intelligence (AI) models with regard to specific clinical tasks, although they may briefly discuss clinical challenges and limitations. A second group of works focuses on clinical barriers to AI integration, discussing the specific certifications and regulations required for the development of medical devices under clinical settings.44, 45, 46, 47, 48, 49 The final group focuses on both the design and the integration of AI tools with clinical applications.12, 13, 14,29,50, 51, 52, 53, 54, 55, 56 These works speak to both the computer vision and pathology communities in developing machine learning (ML) models that can satisfy clinical use cases.

Our work is situated in this final group as we break down the end-to-end CPath workflow into stages and systematically review works related to and addressing those stages. We view this as a workflow for CPath research that breaks down the process of problem definition, data collection, model creation, and clinical validation into a cycle of stages. A visual representation of this cycle is provided in Fig. 1. We review over 700 papers from all areas of the CPath field to examine key works and challenges faced. By reviewing the field so comprehensively, our goal is to lay out the current landscape of key developments to allow computer scientists and pathologists alike to situate their work in the overall CPath workflow, locate relevant works, and facilitate an understanding of the field’s future directions. We also adopt the idea of generating model cards from prior work57 and design a card format specifically tailored for CPath. Each paper we reviewed was catalogued as a model card that concisely describes (1) the organ of application, (2) the compiled dataset, (3) the machine learning model, and (4) the target task. The complete model card categorization of the reviewed publications is provided in Appendix A.12 for the reader’s use.
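As an illustration of how the four model card fields listed above support retrieval and comparison, the following is a minimal sketch in Python. The class name `CPathModelCard`, the helper `filter_cards`, and the example entries are hypothetical placeholders introduced here; they are not the format of the actual repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CPathModelCard:
    """Hypothetical container for the four fields named in the text."""
    organ: str    # (1) organ of application
    dataset: str  # (2) compiled dataset
    model: str    # (3) machine learning model
    task: str     # (4) target task


def filter_cards(cards, **criteria):
    """Return the cards whose fields match every criterion (case-insensitive)."""
    return [
        card for card in cards
        if all(getattr(card, field).lower() == value.lower()
               for field, value in criteria.items())
    ]


# Placeholder entries, not taken from the survey's appendix.
cards = [
    CPathModelCard("breast", "example-dataset-A", "CNN", "detection"),
    CPathModelCard("colon", "example-dataset-B", "U-Net", "segmentation"),
    CPathModelCard("breast", "example-dataset-C", "CNN", "segmentation"),
]

breast_cards = filter_cards(cards, organ="Breast")
print([card.dataset for card in breast_cards])
```

Keeping the card to a small, fixed set of fields is what makes this kind of consistent filtering across papers possible.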

Fig. 1.

We divide the data science workflow for pathology into multiple stages, wherein each brings a different level of experience. For example, the annotation/ground truth labelling stage (c) is where domain expert knowledge is consulted to augment images with associated metadata. Meanwhile, in the evaluation phase (e), we have computer vision scientists, software developers, and pathologists working in concert to extract meaningful results and implications from the representation learning.

In our review of the CPath field, we find that two main approaches emerge in works: 1) a data-centric approach and 2) a model-centric approach. Considering a given application area, such as specific cancers, e.g. breast ductal carcinoma in-situ (DCIS), or a specific task, e.g. segmentation of benign and malignant regions of tissue, researchers in the CPath field focus generally on either improving the data or innovating on the model used.

Works with data-centric approaches focus on collecting pathology data and compiling datasets to train models on certain tasks based on the premise that the transfer of domain expert knowledge to models is captured by the process of collecting and labeling high-quality data.51,58,59 The motivation behind this approach in CPath is driven by the need to 1) address the lack of labeled WSI data representing both histology and histopathology cases due to the laborious annotation process24 and 2) capture a predefined pathology ontology provided by domain expert pathologists for the class definitions and relations in tissue samples. Regarding the lack of labeled WSI data, our analysis reveals that although a larger number of datasets carry granular labels, a larger total amount of data for a given organ and disease application carries weakly supervised labels at the Slide or Patient level. Although some tasks, such as segmentation and detection, require WSI data to have more granular labels at the region-of-interest (ROI) or image mosaic/tile (known as patch) levels to capture more precise information for training models, there remains a gap in leveraging the large amount of weakly supervised data to train models that can later be used downstream on smaller strongly supervised datasets for those tasks. When considering the ontology of pathology as compared to the field of computer vision, we note that pathology datasets have far fewer classes than computer vision datasets (e.g. ImageNet-20K contains 20,000 class categories for natural images60 whereas CAMELYON17 has four annotated classes for breast cancer metastases61), but have much more variation within each of these classes in terms of representations, along with fuzzy boundaries around the grade of cancers, which in reality subdivide each class into many more. There are also very rare classes in the form of rare diseases and cancers, as presented in Fig. 12 and discussed in Section 2, which present a class imbalance challenge when compiling data or training models. If one considers the complexities involved in representational learning of related tissues and diseases, it raises the question of whether there is a clear understanding and consensus in the field of how an efficient dataset should be compiled for model development. Our survey analyzes the availability of CPath datasets along with what area of application they address and their annotation level in detail in Section 3.3, and the complete table of datasets we have covered is available in Appendix A.9. Section 4 goes into more depth about the various levels of annotation, the annotation process, and selecting the appropriate annotation level for a task.

The model-centric approach, by contrast, is favoured by computer scientists and engineers, who design algorithmic approaches based on the available pathology data. Selection of a modelling approach, such as self-supervised, weakly-supervised, or strongly-supervised learning, is dictated directly by the amount of data available for a given annotation level and task. Currently, many models are developed on datasets with strongly-supervised labels at the ROI, Patch, or Pixel-levels to address tasks such as tissue type classification or disease detection. However, a recent trend is developing to apply self-supervised and weakly-supervised learning methods to leverage the large amount of data with Slide and Patient-level annotations.62 Models are trained in a self or weakly supervised manner to learn representations on a wider range of pathology data across organs and diseases, which can be leveraged for other tasks requiring more supervision but without the need for massive labeled datasets.63, 64, 65 This trend points to the future direction of CPath models following a similar trend to that in computer vision, where large-scale models are being pre-trained using self-supervised techniques to achieve state-of-the-art performance in downstream tasks.66,67

Although data-centric and model-centric approaches are both important in advancing the performance of models and tools in CPath, we note a need for much more application-centric work in CPath. We define a study to be application-centric if the primary focus is on addressing a particularly impactful task or need in the clinical workflow, ideally including clinical validation of the method or tool. To this end, Section 2 details the clinical pathology workflow from specimen collection to report generation, major task categories in CPath, and specific applications per organ. In particular, we find that very few works focus on the pre- or post-analytical phases of the pathology workflow where many errors can occur, instead focusing on the analytical phase where interpretation tasks take place. Additionally, certain types of cancer with poor survival rates are underrepresented in CPath datasets and works. Very few CPath models and tools have been validated in a clinical setting by pathologists, suggesting that there may still be massive barriers to actually using CPath tools in practice. All of this points to a severe oversight by the CPath community towards considering the actual application and implementation of tools in a clinical setting. We suspect this to be a major reason why the uptake of CPath tools by pathology labs has been slow.

The contributions of this survey include the provision of an end-to-end workflow for developing CPath work which outlines the various stages involved and is reflected within the survey sections. Further, we propose and provide a comprehensive conceptual model card framework for CPath that clearly categorizes works by their application of interest, dataset usage, and model, enabling consistent and easy comparison and retrieval of papers in relevant areas. Based on our analysis of the field, we highlight several challenges and trends, including the availability of datasets, focus on models leveraging existing data, disregard of impactful application areas, and lack of clinical validation. Finally, we give suggestions for addressing these aforementioned challenges and provide directions for future work in the hopes of aiding the adoption and implementation of CPath tools in clinical settings.

The structure of this survey closely follows the CPath data workflow illustrated in Fig. 1. Section 2 begins by outlining the clinical pathology workflow and covers the various task domains in CPath, along with organ specific tasks and diseases. The next step of the workflow involves the processes and methods of histopathology data collection, which is outlined in Section 3. Following data collection, Section 4 details the corresponding annotation and labeling methodology and considerations. Section 5 covers deep learning designs and methodologies for CPath applications. Section 6 focuses on regulatory measures and clinical validation of CPath tools. Section 7 explores emerging trends in recent CPath research. Finally, we provide our perceived challenges and future outlook of CPath in Section 8.

2. Clinical applications for CPath

The field of CPath is dedicated to the creation of tools that address and aid steps in the clinical pathology workflow. Thus, a grounded understanding of the clinical workflow is of paramount importance before development of any CPath tool. The outcomes of clinical pathology are diagnostics, prognostics, and predictions of therapy response. Computational pathology systems that focus on diagnostic tasks aim to assist the pathologists in tasks such as tumour detection, tumour grading, quantification of cell numbers, etc. Prognostic systems aim to predict survival for individual patients while therapy response predictive models aid personalized treatment decisions based on histopathology images. Fig. 3 visualizes the goals pertaining to these tasks. In this section, we provide detail on the clinical pathology workflow, the major application areas in diagnostics, prognostics, and therapy response, and finally detail the cancers and CPath applications in specific organs. The goal is to outline the tasks and areas of application in pathology where CPath tools and systems can be developed and implemented.

Fig. 3.

The categorization of diagnostic tasks in computational pathology along with examples A) Detection: common detection task such as differentiating positive from negative classes like malignant from benign, B) Tissue Subtype Classification: classification task for tumorous tissue, Stroma, and adipose tissue, C) Disease Diagnosis: common disease diagnosis task like cancer staging, D) Segmentation: tumor segmentation in WSIs, and E) Prognosis tasks: shows a graph comparing survival rate and months after surgery.

2.1. Clinical pathology workflow

This subsection provides a general overview of the clinical workflow in pathology covering the collection of a tissue sample, its subsequent processing into a slide, inspection by a pathologist, and compilation of the analysis and diagnosis into a pathology report. Fig. 2 summarizes these steps at a high level and provides suggestions for corresponding CPath applications. The steps are organized under the conventional pathology phases for samples: pre-analytical, analytical, and post-analytical. These phases were developed to categorize quality control measures, as each phase has its own set of potential sources of errors,68 and thus potential points of correction at which CPath and healthcare artificial intelligence tools could prove useful. For details about each step of the workflow, please refer to Appendix A.1.

Fig. 2.

Quality assurance and control phases developed by pathologists to organize the clinical pathology workflow into three main phases: pre-analytical, analytical, and post-analytical. We further show how each of these processes can be augmented by potential CPath applications in an end-to-end pipeline.

Pre-Analytical Phase The first step of the pre-analytical phase is a biopsy performed to collect a tissue sample, where the biopsy method is dependent on the type of sample required and the tissue characteristics. Sample collection is followed by accessioning of the sample, which involves entering the patient and specimen information into a Laboratory Information System (LIS) and linking to the Electronic Medical Records (EMR) and potentially a Slide Tracking System (STS). After accessioning, smaller specimens that have not already been preserved by fixation in formalin are fixed. Once the basic specimen preparation has occurred, the tissue is analyzed by the pathology team without the use of a microscope, a step called grossing. Grossing involves cross-referencing the clinical findings and the EMR reports, with the operator localizing the disease, locating the pathological landmarks, describing these landmarks, and measuring disease extent. Specific sampling of these landmarks is performed, and these samples are then put into cassettes for the final fixation. Subsequently, the samples are sliced using a microtome, stained using the relevant stains for diagnosis, and covered with a glass slide.

Analytical Phase After a slide is processed and prepared, a pathologist views the slide to analyze and interpret the sample. The approach to interpretation varies depending on the specimen type. Interpretation of smaller specimens is focused on diagnosis of any disease. Analysis is performed in a decision-tree style approach to add diagnosis-specific parameters, e.g. esophagus biopsy → type of sampled mucosa → presence of foveolar-type mucosa → identify Barrett’s metaplasia → identify degree of dysplasia. Once the main diagnosis has been identified and characterized, the pathologist sweeps the remaining tissue for secondary diagnoses which can also be characterized depending on their nature. Larger specimens are more complex and usually focus on characterizing the tissue and identifying unexpected diagnoses beyond the prior diagnosis from a small specimen biopsy. Microscopic interpretation of large specimens is highly dependent on the quality of the grossing and the appropriate detection and sampling of landmarks. Each landmark (e.g., tumor surface, tumor at deepest point, surgical margins, lymph node in mesenteric fat) is characterized either according to guidelines, if available, or according to the pathologist’s judgment. After the initial microscopic interpretation, additional deeper cuts (“levels”), special stains, immunohistochemistry (IHC), and/or molecular testing may be performed to hone the diagnosis by generating new material or slides from the original tissue block.

Post-Analytical Phase The pathologist synthesizes a diagnosis by aggregating their findings from grossing and microscopic examination in combination with the patient’s clinical information, all of which are included in a final pathology report. The classic sections of a pathology report are patient information, a list of specimens included, clinical findings, grossing report, microscopic description, final diagnosis, and comment. The length and degree of complexity of the report again depends on the specimen type. Small specimen reports are often succinct, clearly and unambiguously listing relevant findings which guide treatment and follow-up. Large specimen reports depend on the disease, for example, in cancer resection specimens the grossing landmarks are specifically targeted at elements that will guide subsequent treatment.

In the past, pathology reports had no standardized format, usually taking a narrative free-text form. Free-text reports can omit necessary data, include irrelevant information, and contain inconsistent descriptions.69 To combat this, synoptic reporting was introduced to provide a structured and standardized reporting format specific to each organ and cancer of interest.69,70 Over the last 15 years, synoptic reporting has enabled pathologists to communicate information to surgeons, oncologists, patients, and researchers in a consistent manner across institutions and even countries. The College of American Pathologists (CAP) and the International Collaboration on Cancer Reporting (ICCR) are the two major institutions publishing synoptic reporting protocols. The parameters included in these protocols are determined and updated by CAP and ICCR respectively to remain up-to-date and relevant for diagnosis of each cancer type. For the field of computational pathology, synoptic reporting provides a significant advantage in dataset and model creation, as a pre-normalized set of labels exists across a variety of cases and slides in the form of the synoptic parameters filled out in each report. Additionally, suggestion or prediction of synoptic report values is a possible CPath application area.

2.2. Diagnostic tasks

Computational pathology systems that focus on diagnostic tasks can broadly be categorized as: (1) disease detection, (2) tissue subtype classification, (3) disease diagnosis, and (4) segmentation. These tasks are visually depicted in Fig. 3. Note how these tasks all involve visual analysis of the tissue in WSI format. Thus, computer vision approaches are primarily adopted to tackle diagnostic tasks in computer aided diagnosis (CAD). For additional detail on some previous works on these diagnostic tasks, we refer the reader to Appendix A.2.

Detection We define the detection task as a binary classification problem where inputs are labeled as positive or negative, indicating the presence or absence of a certain feature. There may be variations in the level of annotation required, e.g. slide-level, patch-level, or pixel-level detection, depending on the feature in question. Although detection tasks may not provide an immediate disease diagnosis, detection is highly relevant in many pathology workflows, as pathologists incorporate the presence or absence of various histological features into synoptic reports that lead to diagnosis. Broadly, detection tasks fall into two main categories: (1) screening for the presence of cancers and (2) detecting histopathological features specific to certain diagnoses.

Cancer detection algorithms can assist pathologists by filtering out obviously normal WSIs and directing the pathologist’s focus to metastatic regions.71 Although pathologists have to review all the slides to check for multiple conditions regardless of the clinical diagnosis, an accurate cancer detection CAD would expedite the workflow by pinpointing the ROIs and summarizing results into synoptic reports, ultimately leading to a reduced time per slide. Due to this potential impact, cancer detection tasks have been explored in a broad set of organs. Additionally, the simple labeling in binary detection tasks allows for deep learning methods to generalize across different organs where similar cancers form.72, 73, 74
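To make the binary framing of detection concrete, the sketch below turns patch-level probabilities into a slide-level positive/negative call and scores the result with sensitivity and specificity. The max-pooling aggregation rule, the 0.5 threshold, the function names, and the toy values are all assumptions chosen for illustration; they are not taken from any of the surveyed works.

```python
def slide_level_label(patch_probs, threshold=0.5):
    """Call a slide positive if any patch probability reaches the threshold.

    Max-pooling over patches is one simple aggregation rule, assumed here
    purely for illustration.
    """
    if not patch_probs:
        raise ValueError("slide has no patches")
    return int(max(patch_probs) >= threshold)


def sensitivity_specificity(y_true, y_pred):
    """Compute (sensitivity, specificity) for binary labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


# Toy patch probabilities per slide (hypothetical values).
slides = {
    "s1": [0.10, 0.20, 0.90],
    "s2": [0.05, 0.10],
    "s3": [0.40, 0.45],
    "s4": [0.70, 0.20],
}
truth = {"s1": 1, "s2": 0, "s3": 1, "s4": 0}

names = sorted(slides)
preds = [slide_level_label(slides[name]) for name in names]
sens, spec = sensitivity_specificity([truth[name] for name in names], preds)
print(preds, sens, spec)
```

In a screening setting, the threshold would typically be tuned toward high sensitivity so that obviously normal slides can be filtered without missing positive cases.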

Tissue Subtype Classification Treatment and patient prognosis can vary widely depending on the stage of cancer, and finely classifying specific tissue structures associated with a specific disease type provides essential diagnostic and prognostic information.75 Accordingly, accurately classifying tissue subtypes is a crucial component of the disease diagnosis process. As an example, discriminating between two forms of glioma (a type of brain cancer), glioblastoma multiforme and lower grade glioma, is critical as they differ by over in patient survival rates.76 Additionally, accurate classification is key in colorectal cancer (CRC) diagnosis, as high morphological variation in tumor cells77 makes certain forms of CRC difficult to diagnose by pathologists.78 We define this classification of histological features as tissue subtype classification.

Disease Diagnosis The most frequently explored design of deep learning in digital pathology involves emulating pathologist diagnosis. We define this multi-class diagnosis problem as a disease diagnosis task. Note the similarity with detection: disease diagnosis can be considered a fine-grained classification problem which subdivides the general positive disease class into finer disease-specific labels based on the organ and patient context.

Segmentation The segmentation task moves one step beyond classification by adding an element of spatial localization to the predicted label(s). In semantic segmentation, objects of interest are delineated in an image by assigning class labels to every pixel. These class labels can be discrete or non-discrete, the latter being a more difficult task.79 Another variant of the segmentation task is instance segmentation, which aims to achieve both pixel-level segmentation accuracy as well as clearly defined object (instance) boundaries. Segmentation approaches can accurately capture many morphological statistics80 and textural features,81 both of which are relevant for cancer diagnosis and prognosis. Most frequently, segmentation is used to capture characteristics of individual glands, nuclei, and tumor regions in WSIs. For instance, glandular structure is a critical indicator of the severity of colorectal carcinoma,82 thus accurate segmentation could highlight particularly abnormal glands to the pathologist as demonstrated in.82, 83, 84 Overall, segmentation provides localization and classification of cancer-specific tumors and of specific histological features that can be meaningful for the pathologist’s clinical interpretation.
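As a small illustration of pixel-wise labeling, the sketch below compares a predicted semantic segmentation mask against a reference mask using the Dice coefficient and derives a simple morphological statistic, the fraction of pixels per class. The masks, the class coding (0 = background, 1 = gland, 2 = tumor), and the function names are hypothetical, and Dice is used here only as one common overlap measure.

```python
from collections import Counter


def dice(pred, truth, cls):
    """Dice overlap for one class between two equally sized label masks."""
    inter = pred_n = truth_n = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            pred_n += int(p == cls)
            truth_n += int(t == cls)
            inter += int(p == cls and t == cls)
    denom = pred_n + truth_n
    # Convention assumed here: a class absent from both masks counts as perfect overlap.
    return 1.0 if denom == 0 else 2.0 * inter / denom


def class_fractions(mask):
    """Fraction of pixels assigned to each class label in a mask."""
    counts = Counter(label for row in mask for label in row)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


# Toy 3x4 masks; every pixel carries exactly one class label.
truth_mask = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 2, 2],
]
pred_mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
]

print(dice(pred_mask, truth_mask, cls=1))
print(dice(pred_mask, truth_mask, cls=2))
print(class_fractions(truth_mask))
```

Instance segmentation would additionally require separating touching objects of the same class, which this per-class overlap does not capture.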

2.3. Prognosis

Prognosis involves predicting the likely development of a disease based on given patient features. For accurate survival prediction, models must learn to both identify and infer the effects of histological features on patient risk. Prognosis represents a merging of the diagnosis classification task and the disease-survivability regression task.

Training a model for prognosis requires a comprehensive set of both histopathology slides and patient survival data (i.e. a variant of multi-modal representation learning). Despite the complexity of the input data, ML models are still capable of extracting novel histological patterns for disease-specific survivability.85, 86, 87 Furthermore, strong models can discover novel prognostically-relevant histological features from WSI analysis.88,89 As the quality and comprehensiveness of data improves, additional clinical factors could be incorporated into deep learning analysis to improve prognosis.
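Because prognosis combines a risk prediction with time-to-event data, survival models are often scored by how well predicted risks order patients by survival time. The sketch below computes a concordance index in the style of Harrell's C on toy data; it is offered as one common evaluation choice rather than the metric used by any specific work cited here, and all values and names are hypothetical.

```python
def concordance_index(times, events, risks):
    """Fraction of comparable patient pairs whose predicted risks are ordered correctly.

    times  : observed follow-up time per patient
    events : 1 if the event (e.g. death) was observed, 0 if censored
    risks  : model-predicted risk score (higher means worse expected outcome)
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable when patient i had an observed event
            # strictly before patient j's follow-up time.
            if events[i] == 1 and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5  # ties in risk count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy cohort: months of follow-up, event indicator, predicted risk.
times = [5, 10, 12, 20]
events = [1, 0, 1, 1]
risks = [0.9, 0.4, 0.1, 0.6]

print(concordance_index(times, events, risks))
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering; censored patients contribute only as the later member of a pair.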

2.4. Prediction of treatment response

With the recent advances in targeted therapy for cancer treatment, clinicians are able to use treatment options that precisely identify and attack certain types of cancer cells. As the number of options for targeted therapy is constantly increasing, it becomes increasingly important to identify patients who are likely responders to a specific therapy option and to avoid treating non-responding patients who may experience severe side effects. Deep learning can be used to detect structures and transformations in tumour tissue that could be used as predictive markers of a positive treatment response. Training such deep learning models usually requires large cohorts of patient data for whom the specific type of treatment option and the corresponding response is known.

2.5. Organs and diseases

This section presents an overview of the various anatomical application areas for computational pathology grouped by the targeted organ. Each organ section gives a brief overview of the types of cancers typically found and the content of the pathology report as noted from the corresponding CAP synoptic reporting outline (discussed in Section 2.1). Fig. 4 highlights the intersection between the major diagnostic tasks and the anatomical focuses in state-of-the-art research. The majority of papers are dedicated to the four most common cancer sites: breast, colon, prostate, and lung.90 A significant amount of research is also done on the cancer types with the highest mortality: brain and liver.90 Note that details of some additional works that may be of interest for each organ type can be found in Appendix A.7 (see Fig. 5).

Fig. 4.

Distribution of diagnostic tasks in CPath for different organs from Table 9.11. This distribution includes more than 400 cited works from 2018 to 2022 inclusive. The x-axis covers different organs, the y-axis displays different diagnostic tasks, and the height of the bars along the vertical axis measures the number of works that have examined the specific task and organ. Please refer to Table 9.11 in the supplementary section for more information.

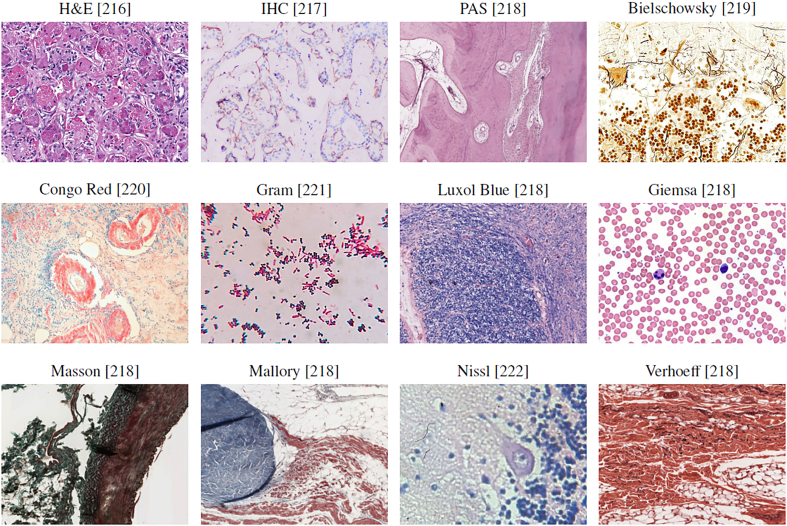

Fig. 5.

WSI tissue images with different types of histological stains. Each stain highlights different areas and structures of the tissue in order to aid in visualizing underlying characteristics. Among these, Hematoxylin and Eosin (H&E) is the stain most commonly used in studies, as most histopathological processes can be understood from it. All images provided are under a Creative Commons license; specifics on the license can be found in the references.

Breast Breast cancers can start from different parts of the breast and mainly consist of 1) Lobular cancers that start from lobular glands, 2) Ductal cancers, 3) Paget cancer which involves the nipple, 4) Phyllodes tumor that stems from fat and connective tissue surrounding the ducts and lobules, and 5) Angiosarcoma which starts in the lining of the blood and lymph vessels. In addition, based on whether the cancer has spread or not, breast cancers can be categorized into in situ or invasive/infiltrating forms. DCIS is a precancerous state and is still confined to the ducts. Once the cancerous cells grow out of the ducts, the carcinoma is considered invasive or infiltrative and can metastasize.91

Synoptic reports for breast cancer diagnosis are divided based on the type of cancers mentioned above. For DCIS and invasive breast cancers, synoptic reports focus on the histologic type and grade, along with the nuclear grade, evidence of necrosis, margin, involvement of regional lymph nodes, and biomarker status. Notably, when ordering findings by diagnostic importance for DCIS, architectural patterns are no longer considered a valuable predictive tool compared to nuclear grade and necrosis.92 In contrast to DCIS and invasive cancers, synoptic reports for Phyllodes tumours differ owing to the tumours’ origin in the fat and connective tissue, focusing on the stroma characteristics, existence of heterologous elements, and mitotic rate, along with the involvement of lymph nodes. Finally, to determine therapy response and treatments, biomarker tests for Estrogen, Progesterone93 and HER-294 receptors are recommended, along with occasional tests for Ki67 antigens.95,96

Most breast cancer-focused works in CPath propose various solutions for carcinoma detection and metastasis detection, an important step for assessing cancer stage and morbidity. Metastasis detection using deep learning methods was shown to outperform pathologists’ exhaustive diagnosis by free-response receiver operating characteristic (FROC) in.97

Prostate Prostate cancer is the second most prevalent cancer among the total population and the most common cancer among men (both excluding non-melanoma skin cancers). However, most prostate cancers are not lethal. Prostate cancer can occur in any of the three prostate zones: Central (CZ), Peripheral (PZ), and Transition (TZ), in increasing order of aggressiveness. Prostate cancers are almost always adenocarcinomas, which develop from the gland cells that make prostate fluid. The other types of prostate cancers are small cell carcinomas, neuroendocrine tumors, transitional cell carcinomas, isolated intraductal carcinoma, and sarcomas (which are very rare). Other than cancers, there are multiple conditions that are important to identify or diagnose as precursors to cancer or not. Prostatic intraepithelial neoplasia (PIN) is diagnosed as either low-grade PIN or high-grade PIN. Men with high-grade PIN need closely monitored follow-up sessions to screen for prostate cancer. Similarly, atypical small acinar proliferation (ASAP) is another precancerous condition requiring follow-up biopsies.98

To grade and score tumours, pathologists use a Tumor, Nodes, Metastasis (TNM) framework. In the synoptic report, pathologists identify and report the histologic type and grades, and involvement of regional lymph nodes to help grade and provide prognosis for any tumours. Specifically for prostate analysis, tumour size and volume are both important factors in prognosis according to multiple studies.99, 100, 101, 102 Similarly, location is important to note for both prognosis and therapy response.103 Invasion to nearby tissues (except perineural invasion) is noted and can correlate to TNM classification.104 Additionally, margin analysis is especially important in prostate cancers as the presence of a positive margin increases the risk of cancer recurrence and metastasis.105 Finally, intraductal carcinoma (IDC) must be identified and distinguished from PIN and PIA, as it is strongly associated with a high Gleason score, a high-volume tumor, and metastatic disease.106, 107, 108, 109, 110

After a prostate cancer diagnosis is established, pathologists assign a Gleason Score to determine the cancer’s grade: a grade from 1 to 5 is assigned to the two most common areas and those two grades are summed to make a final Gleason Score.111 For Gleason scores of 7, where survival and clinical outcomes demonstrate large variance, the identification of Cribriform glands is key in helping to narrow possible outcomes.112,113
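The scoring rule described above is simple arithmetic and can be sketched in a few lines. The function name `gleason_score` and its input checks are illustrative assumptions, not part of any clinical software.

```python
def gleason_score(primary: int, secondary: int) -> int:
    """Sum the grades of the two most common tumour areas.

    `primary` and `secondary` are the grades (1 to 5) assigned to the
    most common and second most common areas, as described in the text.
    """
    for grade in (primary, secondary):
        if not 1 <= grade <= 5:
            raise ValueError(f"grade must be between 1 and 5, got {grade}")
    return primary + secondary


# Two different grade orderings give the same final score.
print(gleason_score(3, 4))  # 7
print(gleason_score(4, 3))  # 7
```

A score of 7 can arise from either ordering of grades 3 and 4, so the sum alone does not record which pattern is more common.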

Ovary Ovarian cancer is the deadliest gynecologic malignancy and accounts for more than deaths each year.114 Ovarian cancer manifests in three types: 1) epithelial cell tumors that start from the epithelial cells covering the outer surface of the ovary, 2) germ cell tumors which start from the cells that produce eggs, and 3) stromal tumors which start from cells that hold the ovary together and produce the hormones estrogen and progesterone. Each of these cancer types can be classified into benign, intermediate and malignant categories. Overall, epithelial cell tumors are the most common ovarian cancer and have the worst prognosis.115

When compiling a synoptic report for ovarian cancer diagnosis, pathologists focus on histologic type and grade, extra-organ involvement, regional lymph nodes, TP53 gene mutations, and serous tubal intraepithelial carcinoma (STIC). Varying histologic tissue types are vital to determining the pathology characteristics and eventual prognosis. For example, generally endometrioid, mucinous, and clear cell carcinomas have better outcomes than serous carcinomas.116 Additionally, lymph node involvement and metastasis in both regional and distant nodes have a direct correlation to patient survival, grading, and treatment. Determining the presence of STICs correlates directly to the presence of ovarian cancer, as of ovarian cancer patients will also have an associated STIC.114 Finally, TP53 gene mutations are the most common in epithelial ovarian cancer, which has the worst prognosis among ovarian cancers, so determining their presence is critical to patient cancer risk and therapy response.117,118 There are not a large number of works dedicated to the ovary specifically, but most works on ovary focus on classification of its five most common cancer subtypes: high-grade serous (HGSC), low-grade serous (LGSC), endometrioid (ENC), clear cell (CCC), and mucinous (MUC).119,120

Lung Lung cancer is the third most common cancer, next to breast and prostate cancer.121 Lung cancers mostly start in the bronchi, bronchioles, or alveoli and are divided into two major types, non-small cell lung carcinomas (NSCLC) and small cell lung carcinomas (SCLC). Although NSCLC cancers are different in terms of origin, they are grouped because they have similar outcomes and treatment plans. Common NSCLC cancers are 1) adenocarcinoma, 2) squamous cell carcinoma, 3) large cell carcinoma, and some other uncommon subtypes.122

For reporting, histologic type helps determine NSCLC vs SCLC and the subtype of NSCLC. Although NSCLC generally has favourable survival rates and prognosis as compared to SCLC, certain subtypes of NSCLC can have lower survival rates due to co-factors.123 Histologic patterns are applicable in adenocarcinomas, consisting of favourable types: lepidic, intermediate: acinar and papillary, and unfavourable: micropapillary and solid.124 Grading each histologic type aids in categorization but is differentiated based on each type, and thus is out of scope for this paper. Importantly for lung cancers, tumour size is an independent prognostic factor for early cancer stages, lymph node positivity, and locally invasive disease. Additionally, the size of the invasive portion is an important factor for prognosis of nonmucinous adenocarcinoma with lepidic pattern.123,125, 126, 127, 128, 129 Other important lung specific features are visceral pleural invasion, which is associated with worse prognosis in early-stage lung cancer even with tumors <3cm,130 and lymphatic invasion which indicates an unfavourable prognostic finding.125,131

Colon and Rectum Colorectal cancers are two of the five most common cancer types.90 Cancer cells usually start to develop in the innermost layer of the colon and rectum walls, known as the mucosa, and continue their way up to other layers. In other layers, there are lymph and blood vessels that can be used by cancer cells to travel to nearby lymph nodes or other organs.132 Colorectal cancers usually start with the creation of different types of polyps, each possessing a unique risk of developing into cancer. Most colorectal cancers are adenocarcinomas, which are split into three well-studied subtypes: classic adenocarcinoma (AC), signet ring cell carcinoma (SRCC), and mucinous adenocarcinoma (MAC). In most cases, AC has a better prognosis than MAC or SRCC. Other types, albeit uncommon, of colorectal cancers are: carcinoid tumors, gastrointestinal stromal tumors (GISTs), lymphomas, and sarcomas.133

As in other cancers, histologic grade is the most important factor in cancer prognosis along with regional lymph node status and metastasis. The tumor site is also important in determining survival rates and prognosis.134 Vascular invasion of both small and large vessels is an important factor in adverse outcomes and metastasis,135, 136, 137 and perineural invasion has been shown in multiple studies to be an indicator of poor prognosis.137, 138, 139 Additionally, microsatellite instability (MSI) is shown to be a good indicator of prognosis and is divided into three stages, in order of decreasing adversity: Stable (MSI-S), Low (MSI-L), and High (MSI-H).140 Finally, some studies have indicated the usefulness of biomarkers in colorectal cancer treatment, such as BRAF mutations, KRAS mutations, MSI, APC, Micro-RNA, and PIK3CA.141

Works are relatively well-distributed among various tasks including disease diagnosis, segmentation, and detection. Expanding on colorectal cancer detection, work from142 used feature analysis for colorectal and mucinous adenocarcinomas using heatmap visualizations. They discovered that adenocarcinoma is often detected by ill-shaped epithelial cells and that misclassification can occur due to lumen regions that resemble the malformed epithelial cells. Similarly for mucinous carcinoma, the model again recognizes the dense epithelium, but this time ignores the primary characteristic of the carcinoma (abundance of extracellular mucin). These findings suggest that a thorough analysis of class activation maps can be helpful for improving the classifier’s accuracy and intuitiveness.

Bladder There are several layers within the bladder wall with most cancers starting in the internal layer, called the urothelium or transitional epithelium. Cancers remaining in the inner layer are non-invasive or carcinoma in situ (CIS) or stage 0. If they grow into other layers such as the muscle or fatty layer, the cancer is now invasive. Nearly all bladder cancers are urothelial carcinomas or transitional cell carcinomas (TCC). However, there are other types of cancer such as squamous cell carcinomas, adenocarcinomas, small cell carcinomas, and sarcomas which all are very rare. In the early stages, all types of bladder cancers are treated similarly but as their stage progresses, and chemotherapy is needed, different drugs might be used based on the type of the cancer.143 As with other organs, histologic type and grade also play a role in prognosis and treatment,144 and lymphovascular invasion is independently associated with poor prognosis and recurrence.145

Works focusing on the bladder display promising results that could lead to rapid clinical application. For example, a prediction method for four molecular subtypes (basal, luminal, luminal p53, and double negative) of muscle-invasive bladder cancer was proposed in,146 outperforming pathologists by in classification accuracy when restricted to a tissue morphology-based assessment. Further improvements in accuracy could help expedite diagnosis by complementing traditional molecular testing methods.

Kidney Each kidney is made up of thousands of glomeruli which feed into the renal tubules. Kidney cancer can occur in the cells that line the tubules (renal cell carcinoma (RCC)), blood vessels and connective tissue (sarcomas), or urothelial cells (Urothelial carcinoma). RCC accounts for about of kidney cancers and comes in two types: 1) clear cell renal carcinoma, which are most common and 2) non-clear cell renal carcinoma consisting of papillary, chromophobe and some very rare subtypes.147 The CAP’s cancer protocol template for the kidney is solely focused on RCCs,148 likely due to their high probability. Tumour size is directly associated with malignancy rates, with cm size increase resulting in increase in malignancy chance.149 Additionally, the RCC histologic type is correlated with metastasis, with clear cell, capillary, collecting ducts (Bellini), and medullary being the most aggressive ones.150

Many works are focused on glomeruli segmentation, as the number of glomeruli and glomerulosclerosis constitute standard components of a renal pathology report.151 In addition to glomeruli detection, some works have also detected other relevant features such as tubules, Bowman’s capsules, and arteries.152 The results display strong performance on PAS-stained nephrectomy samples and tissue transplant biopsies, and there seems to be a strong correlation between the visual elements identified by the network and those identified by renal pathologists.

Brain There are two main types of brain tumors: malignant and non-malignant. Malignant tumors can be classified as primary tumors (originating from the brain) or secondary (metastatic).153,154 Gliomas are the most common type of brain cancer, occurring of the time, and are classified into four grades.155 In synoptic reporting, tumour location is noted as it has some impact on the prognosis, with parietal tumours showing better prognosis compared to other locations.153 Additionally, the focality of glioblastomas (a subtype of gliomas) is important to determine, as multifocal glioblastoma is far more aggressive and resistant to chemotherapy than unifocal glioblastoma.154 A recent summary of the World Health Organization’s (WHO) classification of tumors of the central nervous system has indicated that biomarkers serve as both ancillary and diagnostic predictive tools.156 Additionally, in a recent WHO edition of classification of tumours of the central nervous system, molecular information is now integrated with histologic information into tumor diagnosis for cases such as diffuse gliomas and embryonal tumors.157

Accordingly, most works focus on gliomas and more specifically glioblastoma, the most aggressive and invasive form of glioma. Due to glioblastoma’s extremely low survival rate of after 5 years, compared to a low grade glioma’s survival rate of over after 5 years,76,158 it is critical to distinguish the two forms for improved patient care and prognosis.

Liver Liver cancer is one of the most common causes of cancer death.159 In particular, hepatocellular carcinoma (HCC) is the most common type of primary liver cancer and has various subtypes, but they generally have little impact on treatment.160 Histological grade is divided into nuclear features and differentiation, which directly correlate to tumour size, presentation, and metastatic rate.161,162 Notably, high-grade dysplastic nodules are included in synoptic reports for HCC but are difficult to assess and have high inter-observer disagreement,163 and thus are an area where CAD systems could be leveraged to normalize assessments. Current grading of this cancer suffers from an unsatisfactory level of standardization,164 likely due to the diversity and complexity of the tissue. This could explain why a relatively low number of works are dedicated to liver disease diagnosis and prognosis. Instead, most works focus on the segmentation of cancerous tissues.

Lymph Nodes There are hundreds of lymph nodes in the human body that contain immune cells capable of fighting infections. Cancer manifests in lymph nodes in two ways: 1) cancer that originates in the lymph node itself known as lymphoma and 2) cancer cells from different origins that invade lymph nodes.165 As mentioned in the prior organ sections, lymphocytic infiltration is correlated with cancer recurrence on multiple organs and lymph nodes are the most common site for metastasis. The generalizable impact to multiple organs and importance of detecting lymphocytic infiltration is why many works focused on lymph nodes address metastasis detection.166

Organ Agnostic The remaining papers focus on segmentation, diagnosis, and prognosis tasks that attempt to generalize to multiple organs, or target organ agnostic applications. An interesting approach to increase the generalization capability of deep learning in histopathology is proposed in.167 Currently, publicly available datasets with thorough histological tissue type annotations are organ specific or disease specific and thus constrain the generalizability of CPath research. To fill this gap, a novel dataset called Atlas of Digital Pathology (ADP) is proposed.167 This dataset contains multi-label patch-level annotations of Histological Tissue Types (HTTs) arranged in a hierarchical taxonomy. Through supervised training on ADP, high performance on multiple tasks is achieved even on unseen tissue types.

3. Data collection for CPath

One of the first steps in the workflow for any CPath research is the collection of a representative dataset. This procedure often requires large volumes of data that should be annotated with ground-truth labels for further analysis.62,64,168 However, creating a meaningful dataset with corresponding annotations is a significant challenge faced in the CPath community.62,64,168, 169, 170

This section outlines the entire process of the data-centric design approach in CPath, including tissue slide preparation and WSI scanning–the first two stages in the proposed workflow shown in Fig. 1. Additionally, the trend in dataset compilation across the 700 papers surveyed is discussed regarding dataset sizes, public availability, and annotation types; see Table 9.11 in the Supplementary Material for information regarding the derivations and investigation of said trends.

3.1. Tissue slide preparation

For the application development stages in CPath, the creation of a new WSI dataset must begin with the selection of relevant glass slides. High quality WSIs are required for effective analysis; however, considerations must be made for the slide artifacts and variations that are inherently present. As described in Section 2.1, pathology samples are categorized as either biopsy or resection samples, with most samples being prepared as permanent samples and some intra-operative resection samples being prepared as frozen samples.

Variations and Irregularities Throughout the slide sectioning process, artifacts and irregularities can occur which reduce the slide quality, including: uncovered portions, air bubbles in between the glass seal, tissue chatter artifacts, tissue folding and tears, ink markings present on the slide, and dirt, debris, microorganisms, or cross-contamination of slides by unrelated tissue from other organs.171, 172, 173 Frozen sections can present unique irregularities and variations, such as freezing artifacts, cracks in the tissue specimen block, or delay of fixation causing drying artifacts.174,175 Beyond these irregularities, glass slides may vary in stain colouring, occurring due to differences in slide thickness, tissue thickness, fixation, tissue processing schedule, patient variation, stain variation, and lab variation.174,176, 177, 178, 179, 180

All such defects and variations are important to keep in mind when selecting glass slides for the development and application process in CPath, as they can both reduce the quality of the WSI and impact the performance of CAD tools trained with these WSIs.171,172,177 A more detailed discussion of the surveyed works in CPath which seek to identify and correct slide artifacts and colour variation in WSIs is found in Section 3.2. However, prior to digitization, artifacts and irregularities can be kept to a minimum by following good pathology practices. While an in-depth discussion of this topic is outside the scope of this paper, some research provides an extensive list of recommendations for reducing such errors in slide sectioning.173

3.2. Whole slide imaging (WSI)

WSI Scan Once a glass slide is prepared, it must be digitized into a WSI. The digitization and processing workflow for WSIs can be summarized as a four-step process181: (1) Image acquisition via scanning; (2) Image storage; (3) Image editing and annotation; (4) Image display.182 As the first two steps of the digitization workflow are the most relevant for WSI collection and with regards to the CPath workflow, they are discussed to a greater extent below.

Slide scanning is carried out through a dedicated slide scanner device. A plethora of such devices currently exist or are in development; see Appendix Table 1 for a collection of commercially available WSI scanners. Additionally, some research has investigated and compared the capabilities and performances of various WSI scanners.183, 184, 185, 186

In order to produce a WSI that is in focus, which is especially important for CPath works, appropriate focal points must be chosen across the slide either using a depth map or by selecting arbitrarily spaced tiles in a subset.187 Once focal points are chosen, the image is scanned by capturing tiles or linear scans of the image, which are stitched together to form the full image.180,187 Slides can be scanned at various magnification levels depending on the downstream task and analysis required, with the vast majority being scanned at (/pixel) or (/pixel) magnification.180

WSI Storage and Standards WSIs are in giga-pixel dimension format.30,188 For instance a tissue in size scanned /pixel resolution can produce a GB image (uncompressed) with pixels. Due to this large size, hardware constraints may not support viewing entire WSIs at full resolution, thus WSIs are most often stored in a tiled format so only the viewed portion of the image (tile) is loaded into memory and rendered.189 When building CAD tools for CPath, this large WSI dimensionality must be taken into account in determining how much compute is required to analyze a WSI. Alongside the WSI, metadata regarding patient, tissue specimen, scanner, and WSI information is stored for reference.30,188,190 Due to their clinical use, it is important to develop effective storage solutions for WSI images and metadata, allowing for robust data management, querying of WSIs, and efficient data retrieval.191,192 Further details on WSI image formats and storage methods are discussed in Appendix A.6.
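The relationship between tissue size, pixel resolution, and uncompressed image size, and the benefit of tiled storage, can be worked through directly. The sketch below is illustrative only: the 15 mm square tissue, 0.25 µm/pixel resolution, 3 bytes per pixel, and 256-pixel tiles are hypothetical values chosen for the example, not figures taken from the cited works, and the function names are assumptions.

```python
import math


def wsi_uncompressed_size(width_mm, height_mm, microns_per_pixel, bytes_per_pixel=3):
    """Return (width_px, height_px, n_bytes) for an uncompressed scan."""
    width_px = round(width_mm * 1000 / microns_per_pixel)
    height_px = round(height_mm * 1000 / microns_per_pixel)
    return width_px, height_px, width_px * height_px * bytes_per_pixel


def tile_grid(width_px, height_px, tile_size=256):
    """Number of tile columns and rows needed to cover the image."""
    return math.ceil(width_px / tile_size), math.ceil(height_px / tile_size)


def tiles_in_view(x, y, view_w, view_h, tile_size=256):
    """(col, row) indices of tiles overlapping a viewport with top-left (x, y)."""
    first_col, first_row = x // tile_size, y // tile_size
    last_col = (x + view_w - 1) // tile_size
    last_row = (y + view_h - 1) // tile_size
    return [(c, r)
            for r in range(first_row, last_row + 1)
            for c in range(first_col, last_col + 1)]


# Hypothetical 15 mm x 15 mm tissue scanned at 0.25 microns per pixel.
w, h, n_bytes = wsi_uncompressed_size(15, 15, 0.25)
print(w, h)                            # 60000 60000
print(f"{n_bytes / 1e9:.1f} GB")       # 10.8 GB
print(tile_grid(w, h))                 # (235, 235)

# A 1920 x 1080 viewport only touches a small fraction of the tiles.
print(len(tiles_in_view(1000, 1000, 1920, 1080)))  # 54
```

Under these assumptions, rendering one screen requires 54 of the 55,225 tiles, which is why only the viewed portion needs to be held in memory.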

To develop CPath CAD tools in a widespread and general manner, a standardized format for WSIs and their corresponding metadata is essential.188 However, there is a general lack of standardization for WSI formats outputted by various scanners, as shown in Table 1, especially regarding metadata storage. The Digital Imaging and Communications in Medicine (DICOM) standard provides a format for CPath image formatting and data management through Supplement 145,190,193 and has been shown in research to allow for efficient access and interoperability of data between varying medical centers and devices.188 However, few scanners are DICOM-compliant and thus there are challenges to using different models of scanners, thus different image formats and metadata structures, in the context of dataset aggregation and processing.

Apart from storage format, a general framework for storing and distributing WSIs is also an important pillar for CPath. In other medical imaging fields such as radiology, images are often stored in a picture archiving and communications system (PACS) in a standardized DICOM format, with DICOM storage and retrieval protocols to interface with other systems.189 The need for standardization persists in pathology for WSI storage solutions; few works have proposed solutions to incorporate DICOM-based WSIs in a PACS, although some research has successfully implemented a WSI PACS consistent with the DICOM standard, using a web-based service for viewing and image querying.189

WSI Defects and Variations Certain aspects of the slide scanning process can introduce unfavorable irregularities and variations.194 A major source of defects is out-of-focus regions in a generated WSI, often caused by glass slide artifacts, such as air bubbles and tissue folds, which interfere with the selection of focus points for a slide.171,195 Out-of-focus regions degrade WSI quality and are detrimental to the performance of CAD tools developed with these WSIs, with studies showing high false-positive errors.196,172 Additionally, as WSIs are scanned in strips or tiles, any misalignment between sections can introduce striping/stitching errors in the final image.197 Another source of error may appear during tissue-background segmentation, where the scanner may misidentify some tissue regions as background, so that crucial tissue areas on the glass slide are never digitized.198
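To make the idea of screening for out-of-focus regions concrete, the sketch below scores each tile with the variance of a discrete Laplacian, a classical sharpness measure. This is a swapped-in illustrative technique, not the method of the cited works; the function names and the threshold value are hypothetical, and a usable threshold would have to be tuned per scanner and stain.

```python
import numpy as np


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the interior of a 2-D tile.

    Sharp tiles have strong local intensity changes and therefore a high
    variance; blurred or empty tiles give values close to zero.
    """
    g = gray.astype(float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def flag_blurry_tiles(tiles, threshold):
    """Return the indices of tiles whose sharpness score is below threshold."""
    return [i for i, tile in enumerate(tiles)
            if laplacian_variance(tile) < threshold]


# Synthetic demonstration: a checkerboard (sharp) and a flat grey tile.
rows, cols = np.indices((32, 32))
sharp = (255 * ((rows + cols) % 2)).astype(np.uint8)
flat = np.full((32, 32), 128, dtype=np.uint8)
print(flag_blurry_tiles([sharp, flat], threshold=1.0))  # [1]
```

Flagged tiles could then be excluded from training or sent for rescanning, depending on the workflow.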

Variations in staining refers to differences in colour and contrast of the tissue structures in the final WSI occurring due to differences in the staining process, staining chemicals, and tissue state. Variations in colour can lead to difficulty in generalizing CAD tools to WSIs from different labs, institutions, and settings.199,200 Even identical staining techniques can yield different WSIs due to scanner differences in sensor design, light source and calibration,180,201 creating challenges for cross-laboratory dataset generation. These additional sources of variation add layers of complexity to the WSI processing workflow, and must be kept in mind during slide selection and dataset curation for CAD tool development and deployment.

Addressing Irregularities and Variations Much work has gone into identifying areas of irregularities within WSIs, most notably blur and tissue fold detection.195,196 Some research has explored automated deep learning tools to identify these irregularities at a more efficient pace than manual inspection.195,196 Developing techniques for addressing staining variation has also been a significant research area,177,202, 203, 204, 205, 206, 207 as the use of techniques addressing stain variation is important for all future works. We list some computational approaches proposed to address these issues. An example method proposed in202 uses a stain normalization technique, attempting to map the original WSI onto a target color profile. In this technique, a color deconvolution matrix is estimated to convert each image to a target hematoxylin and eosin (HE) color space and each image is normalized to a target image colour profile through spline interpolation.202 A second approach applies color normalization using the H channel with a threshold on the H channel on a Lymphocyte Detection dataset.205 Recent studies have shown promise in having deep neural networks accomplish the stain normalization in contrast to the previous classical approaches,203,208, 209, 210 commonly applying generative models such as generative adversarial networks (GANs) to stain normalization. Furthermore, the Histogram Equalization (HE) technique for contrast enhancement is used in,211 where a novel preprocessing technique is proposed to select and enhance a portion of the images instead of the whole dataset, resulting in improved performance and computational efficiency.
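The general idea behind deconvolution-based stain normalization can be sketched as follows. This is a simplified illustration, not the method of any cited work: it uses fixed hematoxylin and eosin reference vectors that are often quoted for colour deconvolution (an assumption, rather than a matrix estimated per image), and it matches stain intensities with a linear percentile rescaling instead of spline interpolation. All function names are hypothetical.

```python
import numpy as np

# Assumed reference optical-density vectors (rows: hematoxylin, eosin).
# The third row is a residual direction orthogonal to both stains.
_H = np.array([0.65, 0.70, 0.29])
_E = np.array([0.07, 0.99, 0.11])
STAINS = np.stack([_H, _E, np.cross(_H, _E)])
STAINS = STAINS / np.linalg.norm(STAINS, axis=1, keepdims=True)


def rgb_to_concentrations(rgb):
    """Convert an (H, W, 3) uint8 image to per-pixel stain concentrations."""
    # Beer-Lambert: optical density is the negative log of transmitted light.
    od = -np.log(np.clip(rgb.astype(float), 1, 255) / 255.0)
    return od.reshape(-1, 3) @ np.linalg.inv(STAINS)


def concentrations_to_rgb(conc, shape):
    """Recompose stain concentrations into a uint8 RGB image of `shape`."""
    rgb = 255.0 * np.exp(-(conc @ STAINS))
    return np.clip(np.rint(rgb), 0, 255).astype(np.uint8).reshape(shape)


def normalize_stains(source, target, percentile=99):
    """Rescale the H and E concentrations of `source` to match `target`."""
    src = rgb_to_concentrations(source)
    tgt = rgb_to_concentrations(target)
    for k in range(2):  # hematoxylin and eosin only; residual left as is
        s = np.percentile(src[:, k], percentile)
        t = np.percentile(tgt[:, k], percentile)
        if abs(s) > 1e-8:
            src[:, k] *= t / s
    return concentrations_to_rgb(src, source.shape)


# Synthetic demonstration with random "tiles" standing in for real images.
rng = np.random.default_rng(0)
source = rng.integers(1, 256, size=(16, 16, 3), dtype=np.uint8)
target = rng.integers(1, 256, size=(16, 16, 3), dtype=np.uint8)
normalized = normalize_stains(source, target)
print(normalized.shape, normalized.dtype)
```

Working in optical-density space makes the stain contributions additive, which is why the rescaling is applied to concentrations rather than to raw RGB values.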

An alternative approach to address the impact of stain variation on training CAD tools is data augmentation. Such methods augment the data with duplicates of the original data that contain adjustments to the color channels of the image, creating images of varying stain coloration and training models that are accustomed to stain variations.200 This method has been frequently used as a pre-processing step in the development of training datasets for deep learning.212, 213, 214 A form of medically-irrelevant data augmentation based on random style transfer, called STRAP, was proposed by researchers and outperformed stain normalization.206 Similar to style transfer,215 proposes stain transfer which allows one to virtually generate multiple types of staining from a single stained WSI.
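A minimal sketch of colour-channel augmentation is given below, assuming a simple random scale and shift of each channel in optical-density space. The function names, the perturbation range `sigma`, and the choice of optical-density space are illustrative assumptions and do not reproduce STRAP or any other cited method.

```python
import numpy as np


def augment_stain(rgb, rng, sigma=0.05):
    """Return a copy of an (H, W, 3) uint8 tile with perturbed colouring.

    Each channel's optical density is multiplied by a random factor in
    [1 - sigma, 1 + sigma] and shifted by a random offset in [-sigma, sigma].
    """
    od = -np.log(np.clip(rgb.astype(float), 1, 255) / 255.0)
    alpha = rng.uniform(1 - sigma, 1 + sigma, size=3)
    beta = rng.uniform(-sigma, sigma, size=3)
    od_aug = np.clip(od * alpha + beta, 0, None)  # keep densities non-negative
    return np.rint(255.0 * np.exp(-od_aug)).astype(np.uint8)


def augmented_copies(rgb, n_copies, seed=0, sigma=0.05):
    """Generate `n_copies` colour-perturbed duplicates of one tile."""
    rng = np.random.default_rng(seed)
    return [augment_stain(rgb, rng, sigma) for _ in range(n_copies)]


# Synthetic tile standing in for a real H&E patch.
tile = np.random.default_rng(42).integers(1, 256, size=(16, 16, 3), dtype=np.uint8)
copies = augmented_copies(tile, n_copies=4)
print(len(copies), copies[0].shape, copies[0].dtype)
```

Each duplicate keeps the tissue structure of the original tile and differs only in colouring, so the original labels can be reused unchanged.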

3.3. Cohort selection, scale, and challenges

The data used to create/train CPath CAD tools can greatly impact the performance and success of the tool. Curating the ideal dataset, and thus selecting the ideal set of WSIs for the development of a CAD tool is a nontrivial task. Several works suggest that datasets for deep learning in CPath should include a large quantity of data with a degree of variation and artifacts in the WSIs.62,172 Some works also recommend the inclusion of difficult or rarely diagnosed cases; other works indicate that inclusion of extremely difficult cases may decrease the performance of advanced models.172,216

A study highlighting the results of the 2016 Dataset Session at the first annual Conference on Machine Intelligence in Medical Imaging outlines several key attributes to create an ideal medical imaging dataset,217 including: having a large amount of data to achieve high performance on the desired task, quality ground truth annotations, and being reusable for further efforts in the field. While the scope of this conference did not include CPath, many of the points made regarding medical imaging datasets are also relevant to the development of CPath datasets. The session also outlined the impact that class imbalances can have on ML models, an issue also prevalent in CPath as healthy or benign regions often outnumber diseased regions by a significant margin.218

Our survey of past works in the literature reveals some trends in CPath datasets. Currently, the majority of datasets presented in the literature for CAD tool development are small-scale datasets,62 using a small number of images, and/or images from a small number of pathology laboratories. Examples of these smaller datasets include a dataset with 596 WSIs (401 training, 195 testing) from four centres for breast cancer detection219 and the Breast Cancer Histology (BACH2018) dataset, which has 500 ROI images (400 training, 100 testing) and 40 WSIs (30 training, 10 testing).220 Although curating a dataset from fewer pathology laboratories may be simpler, models developed on these smaller scale datasets may not generalize effectively to data from other pathology centres.199,120 An example of this is demonstrated in,172 in which data from different pathology centres are clustered disjointly in a t-distributed stochastic neighbor embedding (t-SNE) representation. An alternative was proposed in221: using a swarm learning technique, multiple AI models were trained separately on different small datasets and then unified into one central model.
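One simple way to probe how well a model generalizes across pathology centres is to hold out all slides from one centre at a time. The sketch below only builds the splits; the function name, the `(slide_id, centre)` record layout, and the example identifiers are hypothetical.

```python
from collections import defaultdict


def leave_one_centre_out(slides):
    """Yield (held_out_centre, train_ids, test_ids) for each centre.

    `slides` is an iterable of (slide_id, centre) pairs. Every slide from
    the held-out centre goes to the test set, so no centre contributes to
    both sides of a split.
    """
    by_centre = defaultdict(list)
    for slide_id, centre in slides:
        by_centre[centre].append(slide_id)
    centres = sorted(by_centre)
    for held_out in centres:
        train_ids = [s for c in centres if c != held_out for s in by_centre[c]]
        yield held_out, train_ids, list(by_centre[held_out])


# Hypothetical slide records from three centres.
records = [("slide-01", "A"), ("slide-02", "A"), ("slide-03", "B"),
           ("slide-04", "C"), ("slide-05", "C")]
for centre, train_ids, test_ids in leave_one_centre_out(records):
    print(centre, len(train_ids), len(test_ids))
```

A large gap between performance on the held-out centre and on the training centres would suggest that the model has adapted to centre-specific characteristics.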

Additionally, stain variations, slide artifacts, and variation of disease prevalence may sufficiently shift the feature space such that a deep learning model may not sustain high performance on unseen data in new settings.120,222 As artifacts in WSIs are inevitable, with some artifacts, such as ink mark-up on glass slides, being an important part of the pathology workflow,223 the ability of CAD tools to become robust to these artifacts through exposure to a diverse set of images is an important consideration.

Compared to the number of studies conducted on small-scale datasets, relatively fewer studies have been performed using large-scale, multi-centre datasets.62,224,172 One study uses over 44,715 WSIs from three organ types, with very little curation of the WSIs for multi-instance learning detailed in.62 Stomach and colon epithelial tumors were classified using 8,164 WSIs in.224 A similar study uses 13,537 WSIs from three laboratories to test a machine learning model trained on 5,070 WSIs and achieves high performance.62

Despite some advancements, there exist major barriers to using such large, multi-centre datasets in CAD development. Notably, for strongly supervised methods of learning, an immense amount of time is needed to acquire granular ground truth annotations on a large amount of data.224 To combat this, some researchers have implemented weakly-supervised learning by harvesting existing slide level annotations to forego the need for further annotation.62 Additionally, it may be difficult to aggregate data from multiple pathology centres due to regulatory, privacy, and attribution concerns, despite the improvements that diverse datasets offer. Section 5 discusses model architectures and training techniques that harness curated datasets of various annotation levels.
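The way a slide-level label can stand in for tile-level annotations can be illustrated with a small multi-instance aggregation sketch. It assumes that a slide is positive when at least one of its tiles is positive and uses max pooling over tile scores; the pooling choice and the function names are assumptions for illustration, and the cited works may aggregate differently.

```python
def slide_score(tile_scores):
    """Aggregate per-tile tumour probabilities into one slide-level score.

    Under the assumption that one positive tile makes the slide positive,
    the slide score is the maximum tile score.
    """
    if not tile_scores:
        raise ValueError("a slide needs at least one tile")
    return max(tile_scores)


def top_k_tiles(tile_scores, k):
    """Indices of the k highest-scoring tiles, most suspicious first."""
    order = sorted(range(len(tile_scores)),
                   key=lambda i: tile_scores[i], reverse=True)
    return order[:k]


# Hypothetical tile probabilities produced by some tile-level classifier.
scores = [0.10, 0.90, 0.30, 0.05]
print(slide_score(scores))      # 0.9
print(top_k_tiles(scores, 2))   # [1, 2]
```

During training, only the slide-level score is compared against the slide label, so no tile needs to be annotated individually.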

Dataset Availability In general computer vision, progress can be tracked by the increasing size and availability of datasets used to train models, e.g. ImageNet grew from 3.2 million images and 5000 classes in 2009 to 14 million images and 21,000 classes in 2021.225 We infer that a similar trend in dataset growth and availability indicates progress in CPath. In our survey of over 700 CPath papers, we determine the current landscape by noting the dataset(s) used in each work, along with dataset details such as the organ(s) of interest, annotation level, and stain type. The results are tabulated in Table 9.11 of the supplementary materials, and the summarized findings from Table 9.11 are shown in Fig. 6.

Fig. 6.

(left) Distribution of datasets per organ, capturing the current trend in datasets. Although the number of datasets can change over time, an understanding of which organs have more available data is important for developing CAD tools. The vertical axis lists the different organs, while the horizontal axis shows the number of datasets; the darker color denotes public availability, while the lighter color includes unavailable or by-request statuses. (right) Distribution of staining types, annotation levels, and magnification details per organ, color coded consistently with the bar graph. Organs have been sorted based on the abundance of datasets. For more details, please refer to Table 9.11 in the supplementary section.

From Fig. 6 we can clearly see that the majority of datasets used for research developments in computational pathology are privately sourced or require additional registration/request. Organs represented in a small number of datasets, such as the liver, thyroid, and brain, have a smaller proportion of freely accessible datasets than the breast, colon, or prostate. This lack of accessible data can be problematic when trying to create CAD tools for cancers in these organs. We additionally note that although datasets requiring registration/request for access can be easily accessible, as in the case of the Breast Cancer Histopathological Database (BreakHis)226 being used in multiple works,227, 228, 229 the need for registration presents a barrier to access, as requests may go unanswered or take a long time to review.

In our categorization of CPath datasets, we find that a few prominent datasets have been released publicly for use by the research community. Many such datasets are made available through grand challenges in computational pathology,230 such as the CAMELYON16 and CAMELYON17 challenges for breast lymph node metastases detection,61,231,232 and the Gland Segmentation in Colon Histology Images Challenge (GLaS) for colon gland segmentation, held in conjunction with Medical Image Computing and Computer Assisted Intervention (MICCAI) 2015.233,234 Notable amongst publicly available data repositories is The Cancer Genome Atlas (TCGA),235 a very large-scale repository of WSI data containing many organs and diseases and covering a variety of stain types, magnification levels, and scanners. Data collected from TCGA has been used in a large number of works in the literature for the development of CAD tools.200,236,237 As such, TCGA represents an essential repository for the development of computational pathology. While patient confidentiality is a general concern when compiling and releasing a CPath dataset, large-scale databases such as TCGA prove that it is possible to provide relatively unrestricted data access without compromising patient confidentiality. Further evaluating publicly sourced datasets, it seems that the majority of them use data extracted from large data repositories, such as TCGA, without specifying the IDs of the images used, which makes it challenging to compare datasets or CAD tool performance across works. However, there are a few datasets that are exceptions to that phenomenon.64,238, 239, 240

Fig. 6 also provides some insights on the dataset breakdown by organ, stain type, and annotation level. Per organ, it can be seen that the breast, colon, prostate/ovary, and lung tissue datasets are amongst the most common, understandably so since cancer occurrence in these regions is the most frequent,90 in line with the cancer statistics findings in 9.5. Multi-organ datasets are the other most common type, where we have designated a dataset to be multi-organ if it compiles WSIs from several different organs. Of note, multi-organ datasets are especially useful for the development of generalized image analysis tools in computational pathology. The annotation level provided in the datasets did not indicate any pattern across most organs.

Dataset Bias It is also important to note the potential for bias in datasets that may influence the ability of any deep learning algorithm to generalize on unseen data.241,242 This problem is prevalent in general machine learning applications,243, 244, 245, 246 and CPath is not immune to it. The survey in247 covers a large number of other examples in machine learning that exhibit such bias, from both a dataset standpoint and an algorithm standpoint.

Such a lack of generalizability in CPath can impact the ability of machine learning models trained on biased data to meet the needs of patients. As noted in,241 minority groups may be disproportionately negatively impacted if care is not taken in curating a diverse dataset that adequately reflects the relevant demographics for the problem to be solved.

Several works have delved into the issue of dataset bias in CPath specifically.248,249 A notable example is in,249 where the study was able to demonstrate that deep learning models trained on WSIs from TCGA were able to infer the organization that contributed the slide sample. Notably, some features, such as genetic ancestry, patient prognosis, and several key genomic markers were significantly correlated with the site the WSI was provided from. As the vast majority of data in TCGA is acquired from 24 origin centers,248 such site-specific factors may impact the ability of a DL model to perform well on patient data from different sites.

As discussed previously, having a large set of diverse data may help to mitigate generalization issues.120,199,241 Additionally, the study249 suggests that training data should come from different sites than validation data, and that the per-site performance of a model should be reported during validation. In doing this, the robustness of the model to site-specific variation, including both stain and demographic related variation, can be evaluated.
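The site-aware validation suggested above can be illustrated with a minimal sketch. It is not the procedure of the cited study; the record fields (`slide_id`, `site`, `label`), the function names, and the toy predictions are all hypothetical and chosen only for illustration. The sketch keeps training and validation sites disjoint and then reports accuracy separately for each held-out site.

```python
from collections import defaultdict


def split_by_site(records, validation_sites):
    """Split records so that no site contributes to both training and validation."""
    train = [r for r in records if r["site"] not in validation_sites]
    val = [r for r in records if r["site"] in validation_sites]
    return train, val


def per_site_accuracy(records, predictions):
    """Return {site: accuracy} for parallel lists of records and predicted labels."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for record, predicted in zip(records, predictions):
        total[record["site"]] += 1
        correct[record["site"]] += int(predicted == record["label"])
    return {site: correct[site] / total[site] for site in total}


# Toy slide records (hypothetical): three contributing sites A, B, and C.
slides = [
    {"slide_id": "s1", "site": "A", "label": 1},
    {"slide_id": "s2", "site": "A", "label": 0},
    {"slide_id": "s3", "site": "B", "label": 1},
    {"slide_id": "s4", "site": "C", "label": 0},
    {"slide_id": "s5", "site": "C", "label": 1},
]

# Hold out sites B and C entirely; the model would be trained on site A only.
train, val = split_by_site(slides, validation_sites={"B", "C"})

# Placeholder predictions standing in for a trained model's outputs on `val`.
val_predictions = [1, 0, 0]
site_scores = per_site_accuracy(val, val_predictions)
```

Reporting `site_scores` per site, rather than a single pooled accuracy, makes a drop in performance at one contributing site visible instead of averaging it away.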

4. Domain expert knowledge annotation

A primary goal of CPath is to capture and distill domain expert knowledge, in this case the expertise of pathologists, into increasingly efficient and accurate CAD tools to aid pathologists everywhere. Much of this domain knowledge transfer is encompassed within the process of pathologists generating diagnostically-relevant annotations and labels for WSIs. It must be emphasized that without some level of label, a WSI dataset is not directly usable to train a model for most CAD tasks that involve the generation of diagnoses, prognoses, or suggestions for pathologists. Thus, the process of obtaining and/or using annotations at the appropriate granularity and quality is paramount in the field. This section focuses on describing the various types of ground-truth annotation across the spectrum from weak to strong supervision, discussing the practicality of labeling across this spectrum, and outlining how a labeling workflow can potentially be designed to optimize related annotation tasks.

4.1. Supervised annotation

In contrast to general computer vision, computer scientists do not have expert-level knowledge of histopathology and thus they are not as efficient at generating annotations or labels of pathology images. Further, labels cannot be easily obtained by outsourcing the task to the general public. As a result, pathologists must be leveraged to obtain labels at some stage of the data collection and curation process, and in many annotation pipelines the first step involves recruiting the help of pathologists for their expertise in labelling.

Obtaining Expert Domain Knowledge The knowledge of pathologists is essential in the development of accurate ground truth annotations, a process most commonly completed by encircling ROIs.219 However, there are studied instances of inter-observer variance between pathologists when determining a diagnosis.250, 251, 252 As obtaining the most correct label is essential when training a model for CAD, this issue must be addressed, and a review of the data by several pathologists can result in higher quality ground truth data than that of a single pathologist. As a result, most datasets are curated by involving a group of pathologists in the annotation process. If the expert pathologists disagree on the annotation of a ground truth, one of several methods is usually employed to rectify the discrepancy. A consensus can be reached on the annotation label through discussion amongst pathologists, as is done in the triple negative breast cancer (TNBC-CI) dataset,253 the Breast Cancer Surveillance Consortium (BCSC) dataset254 and the minimalist histopathology image analysis dataset (MHIST).255 Alternatively, images where disagreements occur can be discarded, as is done in some works.256,257 Further, the disagreement between annotators can be recorded to determine the difficulty level of the images, as is done in the MHIST dataset.216 This extra metadata aids in the development of CAD tools for analysis.
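The strategies for handling annotator disagreement described above can be sketched in a few lines. This is an illustrative sketch only: a simple majority vote is used as a stand-in for the discussion-based consensus used by the cited datasets, and the function names, the `agreement` field, and the toy labels are hypothetical.

```python
from collections import Counter


def summarize_annotations(votes):
    """Return (majority_label, agreement) for one image's list of labels.

    `agreement` is the fraction of annotators who chose the majority label.
    Ties are broken by first occurrence, so real pipelines would need an
    explicit tie-handling rule (for example, expert discussion).
    """
    counts = Counter(votes)
    label, top_count = counts.most_common(1)[0]
    return label, top_count / len(votes)


def curate(annotations, discard_disagreements=False):
    """Build {image_id: {"label", "agreement"}} from per-image annotator votes.

    With discard_disagreements=True, only unanimously labeled images are kept.
    Otherwise every image is kept and its agreement score is recorded, which
    can serve as a proxy for image difficulty.
    """
    curated = {}
    for image_id, votes in annotations.items():
        label, agreement = summarize_annotations(votes)
        if discard_disagreements and agreement < 1.0:
            continue
        curated[image_id] = {"label": label, "agreement": agreement}
    return curated


# Toy annotations (hypothetical): one unanimous image and one contested image.
annotations = {
    "img1": ["benign", "benign", "benign"],
    "img2": ["benign", "malignant", "malignant", "malignant"],
}

with_difficulty = curate(annotations)
unanimous_only = curate(annotations, discard_disagreements=True)
```

Keeping the agreement score alongside the label preserves information that is lost when contested images are simply discarded, at the cost of retaining labels that are less certain.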

Pathologists can also be involved indirectly in dataset annotation. Both the Multi-organ nuclear segmentation dataset (MoNuSeg)258 and ADP167 have non-expert labelers annotate their respective datasets. A board-certified pathologist is then tasked with reviewing the annotations for correctness. Alternatively, some researchers have employed a pathologist in performing quality control on WSIs for curating a high-quality dataset with minimal artifacts.87,259 To enable the large scale collection of accurate annotated data, Lizard260 was developed using a multi-stage pipeline with several significant “pathologist-in-the-loop” refinement steps.

Existing pathological reports, along with the metadata that comes from public large-scale databases like TCGA, can also be leveraged as additional sources of task-dependent annotations without the use of further annotation. For example, TCGA metadata was used to identify desirable slides in,26 while pathological diagnostic reports were used for breast ductal carcinoma in-situ grading in.261

Of note, there are some tasks where manual annotation by pathologists can be bypassed altogether. For instance, IHC was applied to generate mitosis figure labels using a Phospho-Histone H3 (PHH3) slide-restaining approach in,262 while immunofluorescence staining was used as annotations to identify nuclei associated with pancreatic adenocarcinoma.263 These works parallel the techniques that pathologists often use in clinical practice, such as the use of IHC staining as a supplement to H&E stained slides for difficult-to-diagnose cases.264 These approaches demonstrate high performance on their respective tasks, including the top-performing models on the Tumor Proliferation Assessment Challenge 2016 (TUPAC16)265 dataset.262 Importantly, these techniques still utilize supervision, albeit weakly, by leveraging lab techniques that have been developed and refined to identify the desired regions visually.

Ground-Truth Diagnostic Information Understanding different annotation levels and their impact on the procedural development of ML pipelines is an important step in solving tasks within CPath. There are five possible levels of annotation, in order of increasing granularity (from weakly-supervised to fully-supervised): patient, slide, ROI, patch, and pixel. Fig. 7 overviews the benefits and limitations of each level. For additional information regarding each annotation level please refer to Appendix A.8.
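The ordering of the five annotation levels can be made explicit in code. The following is a minimal sketch, assuming an integer ranking in which larger values mean finer granularity; the class name, the helper function, and the toy dataset names are hypothetical and are not taken from the surveyed works.

```python
from enum import IntEnum


class AnnotationLevel(IntEnum):
    """Annotation levels ordered from coarsest (weakest supervision) to finest."""
    PATIENT = 1
    SLIDE = 2
    ROI = 3
    PATCH = 4
    PIXEL = 5


def finest_level(levels):
    """Return the most granular annotation level present in a collection."""
    return max(levels)


# Toy catalogue (hypothetical): datasets tagged with the levels they provide.
datasets = {
    "dataset_a": [AnnotationLevel.SLIDE],
    "dataset_b": [AnnotationLevel.SLIDE, AnnotationLevel.PIXEL],
    "dataset_c": [AnnotationLevel.PATIENT, AnnotationLevel.ROI],
}

# Rank datasets from weakest to strongest available supervision.
ranked = sorted(datasets, key=lambda name: finest_level(datasets[name]))
```

Encoding the levels as an ordered type allows datasets to be filtered or sorted by supervision strength when tabulating a survey such as the one summarized here.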

Fig. 7.

Details of the five different types of annotations in computational pathology. From left to right: a) Patient-level annotations can include high-level information about the patient, such as cancer status and test results; b) Slide-level annotations are associated with the whole slide, such as a slide of normal or diseased tissue; c) ROI-level annotations are more focused on diagnosis and structure details; d) Patch-level annotations are separated into large FOV (field of view) and small FOV, each having different computational requirements for processing; and finally e) Pixel-level annotations include information about color, texture, and brightness.

Picking the Annotation Level Selecting an annotation level depends largely on the specific CPath task being addressed, as shown in Fig. 8. For example, segmentation tasks tend to favor pixel-level annotations as they require precise delineation of a nucleus or tissue ROI. Conversely, disease diagnosis tends to favor datasets with ROI-level annotations: because diagnosis tasks are predominantly associated with the classification of diseased tissue, these higher-level annotations may provide a sufficient level of detail and context for the task.266

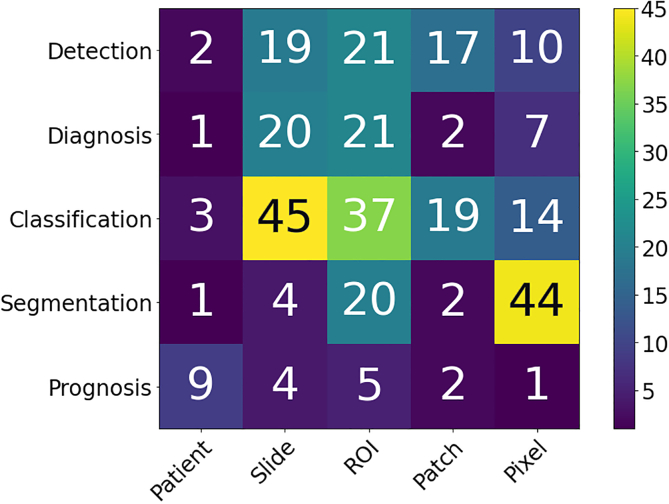

Fig. 8.

A snapshot of the distribution of different annotation levels based on the CPath task being addressed in the surveyed literature for the purposes of highlighting the trend of datasets. The x-axis displays the different annotation levels studied in the papers (from left to right): Patient, Slide, ROI, Patch, and Pixel. The y-axis shows the different tasks (top to bottom): Detection, Diagnosis, Classification, Segmentation, and Prognosis. The height of the bars along the vertical axis measures the number of works that have examined the specific task and annotation level.

Fig. 8 shows that annotations with stronger supervision are more likely to be used in CAD tool model development. However, due to the high cost of pixel-level annotation, fully supervised annotations are challenging to develop. Even patch-based annotations often require dividing a WSI into many small individual sub-images and analyzing each of them, resulting in a similar problem to pixel-based annotations.63,212 In contrast, WSI data is most often available with an accompanying slide-level pathology report regarding diagnosis, thus making such weakly labeled information at the WSI level significantly more abundant than ROI, patch, or pixel-level data.267,268 Different levels of annotation can be leveraged together, as demonstrated by a framework that uses both pixel-level and slide-level annotations to generate pseudo labels in.269 Additionally, it is common in CPath to further annotate WSIs that carry slide-level labels at the ROI or patch level.32,85,270,271
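To illustrate how slide-level labels can supervise patch-based models, the following minimal sketch aggregates per-patch scores into a single slide-level score using max pooling, a common multiple-instance learning assumption (a slide is positive if at least one patch is positive). It is not the method of any cited work; the function names, the 0.5 threshold, and the toy scores are hypothetical.

```python
def slide_score_max_pooling(patch_scores):
    """Aggregate per-patch tumor probabilities into one slide-level score.

    Under the max-pooling assumption, the slide score is the highest patch score.
    """
    if not patch_scores:
        raise ValueError("a slide must contain at least one patch")
    return max(patch_scores)


def slide_prediction(patch_scores, threshold=0.5):
    """Return 1 (positive slide) if the pooled score reaches the threshold, else 0."""
    return int(slide_score_max_pooling(patch_scores) >= threshold)


# Toy patch scores (hypothetical) standing in for a patch classifier's outputs.
mostly_normal_slide = [0.02, 0.10, 0.91, 0.05]  # one suspicious patch
normal_slide = [0.03, 0.08, 0.12]

positive = slide_prediction(mostly_normal_slide)
negative = slide_prediction(normal_slide)
```

Because only the pooled score is compared against the slide-level label, no patch-level or pixel-level ground truth is needed for this form of supervision.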