AI-powered test case creation & execution

Create test cases 90% faster with AI-based suggestions. Identify relevant test cases to execute and convert test cases to low-code automated tests.

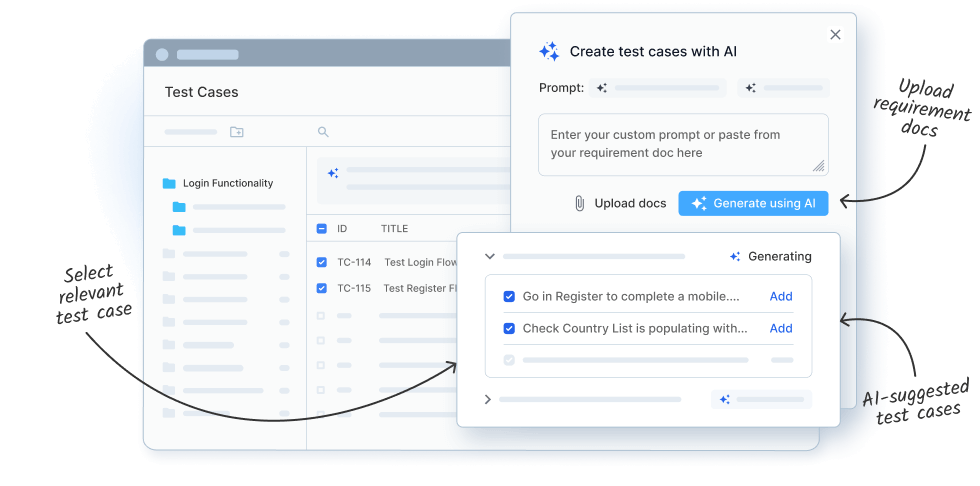

Generate test cases based on requirement docs & user prompts

Add text prompts or upload requirement docs, and let AI generate context-aware test cases, analyzing existing ones to ensure consistency and avoid duplicates.

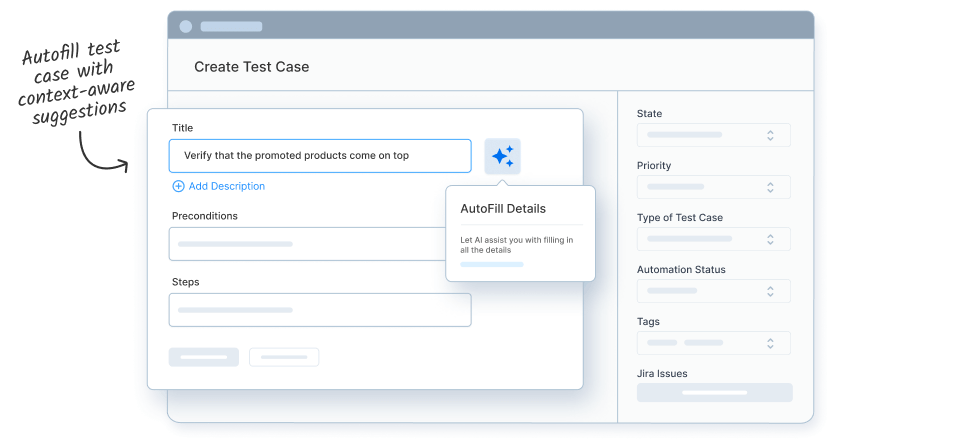

Autofill test cases with AI-based recommendations

Auto-populate different test case fields while creating test cases.

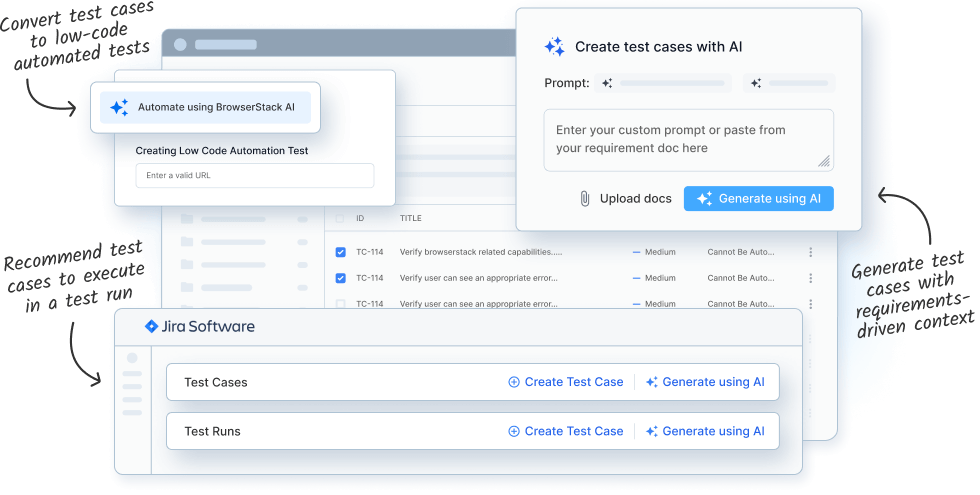

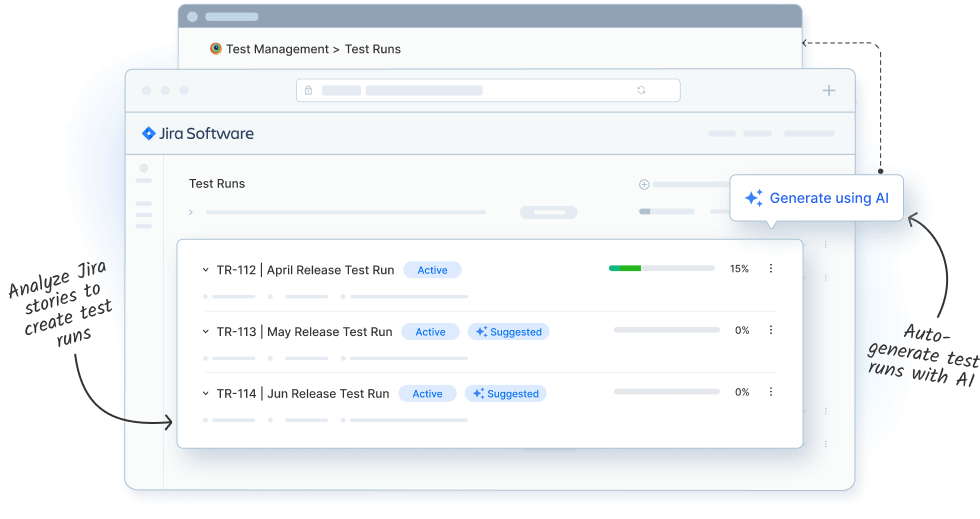

Recommendations on test cases to execute in a test run

Create test runs by automatically selecting test cases suggested by AI. AI will intelligently analyze Jira stories and your past test runs to recommend the most relevant test cases.

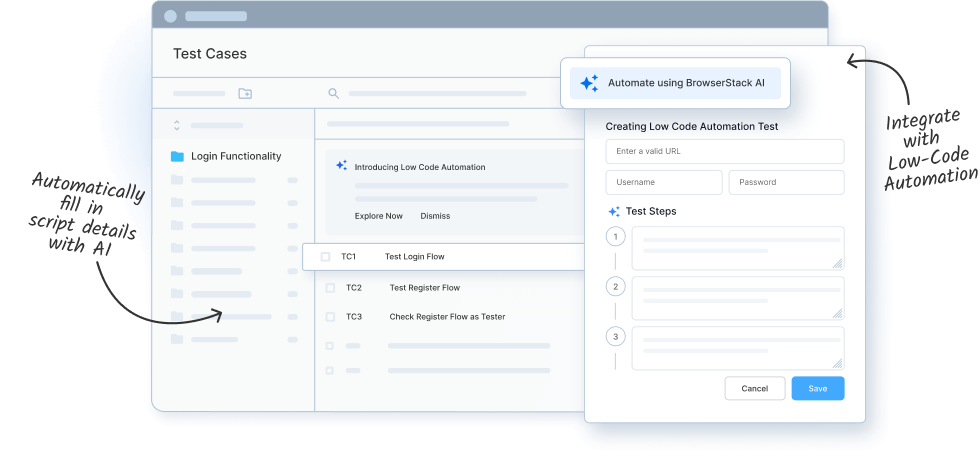

Convert test cases to low-code automated tests

Integrate with BrowserStack Low-Code Automation and automate existing test cases. AI will intelligently fill in script details based on your test case descriptions, saving you time and effort.

Save time and effort with AI-powered Test Management

Frequently Asked Questions

Test Management currently offers the following AI agents, and 30+ more agents are under development.

- Test case generator agent: Create test cases from Product Requirements Documents (PRDs) & user stories. Save time analyzing requirements.

- Test case design agent: Generate test details such as steps and preconditions to create comprehensive and effective test cases.

- Test selection agent: Take the guesswork out of deciding which tests to execute. Identify the most relevant tests for a test run.

- Test automation agent: Accelerate test development by converting test cases into low-code automated tests.

BrowserStack AI significantly reduces the time and effort spent on test case authoring and execution, enabling up to 90% faster test case creation and 10X faster automated test development. It also helps you improve test coverage by 50%.

We orchestrate different third-party AI tools depending on the use case to optimize results, performance, and cost. This configuration is pre-defined and not customizable. For the list of third-party tools, refer to: https://www.browserstack.com/terms/ai-terms.

Yes, users can provide detailed descriptions, select folders with related test cases, or upload requirement documents to generate context-based test cases.

Test cases are generated using existing test case details and additional context provided by users through requirement documents or custom prompts.

AI models generate test cases based on user input, such as descriptions or requirement documents. The accuracy of the output depends on the quality and completeness of the input provided. Currently, test case generation provides 91% accuracy.

We have the capability to support multiple languages.

BrowserStack AI will analyze the uploaded requirement document and provide recommendations based on the available context. You can also supply any missing information by uploading additional requirement documents or by adding context through user prompts.

Customer data is strictly isolated, ensuring that no data from one customer crosses over to another. Strict checks are in place to ensure that only the relevant AI processes can access customer content, maintaining security and confidentiality.

Currently, we do not train or fine-tune AI models on customer data. Our approach leverages foundation models, which are pre-trained and not fine-tuned with any customer data.

Customer data is processed in real time using online prediction (one-shot inference) and is not stored. Data remains isolated throughout the inference process, following stringent security protocols aligned with industry standards.