List of posts in this series

After reviewing the state of play in the previous post, I'm now going to start defining an ordering function in code. First I need to decide where to put the code.

Code repository

I took a closer look at the URL shortener repository. The code to generate a new short link simply returns a random adjective separated from a random name by a hyphen, e.g. “clever-knuth”. No state is maintained except for a database mapping previously generated short links to their original, unshortened URLs.

So there is actually very little in the URL shortener's repository of relevance to the ordering function I want to develop. To focus on the task at hand, I decided to create a new repository. I might eventually port some code back to my fork of the URL shortener repository, but I'll think about that later.

Repository name

As Leon Bambrick quipped, there are only two hard problems in computer science: cache invalidation, naming things, and off-by-1 errors. I want a repository to host the ordering function. I don't want to tie the name to the specific application of URL shortening, especially as the ordering function will be much more general purpose than that. I'll probably use a Feistel network, but that's an implementation choice, so I don't think Feistel network should necessarily appear in the name. So what will the ordering function actually do? It will permute a range of integers.

After searching crates.io for “permute” and “permutation”, just in case that turned up an existing piece of code, I settled on a repository name of range-permutation-rs (the “-rs” suffix is a convention indicating Rust code).

Setting up the repository

If you're happy setting up a git repository with Rust content, you may wish to skip this section.

I created the repository in a new directory, with the usual empty commit:

mkdir range-permutation-rs

cd range-permutation-rs

git init

git commit --allow-empty

I then set up a remote repository and pushed the main branch up.

Before I get into Rust, I populated the repository with some basic documentation: a README, a LICENSE, code of conduct, and contributor guide. This probably seems over the top, but I think it's good practice to take 10 minutes to set these things up early, so anyone who comes across the repository knows the ground rules. I also added a .editorconfig file to set my default editor conventions and try to ensure some consistency between editors.

I intend the repository to be a library, so I then checked my Rust toolchain was up to date and used cargo to initialise the Rust side of the repository:

rustup update

cargo init --lib --name range-permutation --vcs git

The option --vcs git does not affect the existing git repository, but adds a suitable .gitignore file listing just /target.

Defining an interface

I want to start off with a very simple interface: a function that takes the size of the range and returns an ordering function that permutes integers in the specified range. What does this look like in Rust?

I want to separate out the creation of the permutation function from the function itself, because I want to develop some generic tests of the permutation function and I expect the creation function to become more complicated over time (e.g. to take a key which will act as a randomisation seed).

The creation function will take an integer to set the size of the range. The permutation function will take an integer in the range and return another in the range. I expect the permutation function not to mutate any internal state, so it will be a pure function with no side-effects. I looked at some ways of representing an integer in a range in Rust, but decided that they didn't make the functions any clearer. So I ended up with this:

pub fn create(size: u64) -> impl Fn(u64) -> Result<u64, &'static str> {

...

}

This is admittedly quite busy, especially if you're not used to Rust. Let's go through it in detail.

The input parameter size is an unsigned 64-bit integer specifying the size of the range we want to permute.

The return type of create is the Rust way of specifying a function that takes an unsigned 64-bit integer and returns a result which contains either an unsigned 64-bit integer (in the successful case) or a string error message (when the input is outside the range).

As a placeholder, I made the permutation function simply return its input value, wrapped in Ok(), or an error if the input was out of range.

Docs and tests

Next I wrote some documentation, with a simple example, and some tests and checked the example and tests passed.

Good news, bad news

While I was writing the docs, I had another look at Tom Conway's feistel-permutation-rs repository. After he merged my PR to add an open source license, I was delighted to find he had written up a very clear README.

In fact, his code pretty much meets my requirements for an ordering function. I could press on with my own implementation, but my motivation has disappeared. Indeed, to write my own code would amount to NIH (“not invented here”).

Apologies to anyone who was hoping to see me develop some Feistel network code from scratch in Rust. The good news is you can now read Tom's clear and well-tested code, which I'll explore further below. The bad news (kind of) is that I enjoy writing bit-twiddling code, but now I'm not going to have the pleasure.

Implementing in terms of feistel-permutation-rs

One aspect where feistel-permutation-rs didn't match my ideal interface was the way it handled a range of numbers. The permutation (get) function asserts that the input value is in the relevant range. The effect is that the code panics if an out of range value is passed to get. I prefer to return an error rather than panic.

So I implemented my ordering function in terms of feistel-permutation-rs, like this:

pub fn create(size: u64, seed: u64) -> impl Fn(u64) -> Result<u64, &'static str> {

let perm = Permutation::new(size, seed, DefaultBuildHasher::new());

move |n| {

if n >= size {

Err("out of range")

} else {

Ok(perm.get(n))

}

}

}

As expected, I added the key or pseudorandom seed to the signature of create.

The code above is straightforward, except for the use of move to capture the value of size in the closure, rather than a reference to size. Capturing a reference fails to compile because the lifetime of the closure exceeds the lifetime of size. In other words, size goes out of scope and cannot be referred to afterwards.

With this code, it's not surprising my tests passed. So let's take a look at Tom's code.

A Feistel network: feistel.rs

The core of feistel-permutation-rs is a Feistel network with a user-specified round function, a range, and a key schedule.

The range (of values to be encrypted) is specified as an even number of bits, so that values in the range can be split into left and right portions with the same number of bits.

The round function is applied on each iteration, or “round”, of the Feistel network and is a way of making the order of the results pseudorandom. The inputs to the round function are an encryption key (described next) and the right portion of the data being encrypted. The round function is supplied via a hash function builder (conventionally named “bob” – geddit?).

The key schedule is a vector of encryption keys which are passed in turn to the round function. The number of rounds is determined by the length of the vector.

The code is straightforward¹:

pub fn encrypt(&self, x: u64) -> u64 {

let (mut l, mut r) = self.split(x);

for k in self.keys.iter() {

l ^= self.hash(*k, r);

(l, r) = (r, l);

}

self.combine(r, l)

}

The value to be encrypted (x) is split into left and right portions. If the number of bits in the range is 2n the valid range of inputs is 0..2^(2n). Then the input value has 2n significant bits and these are splits into left and right portions, each with n bits.

There are some interesting (at least to me) edge cases. It's perfectly valid to specify a range of 0 bits which will then encrypt just one value (0).

It's also valid to provide an empty vector for the key schedule, in which case the encryption will be the identity function.

Crucially, the encrypt function permutes the range. The body of the for loop is clearly invertible (one-one) and it follows that so is encrypt. Since all the values of the range are mapped to distinct values, also in the range, encrypt is onto. In other words encrypt is a bijection from the range to itself, i.e. a permutation.

Arbitrary range permutation: permutation.rs

feistel-permutation-rs builds on the Feistel network to create

a permutation of an arbitrary unsigned 64-bit range.

The first step is to build a key schedule of five keys from a single input seed. The first key in the schedule is the hash

of the input seed. Each subsequent key is the hash of the previous seed. This relieves the user of the responsibility to provide a complete key schedule.

The second step is to obtain a permutation of an arbitrary range by

taking the next even power of 2 higher than the size of the range and using a Feistel network with the corresponding number of bits.

This is combined with an algorithm that applies the Feistel network encryption and, if the encrypted value exceeds the range, keeps on applying the Feistel network encryption until the encrypted value falls in the range. The code looks like this:

pub fn get(&self, x: u64) -> u64 {

assert!(x < self.n);

let mut res = self.feistel.encrypt(x);

while res >= self.n {

res = self.feistel.encrypt(res);

}

res

}

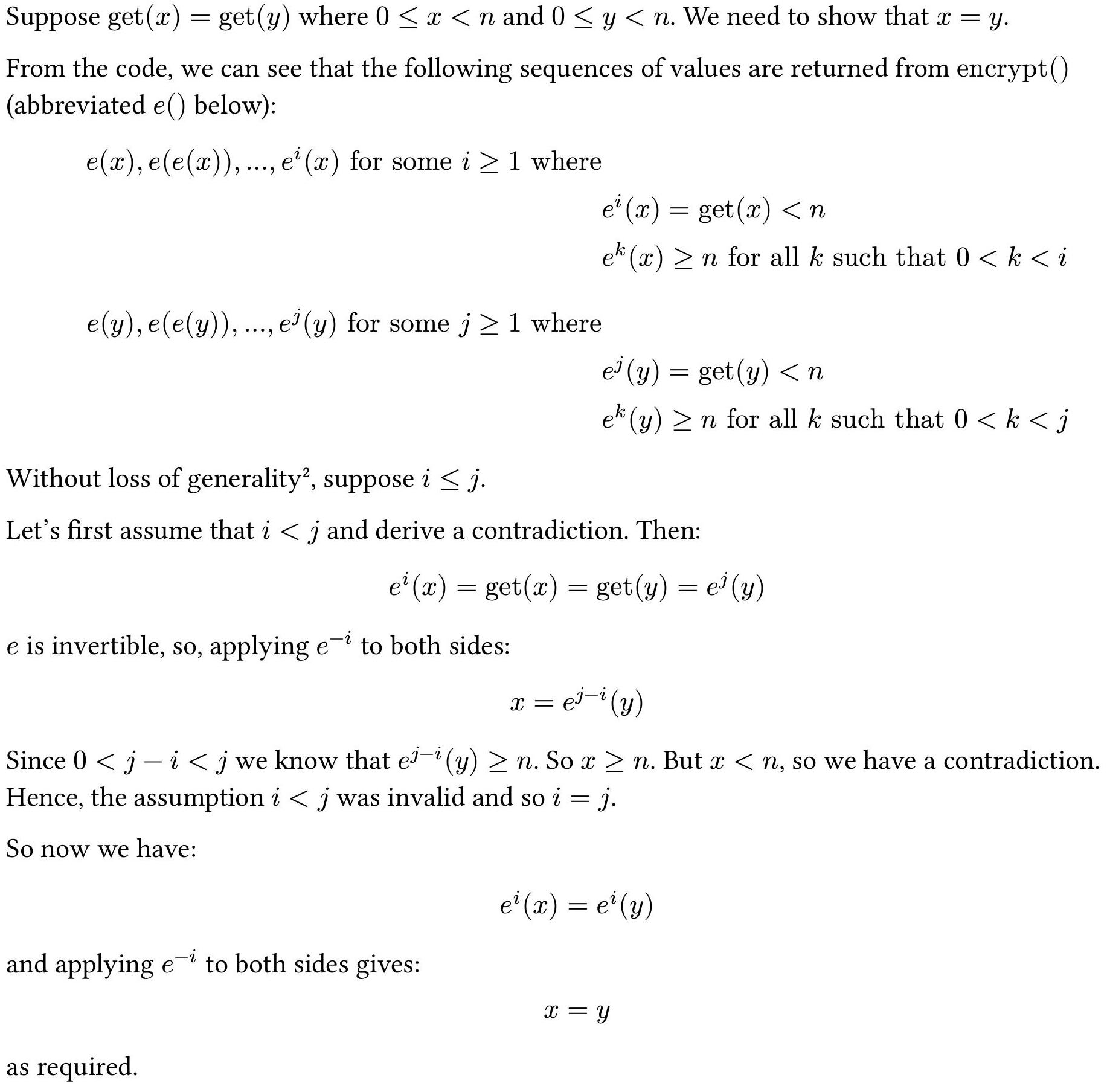

To see that this provides a permutation of the range 0..n, first

observe that all the results are in the range. Secondly, let's prove get() is one-one.

Proof

(The proof was formatted with Typst. Click here to enlarge.)

permutation.rs also includes an interesting test that the

generated permutation is random. I don't pretend to understand the relevant theory, so I wouldn't have included such a test.

Contributions

While reading through feistel-permutation-rs, I made a number of very small contributions by way of pull requests:

I also raised Fix test which wasn't valid, but which prompted Tom to add the interesting test mentioned above.

Conclusion

By depending on feistel-permutation-rs the ordering function, with my preferred interface, is now complete.

I'll have to think about whether it's worth the effort to use this in the URL shortener. The URL shortener is sufficient for practical use and I'd have to fork the code and add a dependency on the above ordering function. I don't think I could justify making such a substantial change in the upstream repository, so I'd end up with a hard fork.

In fact, as I've been working through this experiment, I've become less keen to support a URL shortener on my site, so I may just ditch it.

But the point of the experiment was to see what it was like doing a piece of development in the open and to have some fun. The actual coding side of the experiment ended up being a lot smaller than expected, but I've enjoyed reusing and analysing Tom Conway's code.

The experiment demonstrates one of the trade-offs in open source development: whether to reuse existing code or write your own. It also demonstrates some of the costs associated with reusing code:

- wrapping reused code to provide a different interface

- analysing the correctness of reused code

- taking some parts of the reused code on trust (the permutation randomness test, which I don't understand).

Footnotes:

¹ The original used a temporary value to swap l and r.

² In mathematical proofs, “without loss of generality” (“WLOG”) is a way of abbreviating a proof by considering just one of a number of very similar cases. Here the cases are symmetric. See WLOG on Wikipedia for more explanation.

#OpenDevelopment #Mathematics

[  | about | blogroll | contact | notes | now | search | subscribe | © |

| about | blogroll | contact | notes | now | search | subscribe | © |  ]

]

![]() | about | blogroll | contact | notes | now | search | subscribe | © |

| about | blogroll | contact | notes | now | search | subscribe | © | ![]() ]

]