CN113918530B - Method and device for realizing distributed lock, electronic equipment and medium - Google Patents


- Publication number

- CN113918530B (application number CN202111519652.2A)

- Authority

- CN

- China

- Prior art keywords

- lock

- locked

- data

- data set

- service node


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active



Classifications


- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/10—File systems; File servers

- G06F16/17—Details of further file system functions

- G06F16/176—Support for shared access to files; File sharing support

- G06F16/1767—Concurrency control, e.g. optimistic or pessimistic approaches

- G06F16/1774—Locking methods, e.g. locking methods for file systems allowing shared and concurrent access to files


- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04L—TRANSMISSION OF DIGITAL INFORMATION, e.g. TELEGRAPHIC COMMUNICATION

- H04L67/00—Network arrangements or protocols for supporting network services or applications

- H04L67/01—Protocols

- H04L67/10—Protocols in which an application is distributed across nodes in the network

- H04L67/1097—Protocols in which an application is distributed across nodes in the network for distributed storage of data in networks, e.g. transport arrangements for network file system [NFS], storage area networks [SAN] or network attached storage [NAS]

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Data Mining & Analysis (AREA)

- Databases & Information Systems (AREA)

- Physics & Mathematics (AREA)

- General Engineering & Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Computer Networks & Wireless Communication (AREA)

- Signal Processing (AREA)

- Information Retrieval, Db Structures And Fs Structures Therefor (AREA)

Abstract

The disclosure relates to a method and a device for implementing a distributed lock, an electronic device and a medium, in the technical field of computers. The method performed by a cluster service node comprises: sending a request to apply for an operation lock to a server; if the server allocates the operation lock to the cluster service node, acquiring the data set locked by the data segment lock; if there is no intersection between the data set locked by the data segment lock and the data set to be locked, writing the data set to be locked into the data set locked by the data segment lock; releasing the operation lock; and acquiring a data segment lock corresponding to the data set to be locked. By combining two locks, an operation lock and a data segment lock, the method allows multiple service nodes to lock different segments of a data set, and solves the problem in the related art that concurrent operations cannot be performed on large batches of data.

Description

Technical Field

The present disclosure relates to the field of computer technologies, and in particular, to a method for implementing a distributed lock, an apparatus for implementing a distributed lock, an electronic device, and a computer-readable storage medium.

Background

Distributed locks are lock implementations that control access to resources shared by a distributed system or by different systems. When a resource is shared between different systems, or between different hosts of the same system, access usually has to be mutually exclusive so that the parties do not interfere with one another and consistency is preserved. In the related art, a distributed lock is implemented on Redis and consists mainly of two operations, locking and unlocking: the lock must be acquired before the shared resource is operated on and released after the operation finishes. However, such a lock can lock only a single record at a time and cannot lock a large batch of records together; that is, it cannot support concurrent operations on large batches of data.
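For contrast, the single-record lock of the related art can be sketched as follows. This is an in-memory simulation, not a Redis client: it assumes the common Redis pattern of `SET key value NX` for locking and a token check before deleting for unlocking, and the names `FakeRedis`, `lock_record` and `unlock_record` are hypothetical.

```python
import uuid


class FakeRedis:
    """Hypothetical in-memory stand-in for the two Redis behaviours a
    single-record lock relies on; no real Redis client is used."""

    def __init__(self):
        self._data = {}

    def set_nx(self, key, value):
        # Same semantics as `SET key value NX`: write only if the key is absent.
        if key in self._data:
            return False
        self._data[key] = value
        return True

    def delete_if_value(self, key, value):
        # Release only if the stored token still matches the caller's token.
        # Against a real Redis server this check-and-delete is usually made
        # atomic (for example with a Lua script); this single-threaded
        # sketch does not need that.
        if self._data.get(key) == value:
            del self._data[key]
            return True
        return False


def lock_record(store, record_id):
    """Try to lock one record; return an owner token, or None if it is taken."""
    token = uuid.uuid4().hex
    return token if store.set_nx(f"lock:{record_id}", token) else None


def unlock_record(store, record_id, token):
    """Unlock one record if `token` still owns it."""
    return store.delete_if_value(f"lock:{record_id}", token)


store = FakeRedis()
owner = lock_record(store, "A1")
print(owner is not None)                  # True: the first caller gets the lock
print(lock_record(store, "A1"))           # None: a second caller is refused
print(unlock_record(store, "A1", owner))  # True
```

Each key protects exactly one record, so locking records A1 to An this way needs n separate keys with nothing tying them together as one batch.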

It is to be noted that the information disclosed in the above background section is only for enhancement of understanding of the background of the present disclosure, and thus may include information that does not constitute prior art known to those of ordinary skill in the art.

Disclosure of Invention

The present disclosure provides a method for implementing a distributed lock, an apparatus for implementing a distributed lock, an electronic device, and a computer-readable storage medium, so as to at least solve the problem in the related art that concurrent operations cannot be performed on large batches of data. The technical solution of the disclosure is as follows:

According to one aspect of the embodiments of the present disclosure, a method for implementing a distributed lock is provided, which is applied to a cluster service node and includes: sending a request to apply for an operation lock to a server; if the server allocates the operation lock to the cluster service node, acquiring the data set locked by the data segment lock; if the data set locked by the data segment lock has no intersection with the data set to be locked, writing the data set to be locked into the data set locked by the data segment lock; releasing the operation lock; and acquiring a data segment lock corresponding to the data set to be locked.

In an embodiment of the present disclosure, writing the data set to be locked into the data set locked by the data segment lock includes: determining the data segment lock corresponding to the data set to be locked; and writing the data set to be locked into the data set locked by the data segment lock according to the unique identifier of that data segment lock.

In an embodiment of the present disclosure, after the data segment lock corresponding to the data set to be locked is acquired, the method further includes: executing a business operation; and, after the business operation is finished, removing the data set to be locked from the data set locked by the data segment lock and releasing the data segment lock corresponding to the data set to be locked.

In one embodiment of the present disclosure, the method further comprises: if the data set locked by the data segment lock has an intersection with the data set to be locked, entering a waiting state; and applying for the data segment lock after the data segment lock that locks the intersection is released.

In one embodiment of the present disclosure, the method further comprises: if the data segment lock that locks the intersection is not released within a preset waiting time, releasing the operation lock.

In one embodiment of the present disclosure, the method further comprises: if the server does not allocate the operation lock to the cluster service node, entering a waiting state; and applying for the operation lock after the service node holding it releases it.

According to another aspect of the embodiments of the present disclosure, a method for implementing a distributed lock is provided, which is applied to a server and includes: receiving a request to apply for an operation lock sent by a cluster service node; if the operation lock is not held by another service node, allocating the operation lock to the cluster service node; if the operation lock is held by another service node, placing the cluster service node in a waiting state and allocating the operation lock to it after the holding service node releases the operation lock; and, after the operation lock is allocated to the cluster service node, if the data set locked by the data segment lock has no intersection with the data set to be locked, generating a data segment lock corresponding to the data set to be locked.

According to another aspect of the embodiments of the present disclosure, an apparatus for implementing a distributed lock is provided, which is applied to a cluster service node and includes: a lock application module configured to send a request to apply for an operation lock to a server; a data acquisition module configured to acquire the data set locked by the data segment lock if the server allocates the operation lock to the cluster service node; a writing module configured to write the data set to be locked into the data set locked by the data segment lock if there is no intersection between the data set locked by the data segment lock and the data set to be locked; a release lock module configured to release the operation lock; and a lock acquisition module configured to acquire a data segment lock corresponding to the data set to be locked.

In one embodiment of the disclosure, the writing module is configured to: determine the data segment lock corresponding to the data set to be locked; and write the data set to be locked into the data set locked by the data segment lock according to the unique identifier of that data segment lock.

In one embodiment of the present disclosure, the apparatus further includes an execution module configured to execute a business operation; and the release lock module is configured to, after the business operation is finished, remove the data set to be locked from the data set locked by the data segment lock and release the data segment lock corresponding to the data set to be locked.

In one embodiment of the present disclosure, the lock application module is configured to: enter a waiting state if the data set locked by the data segment lock has an intersection with the data set to be locked; and apply for the data segment lock after the data segment lock that locks the intersection is released.

In one embodiment of the disclosure, the release lock module is configured to release the operation lock if the data segment lock that locks the intersection is not released within a preset waiting time.

In one embodiment of the present disclosure, the lock application module is configured to: enter a waiting state if the server does not allocate the operation lock to the cluster service node; and apply for the operation lock after the service node holding it releases it.

According to another aspect of the embodiments of the present disclosure, there is provided an apparatus for implementing a distributed lock, which is applied to a server and includes: a receiving module configured to receive a request to apply for an operation lock sent by a cluster service node; a distribution module configured to allocate the operation lock to the cluster service node if the operation lock is not held by another service node, and, if the operation lock is held by another service node, to place the cluster service node in a waiting state and allocate the operation lock to it after the holding service node releases the operation lock; and a generating module configured to generate a data segment lock corresponding to the data set to be locked if, after the operation lock is allocated to the cluster service node, no intersection exists between the data set locked by the data segment lock and the data set to be locked.

According to still another aspect of the embodiments of the present disclosure, there is provided an electronic device including: a processor; a memory for storing the processor-executable instructions; wherein the processor is configured to execute the instructions to implement the method for implementing the distributed lock.

According to still another aspect of the embodiments of the present disclosure, there is provided a computer-readable storage medium storing instructions that, when executed by a processor of an electronic device, enable the electronic device to perform the above method for implementing a distributed lock.

The technical solution provided by the embodiments of the disclosure brings at least the following beneficial effects: once the cluster service node has successfully applied for the operation lock, if the intersection of the data set locked by the data segment lock and the node's data set to be locked is empty, the data set to be locked is locked by writing it into the data set locked by the data segment lock, after which the cluster service node can execute the business operation. Because each node locks only its own segment of the data, several service nodes can operate on different segments of a large data set concurrently.

It is to be understood that both the foregoing general description and the following detailed description are exemplary and explanatory only and are not restrictive of the disclosure.

Drawings

The accompanying drawings, which are incorporated in and constitute a part of this specification, illustrate embodiments consistent with the present disclosure and, together with the description, serve to explain the principles of the disclosure and are not to be construed as limiting the disclosure.

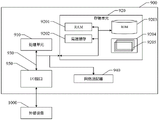

FIG. 1 is a schematic diagram of a distributed system architecture;

FIG. 2 is a flowchart illustrating a method of implementing a distributed lock in accordance with an illustrative embodiment;

FIG. 3 is a flow diagram illustrating locking and unlocking of a data segment lock in accordance with an illustrative embodiment;

FIG. 4 is a flowchart illustrating a method of implementing a distributed lock in accordance with another illustrative embodiment;

FIG. 5 is a flowchart illustrating a method of implementing a distributed lock, in accordance with yet another illustrative embodiment;

FIG. 6 is a diagram of data interactions between a service node and a server;

FIG. 7 is a block diagram illustrating an apparatus for implementing a distributed lock in accordance with an illustrative embodiment;

FIG. 8 is a block diagram illustrating an apparatus for implementing a distributed lock in accordance with another illustrative embodiment;

FIG. 9 is a block diagram illustrating an architecture of a distributed lock implementation apparatus in accordance with an illustrative embodiment.

Detailed Description

In order to make the technical solutions of the present disclosure better understood by those of ordinary skill in the art, the technical solutions in the embodiments of the present disclosure will be clearly and completely described below with reference to the accompanying drawings.

It should be noted that the terms "first," "second," and the like in the description and claims of the present disclosure and in the above-described drawings are used for distinguishing between similar elements and not necessarily for describing a particular sequential or chronological order. It is to be understood that the data so used is interchangeable under appropriate circumstances such that the embodiments of the disclosure described herein are capable of operation in sequences other than those illustrated or otherwise described herein. The implementations described in the exemplary embodiments below are not intended to represent all implementations consistent with the present disclosure. Rather, they are merely examples of apparatus and methods consistent with certain aspects of the disclosure, as detailed in the appended claims.

The method provided by the embodiments of the disclosure can be executed by any type of electronic device, such as a server or a terminal device, or through interaction between a server and a terminal device. The terminal device and the server may be connected directly or indirectly through wired or wireless communication; the disclosure is not limited in this respect.

The server may be an independent physical server, a server cluster or distributed system formed by a plurality of physical servers, or a cloud server providing basic cloud computing services such as a cloud service, a cloud database, cloud computing, a cloud function, cloud storage, a network service, cloud communication, a middleware service, a domain name service, a security service, a CDN (Content Delivery Network), a big data and artificial intelligence platform, and the like.

The terminal device may be, but is not limited to, a smart phone, a tablet computer, a notebook computer, a desktop computer, a smart speaker, a smart watch, and the like.

FIG. 1 is a schematic structural diagram of a distributed system. As shown in FIG. 1, the distributed system includes servers and cluster service nodes. The cluster service nodes are server nodes that process data requests; there may be one or more of them, and FIG. 1 shows two as an example. Each service node may host several services, and each service node applies to the server for distributed locks, so the server can be regarded as a lock manager that manages the distributed locks. The method for implementing a distributed lock provided by the embodiments of the present disclosure is described below with the cluster service node and the server, respectively, as the executing entity.

FIG. 2 is a flow diagram illustrating a method for implementing a distributed lock in accordance with an exemplary embodiment. As shown in fig. 2, the method is applied to a cluster service node, and includes the following steps:

step S210, sending a request to apply for an operation lock to the server;

step S220, if the server allocates the operation lock to the cluster service node, acquiring the data set locked by the data segment lock;

step S230, if the data set locked by the data segment lock has no intersection with the data set to be locked, writing the data set to be locked into the data set locked by the data segment lock;

step S240, releasing the operation lock;

step S250, acquiring the data segment lock corresponding to the data set to be locked.

For ease of understanding, the operation lock and the data segment lock of the embodiments of the present disclosure are explained first. Both locks implement locking and unlocking on Redis and can be used in the distributed system shown in FIG. 1. Before performing a business operation, a cluster service node must apply to the server for an operation lock and a data segment lock; if the applications succeed, it can perform the business operation. The operation lock guards operations on the Redis data segments; the data segment lock locks the data range to be operated on, and that range can be represented by a set of keys. Specifically, the operation lock may be implemented with the Redis SET command, where both the key and the value are strings: the key, denoted actionkey, is the name of the operation lock and uniquely identifies it, and the value identifies the thread that holds the lock. The data segment lock can likewise be implemented on Redis, with a string key and a value that is a set of strings: the key, denoted datakey, is the name of the data segment lock and uniquely identifies it, and the value, denoted dataSet, is the data set in the locked state. Because both locks are implemented on Redis, the server mentioned above, which acts as the lock manager for the distributed locks, can be regarded as a Redis server.
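The key layout described above can be modelled in memory as follows. This is a sketch under stated assumptions: plain Python containers stand in for Redis, `try_operation_lock` mirrors `SET actionkey marker NX`, and `add_data_segment` mirrors adding members to a Redis set (as `SADD` would); `LockStore`, its methods and the marker strings are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LockStore:
    """Hypothetical in-memory model of the Redis keys behind both locks."""
    strings: dict = field(default_factory=dict)  # actionkey -> locking-thread marker
    sets: dict = field(default_factory=dict)     # datakey -> dataSet (set of strings)

    def try_operation_lock(self, actionkey, thread_marker):
        # Like SET actionkey thread_marker NX: at most one holder at a time.
        if actionkey in self.strings:
            return False
        self.strings[actionkey] = thread_marker
        return True

    def release_operation_lock(self, actionkey, thread_marker):
        # Only the holder identified by its marker may release the lock.
        if self.strings.get(actionkey) == thread_marker:
            del self.strings[actionkey]
            return True
        return False

    def add_data_segment(self, datakey, members):
        # Record the locked data range under the segment lock's unique name.
        self.sets.setdefault(datakey, set()).update(members)

    def locked_data(self):
        # Union of every dataSet currently held by any data segment lock.
        result = set()
        for data_set in self.sets.values():
            result |= data_set
        return result


store = LockStore()
store.try_operation_lock("actionkey", "node1-thread-7")
store.add_data_segment("datakey-1", {"A2", "A3", "A4", "A5"})
store.add_data_segment("datakey-2", {"A10", "A11"})
print(sorted(store.locked_data()))
```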

In step S210, the cluster service node sends a request to apply for the operation lock to the server, and the server reads the current state of the operation lock to decide whether to allocate it to the cluster service node. If the operation lock is not held by another service node, the server allocates it to the cluster service node. If it is held by another service node, the server cannot allocate it; the cluster service node then enters a waiting state and applies again after the holding service node releases the operation lock. For example, suppose there are a first service node and a second service node, and the server has already allocated the operation lock to the second service node when the first service node applies for it. The first service node must then wait for the second service node to release the operation lock and apply to the server again. The first and second service nodes may be different nodes, or they may be the same node: for example, the first service node includes service 1 and service 2, and if service 2 applies to the server for the operation lock while service 1 still holds it, service 2 can acquire the operation lock only after service 1 releases it.
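The allocation rule of step S210 can be sketched with a condition variable. This is an in-process simulation rather than the Redis-backed server the disclosure describes; threads stand in for service nodes, and `OperationLockServer`, `acquire`, `release` and the node names are hypothetical.

```python
import threading


class OperationLockServer:
    """Hypothetical lock manager that grants a single operation lock."""

    def __init__(self):
        self._cond = threading.Condition()
        self._holder = None  # marker of the current holder, or None if free

    def acquire(self, requester, timeout=None):
        # Wait until the operation lock is free, then grant it to `requester`.
        with self._cond:
            granted = self._cond.wait_for(lambda: self._holder is None, timeout)
            if not granted:
                return False
            self._holder = requester
            return True

    def release(self, requester):
        with self._cond:
            if self._holder != requester:
                raise RuntimeError(f"{requester} does not hold the operation lock")
            self._holder = None
            self._cond.notify_all()  # wake waiting nodes so they can apply again

    @property
    def holder(self):
        with self._cond:
            return self._holder


server = OperationLockServer()
server.acquire("node2")                    # the second node holds the lock
waiter = threading.Thread(target=server.acquire, args=("node1",))
waiter.start()                             # the first node waits
server.release("node2")                    # released: the first node is granted it
waiter.join()
print(server.holder)                       # node1
```

`notify_all` wakes every waiter and whichever re-checks first wins, so with several waiters this sketch does not guarantee first-come-first-served order; a strict queue would need an explicit ticket or queue structure.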

As mentioned above, the data segment lock locks the data range that needs to be operated on; in other words, the data set locked by the data segment lock is the data range currently being operated on. For example, if the data set locked by the data segment lock includes data A1 through An, data A1 through An can be considered to be currently in process, for instance being imported into a database. In step S220, after successfully applying for the operation lock, the cluster service node acquires the data set locked by the data segment lock, that is, the data range currently being operated on. That range may be being processed by other service nodes or by the cluster service node itself. For example, the first service node includes service 1 and service 2; service 1 is processing a certain data range, so the data set locked by the data segment lock includes that range, while service 2 has a pending service request. In this case the first service node can still apply for the operation lock on behalf of service 2.

In step S230, the cluster service node determines whether the data set currently locked by all data segment locks (i.e., the data range currently being operated on) intersects the data set to be locked. The data set to be locked is the data range that the node needs to operate on when it applies for the distributed lock; for example, if the first service node needs to process data A2 to A5 and therefore applies for the distributed lock, the data set to be locked is data A2 to A5.

If the cluster service node determines that the data range currently being operated on has no intersection with the data set to be locked, the current range contains none of the data in the data set to be locked; that is, no service node is currently processing the data that this node is applying to lock. In this case, the data set to be locked can be written into the data set locked by the data segment lock, i.e., it is locked by the data segment lock, so that while it remains locked, other service nodes (nodes other than this cluster service node) cannot process it.

After the data set to be locked has been written into the data set locked by the data segment lock, the cluster service node can release the operation lock so that other service nodes waiting for it can apply for it. The cluster service node then acquires the data segment lock corresponding to the data set to be locked; that is, it has successfully locked the data segment.
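Steps S210 to S250 can be strung together in a single-threaded sketch. Assumptions: an in-memory object replaces the Redis server, the datakey that the disclosure has the server generate is created inline with `uuid4`, and instead of waiting, the function simply returns `None` whenever the operation lock is busy or the data sets intersect. `InMemoryLockServer` and `apply_for_segment_lock` are hypothetical names.

```python
import uuid


class InMemoryLockServer:
    """Hypothetical, single-threaded stand-in for the Redis-backed server."""

    def __init__(self):
        self.operation_holder = None  # value stored under actionkey
        self.segments = {}            # datakey -> dataSet

    def try_acquire_operation_lock(self, marker):
        if self.operation_holder is None:
            self.operation_holder = marker
            return True
        return False

    def release_operation_lock(self, marker):
        if self.operation_holder == marker:
            self.operation_holder = None

    def locked_data(self):
        # Data currently locked by all data segment locks (empty if none).
        return set().union(*self.segments.values())


def apply_for_segment_lock(server, marker, to_lock):
    """One attempt at steps S210-S250; returns the datakey or None."""
    # S210: apply for the operation lock (no waiting in this sketch).
    if not server.try_acquire_operation_lock(marker):
        return None
    try:
        # S220: read the data set locked by the data segment locks.
        if not server.locked_data().isdisjoint(to_lock):
            return None  # intersection: the disclosure waits here instead
        # S230: write the data set to be locked under a fresh datakey.
        datakey = f"datakey:{uuid.uuid4().hex}"
        server.segments[datakey] = set(to_lock)
    finally:
        # S240: release the operation lock on every path.
        server.release_operation_lock(marker)
    # S250: the node now holds the data segment lock named by datakey.
    return datakey


server = InMemoryLockServer()
k1 = apply_for_segment_lock(server, "node1", {"A2", "A3", "A4", "A5"})
k2 = apply_for_segment_lock(server, "node2", {"A10", "A11"})
k3 = apply_for_segment_lock(server, "node3", {"A5", "A6"})
print(k1 is not None, k2 is not None, k3)  # True True None
```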

It should be noted that, in the embodiments of the present disclosure, there may be one or more data segment locks, and the data set locked by the data segment lock refers to the data currently locked by all data segment locks together. For example, the first service node is to process data A2 to A5 and the second service node is to process data A10 to A20, so their data ranges differ. If both nodes apply for the lock, the data segment lock obtained by the first service node locks data A2 to A5 and the one obtained by the second service node locks data A10 to A20, so there are two data segment locks. There can also be zero data segment locks: for example, in the initial stage of the method the server has not yet generated any data segment lock, and the data set locked by the data segment lock is empty.
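The check against all data segment locks, including the initial case with none, reduces to a set intersection over their union. A minimal sketch using the A2 to A5 and A10 to A20 ranges from the example; the helper names and the `datakey-1`/`datakey-2` keys are hypothetical.

```python
def data_range(prefix, start, end):
    """Expand a range such as A2..A5 into {"A2", "A3", "A4", "A5"}."""
    return {f"{prefix}{i}" for i in range(start, end + 1)}


def locked_by_all(segment_locks):
    """Union of the data sets held by every data segment lock (may be none)."""
    locked = set()
    for data_set in segment_locks.values():
        locked |= data_set
    return locked


def conflicts(segment_locks, to_lock):
    """Return the blocking intersection; an empty set means locking is allowed."""
    return locked_by_all(segment_locks) & to_lock


# Initial stage: no data segment lock exists yet, so nothing is locked.
segment_locks = {}
print(conflicts(segment_locks, data_range("A", 2, 5)))          # set()

# Two independent data segment locks.
segment_locks["datakey-1"] = data_range("A", 2, 5)
segment_locks["datakey-2"] = data_range("A", 10, 20)
print(sorted(conflicts(segment_locks, data_range("A", 1, 3))))  # ['A2', 'A3']
```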

In the method for implementing a distributed lock provided by the embodiments of the disclosure, once the cluster service node has successfully applied for the operation lock, if the intersection of the data set locked by the data segment lock and the node's data set to be locked is empty, the data set to be locked is locked by writing it into the data set locked by the data segment lock, after which the cluster service node can execute the business operation.

In an exemplary embodiment, writing the data set to be locked into the data set locked by the data segment lock may include: determining the data segment lock corresponding to the data set to be locked; and writing the data set to be locked into the data set locked by the data segment lock according to the unique identifier of that data segment lock.

If the data set locked by the data segment lock has no intersection with the data set to be locked, the server generates a data segment lock corresponding to the data set to be locked, which is used to lock that data set. The cluster service node determines which data segment lock the server generated for its data set to be locked, and then writes the data set to be locked into the data set locked by the data segment lock according to that lock's unique identifier (its datakey).

In an exemplary embodiment, after acquiring the data segment lock corresponding to the data set to be locked, the cluster service node may perform a business operation; after the business operation is completed, it removes the data set to be locked from the data set locked by the data segment lock and releases the data segment lock corresponding to the data set to be locked.

After the cluster service node has acquired the data segment lock, it can perform the business operation, for example importing the data corresponding to the data set to be locked into a database. After the business operation is completed, the cluster service node removes the data set to be locked from the data set locked by the data segment lock and releases the corresponding data segment lock, so that other service nodes can lock the data in that data set and perform their own business operations.

FIG. 3 is a flow diagram illustrating locking and unlocking of a data segment lock according to an exemplary embodiment. As shown in FIG. 3, the method is applied to a cluster service node and includes the following steps:

step S310, when the data set locked by the data segment lock has no intersection with the data set to be locked, obtaining the data segment lock corresponding to the data set to be locked;

step S320, writing the data set to be locked into the data set locked by the data segment lock according to the unique identifier of the data segment lock corresponding to the data set to be locked;

step S330, releasing the operation lock;

step S340, acquiring the data segment lock corresponding to the data set to be locked;

step S350, executing the business operation;

step S360, after the business operation is completed, removing the data set to be locked from the data set locked by the data segment lock, and releasing the data segment lock corresponding to the data set to be locked.

Once the cluster service node has successfully applied for the operation lock, it determines whether the data set locked by the data segment lock intersects the data set to be locked. If there is no intersection, the cluster service node obtains the data segment lock that the server generated for the data set to be locked, and then writes the data set to be locked into the data set locked by the data segment lock according to that lock's unique identifier, which locks the data set to be locked. The cluster service node then releases the operation lock; once the operation lock is released, the node has successfully acquired the data segment lock. It can then perform the business operation and, after the operation is completed, remove the data set to be locked from the data set locked by the data segment lock, which releases the corresponding data segment lock.
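The FIG. 3 lifecycle (S310 to S360) maps naturally onto a Python context manager, which removes the data set, and so releases the data segment lock, when the business operation ends. This is a single-threaded sketch: `SegmentLockTable`, `LockConflict` and `data_segment_lock` are hypothetical, a conflict raises an exception instead of waiting, and releasing the lock when the business operation fails is a choice of this sketch (the disclosure only describes release after the operation completes).

```python
import uuid
from contextlib import contextmanager


class SegmentLockTable:
    """Hypothetical in-memory table: operation-lock holder plus datakey -> dataSet."""

    def __init__(self):
        self.operation_holder = None
        self.segments = {}

    def locked_data(self):
        return set().union(*self.segments.values())


class LockConflict(Exception):
    """Raised when the operation lock is busy or the data sets intersect."""


@contextmanager
def data_segment_lock(table, marker, to_lock):
    to_lock = set(to_lock)
    if table.operation_holder is not None:
        raise LockConflict("operation lock is held by another node")
    table.operation_holder = marker               # operation lock granted
    try:
        if not table.locked_data().isdisjoint(to_lock):
            raise LockConflict("data set to be locked intersects a locked set")
        datakey = f"datakey:{uuid.uuid4().hex}"   # S310: segment lock obtained
        table.segments[datakey] = to_lock         # S320: write under its datakey
    finally:
        table.operation_holder = None             # S330: release operation lock
    try:
        yield datakey                             # S340/S350: business operation
    finally:
        # S360: removing the data set releases the data segment lock.
        table.segments.pop(datakey, None)


table = SegmentLockTable()
imported = []
with data_segment_lock(table, "node1", {"A2", "A3"}) as key:
    imported.extend(sorted(table.segments[key]))  # e.g. import into a database
print(imported, table.segments)                   # ['A2', 'A3'] {}
```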

During locking and release of the data segment lock, the cluster service node acquires the data segment lock by writing the data set to be locked into the data set locked by the data segment lock, so that, while the lock is held, only this cluster service node operates on the data in the data set to be locked, which guarantees the atomicity of the operation. After the business operation is completed, the node removes the data set to be locked from the data set locked by the data segment lock, which releases the data segment lock and lets other service nodes apply for the data segment lock covering that data.

In an exemplary embodiment, the method for implementing a distributed lock may further include: if the data set locked by the data segment lock intersects the data set to be locked, entering a waiting state; and applying for the data segment lock after the data segment lock that locks the intersection is released.

If the data set locked by the data segment lock intersects the data set to be locked, the data range currently being operated on contains data from the data set to be locked; that is, some service node is already processing data that this node is applying to lock. In this case the cluster service node's attempt to acquire the data segment lock fails: it enters a waiting state and applies for the data segment lock after the data segment lock that locks the intersection is released. For example, if the data set to be locked is data A1 to A5 and the data set locked by the data segment lock includes data A1 and A2, some service node is processing data A1 and A2. When that service node finishes its business operation on A1 and A2, they are removed from the locked data set, i.e., the data segment lock locking A1 and A2 is released, and the cluster service node can then apply for the data segment lock.

In addition, in the embodiment of the present disclosure, if the data segment lock that locks the intersection is not released within a preset waiting time, the cluster service node releases the operation lock. That is, when the two data sets intersect, the cluster service node enters a waiting state until the data segment lock locking the intersection is released. If that lock is still not released after the preset waiting time, this may indicate a problem with that data segment lock or with the intersecting data, or that the business operation in progress is complex and long-running. In this case, the cluster service node may release the operation lock so that other service nodes waiting for it can apply for it. After releasing the operation lock, the cluster service node may join the queue waiting for the operation lock and apply for it again.
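The wait-with-timeout behaviour can be modelled in a single process with Python's `threading` module. This is a minimal sketch under these assumptions: the class `SegmentLockTable` is hypothetical, `op_lock` (a `threading.Lock`) plays the role of the operation lock, and a `threading.Condition` guards the in-memory locked data set so that waiters can sleep until a release. As in the text, the node keeps the operation lock while it waits for the intersecting data segment lock. It releases the operation lock either after locking its data or after the preset waiting time expires, so that it can rejoin the queue.

```python
import threading


class SegmentLockTable:
    """Hypothetical in-process model of the operation lock plus segment locks."""

    def __init__(self) -> None:
        self.op_lock = threading.Lock()   # stands in for the operation lock
        self._cond = threading.Condition()
        self._locked: set = set()         # data set locked by all segment locks

    def acquire(self, items: set, op_timeout: float, seg_wait: float) -> bool:
        # Apply for the operation lock; give up after op_timeout seconds.
        if not self.op_lock.acquire(timeout=op_timeout):
            return False
        try:
            with self._cond:
                # Wait, still holding the operation lock, until no item in
                # `items` is covered by another data segment lock.
                free = self._cond.wait_for(
                    lambda: not (self._locked & items), timeout=seg_wait
                )
                if free:
                    self._locked |= items  # writing the items takes the lock
                return free
        finally:
            # Released on success and on timeout alike; after a timeout the
            # caller can rejoin the queue and call acquire() again.
            self.op_lock.release()

    def release(self, items: set) -> None:
        # Releasing a data segment lock does not need the operation lock.
        with self._cond:
            self._locked -= items
            self._cond.notify_all()


table = SegmentLockTable()
print(table.acquire({"a1", "a2"}, op_timeout=1, seg_wait=1))    # True
print(table.acquire({"a1", "a3"}, op_timeout=1, seg_wait=0.05)) # False: timed out
threading.Timer(0.05, table.release, args=({"a1", "a2"},)).start()
print(table.acquire({"a1", "a3"}, op_timeout=1, seg_wait=2))    # True
```

Holding the operation lock while waiting serialises all other acquisitions behind the waiting node. The preset waiting time therefore bounds how long one blocked node can stall the rest of the cluster.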

In summary, when the data set locked by the data segment lock intersects the data set to be locked, the cluster service node enters a waiting state. Once the data segment lock locking the intersection is released, the node applies for the data segment lock and performs the business operation. If the data segment lock locking the intersection is not released within the preset waiting time, the node releases the operation lock so that other service nodes waiting for it can apply for it. It then joins the queue waiting for the operation lock so that it can apply for the operation lock again.

FIG. 4 is a flowchart illustrating a method of implementing a distributed lock according to another exemplary embodiment. As shown in FIG. 4, the method is applied to a cluster service node and includes the following steps:

step S401, applying for the operation lock: specifically, using the Redis SET command to apply for the operation lock on the key actionkey; if the application succeeds, the operation lock is acquired; if it fails, waiting and retrying until a specified timeout is exceeded;

step S402, after the operation lock is acquired, reading the locked data set dataSet under the unique identifier datakey of the data segment lock, thereby obtaining the data set locked by all data segment locks;

step S403, determining whether the data set to be locked, waitLockSet, intersects the acquired dataSet;

step S404, if there is no intersection, acquiring the data segment lock corresponding to the data set to be locked: using the Redis SADD command, writing all values of waitLockSet into the Redis set dataSet under the unique identifier datakey of the data segment lock;

step S405, after the write is complete, releasing the operation lock, that is, deleting actionkey; once the operation lock is released, the data segment lock is deemed to have been acquired successfully;

step S406, after the data segment lock is acquired, performing the business operation, for example, importing the data corresponding to the data set to be locked into a database;

step S407, after the business operation is completed, releasing the data segment lock by using the Redis SREM command to remove waitLockSet from the value set dataSet of datakey;

step S408, if there is an intersection, determining that acquiring the data segment lock has failed and entering a waiting state;

step S409, if the data segment lock locking the intersection is released within the preset waiting time, applying for the data segment lock;

step S410, if the data segment lock locking the intersection is not released within the preset waiting time, releasing the operation lock and joining the queue waiting for the operation lock, so that the operation lock can be applied for again.
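Steps S401 to S410 can be sketched as follows. This is a self-contained sketch under stated assumptions, not the disclosed code. `FakeRedis` is an in-memory stand-in that only mimics the semantics of the Redis SET (with NX), DEL, SMEMBERS, SADD and SREM commands, using method names that follow the redis-py client, and it does not model key expiry. The helper names `lock_segment` and `run_business`, the random token, and the polling interval are hypothetical. The key names `actionkey` and `datakey` come from the text. The read-check-write sequence of S402 to S404 is only safe because every node performs it while holding the operation lock.

```python
import time
import uuid


class FakeRedis:
    """In-memory stand-in for a few Redis commands; not a real Redis client."""

    def __init__(self) -> None:
        self.strings: dict = {}
        self.sets: dict = {}

    def set(self, name, value, nx=False):
        if nx and name in self.strings:
            return None                  # like redis-py: None when NX fails
        self.strings[name] = value
        return True

    def delete(self, name):
        return 1 if self.strings.pop(name, None) is not None else 0

    def smembers(self, name):
        return set(self.sets.get(name, set()))

    def sadd(self, name, *values):
        self.sets.setdefault(name, set()).update(values)

    def srem(self, name, *values):
        self.sets.get(name, set()).difference_update(values)


ACTION_KEY = "actionkey"  # operation lock key (name from the text)
DATA_KEY = "datakey"      # unique identifier of the data segment lock


def lock_segment(r, wait_lock_set, op_timeout=1.0, seg_wait=1.0, poll=0.01):
    token = str(uuid.uuid4())
    deadline = time.monotonic() + op_timeout
    while not r.set(ACTION_KEY, token, nx=True):      # S401: apply, retry
        if time.monotonic() >= deadline:
            return False                              # operation lock timeout
        time.sleep(poll)
    try:
        deadline = time.monotonic() + seg_wait
        while r.smembers(DATA_KEY) & wait_lock_set:   # S402, S403, S408
            if time.monotonic() >= deadline:
                return False                          # S410: give up, requeue
            time.sleep(poll)                          # S409: wait for release
        r.sadd(DATA_KEY, *wait_lock_set)              # S404: write = lock
        return True
    finally:
        r.delete(ACTION_KEY)                          # S405 (and S410)


def run_business(r, wait_lock_set, operation):
    if not lock_segment(r, wait_lock_set):
        return False
    try:
        operation(sorted(wait_lock_set))              # S406, e.g. a DB import
    finally:
        r.srem(DATA_KEY, *wait_lock_set)              # S407: release
    return True
```

Against a real Redis server one would normally also give the operation lock an expiry (for example `SET actionkey <token> NX PX <ms>`) so that a crashed holder cannot block the cluster indefinitely. One would also check the token before deleting `actionkey`, so that a node never deletes a lock it no longer owns. The text itself only specifies deleting `actionkey`. With redis-py, set members come back as bytes unless the client is created with `decode_responses=True`.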

Thus, once the cluster service node has acquired the operation lock, if the data set locked by the data segment lock does not intersect the node's data set to be locked, the node locks the data set to be locked by writing it into the locked data set and can then perform the business operation. If the two sets intersect, the node enters a waiting state; once the data segment lock locking the intersection is released, it applies for the data segment lock and proceeds with the business operation. If that data segment lock is not released within the preset waiting time, the node releases the operation lock so that other service nodes waiting for it can apply for it, and then joins the queue waiting for the operation lock so that it can apply for it again.

The method for implementing the distributed lock according to the embodiment of the present disclosure is described below with a server as the execution subject. FIG. 5 is a flowchart illustrating a method of implementing a distributed lock according to yet another exemplary embodiment. As shown in FIG. 5, the method is applied to a server and includes the following steps:

step S510, receiving a request for the operation lock sent by a cluster service node;

step S520, if the operation lock is not held by another service node, allocating the operation lock to the cluster service node;

step S530, if the operation lock is held by another service node, placing the cluster service node in a waiting state and allocating the operation lock to it after the service node holding the operation lock releases it;

step S540, after the operation lock is allocated to the cluster service node, if the data set locked by the data segment lock does not intersect the data set to be locked, generating a data segment lock corresponding to the data set to be locked.

As explained above, the server can be regarded as a lock manager for distributed locks, that is, it manages both the operation lock and the data segment locks. The server may receive requests for the operation lock from every cluster service node; for ease of understanding, a first service node is taken as an example. If the operation lock is not held by another service node (any node other than the first service node), the server may allocate the operation lock to the first service node, i.e., the first service node acquires the operation lock. If the operation lock is held by another service node, the server may place the first service node in a waiting state and allocate the operation lock to it once the other service node releases it. If multiple service nodes are waiting for the operation lock, it may be allocated to them in the order in which they began waiting.
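The server-side behaviour of steps S510 to S540, including first-come, first-served allocation to waiting nodes, can be modelled as a small single-threaded lock manager. This is a minimal sketch, not the disclosed server. The class `OperationLockServer`, its method names, and the `seglock-N` identifiers are hypothetical. Writing the items into the locked data set, which the text assigns to the cluster service node, is not modelled here.

```python
from collections import deque
from itertools import count


class OperationLockServer:
    """Hypothetical lock-manager sketch of steps S510-S540 (single-threaded)."""

    def __init__(self) -> None:
        self.holder = None          # node currently holding the operation lock
        self.waiting = deque()      # nodes waiting, in arrival order
        self.locked_items = set()   # data set locked by all data segment locks
        self._ids = count(1)

    def request_operation_lock(self, node: str) -> bool:
        # S510: request received. S520: grant if free. S530: otherwise queue.
        if self.holder is None:
            self.holder = node
            return True
        self.waiting.append(node)
        return False

    def release_operation_lock(self, node: str):
        # Hand the lock to the longest-waiting node, if any; return new holder.
        if self.holder != node:
            raise RuntimeError(f"{node} does not hold the operation lock")
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder

    def generate_segment_lock(self, node: str, wait_lock_set: set):
        # S540: only the operation-lock holder may ask, and only for a set
        # that does not intersect the already locked data.
        if self.holder != node:
            raise RuntimeError(f"{node} does not hold the operation lock")
        if self.locked_items & wait_lock_set:
            return None
        return f"seglock-{next(self._ids)}"  # hypothetical unique identifier


server = OperationLockServer()
print(server.request_operation_lock("node1"))               # True
print(server.request_operation_lock("node2"))               # False: queued
print(server.request_operation_lock("node3"))               # False: behind node2
print(server.generate_segment_lock("node1", {"a1", "a2"}))  # seglock-1
print(server.release_operation_lock("node1"))               # node2
print(server.release_operation_lock("node2"))               # node3
```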

After the server allocates the operation lock to the cluster service node, the cluster service node may determine whether the data set locked by the data segment lock intersects the data set to be locked; this determination has been described above and is not repeated here. If there is no intersection, the server may generate a data segment lock corresponding to the data set to be locked. The cluster service node may then: determine the data segment lock corresponding to the data set to be locked; write the data set to be locked into the data set locked by the data segment lock according to the unique identifier of that data segment lock; release the operation lock, thereby obtaining the data segment lock corresponding to the data set to be locked (i.e., the cluster service node has successfully acquired the data segment lock); and execute the business operation, after which it removes the data set to be locked from the data set locked by the data segment lock and releases the corresponding data segment lock.

In the method for implementing a distributed lock provided by the embodiment of the present disclosure, the server manages the operation lock and the data segment locks. Specifically, when the operation lock is not occupied, the server allocates it to a service node. When that service node determines that the data set locked by the data segment lock does not intersect its data set to be locked, the server generates a data segment lock corresponding to the data set to be locked, which the service node can then use to lock that data and perform its business operation.

FIG. 6 is a diagram of the data interaction between service nodes and a server. Referring to FIG. 6, a first service node and a second service node each send a request for the operation lock to the server. The first service node succeeds, i.e., the server allocates the operation lock to the first service node. The first service node obtains the data set locked by the data segment lock and determines whether it intersects the data set to be locked. If there is no intersection, the server generates a data segment lock corresponding to the data set to be locked, and the first service node obtains it. Using the unique identifier of the generated data segment lock, the first service node writes the data set to be locked into the data set locked by the data segment lock, thereby locking it. The first service node then releases the operation lock, at which point the data segment lock is deemed acquired. The first service node performs the business operation and, once it is completed, removes the data set to be locked from the data set locked by the data segment lock, releasing the corresponding data segment lock. FIG. 6 also shows that when the second service node fails to obtain the operation lock, it enters a waiting state and applies for the operation lock again after the first service node releases it.

FIG. 6 shows only the case in which the first service node finds no intersection between the data set locked by the data segment lock and the data set to be locked; it does not show the case in which they intersect. In that case, the first service node enters a waiting state until the data segment lock locking the intersection is released. If that lock is released within the preset waiting time, the first service node applies for the data segment lock and then performs the business operation. If it is not released within the preset waiting time, the first service node may release the operation lock so that the second service node, which is waiting for it, can apply for it. After releasing the operation lock, the first service node may join the queue waiting for the operation lock and apply for it again.

FIG. 7 is a block diagram illustrating an apparatus for implementing a distributed lock according to an exemplary embodiment. The apparatus 700 shown in FIG. 7 is applied to a cluster service node. Referring to FIG. 7, the apparatus includes a lock application module 710, a data acquisition module 720, a writing module 730, a lock release module 740, and a lock acquisition module 750.

The lock application module 710 is configured to send a request for the operation lock to the server.

The data acquisition module 720 is configured to acquire the data set locked by the data segment lock if the server allocates the operation lock to the cluster service node.

The writing module 730 is configured to write the data set to be locked into the data set locked by the data segment lock if the data set locked by the data segment lock does not intersect the data set to be locked.

The lock release module 740 is configured to release the operation lock.

The lock acquisition module 750 is configured to acquire the data segment lock corresponding to the data set to be locked.

In an exemplary embodiment, the writing module 730 is further configured to: determine the data segment lock corresponding to the data set to be locked; and write the data set to be locked into the data set locked by the data segment lock according to the unique identifier of the data segment lock corresponding to the data set to be locked.

In an exemplary embodiment, the apparatus 700 shown in FIG. 7 may further include an execution module 760 configured to execute the business operation. In this case, the lock release module 740 is configured to remove, after the business operation is completed, the data set to be locked from the data set locked by the data segment lock, and to release the data segment lock corresponding to the data set to be locked.

In an exemplary embodiment, the lock application module 710 is further configured to: enter a waiting state if the data set locked by the data segment lock intersects the data set to be locked; and apply for the data segment lock after the data segment lock locking the intersection is released.

In an exemplary embodiment, the lock release module 740 is further configured to release the operation lock if the data segment lock locking the intersection is not released within the preset waiting time.

In an exemplary embodiment, the lock application module 710 is further configured to: enter a waiting state if the server does not allocate the operation lock to the cluster service node; and apply for the operation lock after the service node holding the operation lock releases it.

With regard to the apparatus in the above-described embodiment, the specific manner in which each module performs the operation has been described in detail in the embodiment related to the method, and will not be elaborated here.

FIG. 8 is a block diagram illustrating an apparatus for implementing a distributed lock according to another exemplary embodiment. The apparatus 800 shown in FIG. 8 is applied to a server. Referring to FIG. 8, the apparatus includes a receiving module 810, an assignment module 820, and a generating module 830.

The receiving module 810 is configured to receive a request for the operation lock sent by a cluster service node.

The assignment module 820 is configured to allocate the operation lock to the cluster service node if the operation lock is not held by another service node; and, if the operation lock is held by another service node, to place the cluster service node in a waiting state and allocate the operation lock to it after the service node holding the operation lock releases it.

The generating module 830 is configured to generate, after the operation lock is allocated to the cluster service node, a data segment lock corresponding to the data set to be locked if the data set locked by the data segment lock does not intersect the data set to be locked.

With regard to the apparatus in the above-described embodiment, the specific manner in which each module performs the operation has been described in detail in the embodiment related to the method, and will not be elaborated here.

FIG. 9 is a block diagram illustrating the architecture of a distributed lock implementation apparatus according to an exemplary embodiment.

An electronic device 900 according to this embodiment of the invention is described below with reference to FIG. 9. The electronic device 900 shown in FIG. 9 is only an example and should not impose any limitation on the functions or scope of use of the embodiments of the present invention.

As shown in FIG. 9, the electronic device 900 takes the form of a general-purpose computing device. Components of the electronic device 900 may include, but are not limited to: at least one processing unit 910, at least one storage unit 920, and a bus 930 connecting the various system components, including the storage unit 920 and the processing unit 910.

The storage unit stores program code executable by the processing unit 910, so that the processing unit 910 performs the steps according to the various exemplary embodiments of the present invention described in the "exemplary method" section of this specification. For example, the processing unit 910 may execute the steps shown in FIG. 2: step S210, sending a request for the operation lock to the server; step S220, acquiring the data set locked by the data segment lock if the server allocates the operation lock to the cluster service node; step S230, writing the data set to be locked into the data set locked by the data segment lock if the two data sets do not intersect; step S240, releasing the operation lock; and step S250, acquiring the data segment lock corresponding to the data set to be locked. As another example, the processing unit 910 may execute the steps shown in FIG. 5: step S510, receiving a request for the operation lock sent by a cluster service node; step S520, allocating the operation lock to the cluster service node if it is not held by another service node; step S530, if the operation lock is held by another service node, placing the cluster service node in a waiting state and allocating the operation lock to it after the holding service node releases it; and step S540, after the operation lock is allocated to the cluster service node, generating a data segment lock corresponding to the data set to be locked if the data set locked by the data segment lock does not intersect the data set to be locked.

The storage unit 920 may include a readable medium in the form of a volatile storage unit, such as a random access memory unit (RAM) 9201 and/or a cache memory unit 9202, and may further include a read only memory unit (ROM) 9203.

The electronic device 900 may also communicate with one or more external devices 1000 (e.g., a keyboard, a pointing device, a Bluetooth device, etc.), with one or more devices that enable a user to interact with the electronic device 900, and/or with any device (e.g., a router, a modem, etc.) that enables the electronic device 900 to communicate with one or more other computing devices. Such communication may occur via an input/output (I/O) interface 950. The electronic device 900 may also communicate with one or more networks (e.g., a Local Area Network (LAN), a Wide Area Network (WAN), and/or a public network such as the Internet) via the network adapter 990. As shown, the network adapter 940 communicates with the other modules of the electronic device 900 over the bus 930. It should be appreciated that, although not shown, other hardware and/or software modules may be used in conjunction with the electronic device 900, including but not limited to: microcode, device drivers, redundant processing units, external disk drive arrays, RAID systems, tape drives, and data backup storage systems.

From the above description of the embodiments, those skilled in the art will readily understand that the exemplary embodiments described herein may be implemented by software, or by software in combination with the necessary hardware. Therefore, the technical solution according to the embodiments of the present disclosure may be embodied in the form of a software product, which may be stored in a non-volatile storage medium (such as a CD-ROM, a USB flash drive, or a removable hard disk) or on a network, and which includes several instructions that cause a computing device (such as a personal computer, a server, a terminal device, or a network device) to execute the method according to the embodiments of the present disclosure.

In an exemplary embodiment of the present disclosure, there is also provided a computer-readable storage medium having stored thereon a program product capable of implementing the above-described method of the present specification. In some possible embodiments, aspects of the invention may also be implemented in the form of a program product comprising program code means for causing a terminal device to carry out the steps according to various exemplary embodiments of the invention described in the above section "exemplary methods" of the present description, when said program product is run on the terminal device.

A program product for implementing the above method may take the form of a portable compact disc read-only memory (CD-ROM) containing program code and may run on a terminal device such as a personal computer. However, the program product of the present invention is not limited thereto; in this document, a readable storage medium may be any tangible medium that contains or stores a program for use by or in connection with an instruction execution system, apparatus, or device.

The program product may employ any combination of one or more readable media. The readable medium may be a readable signal medium or a readable storage medium. A readable storage medium may be, for example, but not limited to, an electronic, magnetic, optical, electromagnetic, infrared, or semiconductor system, apparatus, or device, or any combination of the foregoing. More specific examples (a non-exhaustive list) of the readable storage medium include: an electrical connection having one or more wires, a portable disk, a hard disk, a Random Access Memory (RAM), a read-only memory (ROM), an erasable programmable read-only memory (EPROM or flash memory), an optical fiber, a portable compact disc read-only memory (CD-ROM), an optical storage device, a magnetic storage device, or any suitable combination of the foregoing.

A computer readable signal medium may include a propagated data signal with readable program code embodied therein, for example, in baseband or as part of a carrier wave. Such a propagated data signal may take many forms, including, but not limited to, electro-magnetic, optical, or any suitable combination thereof. A readable signal medium may also be any readable medium that is not a readable storage medium and that can communicate, propagate, or transport a program for use by or in connection with an instruction execution system, apparatus, or device.

Program code embodied on a readable medium may be transmitted using any appropriate medium, including but not limited to wireless, wireline, optical fiber cable, RF, etc., or any suitable combination of the foregoing.

Program code for carrying out operations for aspects of the present invention may be written in any combination of one or more programming languages, including object-oriented programming languages such as Java and C++ and conventional procedural programming languages such as the "C" programming language or similar languages. The program code may execute entirely on the user's computing device, partly on the user's device as a stand-alone software package, partly on the user's computing device and partly on a remote computing device, or entirely on the remote computing device or server. In the case of a remote computing device, the remote computing device may be connected to the user's computing device through any kind of network, including a Local Area Network (LAN) or a Wide Area Network (WAN), or may be connected to an external computing device (e.g., through the Internet using an Internet service provider).

It should be noted that although several modules or units of the device for performing actions are mentioned in the above detailed description, this division is not mandatory. Indeed, according to embodiments of the present disclosure, the features and functions of two or more modules or units described above may be embodied in one module or unit. Conversely, the features and functions of one module or unit described above may be further divided among a plurality of modules or units.

Moreover, although the steps of the methods of the present disclosure are depicted in the drawings in a particular order, this does not require or imply that the steps must be performed in this particular order, or that all of the depicted steps must be performed, to achieve desirable results. Additionally or alternatively, certain steps may be omitted, multiple steps combined into one step execution, and/or one step broken into multiple step executions, etc.

Other embodiments of the disclosure will be apparent to those skilled in the art from consideration of the specification and practice of the disclosure disclosed herein. This application is intended to cover any variations, uses, or adaptations of the disclosure following, in general, the principles of the disclosure and including such departures from the present disclosure as come within known or customary practice within the art to which the disclosure pertains. It is intended that the specification and examples be considered as exemplary only, with a true scope and spirit of the disclosure being indicated by the following claims.

It will be understood that the present disclosure is not limited to the precise arrangements described above and shown in the drawings and that various modifications and changes may be made without departing from the scope thereof. The scope of the present disclosure is limited only by the appended claims.

Claims (14)

1. A method for implementing a distributed lock, applied to a cluster service node, characterized by comprising the following steps:

sending a request for an operation lock to a server;

if the server allocates the operation lock to the cluster service node, acquiring the data set locked by a data segment lock;

if the data set locked by the data segment lock does not intersect a data set to be locked, generating, by the server, a data segment lock corresponding to the data set to be locked, and writing the data set to be locked into the data set locked by the data segment lock, thereby locking the data set to be locked by writing data;

after the data set to be locked is written into the data set locked by the data segment lock, releasing the operation lock, wherein the operation lock is responsible for locking operations on the data segments;

acquiring the data segment lock corresponding to the data set to be locked; and

executing a business operation, removing the data set to be locked from the data set locked by the data segment lock after the business operation is completed, and releasing the data segment lock corresponding to the data set to be locked.

2. The method of claim 1, wherein writing the data set to be locked into the data set locked by the data segment lock comprises:

determining the data segment lock corresponding to the data set to be locked; and

writing the data set to be locked into the data set locked by the data segment lock according to the unique identifier of the data segment lock corresponding to the data set to be locked.

3. The method of claim 1, further comprising:

entering a waiting state if the data set locked by the data segment lock intersects the data set to be locked; and

applying for the data segment lock after the data segment lock locking the intersection is released.

4. The method of claim 3, further comprising:

releasing the operation lock if the data segment lock locking the intersection is not released within a preset waiting time.

5. The method of claim 1, further comprising:

entering a waiting state if the server does not allocate the operation lock to the cluster service node; and

applying for the operation lock after the service node holding the operation lock releases it.

6. A method for implementing a distributed lock, applied to a server, characterized by comprising the following steps:

receiving a request for an operation lock sent by a cluster service node;

if the operation lock is not held by another service node, allocating the operation lock to the cluster service node;

if the operation lock is held by another service node, placing the cluster service node in a waiting state, and allocating the operation lock to the cluster service node after the service node holding the operation lock releases it; and

after the operation lock is allocated to the cluster service node, if there is no intersection between the data set locked by the data segment lock and the data set to be locked, generating a data segment lock corresponding to the data set to be locked, so that the data set to be locked is written into the data set locked by the data segment lock through the cluster service node, then the cluster service node releases the operation lock to obtain the data segment lock corresponding to the data set to be locked, then the cluster service node executes a business operation, after the business operation is completed, the data set to be locked is removed from the data set locked by the data segment lock, and the data segment lock corresponding to the data set to be locked is released, wherein the operation lock is responsible for locking the operation on the data segments.

7. An implementation apparatus of a distributed lock, applied to a cluster service node, includes:

the lock application module is configured to send a request for applying an operation lock to a server;

the data acquisition module is configured to acquire a data set locked by a data segmentation lock if the server allocates an operation lock to the cluster service node;

a writing module configured to, if there is no intersection between the data set locked by the data segment lock and the data set to be locked, have the server generate a data segment lock corresponding to the data set to be locked and write the data set to be locked into the data set locked by the data segment lock, thereby locking the data set to be locked by writing data;

an unlocking module configured to release the operation lock after the data set to be locked has been written into the data set locked by the data segment lock, wherein the operation lock is responsible for locking operations on data segments;

a lock acquisition module configured to acquire the data segment lock corresponding to the data set to be locked;

an execution module configured to execute a business operation; and a lock release module configured to, after the business operation is completed, remove the data set to be locked from the data set locked by the data segment lock and release the data segment lock corresponding to the data set to be locked.
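On the node side, the modules of claim 7 amount to a short critical section under the operation lock, followed by the business operation under the segment lock alone. The sketch below assumes an in-process simulation: a `threading.Lock` stands in for the server-side operation lock, a shared dictionary stands in for the locked-data registry, and each cluster service node is a thread. The function `run_node` is hypothetical, and the waiting behaviour of claims 9 and 10 is deliberately left out (a conflicting node simply returns `False`). Serializing the removal step with the same lock is an assumption, not something the claim states.

```python
import threading
from itertools import count

op_lock = threading.Lock()   # stands in for the server-side operation lock
locked_sets = {}             # registry: segment-lock id -> frozenset of data keys
_ids = count(1)              # source of segment-lock ids (used under op_lock)


def run_node(to_lock, business_op):
    """One cluster service node's pass through the module sequence.

    Returns False without running `business_op` if the data set intersects a
    locked one (the waiting behaviour of claims 9 and 10 is omitted here)."""
    to_lock = frozenset(to_lock)
    with op_lock:                                    # lock application module
        held = list(locked_sets.values())            # data acquisition module
        if any(to_lock & s for s in held):
            return False
        seg_id = next(_ids)
        locked_sets[seg_id] = to_lock                # writing module: write = lock
    # Leaving the `with` block is the unlocking module: other nodes may now
    # lock disjoint data sets while this node works under its segment lock.
    try:
        business_op(to_lock)                         # execution module
    finally:
        with op_lock:   # assumption: registry removal is serialised by op_lock too
            del locked_sets[seg_id]                  # lock release module
    return True
```

Because the operation lock covers only the check-and-write, two nodes with disjoint data sets can be inside `business_op` at the same time, which is the concurrency the data segment lock is meant to provide.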

8. The apparatus of claim 7, wherein the writing module is configured to:

determine the data segment lock corresponding to the data set to be locked; and

write the data set to be locked into the data set locked by the data segment lock according to the unique identifier of the data segment lock corresponding to the data set to be locked.
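Claim 8 has the node write its data set "according to the unique identifier" of its segment lock. One plausible reading, shown here purely as an assumption, is that the registry is keyed by that identifier, so the same identifier later selects exactly the entry to remove. The sketch uses `uuid.uuid4()` to generate identifiers; the `SegmentRegistry` class and its methods are hypothetical.

```python
import uuid


class SegmentRegistry:
    """Hypothetical registry of the data sets locked by data segment locks."""

    def __init__(self):
        self._by_id = {}   # unique segment-lock id -> frozenset of data keys

    @staticmethod
    def new_lock_id():
        """Determine a segment lock for a data set; here, a random UUID string."""
        return str(uuid.uuid4())

    def write(self, lock_id, to_lock):
        """Write the data set under its segment lock's unique identifier."""
        if lock_id in self._by_id:
            raise KeyError(f"segment lock {lock_id} is already registered")
        self._by_id[lock_id] = frozenset(to_lock)

    def remove(self, lock_id):
        """Remove exactly the entry this lock wrote; other entries are untouched."""
        return self._by_id.pop(lock_id)

    def locked_keys(self):
        """Union of all data keys currently locked by any segment lock."""
        return frozenset().union(*self._by_id.values())
```

Keying by identifier means release never has to search by content, and two locks over equal data sets at different times cannot be confused.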

9. The apparatus of claim 7, wherein the lock application module is configured to:

enter a waiting state if the data set locked by the data segment lock intersects the data set to be locked; and

apply for the data segment lock after the data segment lock locking the intersection is released.

10. The apparatus of claim 9, wherein the lock release module is configured to:

release the operation lock if the data segment lock locking the intersection is not released within a preset waiting time.
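Claims 9 and 10 together describe a bounded wait on an intersecting segment lock. The claims do not say outright whether the operation lock is held during the wait, but releasing it on timeout (claim 10) suggests it is, so this sketch holds it. That in turn means releasing a segment lock must not require the operation lock, or the holder could never release while a waiter blocks. The sketch assumes an in-process simulation with a `threading.Condition` signalling releases; `acquire_segment`, `release_segment`, and the `wait_seconds` parameter are hypothetical names.

```python
import threading

op_lock = threading.Lock()       # stands in for the operation lock
cond = threading.Condition()     # guards `locked` and signals segment releases
locked = {}                      # segment-lock id -> frozenset of locked data keys


def acquire_segment(seg_id, to_lock, wait_seconds):
    """Hold the operation lock while waiting (claim 9) up to `wait_seconds`
    for every intersecting segment lock to be released, then register the set.
    On timeout, give up the operation lock and return False (claim 10)."""
    to_lock = frozenset(to_lock)
    with op_lock:
        with cond:
            free = cond.wait_for(
                lambda: not any(to_lock & held for held in locked.values()),
                timeout=wait_seconds,
            )
            if not free:
                return False     # exiting `with op_lock` releases the operation lock
            locked[seg_id] = to_lock
    return True


def release_segment(seg_id):
    """Remove a segment lock's data set and wake nodes waiting on an intersection.
    Deliberately does not take op_lock, which a waiting node may be holding."""
    with cond:
        del locked[seg_id]
        cond.notify_all()
```

The preset waiting time bounds how long one conflicting node can block every other node from applying for the operation lock.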

11. The apparatus of claim 7, wherein the lock application module is configured to:

enter a waiting state if the server does not allocate the operation lock to the cluster service node; and

apply for the operation lock after the service node holding the operation lock releases it.

12. An apparatus for implementing a distributed lock, applied to a server, characterized by comprising:

a receiving module configured to receive a request, sent by a cluster service node, to apply for an operation lock;

an allocation module configured to allocate the operation lock to the cluster service node if the operation lock is not held by another service node, and, if the operation lock is held by another service node, to place the cluster service node in a waiting state and allocate the operation lock to it after the service node holding the operation lock releases it; and

a generation module configured to, after the operation lock is allocated to the cluster service node and if there is no intersection between the data set locked by the data segment lock and the data set to be locked, generate a data segment lock corresponding to the data set to be locked, so that the cluster service node writes the data set to be locked into the data set locked by the data segment lock, releases the operation lock, obtains the data segment lock corresponding to the data set to be locked, and executes a business operation, and, after the business operation is completed, removes the data set to be locked from the data set locked by the data segment lock and releases the data segment lock corresponding to the data set to be locked; wherein the operation lock is responsible for locking operations on data segments.

13. An electronic device, comprising:

a processor;

a memory for storing instructions executable by the processor;

wherein the processor is configured to execute the instructions to implement the method of implementing a distributed lock according to any one of claims 1 to 5 or the method of implementing a distributed lock according to claim 6.

14. A computer-readable storage medium storing instructions that, when executed by a processor of an electronic device, enable the electronic device to perform the method of implementing a distributed lock according to any one of claims 1 to 5 or the method of implementing a distributed lock according to claim 6.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111519652.2A (CN113918530B) | 2021-12-14 | 2021-12-14 | Method and device for realizing distributed lock, electronic equipment and medium |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202111519652.2A (CN113918530B) | 2021-12-14 | 2021-12-14 | Method and device for realizing distributed lock, electronic equipment and medium |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN113918530A | 2022-01-11 |
| CN113918530B | 2022-05-13 |

Family ID: 79249144

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202111519652.2A (CN113918530B), Active | Method and device for realizing distributed lock, electronic equipment and medium | 2021-12-14 | 2021-12-14 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN113918530B |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN104391935A * | 2014-11-21 | 2015-03-04 | 华为技术有限公司 | Implementation method and device of range lock |
| CN106202271A * | 2016-06-30 | 2016-12-07 | 携程计算机技术(上海)有限公司 | The read method of the product database of OTA |
| CN109766324A * | 2018-12-14 | 2019-05-17 | 东软集团股份有限公司 | Control method, device, readable storage medium storing program for executing and the electronic equipment of distributed lock |
| CN110888858A * | 2019-10-29 | 2020-03-17 | 北京奇艺世纪科技有限公司 | Database operation method and device, storage medium and electronic device |

Family Cites Families (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US9887937B2 * | 2014-07-15 | 2018-02-06 | Cohesity, Inc. | Distributed fair allocation of shared resources to constituents of a cluster |
| CN105224255B * | 2015-10-14 | 2018-10-30 | 浪潮(北京)电子信息产业有限公司 | A kind of storage file management method and device |

- 2021-12-14: CN application CN202111519652.2A (CN113918530B), Active


Also Published As

| Publication number | Publication date |
|---|---|
| CN113918530A | 2022-01-11 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| US12003571B2 | | Client-directed placement of remotely-configured service instances |
| CN109274731B | | Method and device for deploying and calling web service based on multi-tenant technology |
| US10033816B2 | | Workflow service using state transfer |
| US9270703B1 | | Enhanced control-plane security for network-accessible services |
| US8949430B2 | | Clustered computer environment partition resolution |
| US10691502B2 | | Task queuing and dispatching mechanisms in a computational device |
| CN112153167B | | Internet interconnection protocol management method, device, electronic equipment and storage medium |
| US20190205168A1 | | Grouping of tasks for distribution among processing entities |
| US10817327B2 | | Network-accessible volume creation and leasing |
| CN103514298A | | Method for achieving file lock and metadata server |
| US10970132B2 | | Deadlock resolution between distributed processes |
| CN108563509A | | Data query implementation method, device, medium and electronic equipment |
| CN112182526A | | Community management method and device, electronic equipment and storage medium |
| CN112688799B | | Redis cluster-based client number distribution method and distribution device |
| CN112348302B | | Scalable workflow engine with stateless coordinator |
| CN114489954A | | Tenant creation method based on virtualization platform, tenant access method and equipment |
| CN113918530B | | Method and device for realizing distributed lock, electronic equipment and medium |
| CN111124291B | | Data storage and processing method, device, and electronic equipment of distributed storage system |
| US12158801B2 | | Method of responding to operation, electronic device, and storage medium |
| CN110941496A | | Distributed lock implementation method and device, computer equipment and storage medium |
| US20230114321A1 | | Cloud Data Ingestion System |
| US10963303B2 | | Independent storage and processing of data with centralized event control |
| US11176121B2 | | Global transaction serialization |
| CN115220908A | | Resource scheduling method, device, electronic equipment and storage medium |
| US10831563B2 | | Deadlock resolution between distributed processes using process and aggregated information |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |
| | GR01 | Patent grant | |