CN112767252A - Image super-resolution reconstruction method based on convolutional neural network - Google Patents

Image super-resolution reconstruction method based on convolutional neural network

- Publication number

- CN112767252A (application CN202110105967.6A)

- Authority

- CN

- China

- Prior art keywords

- image

- convolution

- layer

- neural network

- convolutional neural

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T3/00—Geometric image transformations in the plane of the image

- G06T3/40—Scaling of whole images or parts thereof, e.g. expanding or contracting

- G06T3/4007—Scaling of whole images or parts thereof, e.g. expanding or contracting based on interpolation, e.g. bilinear interpolation

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/21—Design or setup of recognition systems or techniques; Extraction of features in feature space; Blind source separation

- G06F18/214—Generating training patterns; Bootstrap methods, e.g. bagging or boosting

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/045—Combinations of networks

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T3/00—Geometric image transformations in the plane of the image

- G06T3/40—Scaling of whole images or parts thereof, e.g. expanding or contracting

- G06T3/4046—Scaling of whole images or parts thereof, e.g. expanding or contracting using neural networks

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T3/00—Geometric image transformations in the plane of the image

- G06T3/40—Scaling of whole images or parts thereof, e.g. expanding or contracting

- G06T3/4053—Scaling of whole images or parts thereof, e.g. expanding or contracting based on super-resolution, i.e. the output image resolution being higher than the sensor resolution

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Evolutionary Computation (AREA)

- Artificial Intelligence (AREA)

- Data Mining & Analysis (AREA)

- Life Sciences & Earth Sciences (AREA)

- General Engineering & Computer Science (AREA)

- General Health & Medical Sciences (AREA)

- Software Systems (AREA)

- Molecular Biology (AREA)

- Computing Systems (AREA)

- Biophysics (AREA)

- Biomedical Technology (AREA)

- Mathematical Physics (AREA)

- Computational Linguistics (AREA)

- Health & Medical Sciences (AREA)

- Bioinformatics & Cheminformatics (AREA)

- Bioinformatics & Computational Biology (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Evolutionary Biology (AREA)

- Image Analysis (AREA)

Abstract

The invention belongs to the field of image technology and specifically relates to an image super-resolution reconstruction method based on a convolutional neural network. Exploiting the differences between the receptive fields of different convolution kernels, the method improves the convolutional neural network with special-shaped (non-square) convolution kernels, so that the network can extract rich image features with relatively few parameters. In addition, the tanh function is used as the activation function and skip connections make full use of the feature maps from multiple layers, which improves the quality of the reconstructed image. The method also applies lightweight network design principles to the convolutional neural network, reducing the parameter count and computation of the whole network at the cost of a slight drop in reconstruction quality. The method improves reconstructed image quality without a large increase in parameters relative to traditional networks, and the reconstructed images look noticeably better to the human eye than those produced by traditional methods.

Description

Technical field

The invention belongs to the technical field of image processing and specifically relates to an image super-resolution reconstruction method based on a convolutional neural network.

Background

Image super-resolution reconstruction is an image processing technique that reconstructs a high-resolution image from a low-resolution one. As display devices improve, users want higher-resolution images for a better visual experience, and higher resolution also helps practitioners in many industries carry out research. Both needs call for super-resolution reconstruction of images.

Image super-resolution reconstruction methods fall into three families: interpolation-based, fitting-based, and learning-based. Learning-based reconstruction is currently among the most popular: it offers good reconstruction quality, rich detail in the reconstructed image, and a pleasing visual result, and it is widely used in medical, satellite, and civilian applications, so improving its reconstruction quality is of real importance. Learning-based methods in turn divide into dictionary-learning methods and convolutional-neural-network methods. Because of their strong feature extraction and nonlinear mapping capabilities, convolutional-neural-network methods produce better reconstructed images than the other approaches.

Current convolutional-neural-network approaches to image super-resolution reconstruction fall mainly into the following categories:

1. Convolutional neural network

This approach applies a convolutional neural network to the super-resolution problem using three convolutional layers: a feature extraction layer, a nonlinear mapping layer, and a super-resolution reconstruction layer. It effectively improves reconstructed image quality, but because the network is shallow and makes poor use of image features, it does not fully exploit the image information, and the reconstruction can be improved further.

2. Recurrent neural network

This approach reconstructs the image with a recurrent neural network. After the feature extraction layer, the feature maps are convolved repeatedly by a recurrent convolution, and the result of each pass is fed to the final convolutional layer to extract image features. Although it changes little relative to the plain convolutional network, recurrent convolution extracts certain image features thoroughly and partly avoids the under-extraction caused by a shallow network. However, because the same convolutional layer is reused for every pass, the network cannot fully extract the different features of an image, so the reconstruction is good for feature types that recur and only mediocre for others.

3. Adversarial neural network

This approach is based on an adversarial neural network: a generative network and an adversarial network are trained together, yielding a model that can reconstruct richly textured images. However, because the adversarial network is used during training to improve perceptual appearance, the content of the reconstructed image can differ considerably from the real image. Training two networks at once also takes considerable time and resources and places very high demands on hardware, which limits its practicality.

Summary of the invention

In view of the above problems, the purpose of the invention is to propose an image super-resolution reconstruction method based on an improved convolutional neural network, the Horizontal and Vertical Super-Resolution (HVSR) network, that achieves good reconstruction performance with relatively few network parameters.

The technical solution of the invention is an image super-resolution reconstruction method based on a convolutional neural network which, as shown in Fig. 1, comprises the following steps:

S1. Select n images as the training set {P_H1, P_H2, ..., P_Hn}, where the subscript H indicates a high-resolution image;

S2. Preprocess the training set: randomly extract a 100×100 pixel region from each training image, padding with zeros wherever the image is smaller than 100 pixels, then downsample these regions to obtain the corresponding low-resolution image set {P_L1, P_L2, ..., P_Ln};

S3. Construct the convolutional neural network. Defining the direction from the network's input to its output as the vertical direction:

Along the vertical direction, the network comprises, in order, a first convolutional layer, a second convolutional layer, a third convolutional layer, a fourth convolutional layer, a fifth convolutional layer, a sixth convolutional layer, a deconvolution layer, and a seventh convolutional layer, where:

The input of the first convolutional layer is the low-resolution image set, and the feature maps it outputs are divided into two groups of equal size;

The second convolutional layer has two kinds of convolution kernels, a×1 and 1×a. Each kind takes one of the two groups output by the first convolutional layer as its input, and the output of each kind is again divided into two groups of equal size, so the second convolutional layer outputs four groups of feature maps;

The third convolutional layer has four convolution kernels which, along the horizontal direction, alternate between a×1 and 1×a. The first a×1 and 1×a pair takes as input the two groups of feature maps output by the a×1 kernel of the second convolutional layer, and the second pair takes the two groups output by the 1×a kernel of the second convolutional layer. The output of each kernel is again divided into two groups of equal size, so the third convolutional layer outputs eight groups of feature maps;

The fourth convolutional layer has eight convolution kernels which, along the horizontal direction, alternate between a×1 and 1×a. Each kernel takes its input in the same way as the kernels of the third convolutional layer, and each kernel outputs one group of feature maps;

The fifth convolutional layer uses 1×1 convolution kernels. Its input is all of the feature maps output by the second, third, and fourth convolutional layers, and the number of feature maps it outputs is half the number output by the first layer;

Every convolution in the network is followed by the tanh activation function;

S4. Train the constructed network with the residual image as the target. Enlarge each low-resolution image by bilinear interpolation to the size of the corresponding training image, giving the enlarged low-resolution image set {P_IL1, P_IL2, ..., P_ILn}. Take the pixel-wise difference between each enlarged low-resolution image and the corresponding extracted pixel region to obtain the residual image set {P_R1, P_R2, ..., P_Rn}. Feed each low-resolution image P_Li into the network, compare the output image P_Oi with the corresponding residual image P_Ri to obtain the mean squared error, and use the mean squared error as the loss function to update the network parameters, yielding the trained convolutional neural network;

S5. Use the trained convolutional neural network to perform super-resolution reconstruction of an image, as shown in Fig. 2, specifically:

S51. Take the image P_L to be reconstructed as the input image and feed it to the convolutional neural network to obtain the output image P_O;

S52. Enlarge P_L by bicubic interpolation to the size of P_O to obtain the enlarged image P_IL;

S53. Add the pixel values of P_IL and P_O at corresponding pixels to obtain the super-resolution image P_S.
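
The residual scheme of steps S4 and S5 can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names are hypothetical, the residual is taken as the high-resolution patch minus the enlarged patch (the sign that makes the addition in S53 recover the target), and bilinear interpolation is used for both training and reconstruction to keep the sketch short, whereas S52 specifies bicubic interpolation.

```python
import math

def bilinear_upscale(img, scale):
    """Upscale a 2-D list of floats by an integer factor (bilinear interpolation)."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(h * scale):
        # Map the output pixel centre back to source coordinates, clamped to the image.
        y = min(max((i + 0.5) / scale - 0.5, 0.0), h - 1)
        y0 = int(math.floor(y))
        y1 = min(y0 + 1, h - 1)
        fy = y - y0
        row = []
        for j in range(w * scale):
            x = min(max((j + 0.5) / scale - 0.5, 0.0), w - 1)
            x0 = int(math.floor(x))
            x1 = min(x0 + 1, w - 1)
            fx = x - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out

def residual_target(high_res, low_res, scale):
    """S4 (assumed sign): high-resolution patch minus the enlarged low-resolution patch."""
    enlarged = bilinear_upscale(low_res, scale)
    return [[hr - up for hr, up in zip(hr_row, up_row)]
            for hr_row, up_row in zip(high_res, enlarged)]

def reconstruct(low_res, predicted_residual, scale):
    """S51 to S53: add the network's residual output to the enlarged input."""
    enlarged = bilinear_upscale(low_res, scale)
    return [[up + r for up, r in zip(up_row, r_row)]
            for up_row, r_row in zip(enlarged, predicted_residual)]
```

If the network predicted the residual perfectly, `reconstruct` would return the high-resolution patch exactly, which is why the network only has to learn the detail that interpolation misses.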

Further, the first convolutional layer uses 3×3 convolution kernels, the second convolutional layer uses 1×5 and 5×1 convolution kernels, the sixth convolutional layer uses 1×1 convolution kernels, and the seventh convolutional layer uses 3×3 convolution kernels.
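
The branching of layers 2 to 4 described in step S3 can be written out as a small table of kernels. The sketch below is an illustration only: the function name and the tuple layout are hypothetical, kernel shapes are given as (height, width), and channel counts are omitted because the text does not state them.

```python
def hvsr_branches(a=5):
    """Return {layer: [(kernel_shape, parent_kernel_index), ...]} for layers 2 to 4.

    Kernels alternate between a x 1 and 1 x a along the horizontal direction.
    Kernel k reads one of the two groups produced by kernel k // 2 of the
    previous layer (for layer 2, the parent is the single first layer, index 0).
    """
    shapes = [(a, 1), (1, a)]
    layers = {}
    for layer, n_kernels in ((2, 2), (3, 4), (4, 8)):
        layers[layer] = [(shapes[k % 2], k // 2) for k in range(n_kernels)]
    return layers

if __name__ == "__main__":
    for layer, kernels in hvsr_branches().items():
        print(layer, kernels)
```

Each kernel in layers 2 and 3 feeds exactly two kernels of the next layer, one of each shape, which is how the kernel count doubles from two to four to eight.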

The beneficial effects of the invention are:

(1) Using 1×5 and 5×1 convolution kernels instead of the traditional n×n kernels gives a richer variety of receptive fields. Each such kernel also has fewer parameters than the commonly used 3×3 kernel, yet the receptive field finally obtained is the same.

(2) Using 1×5 and 5×1 kernels together in a single convolutional layer ensures that both horizontal and vertical features are extracted, and combining different kernels across multiple layers produces receptive fields of different shapes, strengthening the network's ability to extract differently shaped features from an image.

(3) The invention adopts a lightweight design based on grouped convolution, reducing the network's computation without a large change in reconstruction performance and so widening the range of settings in which the network can be used.

Description of the drawings

Fig. 1 is the training flowchart of the method of the invention.

Fig. 2 is the image super-resolution reconstruction flowchart of the method of the invention.

Fig. 3 is the structure diagram of the convolutional neural network of the invention.

Fig. 4 shows the performance of different models on the test set after different numbers of training epochs.

Fig. 5 shows the super-resolution reconstruction results of different models on the same image.

Detailed description

The technical solution of the invention is described in detail below with reference to the drawings:

A convolutional neural network is defined as:

Y = a(w * X + b)

where Y is the output, X is the input, a(·) is the activation function, w is the convolution kernel, * is the convolution operation, and b is the bias term.
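
As a concrete reading of Y = a(w * X + b), the sketch below applies one kernel to a single-channel input and passes the result through tanh. It is a minimal illustration, not the patented network: the function name is hypothetical, there is no padding or stride, and, as in most deep learning frameworks, the "convolution" is computed as a cross-correlation (the kernel is not flipped).

```python
import math

def conv2d_tanh(x, w, b=0.0):
    """Single-channel 'valid' convolution of x with kernel w, plus bias b, then tanh."""
    kh, kw = len(w), len(w[0])
    out_h = len(x) - kh + 1
    out_w = len(x[0]) - kw + 1
    return [[math.tanh(sum(w[p][q] * x[i + p][j + q]
                           for p in range(kh) for q in range(kw)) + b)
             for j in range(out_w)]
            for i in range(out_h)]

if __name__ == "__main__":
    image = [[1.0] * 5 for _ in range(5)]
    horizontal = [[0.2] * 5]               # a 1 x 5 kernel
    vertical = [[0.2] for _ in range(5)]   # a 5 x 1 kernel
    print(conv2d_tanh(image, horizontal))  # 5 rows, 1 column
    print(conv2d_tanh(image, vertical))    # 1 row, 5 columns
```

A 1×5 kernel shrinks only the width of an unpadded input and a 5×1 kernel only the height, which is the directional behaviour the description relies on.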

As shown in Fig. 3, whereas traditional convolutional neural networks for super-resolution use n×n convolution kernels, the invention defines special-shaped kernels, replacing the usual kernel with two kernels of sizes 1×5 and 5×1. These kernels reach the same receptive field as a traditional kernel with fewer parameters. A single 5×5 kernel has a 5×5 receptive field and 25 parameters. Reaching a 5×5 receptive field with 3×3 kernels takes two stacked 3×3 kernels, for 18 parameters. With the 1×5 and 5×1 kernels, a 5×5 receptive field takes one 1×5 kernel and one 5×1 kernel, for only 10 parameters, far fewer than the traditional kernels require.
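
The parameter counts quoted above can be checked with a short calculation. The sketch assumes single-channel kernels applied in sequence with stride 1 and no bias terms; the function name is hypothetical.

```python
def stack_stats(kernels):
    """Return (parameter count, receptive field) for kernels applied in sequence.

    Each kernel is a (height, width) pair. With stride 1, every kernel extends
    the receptive field by (height - 1, width - 1).
    """
    params = sum(kh * kw for kh, kw in kernels)
    rf_h = 1 + sum(kh - 1 for kh, _ in kernels)
    rf_w = 1 + sum(kw - 1 for _, kw in kernels)
    return params, (rf_h, rf_w)

if __name__ == "__main__":
    for name, kernels in [("one 5x5", [(5, 5)]),
                          ("two 3x3", [(3, 3), (3, 3)]),
                          ("1x5 then 5x1", [(1, 5), (5, 1)])]:
        print(name, stack_stats(kernels))
```

All three stacks cover a 5×5 receptive field, with 25, 18, and 10 parameters respectively.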

In a traditional super-resolution network each convolutional layer uses kernels of a single shape. After several layers, the receptive field of any point in the feature map has one fixed shape, so the region of the original image from which features are drawn is rather rigid. The method of the invention places both 1×5 and 5×1 kernels in the same convolutional layer. This guarantees that both horizontal and vertical image information is extracted from the previous layer, avoiding the loss of features along one direction that occurs when only a single direction is used. Across multiple layers, receptive fields of different shapes combine into further shapes, giving the network as a whole a rich set of receptive-field shapes. Because differently shaped receptive fields differ in their ability to extract differently shaped image features, they capture the varied features of an image better than a receptive field of a single shape.
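
To make the idea of combined receptive-field shapes concrete, the sketch below enumerates every path through three successive layers in which each layer offers a 5×1 and a 1×5 kernel, and reports the receptive field each path accumulates. It is an illustration under assumptions: stride 1, shapes written as (height, width), and only layers 2 to 4 are counted (the 3×3 first layer is left out). The function name is hypothetical.

```python
from itertools import product

def path_receptive_fields(a=5, depth=3):
    """Map each path of kernel shapes to its accumulated receptive field."""
    shapes = [(a, 1), (1, a)]
    fields = {}
    for path in product(shapes, repeat=depth):
        rf_h = 1 + sum(kh - 1 for kh, _ in path)
        rf_w = 1 + sum(kw - 1 for _, kw in path)
        fields[path] = (rf_h, rf_w)
    return fields

if __name__ == "__main__":
    fields = path_receptive_fields()
    print(len(fields), "paths")
    print(sorted(set(fields.values())))
```

With a = 5 the eight paths produce four distinct shapes, from a tall 13×1 strip to a wide 1×13 strip with 9×5 and 5×9 rectangles in between, whereas a stack of identical square kernels yields a single shape.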

The network uses grouped convolution: the feature maps extracted by each layer are divided into groups, and each group is convolved by its own kernel in the next layer. For example, the feature maps extracted by the second layer are divided into two groups, which are convolved by the 1×5 and 5×1 kernels respectively. The number of kernels in each layer is unchanged, but grouping substantially reduces the computation each layer requires. When the second layer's feature maps are split into two groups, the third layer needs only 50% of the second layer's computation; when the third layer's feature maps are split into four groups, the computation falls to 25% of the second layer's, and so on. Before the later convolutions, skip connections gather all of the feature maps, and a 1×1 convolution "mixes" them and changes the number of feature maps, reducing the computation of the subsequent layers.

The tanh function is used as the activation after every convolution. In super-resolution reconstruction the required output is image information, the objects in an image are fairly smooth and continuous, and after preprocessing the target image takes values in [-1, 1]. The tanh function is given by:

tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x))

The output of tanh also lies in [-1, 1], and unlike ReLU and PReLU it has no non-differentiable point at 0. Nor does tanh set negative outputs directly to 0, which would leave the feature inactive and lose part of the image information during training. Near 0 its output changes rapidly, so small variations are captured well; far from 0 its output changes slowly and its range is bounded, which restrains the growth of large convolution results and guards against vanishing and exploding gradients. The feature maps after activation are therefore closer to the target image, which helps the subsequent super-resolution reconstruction.
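
The behaviour of tanh near and far from 0 can be checked numerically. The sketch below evaluates the function from its exponential definition and uses the standard identity that its derivative is 1 - tanh(x)^2; the function names are illustrative.

```python
import math

def tanh_from_exp(x):
    """tanh written out as (e^x - e^-x) / (e^x + e^-x)."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2

if __name__ == "__main__":
    for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
        print(x, round(tanh_from_exp(x), 4), round(tanh_grad(x), 4))
```

The slope is largest at 0 and shrinks quickly as |x| grows, while negative inputs still produce nonzero outputs rather than being clipped to 0.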

Training uses dropout: after a certain number of training epochs, a randomly chosen subset of the convolution kernels is masked and training continues; after a further period a new subset is chosen for masking; finally all kernels are combined. This prevents the network from overfitting and improves its robustness.

本发明的卷积神经网络进行了轻量化处理,具体体现为:The convolutional neural network of the present invention carries out lightweight processing, which is embodied as follows:

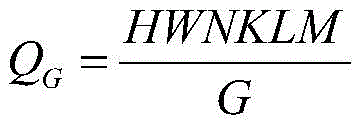

统的卷积神经网络进行图像超分辨率重建时,采用的方式为下层的卷积核对所有上一层的特征图像全部进行卷积,因此传统的卷积神经网络在每一层卷积层上所需的计算量都较大,所需的参数也较多,标准卷积的计算量如下:When the traditional convolutional neural network performs image super-resolution reconstruction, the method used is that the convolution kernel of the lower layer convolves all the feature images of the upper layer, so the traditional convolutional neural network is used in each convolutional layer. The amount of calculation required is large, and the required parameters are also more. The calculation amount of standard convolution is as follows:

Q = HWNKLM

where H×W is the spatial size of the input (H is the height, W the width), N is the number of input channels, K×L is the kernel size (K is the kernel's length, L its width), and M is the number of kernels (the number of output channels).

The invention instead uses grouped convolution: the feature maps of the previous layer are divided into groups, and each kernel of the next layer convolves only one of those groups. The computation of this convolution is:

Q = HWNKLM / G

where G is the number of groups into which the feature maps are divided. The more groups there are, the less computation the convolution needs, but the fewer previous-layer feature maps each output feature map draws on. When G reaches its maximum value N, the whole network becomes a single-channel convolutional neural network.
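
The effect of the group count on computation can be tabulated directly from Q = HWNKLM / G. In the sketch below the function name and the example sizes (a 100×100 input with 64 input and 64 output channels) are illustrative assumptions and are not taken from the patent.

```python
def conv_cost(H, W, N, K, L, M, G=1):
    """Multiply-accumulate count of a K x L convolution split into G groups.

    Each of the M kernels sees only N / G input channels, so the cost is
    H * W * (N / G) * K * L * M.
    """
    if N % G != 0 or M % G != 0:
        raise ValueError("N and M must both be divisible by G")
    return H * W * (N // G) * K * L * M

if __name__ == "__main__":
    base = conv_cost(100, 100, 64, 1, 5, 64)
    for groups in (1, 2, 4, 8):
        cost = conv_cost(100, 100, 64, 1, 5, 64, G=groups)
        print(groups, cost, cost / base)
```

Doubling the number of groups halves the cost, matching the 50% and 25% figures given for the third and fourth layers.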

As shown in Fig. 3, the method groups the extracted feature maps in layers 2 to 4 of the network. Layer 2 is divided into two groups, the feature maps extracted by the 1×5 kernel and those extracted by the 5×1 kernel, and the next layer's kernels for each group include both the 1×5 and 5×1 shapes. This preserves the variety of receptive fields so that the network can extract rich image features. Layer 3 divides its feature maps into 4 groups according to its 4 kernels, and likewise layer 4 has 8 groups. The network with grouped convolution has 24% fewer parameters than the same network without it, a substantial saving in computation.

In addition, after the grouped convolutions the invention uses skip connections to combine the feature maps from the preceding layers and mixes them with a 1×1 convolution, reducing the number of feature maps passed to the next layer and hence the computation and parameter count of the following convolution.

The effectiveness of the invention is demonstrated below with a simulation example and Figs. 4 and 5:

Training used 10,000 images from the ImageNet2012 dataset as the training set. A 100×100 pixel block was randomly selected from each image as the training region, the upscaling factor was set to 4, and the learning rate was set to 0.0001.

Fig. 4 compares the reconstruction performance of different network models on the test set after different numbers of training epochs. The horizontal axis is the number of epochs for which the model has been trained, and the vertical axis is the peak signal-to-noise ratio (PSNR), an objective measure of image reconstruction quality. As the figure shows, the network of the invention reaches good reconstruction quality after a little over ten epochs and outperforms the other networks. This example shows that the invention has strong feature extraction and super-resolution reconstruction capability and is easy to train.
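
For reference, the peak signal-to-noise ratio used on the vertical axis of Fig. 4 is conventionally computed as 10·log10(peak² / MSE). The sketch below is a plain-Python illustration; the function names are hypothetical, and the peak value of 255 assumes 8-bit images, which the text does not state.

```python
import math

def mse(a, b):
    """Mean squared error between two equally sized 2-D lists."""
    diffs = [(p - q) ** 2 for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(reference, reconstructed)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)

if __name__ == "__main__":
    ref = [[10.0, 20.0], [30.0, 40.0]]
    rec = [[12.0, 18.0], [33.0, 40.0]]
    print(round(psnr(ref, rec), 2), "dB")
```

Higher values indicate a reconstruction closer to the reference, so a curve that rises faster in Fig. 4 corresponds to a model that trains more quickly.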

Fig. 5 shows the reconstruction results of different network models. The images reconstructed by the network of the invention look somewhat better to the human eye than those of the other networks: they are less blurred and show fewer jagged reconstruction artifacts. This example shows that images reconstructed by the invention are visually pleasing.

Claims (2)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110105967.6A CN112767252B (en) | 2021-01-26 | 2021-01-26 | An image super-resolution reconstruction method based on convolutional neural network |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202110105967.6A CN112767252B (en) | 2021-01-26 | 2021-01-26 | An image super-resolution reconstruction method based on convolutional neural network |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN112767252A (en) | 2021-05-07 |
| CN112767252B (en) | 2022-08-02 |

Family

ID=75705858

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| CN202110105967.6A Expired - Fee Related CN112767252B (en) | An image super-resolution reconstruction method based on convolutional neural network | 2021-01-26 | 2021-01-26 |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN112767252B (en) |

Cited By (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN113284112A (en) * | 2021-05-27 | 2021-08-20 | 中国科学院国家空间科学中心 | Molten drop image contour extraction method and system based on deep neural network |
| CN113409195A (en) * | 2021-07-06 | 2021-09-17 | 中国标准化研究院 | Image super-resolution reconstruction method based on improved deep convolutional neural network |
| CN113610706A (en) * | 2021-07-19 | 2021-11-05 | 河南大学 | Fuzzy monitoring image super-resolution reconstruction method based on convolutional neural network |
| CN114469174A (en) * | 2021-12-17 | 2022-05-13 | 上海深至信息科技有限公司 | A method and system for arterial plaque identification based on ultrasound scanning video |

Citations (19)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5696848A (en) * | 1995-03-09 | 1997-12-09 | Eastman Kodak Company | System for creating a high resolution image from a sequence of lower resolution motion images |
| US20150116545A1 (en) * | 2013-10-30 | 2015-04-30 | Ilia Ovsiannikov | Super-resolution in processing images such as from multi-layer sensors |
| CN106548449A (en) * | 2016-09-18 | 2017-03-29 | 北京市商汤科技开发有限公司 | Generate method, the apparatus and system of super-resolution depth map |
| CN106600538A (en) * | 2016-12-15 | 2017-04-26 | 武汉工程大学 | Human face super-resolution algorithm based on regional depth convolution neural network |
| CN107194893A (en) * | 2017-05-22 | 2017-09-22 | 西安电子科技大学 | Depth image ultra-resolution method based on convolutional neural networks |
| US20180137603A1 (en) * | 2016-11-07 | 2018-05-17 | Umbo Cv Inc. | Method and system for providing high resolution image through super-resolution reconstruction |
| CN108492249A (en) * | 2018-02-08 | 2018-09-04 | 浙江大学 | Single frames super-resolution reconstruction method based on small convolution recurrent neural network |
| WO2019032304A1 (en) * | 2017-08-07 | 2019-02-14 | Standard Cognition Corp. | Subject identification and tracking using image recognition |
| CN109544457A (en) * | 2018-12-04 | 2019-03-29 | 电子科技大学 | Image super-resolution method, storage medium and terminal based on fine and close link neural network |
| US20190139191A1 (en) * | 2017-11-09 | 2019-05-09 | Boe Technology Group Co., Ltd. | Image processing methods and image processing devices |
| CN110136067A (en) * | 2019-05-27 | 2019-08-16 | 商丘师范学院 | A real-time image generation method for super-resolution B-ultrasound images |
| CN110427922A (en) * | 2019-09-03 | 2019-11-08 | 陈�峰 | One kind is based on machine vision and convolutional neural networks pest and disease damage identifying system and method |
| US20200019860A1 (en) * | 2017-03-24 | 2020-01-16 | Huawei Technologies Co., Ltd. | Neural network data processing apparatus and method |
| WO2020109001A1 (en) * | 2018-11-29 | 2020-06-04 | Commissariat A L'energie Atomique Et Aux Energies Alternatives | Super-resolution device and method |
| CN111402138A (en) * | 2020-03-24 | 2020-07-10 | 天津城建大学 | An image super-resolution reconstruction method based on multi-scale feature extraction and fusion with supervised convolutional neural network |
| CN111402131A (en) * | 2020-03-10 | 2020-07-10 | 北京师范大学 | The acquisition method of super-resolution land cover classification map based on deep learning |
| CN111612799A (en) * | 2020-05-15 | 2020-09-01 | 中南大学 | Facial data-oriented method, system and storage medium for incomplete reticulated face restoration |
| CN111754400A (en) * | 2020-06-01 | 2020-10-09 | 杭州电子科技大学 | An efficient image super-resolution reconstruction method |
| KR20200139500A (en) * | 2019-06-04 | 2020-12-14 | 국방과학연구소 | Learning method and inference method based on convolutional neural network for tonal frequency analysis |

- 2021-01-26: CN application CN202110105967.6A (patent CN112767252B), status: Expired - Fee Related


Non-Patent Citations (6)

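| Title |
|---|
| LIUPENG LIN et al.: "Polarimetric SAR Image Super-Resolution VIA Deep Convolutional Neural Network", IGARSS 2019 - 2019 IEEE INTERNATIONAL GEOSCIENCE AND REMOTE SENSING SYMPOSIUM * |
| YONGWOO KIM et al.: "A Real-Time Convolutional Neural Network for Super-Resolution on FPGA With Applications to 4K UHD 60 fps Video Services", IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY * |
| 卢建昀: "Traffic signal recognition based on the Inception model and super-resolution transfer learning", China Excellent Doctoral and Master's Theses Full-text Database (Master's), Information Science and Technology * |
| 秦兴 et al.: "Image super-resolution algorithm based on compressed convolutional neural network", Electronic Science and Technology (电子科技) * |
| 赵春林: "Research on millimeter-wave radiation characteristics of battlefield targets and passive imaging super-resolution algorithms", China Excellent Doctoral and Master's Theses Full-text Database (Master's), Information Science and Technology * |
| 龙祥: "Research on image super-resolution reconstruction technology based on deep learning", China Excellent Doctoral and Master's Theses Full-text Database (Master's), Information Science and Technology * |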

Cited By (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN113284112A (en) * | 2021-05-27 | 2021-08-20 | 中国科学院国家空间科学中心 | Molten drop image contour extraction method and system based on deep neural network |
| CN113284112B (en) * | 2021-05-27 | 2023-11-10 | 中国科学院国家空间科学中心 | A method and system for extracting the contour of molten droplet images based on deep neural network |
| CN113409195A (en) * | 2021-07-06 | 2021-09-17 | 中国标准化研究院 | Image super-resolution reconstruction method based on improved deep convolutional neural network |
| CN113610706A (en) * | 2021-07-19 | 2021-11-05 | 河南大学 | Fuzzy monitoring image super-resolution reconstruction method based on convolutional neural network |
| CN114469174A (en) * | 2021-12-17 | 2022-05-13 | 上海深至信息科技有限公司 | A method and system for arterial plaque identification based on ultrasound scanning video |

Also Published As

| Publication number | Publication date |

|---|---|

| CN112767252B (en) | 2022-08-02 |

Similar Documents

| Publication | Title |
|---|---|
| CN112767252B (en) | An image super-resolution reconstruction method based on convolutional neural network |
| CN110415170B (en) | Image super-resolution method based on multi-scale attention convolution neural network |
| CN112419150B (en) | Image super-resolution reconstruction method of arbitrary multiple based on bilateral upsampling network |
| CN115147271B (en) | A multi-view information attention interaction network system for light field super-resolution |
| CN112837224A (en) | A super-resolution image reconstruction method based on convolutional neural network |
| CN111161146B (en) | Coarse-to-fine single-image super-resolution reconstruction method |
| CN108921786A (en) | Image super-resolution reconstructing method based on residual error convolutional neural networks |
| Xu et al. | Dense bynet: Residual dense network for image super resolution |
| CN109389556A (en) | The multiple dimensioned empty convolutional neural networks ultra-resolution ratio reconstructing method of one kind and device |
| CN110322402A (en) | Medical image super resolution ratio reconstruction method based on dense mixing attention network |
| CN112001843B (en) | A deep learning-based infrared image super-resolution reconstruction method |
| CN105631807A (en) | Single-frame image super resolution reconstruction method based on sparse domain selection |
| CN116468605A (en) | Video Super-Resolution Reconstruction Method Based on Spatiotemporal Hierarchical Mask Attention Fusion |
| CN110533591A (en) | Super resolution image reconstruction method based on codec structure |
| Qiu et al. | Multi-window back-projection residual networks for reconstructing COVID-19 CT super-resolution images |
| CN111861886A (en) | An image super-resolution reconstruction method based on multi-scale feedback network |
| CN119228651B (en) | Image super-resolution reconstruction method and device based on high-frequency feature enhancement |
| CN112017116A (en) | Image super-resolution reconstruction network based on asymmetric convolution and its construction method |
| Ai et al. | Single image super-resolution via residual neuron attention networks |
| CN109064394A (en) | A kind of image super-resolution rebuilding method based on convolutional neural networks |
| Wan et al. | Arbitrary-scale image super-resolution via degradation perception |
| CN110211059A (en) | A kind of image rebuilding method based on deep learning |
| Rashid et al. | Single MR image super-resolution using generative adversarial network |
| Liu et al. | Promptsr: Cascade prompting for lightweight image super-resolution |
| CN109272450A (en) | An image super-division method based on convolutional neural network |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |
| | CF01 | Termination of patent right due to non-payment of annual fee | Granted publication date: 20220802 |