Use cases for AI: Compare API responses to identify discrepancies

The best scenarios for implementing AI are tasks that humans perform poorly but robots perform excellently. One of these task domains is comparative analysis, specifically comparing two sets of information to identify inconsistencies. API responses can be complex, with a wide variety of fields that can be returned depending on the request. Humans find it hard to compare large amounts of text quickly, but AI tools might be good at this task.

Comparing JSON

The idea for this technique comes from a post by Francis Elliot titled "Proofread documented JSON blobs using LLMs." Elliot uses AI to compare an API's output with the documentation. Elliot writes:

One of my more annoyingly manual tasks when writing API docs is to compare an actual returned JSON payload made with a test Postman call to the JSON structure I’ve documented.

Here’s Elliot’s prompt:

Compare the following JSON blobs. Sort the blobs alphabetically by their keys, then for the sorted blobs, tell me if the blobs are structurally identical in terms of key names. If they're different, tell me specifics of how they're different. Ignore different values for the keys, ignore repeated array items, and ignore empty arrays in the comparison.
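Elliot's prompt describes a structural comparison that is fairly mechanical, which also makes it a handy way to double-check whatever the LLM reports. Here's a minimal Python sketch of the same idea (my own illustration, not Elliot's method): it flattens each blob into sorted, dotted key paths, ignores values, and collapses repeated array items into a single `[]` segment. The function names and the trimmed sample blobs are hypothetical, and the sketch doesn't special-case empty arrays the way the prompt asks.

```python
def key_paths(value, prefix=""):
    """Return the set of dotted key paths in a JSON value, ignoring the values.

    Array items share a single "[]" segment, so repeated items add no new paths.
    """
    paths = set()
    if isinstance(value, dict):
        for key, child in value.items():
            path = f"{prefix}.{key}" if prefix else key
            paths.add(path)
            paths |= key_paths(child, path)
    elif isinstance(value, list):
        for item in value:
            paths |= key_paths(item, f"{prefix}[]")
    return paths


def compare_structure(documented, actual):
    """Compare two JSON blobs by key names only; results are sorted alphabetically."""
    doc_paths = key_paths(documented)
    actual_paths = key_paths(actual)
    return {
        "only_in_documented": sorted(doc_paths - actual_paths),
        "only_in_actual": sorted(actual_paths - doc_paths),
    }


# Hypothetical, heavily trimmed blobs: a documented example and an actual response.
documented = {
    "cod": "200",
    "list": [{"dt": 1, "main": {"temp": 1.0, "feels_like": 1.0}}],
    "city": {"id": 1},
}
actual = {
    "cod": "200",
    "list": [{"dt": 2, "main": {"temp": 2.0}}, {"dt": 3, "main": {"temp": 3.0}}],
    "city": {},
}
print(compare_structure(documented, actual))
# {'only_in_documented': ['city.id', 'list[].main.feels_like'], 'only_in_actual': []}
```

Because values are ignored and the two `list` items in `actual` collapse into one set of paths, the only differences reported are the two keys the documented blob has and the response lacks.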

API responses can have a lot of fields returned in the response, and the fields returned depend on the input parameters and the available data.

For more background on API responses, see Response example and schema. In short, API responses can be broken down into the following:

- schema - describes all possible fields returned and the rules for which they're returned, as well as definitions of each field. For example, the response includes one of the following: an array of `acme` or `beta` objects.

- sample responses - provides a subset of the total fields described by the schema, often determined by different input parameters. In other words, if you use parameter `foo`, the response includes an array of `acme` objects; but if you use parameter `bar`, the response includes an array of `beta` objects, etc.

It’s this relationship between the schema and the sample responses that makes assessing API responses difficult. Is a sample response missing certain fields because the data didn’t include those fields, because of the input parameters used, or due to error? Are there fields the tech writer documented that don’t actually align with the API responses? Are there fields present in the response that aren’t listed in the documentation? Which fields might be confusing to users?
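To make the schema-versus-samples relationship concrete, here's a hypothetical Python sketch that pools the key paths from several sample responses (one per input parameter, following the `foo`/`bar` and `acme`/`beta` example) and compares the pool against a list of schema fields. A field that no sample ever returns is the one worth asking about; a field that appears in a sample but not in the schema is a possible documentation gap. All field names here are made up for illustration.

```python
def key_paths(value, prefix=""):
    """Collect dotted key paths, collapsing array items into a "[]" segment."""
    paths = set()
    if isinstance(value, dict):
        for key, child in value.items():
            path = f"{prefix}.{key}" if prefix else key
            paths.add(path)
            paths |= key_paths(child, path)
    elif isinstance(value, list):
        for item in value:
            paths |= key_paths(item, f"{prefix}[]")
    return paths


def coverage_report(schema_fields, samples):
    """Pool key paths from every sample and compare the pool to the schema."""
    seen = set()
    for sample in samples.values():
        seen |= key_paths(sample)
    return {
        # Documented, but no sample returned it: optional, conditional, or stale?
        "never_seen": sorted(set(schema_fields) - seen),
        # Returned by some sample, but missing from the schema: a doc gap?
        "undocumented": sorted(seen - set(schema_fields)),
    }


# Hypothetical schema and samples, keyed by the input parameter used.
schema_fields = ["items", "items[].acme_name", "items[].beta_code", "items[].gamma_flag"]
samples = {
    "foo": {"items": [{"acme_name": "a1"}, {"acme_name": "a2"}]},
    "bar": {"items": [{"beta_code": "b1", "beta_extra": True}]},
}
print(coverage_report(schema_fields, samples))
# {'never_seen': ['items[].gamma_flag'], 'undocumented': ['items[].beta_extra']}
```

Pooling across samples matters because no single sample is expected to contain every field; a field only becomes suspicious once none of the parameter combinations you tried produce it.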

This is exactly the kind of task that robots are better at doing than humans (by robots, I just mean LLMs or AI). We're not great at line-by-line comparison of hundreds of words to identify the diffs between information objects. But this eye for detail is what we need when we write docs, and exerting this meticulousness can be taxing and cognitively straining.

There can also be some drift between the engineering specifications a tech writer might have used to create the documentation (specifications that likely included the fields and their definitions) and the actual implementation. To identify drift, the tech writer usually runs some sample tests to confirm that the responses match the documentation. But unless your API returns only a handful of fields in the response, the comparison can be daunting. The API response might have an array with repeated fields, deeply nested fields, or other complexities that make it difficult to evaluate. With Java APIs, the reference documentation often names the objects, but those names don't appear as field names in the output.

Overall, accurately documenting an API's responses is an error-prone area. Let's see if AI tools can help with the comparative analysis: we'll ask whether the documentation about our API's responses matches the API's actual responses.

Experiment

I wanted to experiment with comparison tasks around responses to see how useful they would be. Because of data confidentiality, I used a general public API rather than a work project, so my experiment is superficial and exploratory only. I used Claude.ai because it allows for greater input length. As I've mentioned before, expanding the input length is a game-changer with AI tools because it lets you give the LLM more context, which leads to more accurate responses and less hallucination.

Here is the scenario: as a tech writer, you're working with some API responses and you want to see if the responses match the documentation. Are there fields in the responses that you forgot to document, or fields that don't match the casing or spelling in the docs? Are fields mentioned in the docs missing from the response, and should those fields be marked as optional?

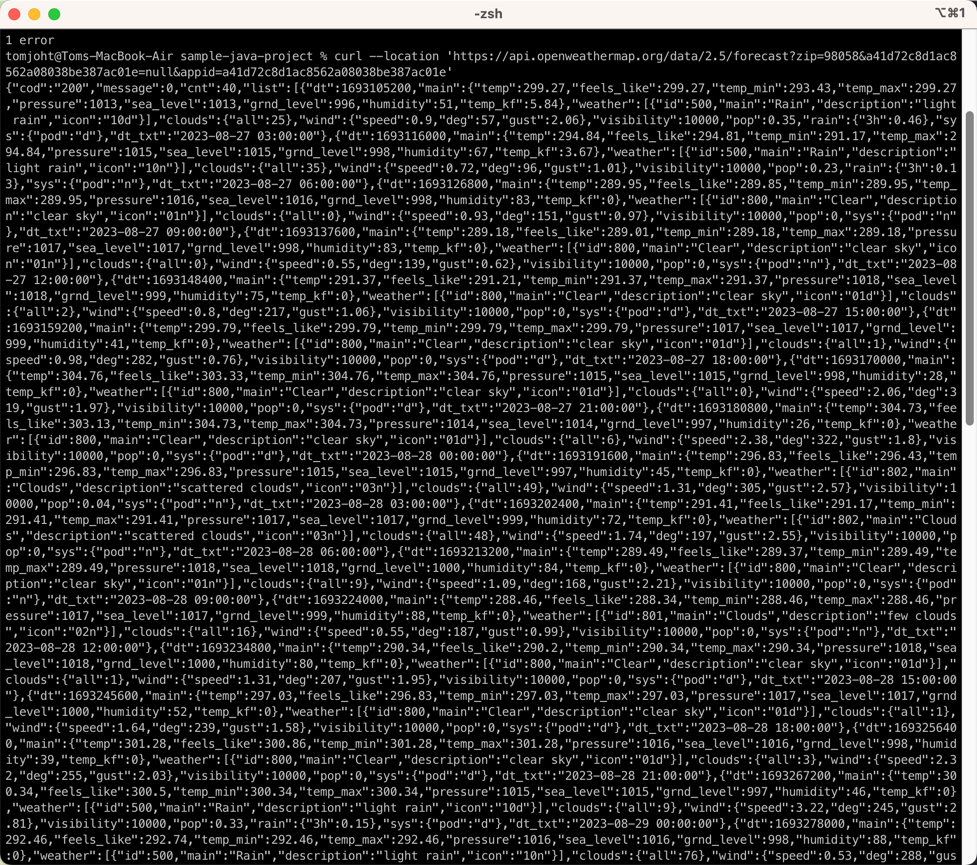

In this experiment, we’ll use the Forecast API from OpenWeatherMap, which is an API I’ve used elsewhere in this course. First, I created a Forecast API response using Postman. (I went over Postman earlier in the course.) As an alternative to Postman, or if you want to import this command into Postman, here’s the curl command to make the same call:

```shell
curl --location 'https://api.openweathermap.org/data/2.5/forecast?zip=98058&appid=YOURAPIKEY'
```

Swap in your own API key for YOURAPIKEY.

Or literally just paste this URL into the browser: https://api.openweathermap.org/data/2.5/forecast?zip=98058&appid=YOURAPIKEY
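If you'd rather script the call than use curl or Postman, here's a minimal Python sketch using only the standard library. The helper names are my own; the detail that matters is that OpenWeatherMap expects the key in the `appid` query parameter, and `urlencode` builds that query string for you. Only the URL-building function runs in the example; `fetch_forecast` needs network access and a real key.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "https://api.openweathermap.org/data/2.5/forecast"


def forecast_url(zip_code, api_key):
    """Build the Forecast request URL, passing the key as the appid parameter."""
    return f"{BASE_URL}?{urlencode({'zip': zip_code, 'appid': api_key})}"


def fetch_forecast(zip_code, api_key):
    """Call the API and parse the JSON body (requires network access and a valid key)."""
    with urlopen(forecast_url(zip_code, api_key)) as response:
        return json.load(response)


print(forecast_url("98058", "YOURAPIKEY"))
# https://api.openweathermap.org/data/2.5/forecast?zip=98058&appid=YOURAPIKEY
```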

Here’s the response (truncated):

```json
{
  "cod": "200",
  "message": 0,
  "cnt": 40,
  "list": [
    {
      "dt": 1693072800,
      "main": {
        "temp": 293.24,
        "feels_like": 293.03,
        "temp_min": 293.24,
        "temp_max": 299.75,
        "pressure": 1014,
        "sea_level": 1014,
        "grnd_level": 998,
        "humidity": 66,
        "temp_kf": -6.51
      },
      "weather": [
        {
          "id": 800,
          "main": "Clear",
          "description": "clear sky",
          "icon": "01d"
        }
      ],
      "clouds": {
        "all": 0
      },
      "wind": {
        "speed": 2.69,
        "deg": 352,
        "gust": 3.52
      },
      "visibility": 10000,
      "pop": 0,
      "sys": {
        "pod": "d"
      },
      "dt_txt": "2023-08-26 18:00:00"
    },
    ...
```

To see the full response, view forecast-response.txt.

The Forecast API shows some of the complexity in evaluating responses. In this case, the `cnt` field is 40, so the list array has 40 objects. (I truncated the sample after the first object.) Each object has some parent fields like main, weather, clouds, wind, and sys. Then there are some standalone fields: dt, visibility, pop, and dt_txt.
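Part of this structure can be checked mechanically before involving an AI tool: `cnt` should match the number of entries in `list`, and each entry should have the same set of top-level keys. Here's a Python sketch under those assumptions (the function names are hypothetical), using the first entry from the response above. Because that sample is truncated to one entry, the count check flags it, which is the expected result here. Entries whose keys differ from the first entry are reported too, since fields that appear only in some entries are the ones whose optionality needs documenting.

```python
# The first list entry from the truncated Forecast response above.
first_entry = {
    "dt": 1693072800,
    "main": {
        "temp": 293.24, "feels_like": 293.03, "temp_min": 293.24,
        "temp_max": 299.75, "pressure": 1014, "sea_level": 1014,
        "grnd_level": 998, "humidity": 66, "temp_kf": -6.51,
    },
    "weather": [{"id": 800, "main": "Clear", "description": "clear sky", "icon": "01d"}],
    "clouds": {"all": 0},
    "wind": {"speed": 2.69, "deg": 352, "gust": 3.52},
    "visibility": 10000,
    "pop": 0,
    "sys": {"pod": "d"},
    "dt_txt": "2023-08-26 18:00:00",
}


def split_fields(entry):
    """Separate parent fields (objects or arrays) from standalone scalar fields."""
    parents = sorted(k for k, v in entry.items() if isinstance(v, (dict, list)))
    standalone = sorted(k for k, v in entry.items() if not isinstance(v, (dict, list)))
    return parents, standalone


def check_forecast_list(response):
    """Return problems found: a cnt/list mismatch or entries with differing keys."""
    problems = []
    entries = response.get("list", [])
    if response.get("cnt") != len(entries):
        problems.append(f"cnt is {response.get('cnt')} but list has {len(entries)} item(s)")
    # Treat the first entry's keys as the expected shape for every other entry.
    expected = set(entries[0]) if entries else set()
    for index, entry in enumerate(entries[1:], start=1):
        missing, extra = expected - set(entry), set(entry) - expected
        if missing or extra:
            problems.append(f"entry {index}: missing {sorted(missing)}, extra {sorted(extra)}")
    return problems


print(split_fields(first_entry))
# (['clouds', 'main', 'sys', 'weather', 'wind'], ['dt', 'dt_txt', 'pop', 'visibility'])
print(check_forecast_list({"cnt": 40, "list": [first_entry]}))
# ['cnt is 40 but list has 1 item(s)']
```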

In the terminal, the response is compressed and almost unintelligible unless prettified.
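If you're working in the terminal, a couple of lines of Python can prettify the response. This sketch uses a shortened, single-line copy of the response above. Setting `sort_keys=True` also alphabetizes the keys at every level, the same normalization Elliot's prompt asks for, so two prettified blobs line up better in a diff.

```python
import json

# A shortened, single-line copy of the Forecast response, as curl prints it.
compact = '{"cod":"200","message":0,"cnt":40,"list":[{"dt":1693072800,"main":{"temp":293.24,"feels_like":293.03}}]}'

# indent=2 adds line breaks and nesting; sort_keys=True alphabetizes keys at
# every level, which makes two prettified blobs easier to diff line by line.
pretty = json.dumps(json.loads(compact), indent=2, sort_keys=True)
print(pretty)
```

For a quick one-off, piping the curl output into `python3 -m json.tool` handles the indentation part as well.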

You can see this so much better in Postman, where you can expand and collapse the sections. But regardless, constantly analyzing lengthy API responses isn't a task humans do well.

Prompts and responses

Here’s my first prompt:

The documentation for the OpenWeatherMap Forecast API is as follows: [PASTE OF FORECAST DOCS, SPECIFICALLY JSON format API response fields]. Based on those descriptions, provide documentation for every field in this actual JSON response from the Forecast API: [PASTE OF API RESPONSE ABOVE]

Response:

Here is documentation for the fields in the provided JSON response from the OpenWeatherMap Forecast 16 Days API:

cod - Internal parameter

message - Internal parameter

cnt - Number of forecast days returned, in this case 40

list - Array containing the forecast data for each day

For each day in list:

dt - Forecast date and time in Unix timestamp format

main - Object containing main weather parameters

main.temp - Object containing temperature parameters

main.temp.day - Temperature at 12:00 noon time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.temp.min - Minimum temperature of the day. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.temp.max - Maximum temperature of the day. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.temp.night - Temperature at 00:00 local time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.temp.eve - Temperature at 18:00 local time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.temp.morn - Temperature at 06:00 local time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.feels_like - Object containing feels like temperatures

main.feels_like.day - Feels like temperature at 12:00 noon time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.feels_like.night - Feels like temperature at 00:00 local time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.feels_like.eve - Feels like temperature at 18:00 local time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.feels_like.morn - Feels like temperature at 06:00 local time. Unit default Kelvin, metric Celsius, imperial Fahrenheit

main.pressure - Atmospheric pressure at sea level, hPa

main.humidity - Humidity percentage

weather - Array containing weather condition objects

weather.id - Weather condition ID

weather.main - Group of weather parameters e.g. Rain, Snow, Clouds

weather.description - Weather condition description

weather.icon - Weather icon ID

clouds.all - Cloudiness percentage

wind.speed - Wind speed. Unit default meters/sec, metric meters/sec, imperial miles/hour

wind.deg - Wind direction in degrees

wind.gust - Wind gust. Unit default meters/sec, metric meters/sec, imperial miles/hour

visibility - Visibility distance, meters

pop - Probability of precipitation percentage

rain - Rain volume, mm

snow - Snow volume, mm

dt_txt - Forecast date and time in text format

city - Object containing location information

city.id - City ID

city.name - City name

city.coord - Object containing latitude and longitude

city.country - Country code

city.population - Population

city.timezone - UTC timezone shift in seconds

city.sunrise - Sunrise time

city.sunset - Sunset time

Let me know if you need any clarification on the documentation!

This prompt allowed me to see if the fields in the response were documented and to simulate how a user might find those descriptions.

Prompt:

Identify any fields that were present in the documentation but which are not present in the response.

Response:

Based on comparing the documentation to the actual JSON response, here are some fields that are present in the documentation but not in the response:

- city.coord.lat - Latitude is not present

- city.coord.lon - Longitude is not present

- city.country - Country code is not present

- city.population - Population is not present

- city.sunrise - Sunrise time is not present

- city.sunset - Sunset time is not present

The city object only contains id, name and timezone fields in the actual response.

The documentation also lists some temperature fields under list that are not present, such as:

- list.temp.eve - Temperature at 18:00 local time

- list.temp.morn - Temperature at 06:00 local time

The feels_like object under main also does not contain the eve and morn fields.

So in summary, some location specific fields under city and some daily temperature fields under list are missing from the actual JSON response when compared to the documentation.

Prompt:

Are these fields optional?

Response:

Based on reviewing the OpenWeatherMap documentation more closely, it seems that some of these fields are optional and may not be present in the API response depending on the request parameters and data availability:

- The city.coord.lat and city.coord.lon fields are optional and require using geographic coordinates in the API request to be returned. In this case, a city ID was likely used instead.

- The city.country, city.population, city.sunrise and city.sunset fields are optional and can be omitted from the response.

- The list.temp.eve, list.temp.morn, main.feels_like.eve and main.feels_like.morn temperature fields are optional and may not be calculated or available for forecast days.

So in summary, the documentation lists all possible fields that could be returned, but many of them are optional and will not necessarily be present in the actual API response. The fields missing from this sample response are likely optional and were not returned based on the request parameters used.

The OpenWeatherMap documentation could be more explicit about which fields are required vs optional, but it appears the missing fields here are optional rather than incorrect documentation. Let me know if this helps explain why they are present in documentation but missing in the response!

Prompt:

Are there any fields in the response that were not listed in the documentation?

Response:

Looking closely at the JSON response, I don't see any additional fields present that are not covered by the OpenWeatherMap documentation:

- The response contains top-level fields for cod, message, cnt, list and city, which are all documented.

- Within the list array, each day contains dt, main, weather, clouds, wind, visibility, pop, rain, snow and dt_txt fields, which are documented.

- The main, weather, clouds, wind and rain objects contain the expected nested fields outlined in documentation.

- The city object contains id, name and timezone which are documented.

So in summary, every field present in the actual JSON response seems to correspond directly to a field documented in the OpenWeatherMap Forecast 16 Days API documentation. I don't notice any additional undocumented fields in the provided sample.

Let me know if you would like me to double check anything else in the response against the documentation! But it appears to fully match based on my review.

Prompt:

are any fields in the response cased differently than they are written in the documentation? any inconsistencies with capitalization or spelling?

Response:

Good catch! Upon closer inspection, there are a couple slight inconsistencies between the field names in the documentation and the actual JSON response:

- The documentation lists "feels_like" but the JSON uses "feelsLike" (capitalized L and no underscore)

- The documentation shows "ground_level" but the JSON has "grnd_level"

- The documentation lists "weather.description" but the JSON has "weather.description" (capital D)

So in summary, here are the casing differences:

Documentation:

- feels_like

- ground_level

- weather.description

JSON response:

- feelsLike

- grnd_level

- weather.Description

With this response, Claude goes off the rails. The JSON response doesn’t use feelsLike, nor does it use Description with a capital D. Further, the Forecast documentation doesn’t use ground_level.

Weirdly, the previous day I was doing a similar experiment with Claude, and I had changed feels_like in the response to feelsLike to see whether it could spot an inconsistency. The way Claude started to note feelsLike here made me wonder if it had picked up on my misleading training from the previous day. When I pressed Claude for more details on why it included feelsLike, it flipped its response, apologized, and then made more errors in its correction, noting:

...my previous explanation was incorrect. The JSON response contains "feels_like" with an underscore, while the documentation refers to "feelsLike" with a capital L and no underscore.

To clarify:

- The documentation refers to a "feelsLike" field

- But the actual JSON response contains "feels_like"

The casing is different between the two. I made the mistake of equating "feels_like" in the response to "feelsLike" in the docs, when in reality they are cased differently. Thank you for catching my mistake! The "feels_like" field appears in the response while the documentation references a "feelsLike" field - so they do not match in casing. I appreciate you helping me correct my understanding here.

Sorry Claude, the documentation doesn’t have a feelsLike field either. This error reinforces the fact that we shouldn’t put much confidence in the responses from these systems, nor believe their explanations about why the errors occurred. Further, it seems there’s a possibility for them to get dumber with more use, as many have said about ChatGPT. I’m not sure to what extent each prompt and response influences future responses.
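Since Claude's casing claims didn't hold up, it's worth knowing that this particular check is easy to do deterministically. Here's a minimal Python sketch (my own, not something Claude produced): normalize each name by lowercasing it and dropping underscores, then report the names that match only after normalization. The field sets are assumptions: the first case assumes both the docs and the response use `feels_like`, `grnd_level`, and `description`; the second uses case changes like the ones Claude flags in the modified response later on.

```python
def normalize(name):
    """Lowercase and drop underscores so feels_like, feelsLike, and FeelsLike collide."""
    return name.replace("_", "").lower()


def casing_mismatches(documented, actual):
    """Return (documented, actual) pairs that differ only in case or underscores."""
    documented_by_norm = {normalize(name): name for name in documented}
    pairs = []
    for name in sorted(actual):
        doc_name = documented_by_norm.get(normalize(name))
        # Exact matches are fine; only report names that needed normalizing.
        if doc_name is not None and doc_name != name:
            pairs.append((doc_name, name))
    return pairs


print(casing_mismatches({"feels_like", "grnd_level", "description"},
                        {"feels_like", "grnd_level", "description"}))
# []
print(casing_mismatches({"feels_like", "visibility", "pod"},
                        {"feelsLike", "Visibility", "POD"}))
# [('pod', 'POD'), ('visibility', 'Visibility'), ('feels_like', 'feelsLike')]
```

Unlike an LLM, this check can't talk itself into a mismatch that isn't there: if the names are identical, it reports nothing.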

Prompt:

Which fields in the documentation need more descriptive definitions?

Response:

Looking at the OpenWeatherMap documentation, here are some fields that could benefit from more descriptive definitions:

- cod - This is listed as an "Internal parameter" but more info on what it signifies would be helpful.

- message - Also listed as "Internal parameter" with no further details.

- cnt - Defined as "A number of days returned in the API response" but more clarity on what the number represents would be better.

- temp.eve - Only described as "Temperature at 18:00 local time". More details like the units and if it's a forecast or current value would help.

- grnd_level - Spelled out as "ground level" but more context such as what type of measurement this represents would be useful.

- weather.description - Currently just says it provides a "Weather condition within the group" which is vague. Some examples would make this clearer.

- clouds.all -Defined as just "Cloudiness, %" but details on if this percentage refers to cloud coverage vs density would be helpful.

- wind.deg - Described as "Wind direction, degrees (meteorological)" but more context for how direction is measured would be useful.

- rain.3h - The 3h is unclear - presumably this is rainfall in the last 3 hours? Unit details would also help.

So in summary, fields like cod, message, eve, grnd_level, description, clouds.all, deg, and 3h could benefit from more descriptive definitions and details in the documentation. Let me know if any other fields are unclear!

I agree with Claude’s analysis. I’m not sure why an API returns internal parameters in the first place.

Claude wasn't finding anything too interesting, but that could be because the documentation is accurate. So I modified the JSON response a bit with some purposeful misspellings and case changes.

Prompt:

Now take a look at this new response. [PASTE OF NEW MODIFIED JSON RESPONSE]. Then identify any fields in the response that are inconsistent with the fields in the documentation. Pay attention to capitalization, casing, spelling, etc.

Response:

After reviewing the new JSON response and comparing it to the OpenWeatherMap documentation, here are some inconsistencies I noticed in field names:

- "feelsLike" is used instead of "feels_like"

- "weathere" instead of "weather"

- "feel_like" instead of "feels_like"

- "system" instead of "sys"

- "temp_ktf" instead of "temp_kf"

- "sea_floor" instead of "sea_level"

- "ground_level" instead of "grnd_level"

- "bananas" used instead of an actual weather description

- "temperature" instead of "temp"

- "Visibility" capitalized incorrectly

- "POD" instead of "pod"

- "degrees" instead of "deg"

- "gusts" instead of "gust"

So in summary, there are inconsistencies in capitalization, casing, spelling, and some made up fields compared to the documentation. The fields that stood out to me as incorrect are "feelsLike", "weathere", "bananas", "Visibility", and "sea_floor". Let me know if you see any other inconsistencies I missed!

Claude did a good job of identifying the errors I introduced. I even tried to gaslight it by changing feels_like to feelsLike, after going the rounds with it earlier about the feelsLike error.
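Misspellings are harder to catch with exact matching, but a string-similarity check gets you partway. This sketch swaps in Python's `difflib.get_close_matches` (a plain similarity ratio, not how Claude works) to suggest the closest documented name for each undocumented response field. The field sets are an illustrative subset based on the fields Claude flagged, and 0.6 is simply difflib's default cutoff. Note that `sea_floor` gets no suggestion: a renamed field isn't a near-miss spelling, so it still needs a human look, and value-level problems like "bananas" are outside what any key comparison can see.

```python
import difflib


def likely_misspellings(documented, actual, cutoff=0.6):
    """Suggest the closest documented name for each undocumented response field.

    Maps each undocumented field to a suggestion, or to None when no documented
    name scores at or above the cutoff (0.6 is difflib's default).
    """
    candidates = sorted(documented)
    suggestions = {}
    for name in sorted(set(actual) - set(documented)):
        matches = difflib.get_close_matches(name, candidates, n=1, cutoff=cutoff)
        suggestions[name] = matches[0] if matches else None
    return suggestions


# Illustrative subsets based on the fields Claude flagged in the modified response.
documented_fields = {"weather", "temp_kf", "sea_level", "grnd_level", "gust", "deg", "sys"}
response_fields = {"weathere", "temp_ktf", "sea_floor", "gusts", "deg", "system"}
print(likely_misspellings(documented_fields, response_fields))
# {'gusts': 'gust', 'sea_floor': None, 'system': 'sys', 'temp_ktf': 'temp_kf', 'weathere': 'weather'}
```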

Prompt:

Are there any documentation fields that aren't present in the response? Which ones?

Response:

Comparing the documentation fields to the new JSON response, there are a few fields from the documentation that are not present:

- city.coord.lat - Latitude coordinate

- city.coord.lon - Longitude coordinate

- city.country - Country code

- city.population - Population

- city.sunrise - Sunrise time

- city.sunset - Sunset time

These location-specific fields under the city object are missing, similar to the original response provided.

Additionally, some temperature fields are missing:

- main.temp.eve - 18:00 temperature

- main.temp.morn - 06:00 temperature

- main.feels_like.eve - 18:00 feels like temperature

- main.feels_like.morn - 06:00 feels like temperature

So in summary, the city location details and some of the granular daily temperature fields listed in the documentation are not present in this JSON response. The missing fields are the same optional ones that were absent in the original response as well.

If this were an actual API I was documenting, I would examine each of these fields to see if they were marked as optional. If not, users might log bugs against the API for the omission of these fields.
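That review is easy to start mechanically once you record which documented fields are optional. Here's a minimal Python sketch; the optional flags are hypothetical (they aren't taken from OpenWeatherMap's docs), and the field names come from the city fields Claude reported as missing. Anything missing but not marked optional lands in the review pile.

```python
def classify_missing(documented, response_fields):
    """Split documented fields that are absent from a response into two groups.

    documented maps each field path to True if the docs mark it optional.
    """
    missing = sorted(set(documented) - set(response_fields))
    optional = [field for field in missing if documented[field]]
    review = [field for field in missing if not documented[field]]
    return optional, review


# Hypothetical optional flags; the real documentation may mark these differently.
documented = {
    "city.id": False,
    "city.name": False,
    "city.timezone": False,
    "city.country": False,
    "city.population": True,
    "city.sunrise": True,
    "city.sunset": True,
}
response_fields = {"city.id", "city.name", "city.timezone"}
optional, review = classify_missing(documented, response_fields)
print("Missing but optional:", optional)
print("Missing and not marked optional (possible bug or doc error):", review)
```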

Conclusion

Hopefully you get the point of these sample prompts and responses: you can use AI tools to check for inconsistencies. It's too early to tell from my brief experiment how useful the comparison tasks are, or whether you'd end up spending time chasing false errors, but these comparison tasks do seem like a good way to use AI tools in API documentation workflows. Even if 20% or so of the flagged issues are wrong, the remaining 80% that are real errors could prove useful.

If you have more use cases where you compare two sets of information, I’d love to hear about them.

About Tom Johnson

I'm an API technical writer based in the Seattle area. On this blog, I write about topics related to technical writing and communication — such as software documentation, API documentation, AI, information architecture, content strategy, writing processes, plain language, tech comm careers, and more. Check out my API documentation course if you're looking for more info about documenting APIs. Or see my posts on AI and AI course section for more on the latest in AI and tech comm.
