This repository contains code for reproducing experimental results from the paper. The paper introduces a sparsity-based framework to quantify algorithmic fairness for various machine learning tasks. The code currently supports five datasets: Adult, SimulatedC, SimulatedR, AdultM and SimulateCM. AdultM and SimulateCM are specifically used in intersectional fairness experiments. Other datasets presented in the paper require users to manually download the corresponding data files.

See `fair.yml`.

- Global experimental hyperparameters are configured in `config.yml`.
- Use `make_dataset.py` to generate dataset statistics.
- Use `make_baseline.py` to generate experiment scripts.
- Experimental setups are listed in `make_baseline.py`.
- Model and data hyperparameters can be found at `process_control()` in `src/module/hyper.py`.
- The `src/modules/debias` folder contains implementations of the different fairness algorithms.
- The data generating scripts are under `src/dataset`.
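The dataset statistics mentioned above are used for feature and target standardization. As a rough illustration of that idea only, here is a minimal sketch in plain Python. It is not the repository's implementation: the function names, the column names, and the choice of population standard deviation are all assumptions made for this example.

```python
from statistics import fmean, pstdev


def compute_stats(columns):
    """Return {name: (mean, std)} for a dict mapping column name -> list of floats.

    Hypothetical helper for illustration; not part of this repository.
    """
    stats = {}
    for name, values in columns.items():
        mean = fmean(values)
        std = pstdev(values)  # population std; an assumption for this sketch
        # Fall back to 1.0 for constant columns so we never divide by zero.
        stats[name] = (mean, std if std > 0 else 1.0)
    return stats


def standardize(columns, stats):
    """Apply (value - mean) / std per column, using precomputed statistics."""
    return {
        name: [(v - stats[name][0]) / stats[name][1] for v in values]
        for name, values in columns.items()
    }


# Statistics are computed on the training split only ...
train = {"age": [20.0, 30.0, 40.0], "hours": [40.0, 40.0, 40.0]}
stats = compute_stats(train)
train_std = standardize(train, stats)

# ... and then reused unchanged on the test split.
test_std = standardize({"age": [30.0], "hours": [45.0]}, stats)
```

Computing the statistics once and reusing them at test time keeps the training and testing inputs on the same scale, which is presumably why they are generated as a separate step before training.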

Follow the steps below to generate data statistics, train and test models, and produce evaluation figures. We use Adult as the example here.

- Generate the necessary statistics for feature and target standardization by running the

make_data.pyscript for the dataset. This script processes the datasets and computes statistics that are used for training and testing.

python make_dataset.py --data_name Adult- Generate training and testing scripts, which allows parallelization by running the

make_baseline.pyscript. This creates the scripts for training and testing your baseline models.

python make_baseline.py --mode baseline --dataset Adult- Train the Model

bash output/script/train_baseline_Adult_1.shor one can directly run a single experiment by running:

python train_baseline.py --init_seed 0 --num_experiments 1 --control_name Adult_baseline_none_noneFor AdultM and SimulateCM, they take one additional parameter to indicate the number of groups, use 10 groups as an example:

python train_baseline.py --init_seed 0 --num_experiments 1 --control_name AdultM_baseline_none_none_10- Once the model is trained, execute the script to test the model's performance. For example, to test the model on the Adult dataset:

bash output/script/test_baseline_Adult_1.sh- Generate Figures to compare performances of different fairness metrics (clf for classification)

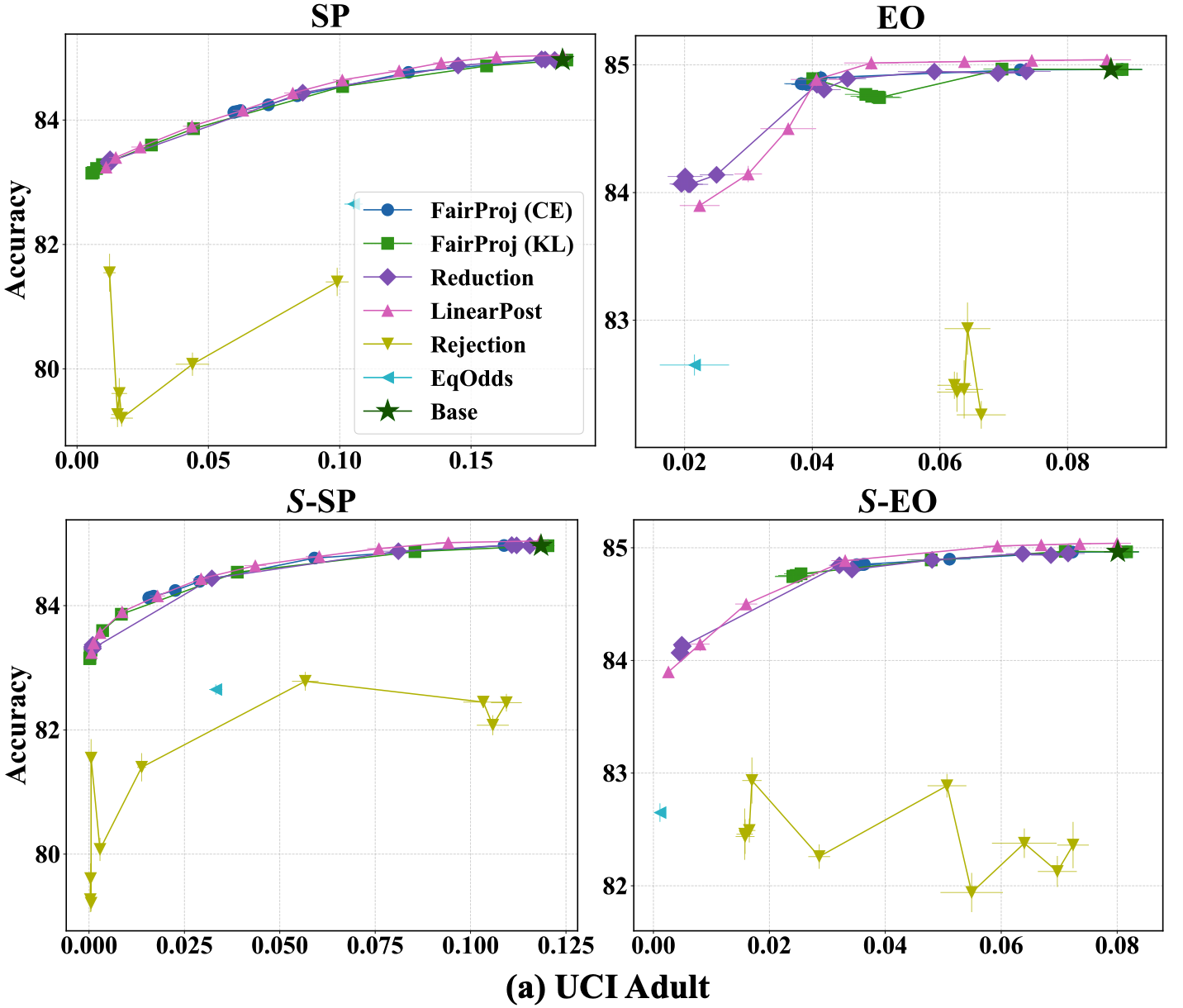

python make_figure.py --task clf- Figures from running the example above.
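The single-experiment commands in the training step differ only in the `--control_name` value. The sketch below composes those two command lines from their parts. It is an illustration under assumptions: `build_command` is a hypothetical helper, and the underscore-separated layout of the control name is inferred solely from the two examples in this README, not from the repository's parsing code.

```python
import shlex


def build_command(data_name, num_groups=None, init_seed=0, num_experiments=1):
    """Compose a train_baseline.py command line as a single string.

    Hypothetical helper; the control-name layout is an assumption based on
    the examples 'Adult_baseline_none_none' and 'AdultM_baseline_none_none_10'.
    """
    parts = [data_name, "baseline", "none", "none"]
    if num_groups is not None:
        # AdultM and SimulateCM take the number of groups as an extra field.
        parts.append(str(num_groups))
    control_name = "_".join(parts)
    args = [
        "python", "train_baseline.py",
        "--init_seed", str(init_seed),
        "--num_experiments", str(num_experiments),
        "--control_name", control_name,
    ]
    return shlex.join(args)


# Reproduce the two commands shown in the training step.
commands = [
    build_command("Adult"),
    build_command("AdultM", num_groups=10),
]
for command in commands:
    print(command)
```

The sketch only prints the commands and does not run them. For larger sweeps, the scripts generated by `make_baseline.py` remain the intended route.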