- Nov 23, 2025: 📊 Step-Audio-Edit-Benchmark Released!

- Nov 19, 2025: ⚙️ We release a new version of our model, which supports polyphonic pronunciation control and improves the performance of emotion, speaking style, and paralinguistic editing.

- Nov 12, 2025: 📦 We release the optimized inference code and model weights of Step-Audio-EditX (HuggingFace; ModelScope) and Step-Audio-Tokenizer (HuggingFace; ModelScope).

- Nov 07, 2025: ✨ Demo Page; 🎮 HF Space Playground

- Nov 06, 2025: 👋 We release the technical report of Step-Audio-EditX.

We are open-sourcing Step-Audio-EditX, a powerful 3B-parameter, LLM-based audio model trained with reinforcement learning and specialized in expressive, iterative audio editing. It excels at editing emotion, speaking style, and paralinguistics, and also offers robust zero-shot text-to-speech (TTS).

- Inference Code
- Online demo (Gradio)
- Step-Audio-Edit-Benchmark
- Model Checkpoints
  - Step-Audio-Tokenizer
  - Step-Audio-EditX
  - Step-Audio-EditX-Int4
- Training Code
  - SFT training
  - PPO training
- ⏳ Feature Support Plan
  - Editing
    - Polyphone pronunciation control
    - More paralinguistic tags ([Cough, Crying, Stress, etc.])
    - Filler word removal
  - Other Languages
    - Japanese, Korean, Arabic, French, Russian, Spanish, etc.


- Zero-Shot TTS
  - Excellent zero-shot TTS cloning for Mandarin, English, Sichuanese, and Cantonese.
  - To use a dialect, just add a [Sichuanese] or [Cantonese] tag before your text.
  - 🔥 Polyphone pronunciation control: just replace the polyphonic characters with pinyin.
    - [我也想过过过儿过过的生活] -> [我也想guo4guo4guo1儿guo4guo4的生活] ("I also want to live the life Guo'er has lived.")

- Emotion and Speaking Style Editing
  - Remarkably effective iterative control over emotions and styles, with dozens of editing options.
    - Emotion Editing: [ Angry, Happy, Sad, Excited, Fearful, Surprised, Disgusted, etc. ]
    - Speaking Style Editing: [ Act_coy, Older, Child, Whisper, Serious, Generous, Exaggerated, etc. ]
    - More emotions and speaking styles are on the way. Get ready! 🚀


- Paralinguistic Editing
  - Precise control over 10 types of paralinguistic features for more natural, human-like, and expressive synthetic audio.
  - Supported tags:
    - [ Breathing, Laughter, Surprise-oh, Confirmation-en, Uhm, Surprise-ah, Surprise-wa, Sigh, Question-ei, Dissatisfaction-hnn ]
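The text conventions above (a dialect tag in front, pinyin in place of polyphonic characters, bracketed paralinguistic tags inline) can be sketched as a small Python helper. `build_tts_text` is hypothetical and not part of the repository; it assumes the dialect tag is prepended with no separator and that tags use the square-bracket form shown in the examples, so check `tts_infer.py` for the exact format the model expects.

```python
from typing import Dict, Optional

# Paralinguistic tags as spelled in the Available Tags table of this README.
PARALINGUISTIC_TAGS = {
    "Breathing", "Laughter", "Surprise-oh", "Confirmation-en", "Uhm",
    "Surprise-ah", "Surprise-wa", "Sigh", "Question-ei", "Dissatisfaction-hnn",
}
# Dialect tags mentioned under Zero-Shot TTS.
DIALECT_TAGS = {"Sichuanese", "Cantonese"}


def build_tts_text(
    text: str,
    dialect: Optional[str] = None,
    pinyin: Optional[Dict[int, str]] = None,
    para_tag: Optional[str] = None,
    para_pos: Optional[int] = None,
) -> str:
    """Hypothetical helper (not part of the repository) for preparing input text.

    pinyin maps a character index in `text` to the pinyin that replaces it,
    para_tag is inserted as "[Tag]" before the character at para_pos
    (or at the end when para_pos is None), and dialect is prefixed as "[Dialect]".
    """
    chars = list(text)
    # Swap polyphonic characters for pinyin; the list length is unchanged,
    # so indices into the original text stay valid.
    for idx, syllable in (pinyin or {}).items():
        chars[idx] = syllable
    if para_tag is not None:
        if para_tag not in PARALINGUISTIC_TAGS:
            raise ValueError(f"unsupported paralinguistic tag: {para_tag}")
        pos = len(chars) if para_pos is None else para_pos
        chars.insert(pos, f"[{para_tag}]")
    result = "".join(chars)
    if dialect is not None:
        if dialect not in DIALECT_TAGS:
            raise ValueError(f"unsupported dialect tag: {dialect}")
        # Assumption: the dialect tag is prepended with no separator.
        result = f"[{dialect}]" + result
    return result


print(build_tts_text("我也想过过过儿过过的生活",
                     pinyin={3: "guo4", 4: "guo4", 5: "guo1", 7: "guo4", 8: "guo4"}))
# → 我也想guo4guo4guo1儿guo4guo4的生活
```

Indexing by character position keeps the pinyin overrides unambiguous when the same character appears several times with different readings, as in the 过 example.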

Available Tags

| Category | Tag | Description | Tag | Description |
|---|---|---|---|---|
| emotion | happy | Expressing happiness | angry | Expressing anger |
| | sad | Expressing sadness | fear | Expressing fear |
| | surprised | Expressing surprise | confusion | Expressing confusion |
| | empathy | Expressing empathy and understanding | embarrass | Expressing embarrassment |
| | excited | Expressing excitement and enthusiasm | depressed | Expressing a depressed or discouraged mood |
| | admiration | Expressing admiration or respect | coldness | Expressing coldness and indifference |
| | disgusted | Expressing disgust or aversion | humour | Expressing humor or playfulness |
| speaking style | serious | Speaking in a serious or solemn manner | arrogant | Speaking in an arrogant manner |
| | child | Speaking in a childlike manner | older | Speaking in an elderly-sounding manner |
| | girl | Speaking in a light, youthful feminine manner | pure | Speaking in a pure, innocent manner |
| | sister | Speaking in a mature, confident feminine manner | sweet | Speaking in a sweet, lovely manner |
| | exaggerated | Speaking in an exaggerated, dramatic manner | ethereal | Speaking in a soft, airy, dreamy manner |
| | whisper | Speaking in a whispering, very soft manner | generous | Speaking in a hearty, outgoing, and straight-talking manner |
| | recite | Speaking in a clear, well-paced, poetry-reading manner | act_coy | Speaking in a sweet, playful, and endearing manner |
| | warm | Speaking in a warm, friendly manner | shy | Speaking in a shy, timid manner |
| | comfort | Speaking in a comforting, reassuring manner | authority | Speaking in an authoritative, commanding manner |
| | chat | Speaking in a casual, conversational manner | radio | Speaking in a radio-broadcast manner |
| | soulful | Speaking in a heartfelt, deeply emotional manner | gentle | Speaking in a gentle, soft manner |
| | story | Speaking in a narrative, audiobook-style manner | vivid | Speaking in a lively, expressive manner |
| | program | Speaking in a show-host/presenter manner | news | Speaking in a news broadcasting manner |
| | advertising | Speaking in a polished, high-end commercial voiceover manner | roar | Speaking in a loud, deep, roaring manner |
| | murmur | Speaking in a quiet, low manner | shout | Speaking in a loud, sharp, shouting manner |
| | deeply | Speaking in a deep and low-pitched tone | loudly | Speaking in a loud and high-pitched tone |
| paralinguistic | Breathing | Breathing sound | Laughter | Laughter or laughing sound |
| | Uhm | Hesitation sound: "Uhm" | Sigh | Sighing sound |
| | Surprise-oh | Expressing surprise: "Oh" | Surprise-ah | Expressing surprise: "Ah" |
| | Surprise-wa | Expressing surprise: "Wa" | Confirmation-en | Confirming: "En" |
| | Question-ei | Questioning: "Ei" | Dissatisfaction-hnn | Dissatisfied sound: "Hnn" |
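To check which `--edit-type` a tag belongs to before running inference, the table can be mirrored as a Python dictionary. This is a sketch, not code from the repository: `TAGS` and `edit_type_for` are hypothetical names, and it assumes `--edit-info` takes the lowercase tag names from this table (as the "fear", "happy", and "whisper" examples in the inference commands do), while paralinguistic tags are written inline in `--generated-text` as `[Tag]`.

```python
from typing import Dict, List

# Tags from the Available Tags table, keyed by the --edit-type value used in the
# CLI examples ("speaking style" tags are passed with --edit-type "style").
TAGS: Dict[str, List[str]] = {
    "emotion": [
        "happy", "angry", "sad", "fear", "surprised", "confusion", "empathy",
        "embarrass", "excited", "depressed", "admiration", "coldness",
        "disgusted", "humour",
    ],
    "style": [
        "serious", "arrogant", "child", "older", "girl", "pure", "sister",
        "sweet", "exaggerated", "ethereal", "whisper", "generous", "recite",
        "act_coy", "warm", "shy", "comfort", "authority", "chat", "radio",
        "soulful", "gentle", "story", "vivid", "program", "news",
        "advertising", "roar", "murmur", "shout", "deeply", "loudly",
    ],
    "paralinguistic": [
        "Breathing", "Laughter", "Uhm", "Sigh", "Surprise-oh", "Surprise-ah",
        "Surprise-wa", "Confirmation-en", "Question-ei", "Dissatisfaction-hnn",
    ],
}


def edit_type_for(tag: str) -> str:
    """Return the --edit-type a tag belongs to, or raise KeyError."""
    for edit_type, tags in TAGS.items():
        if tag in tags:
            return edit_type
    raise KeyError(f"unknown tag: {tag}")


print(edit_type_for("whisper"))  # → style
```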

💡 We welcome all ideas for new features! If you'd like to see a feature added to the project, please start a discussion in our Discussions section.

We'll be collecting community feedback here and will incorporate popular suggestions into our future development plans. Thank you for your contribution!

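| Task | Text | Source | Edited |
|---|---|---|---|
| Emotion-Fear | 我总觉得,有人在跟着我,我能听到奇怪的脚步声。 (I keep feeling that someone is following me; I can hear strange footsteps.) | fear_zh_female_prompt.webm | fear_zh_female_output.webm |
| Style-Whisper | 比如在工作间隙,做一些简单的伸展运动,放松一下身体,这样,会让你更有精力。 (For example, during breaks at work, do some simple stretches to relax your body; that will give you more energy.) | whisper_prompt.webm | whisper_output.webm |
| Style-Act_coy | 我今天想喝奶茶,可是不知道喝什么口味,你帮我选一下嘛,你选的都好喝~ (I want milk tea today, but I can't decide on a flavor. Help me pick one, okay? Whatever you choose always tastes good~) | act_coy_prompt.webm | act_coy_output.webm |
| Paralinguistics | 你这次又忘记带钥匙了 [Dissatisfaction-hnn],真是拿你没办法。 (You forgot your keys again [Dissatisfaction-hnn]; I really don't know what to do with you.) | paralingustic_prompt.webm | paralingustic_output.webm |
| Denoising | Such legislation was clarified and extended from time to time thereafter. No, the man was not drunk, he wondered how we got tied up with this stranger. Suddenly, my reflexes had gone. It's healthier to cook without sugar. | denoising_prompt.webm | denoising_output.webm |
| Speed-Faster | 上次你说鞋子有点磨脚,我给你买了一双软软的鞋垫。 (Last time you said your shoes were rubbing your feet, so I bought you a pair of soft insoles.) | speed_faster_prompt.webm | speed_faster_output.webm |

For more examples, see the demo page.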

| Models | 🤗 Hugging Face | ModelScope |
|---|---|---|
| Step-Audio-EditX | stepfun-ai/Step-Audio-EditX | stepfun-ai/Step-Audio-EditX |
| Step-Audio-Tokenizer | stepfun-ai/Step-Audio-Tokenizer | stepfun-ai/Step-Audio-Tokenizer |

The following table shows the requirements for running the Step-Audio-EditX model (batch size = 1):

| Model | Parameters | Setting (sample frequency) | Optimal GPU Memory |
|---|---|---|---|
| Step-Audio-EditX | 3B | 41.6Hz | 12 GB |

- An NVIDIA GPU with CUDA support is required.
- The model has been tested on a single L40S GPU.
- 12 GB of GPU memory is the bare minimum; 16 GB is safer.
- Tested operating system: Linux
- Python >= 3.10.0 (Anaconda or Miniconda recommended)
- PyTorch >= 2.4.1-cu121
- CUDA Toolkit
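The version requirements above can be checked before installing anything heavy. The sketch below is hypothetical (not a script shipped with the repository); it accepts both the `2.4.1-cu121` spelling used here and the `2.4.1+cu121` form that `torch.__version__` reports, and only imports PyTorch when run as a script.

```python
import sys
from typing import List, Sequence, Tuple

MIN_PYTHON = (3, 10, 0)
MIN_TORCH = (2, 4, 1)


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn strings like "2.4.1+cu121" or "2.4.1-cu121" into (2, 4, 1)."""
    core = version.replace("-", "+").split("+", 1)[0]
    numbers = []
    for piece in core.split("."):
        digits = ""
        for ch in piece:  # keep leading digits only, e.g. "0a0" -> "0"
            if not ch.isdigit():
                break
            digits += ch
        numbers.append(int(digits) if digits else 0)
    return tuple(numbers)


def at_least(found: Sequence[int], minimum: Sequence[int]) -> bool:
    """Compare version tuples after padding the shorter one with zeros."""
    width = max(len(found), len(minimum))

    def pad(v: Sequence[int]) -> Tuple[int, ...]:
        return tuple(v) + (0,) * (width - len(v))

    return pad(found) >= pad(minimum)


def environment_problems(python_version: Sequence[int], torch_version: str,
                         cuda_available: bool) -> List[str]:
    """List every unmet requirement; an empty list means all checks passed."""
    problems = []
    if not at_least(python_version, MIN_PYTHON):
        problems.append(f"Python {'.'.join(map(str, python_version))} < 3.10.0")
    if not at_least(parse_version(torch_version), MIN_TORCH):
        problems.append(f"PyTorch {torch_version} < 2.4.1")
    if not cuda_available:
        problems.append("CUDA is not available; an NVIDIA GPU is required")
    return problems


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        found = environment_problems(tuple(sys.version_info[:3]),
                                     torch.__version__, torch.cuda.is_available())
        print(found or "OK")
```

Keeping the checks in a pure function makes them easy to test without a GPU; only the `__main__` block touches `torch`.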

```bash
git clone https://github.com/stepfun-ai/Step-Audio-EditX.git
conda create -n stepaudioedit python=3.10
conda activate stepaudioedit
cd Step-Audio-EditX
pip install -r requirements.txt

git lfs install
git clone https://huggingface.co/stepfun-ai/Step-Audio-Tokenizer
git clone https://huggingface.co/stepfun-ai/Step-Audio-EditX
```

After downloading the models, where_you_download_dir should have the following structure:

```text
where_you_download_dir
├── Step-Audio-Tokenizer
├── Step-Audio-EditX
```
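A quick way to confirm the layout before pointing `--model-path` at it is a small directory check. This is a hypothetical helper, not part of the repository; it treats an empty subdirectory as missing, which is only a rough signal that a clone did not complete.

```python
from pathlib import Path
from typing import List

# Subdirectories expected under where_you_download_dir (see the tree above).
REQUIRED_SUBDIRS = ("Step-Audio-Tokenizer", "Step-Audio-EditX")


def missing_model_dirs(model_path: str) -> List[str]:
    """Return the expected model subdirectories that are missing or empty."""
    root = Path(model_path)
    missing = []
    for name in REQUIRED_SUBDIRS:
        sub = root / name
        # Treat an empty directory the same as a missing one.
        if not sub.is_dir() or not any(sub.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "where_you_download_dir"
    print(missing_model_dirs(target) or "layout looks complete")
```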

You can set up the environment required for running Step-Audio-EditX using the provided Dockerfile.

```bash
# build docker
docker build . -t step-audio-editx

# run docker
docker run --rm --gpus all \
    -v /your/code/path:/app \
    -v /your/model/path:/model \
    -p 7860:7860 \
    step-audio-editx
```

Tip: For optimal performance, keep audio under 30 seconds per inference.
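To enforce the 30-second recommendation before inference, the prompt duration can be read from the WAV header with Python's standard `wave` module. This is a sketch, not repository code; `wave` handles uncompressed PCM files, so other formats would need a different reader.

```python
import sys
import wave

MAX_SECONDS = 30.0  # recommendation from the tip above


def wav_duration_seconds(path: str) -> float:
    """Duration of an uncompressed PCM WAV file, computed from its header."""
    with wave.open(path, "rb") as wf:
        return wf.getnframes() / float(wf.getframerate())


def within_recommended_length(path: str, max_seconds: float = MAX_SECONDS) -> bool:
    """True if the file is no longer than max_seconds."""
    return wav_duration_seconds(path) <= max_seconds


if __name__ == "__main__":
    for wav_path in sys.argv[1:]:
        seconds = wav_duration_seconds(wav_path)
        status = "ok" if seconds <= MAX_SECONDS else "too long"
        print(f"{wav_path}: {seconds:.1f}s ({status})")
```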

```bash
# zero-shot cloning
# The path of the generated audio file is output/fear_zh_female_prompt_cloned.wav
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "我总觉得,有人在跟着我,我能听到奇怪的脚步声。" \
    --prompt-audio "examples/fear_zh_female_prompt.wav" \
    --generated-text "可惜没有如果,已经发生的事情终究是发生了。" \
    --edit-type "clone" \
    --output-dir ./output

python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "His political stance was conservative, and he was particularly close to margaret thatcher." \
    --prompt-audio "examples/zero_shot_en_prompt.wav" \
    --generated-text "Underneath the courtyard is a large underground exhibition room which connects the two buildings." \
    --edit-type "clone" \
    --output-dir ./output
```
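When scripting many runs, building the `tts_infer.py` argument list in Python avoids the shell line-continuation and quoting pitfalls of long multi-line commands. The sketch below is hypothetical: it uses only the flags shown in this README and assumes the script parses them independently of order (as argparse-style CLIs do).

```python
import subprocess
import sys
from typing import List, Optional


def build_tts_command(
    model_path: str,
    prompt_audio: str,
    edit_type: str,
    output_dir: str = "./output",
    prompt_text: Optional[str] = None,
    generated_text: Optional[str] = None,
    edit_info: Optional[str] = None,
    n_edit_iter: Optional[int] = None,
) -> List[str]:
    """Assemble a tts_infer.py argv using only the flags shown in this README."""
    cmd = [sys.executable, "tts_infer.py",
           "--model-path", model_path,
           "--prompt-audio", prompt_audio,
           "--edit-type", edit_type,
           "--output-dir", output_dir]
    optional = {
        "--prompt-text": prompt_text,
        "--generated-text": generated_text,
        "--edit-info": edit_info,
        "--n-edit-iter": None if n_edit_iter is None else str(n_edit_iter),
    }
    # Only pass flags the caller actually set (e.g. denoise needs no prompt text).
    for flag, value in optional.items():
        if value is not None:
            cmd += [flag, value]
    return cmd


def run_tts(cmd: List[str], repo_dir: str = ".") -> None:
    # Run from the repository root so the relative script path resolves.
    subprocess.run(cmd, check=True, cwd=repo_dir)
```

For example, `run_tts(build_tts_command("where_you_download_dir", "examples/fear_zh_female_prompt.wav", "clone", prompt_text="我总觉得,有人在跟着我,我能听到奇怪的脚步声。", generated_text="可惜没有如果,已经发生的事情终究是发生了。"), repo_dir="Step-Audio-EditX")` mirrors the first cloning command above.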

```bash
# edit
# One WAV file is written per edit iteration, for example: output/fear_zh_female_prompt_edited_iter1.wav, output/fear_zh_female_prompt_edited_iter2.wav, ...

# emotion: fear
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "我总觉得,有人在跟着我,我能听到奇怪的脚步声。" \
    --prompt-audio "examples/fear_zh_female_prompt.wav" \
    --edit-type "emotion" \
    --edit-info "fear" \
    --n-edit-iter 2 \
    --output-dir ./output

# emotion: happy
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "You know, I just finished that big project and feel so relieved. Everything seems easier and more colorful, what a wonderful feeling!" \
    --prompt-audio "examples/en_happy_prompt.wav" \
    --edit-type "emotion" \
    --edit-info "happy" \
    --n-edit-iter 2 \
    --output-dir ./output

# style: whisper
# For the whisper style, set --n-edit-iter greater than 1 for better results.
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "比如在工作间隙,做一些简单的伸展运动,放松一下身体,这样,会让你更有精力." \
    --prompt-audio "examples/whisper_prompt.wav" \
    --edit-type "style" \
    --edit-info "whisper" \
    --n-edit-iter 2 \
    --output-dir ./output

# paralinguistic
# Supported tags: Breathing, Laughter, Surprise-oh, Confirmation-en, Uhm, Surprise-ah, Surprise-wa, Sigh, Question-ei, Dissatisfaction-hnn
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "我觉得这个计划大概是可行的,不过还需要再仔细考虑一下。" \
    --prompt-audio "examples/paralingustic_prompt.wav" \
    --generated-text "我觉得这个计划大概是可行的,[Uhm]不过还需要再仔细考虑一下。" \
    --edit-type "paralinguistic" \
    --output-dir ./output

# denoise
# Prompt text is not needed.
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-audio "examples/denoise_prompt.wav" \
    --edit-type "denoise" \
    --output-dir ./output

# vad (voice activity detection)
# Prompt text is not needed.
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-audio "examples/vad_prompt.wav" \
    --edit-type "vad" \
    --output-dir ./output

# speed
# Supported edit-info values: faster, slower, more faster, more slower
python3 tts_infer.py \
    --model-path where_you_download_dir \
    --prompt-text "上次你说鞋子有点磨脚,我给你买了一双软软的鞋垫。" \
    --prompt-audio "examples/speed_prompt.wav" \
    --edit-type "speed" \
    --edit-info "faster" \
    --output-dir ./output
```
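The output names follow a predictable pattern (`<stem>_cloned.wav` for cloning, `<stem>_edited_iter<N>.wav` for edits), so a script can locate results without listing the directory. This sketch is hypothetical and derived only from the comments in the commands above; it assumes that edit types run without `--n-edit-iter` write a single `_iter1` file, which is not confirmed by the README.

```python
from pathlib import Path
from typing import List

# Values listed for --edit-type "speed" in the examples above.
SPEED_VALUES = ("faster", "slower", "more faster", "more slower")


def expected_outputs(prompt_audio: str, edit_type: str, n_edit_iter: int = 1,
                     output_dir: str = "./output") -> List[Path]:
    """Predict output file paths from the naming shown in the command comments.

    Assumption: edits run without --n-edit-iter produce a single *_iter1 file.
    """
    stem = Path(prompt_audio).stem
    out = Path(output_dir)
    if edit_type == "clone":
        return [out / f"{stem}_cloned.wav"]
    return [out / f"{stem}_edited_iter{i}.wav" for i in range(1, n_edit_iter + 1)]


def check_speed_info(edit_info: str) -> str:
    """Reject speed values other than the four documented ones."""
    if edit_info not in SPEED_VALUES:
        raise ValueError(f"speed edit-info must be one of {SPEED_VALUES}, got {edit_info!r}")
    return edit_info


print(expected_outputs("examples/fear_zh_female_prompt.wav", "emotion", 2)[-1].name)
# → fear_zh_female_prompt_edited_iter2.wav
```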

To start a local server for online inference, you need one GPU with at least 12 GB of free memory and all models already downloaded.

```bash
# Step-Audio-EditX demo
python app.py --model-path where_you_download_dir --model-source local

# Memory-efficient options with runtime quantization
# For systems with limited GPU memory, quantization reduces memory usage:

# INT8 quantization
python app.py --model-path where_you_download_dir --model-source local --quantization int8

# INT4 quantization
python app.py --model-path where_you_download_dir --model-source local --quantization int4

# Using pre-quantized AWQ models
python app.py --model-path path/to/quantized/model --model-source local --quantization awq-4bit

# Example with custom settings:
python app.py --model-path where_you_download_dir --model-source local --torch-dtype float16 --enable-auto-transcribe
```

For users with limited GPU memory, you can create quantized versions of the model to reduce memory requirements:

```bash
# Create an AWQ 4-bit quantized model
python quantization/awq_quantize.py --model_path path/to/Step-Audio-EditX

# Advanced quantization options
python quantization/awq_quantize.py
```

For detailed quantization options and parameters, see quantization/README.md.
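As a back-of-the-envelope check on why quantization helps, the weight memory of a 3B-parameter model scales with bytes per parameter. This sketch assumes exactly 3e9 parameters and counts LLM weights only; activations, the audio tokenizer, the flow-matching decoder, and CUDA overhead are excluded, which is part of why the recommended 12 GB is well above these figures. Real quantized checkpoints (for example AWQ) also store per-group scales and may keep some tensors in higher precision, so actual savings are smaller.

```python
# Rough weight-only memory for a 3B-parameter model at different precisions.
# Assumption: "3B" is taken as exactly 3e9 parameters.
N_PARAMS = 3e9
BYTES_PER_PARAM = {"float16/bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gib(n_params: float, bytes_per_param: float) -> float:
    """Weight storage in GiB (2**30 bytes)."""
    return n_params * bytes_per_param / 2**30


for name, nbytes in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_gib(N_PARAMS, nbytes):.2f} GiB")
# float16/bfloat16: 5.59 GiB
# int8: 2.79 GiB
# int4: 1.40 GiB
```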

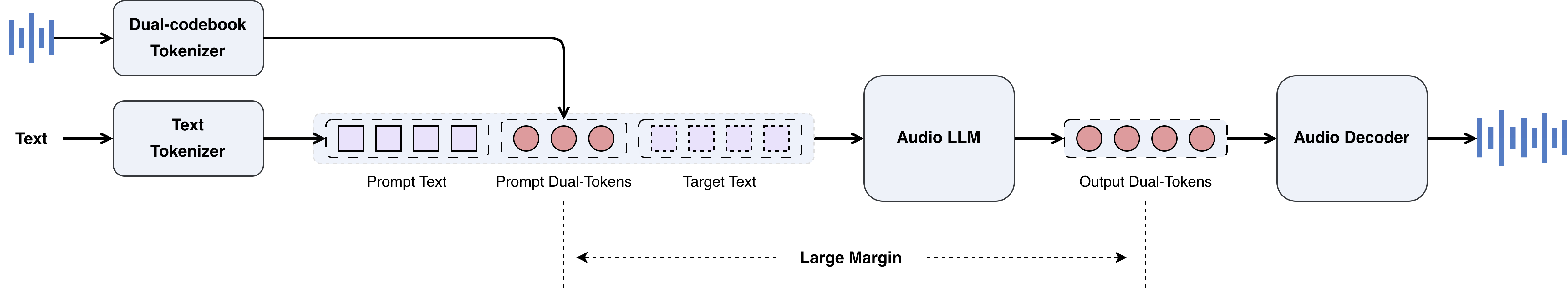

Step-Audio-EditX comprises three primary components:

- A dual-codebook audio tokenizer, which converts reference or input audio into discrete tokens.
- An audio LLM that generates dual-codebook token sequences.
- An audio decoder, which converts the dual-codebook token sequences predicted by the audio LLM back into audio waveforms using a flow matching approach.
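The data flow through these three components can be sketched with Python `Protocol` interfaces. Everything here is hypothetical: the class and method names are illustrative, not the repository's API, the two codebooks are flattened into one token list, and the `instruction` string stands in for however the real model is conditioned. The token estimate assumes the 41.6 Hz figure from the requirements table is the combined token rate.

```python
from typing import List, Protocol, Sequence

TOKEN_RATE_HZ = 41.6  # from the requirements table; assumed combined token rate


class AudioTokenizer(Protocol):
    def encode(self, waveform: Sequence[float]) -> List[int]: ...


class AudioLLM(Protocol):
    def generate(self, prompt_tokens: List[int], instruction: str) -> List[int]: ...


class AudioDecoder(Protocol):
    def decode(self, tokens: List[int]) -> List[float]: ...


def edit_audio(waveform: Sequence[float], instruction: str,
               tokenizer: AudioTokenizer, llm: AudioLLM,
               decoder: AudioDecoder) -> List[float]:
    """Tokenizer -> audio LLM -> flow-matching decoder, as in the list above."""
    prompt_tokens = tokenizer.encode(waveform)             # audio -> discrete tokens
    new_tokens = llm.generate(prompt_tokens, instruction)  # predict edited tokens
    return decoder.decode(new_tokens)                      # tokens -> waveform


def estimated_token_count(seconds: float, rate_hz: float = TOKEN_RATE_HZ) -> int:
    """Approximate number of audio tokens for a clip of the given length."""
    return round(seconds * rate_hz)


print(estimated_token_count(30.0))  # → 1248
```

Under that assumption, a 30-second prompt, the recommended maximum, corresponds to roughly 1,248 audio tokens in the LLM context.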

Step-Audio-EditX enables iterative control over emotion and speaking style across all voices, leveraging large-margin data during SFT and PPO training.
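Iterative editing means applying the same edit repeatedly, with each round starting from the previous round's output. A minimal, model-agnostic sketch follows; `iterative_edit` is a hypothetical name, `edit_step` stands in for one model call, and the assumption that each iteration consumes the previous output is ours, consistent with the one-file-per-iteration outputs of `tts_infer.py`.

```python
from typing import Callable, List, TypeVar

A = TypeVar("A")


def iterative_edit(audio: A, edit_step: Callable[[A], A], n_iter: int) -> List[A]:
    """Apply the same edit n_iter times, feeding each output back in.

    Returns every intermediate result (iteration 1, 2, ...), so callers can
    compare rounds the way the _edited_iterN.wav files allow.
    """
    if n_iter < 1:
        raise ValueError("n_iter must be >= 1")
    results: List[A] = []
    current = audio
    for _ in range(n_iter):
        current = edit_step(current)  # edit the previous round's output
        results.append(current)
    return results


print(iterative_edit(0, lambda x: x + 1, 3))  # → [1, 2, 3]
```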

- Step-Audio-EditX outperforms MiniMax and Doubao in both zero-shot cloning and emotion control.

- Emotion editing of Step-Audio-EditX significantly improves the emotion-controlled audio outputs of all three models after just one iteration. With further iterations, their overall performance continues to improve.

- For emotion and speaking style editing, the built-in voices of leading closed-source systems have considerable in-context capability, allowing them to partially convey the emotions in the text. After a single editing round with Step-Audio-EditX, emotion and style accuracy improved significantly across all voice models, and further improvement was observed over the next two iterations, demonstrating the model's strong generalization.

- For paralinguistic editing, after editing with Step-Audio-EditX, paralinguistic reproduction is comparable to what the built-in voices of closed-source models achieve when synthesizing native paralinguistic content directly ("sub" denotes replacing paralinguistic tags with native words).

| Language | Model | Emotion ↑ Iter0 | Emotion ↑ Iter1 | Emotion ↑ Iter2 | Emotion ↑ Iter3 | Speaking Style ↑ Iter0 | Speaking Style ↑ Iter1 | Speaking Style ↑ Iter2 | Speaking Style ↑ Iter3 | Paralinguistic ↑ Iter0 | Paralinguistic ↑ sub | Paralinguistic ↑ Iter1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Chinese | MiniMax-2.6-hd | 71.6 | 78.6 | 81.2 | 83.4 | 36.7 | 58.8 | 63.1 | 67.3 | 1.73 | 2.80 | 2.90 |
| | Doubao-Seed-TTS-2.0 | 67.4 | 77.8 | 80.6 | 82.8 | 38.2 | 60.2 | 65.0 | 64.9 | 1.67 | 2.81 | 2.90 |
| | GPT-4o-mini-TTS | 62.6 | 76.0 | 77.0 | 81.8 | 45.9 | 64.0 | 65.7 | 69.7 | 1.71 | 2.88 | 2.93 |
| | ElevenLabs-v2 | 60.4 | 74.6 | 77.4 | 79.2 | 43.8 | 63.3 | 69.7 | 70.8 | 1.70 | 2.71 | 2.92 |
| English | MiniMax-2.6-hd | 55.0 | 64.0 | 64.2 | 66.4 | 51.9 | 60.3 | 62.3 | 64.3 | 1.72 | 2.87 | 2.88 |
| | Doubao-Seed-TTS-2.0 | 53.8 | 65.8 | 65.8 | 66.2 | 47.0 | 62.0 | 62.7 | 62.3 | 1.72 | 2.75 | 2.92 |
| | GPT-4o-mini-TTS | 56.8 | 61.4 | 64.8 | 65.2 | 52.3 | 62.3 | 62.4 | 63.4 | 1.90 | 2.90 | 2.88 |
| | ElevenLabs-v2 | 51.0 | 61.2 | 64.0 | 65.2 | 51.0 | 62.1 | 62.6 | 64.0 | 1.93 | 2.87 | 2.88 |
| Average | MiniMax-2.6-hd | 63.3 | 71.3 | 72.7 | 74.9 | 44.2 | 59.6 | 62.7 | 65.8 | 1.73 | 2.84 | 2.89 |
| | Doubao-Seed-TTS-2.0 | 60.6 | 71.8 | 73.2 | 74.5 | 42.6 | 61.1 | 63.9 | 63.6 | 1.70 | 2.78 | 2.91 |
| | GPT-4o-mini-TTS | 59.7 | 68.7 | 70.9 | 73.5 | 49.1 | 63.2 | 64.1 | 66.6 | 1.81 | 2.89 | 2.90 |
| | ElevenLabs-v2 | 55.7 | 67.9 | 70.7 | 72.2 | 47.4 | 62.7 | 66.1 | 67.4 | 1.82 | 2.79 | 2.90 |
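To make the size of the iteration gains concrete, the snippet below computes the Iter0 to Iter3 improvement from the Average rows of the table. It is plain arithmetic on the published numbers; no new measurements are involved.

```python
from typing import Dict, Tuple

# "Average" rows copied from the table above:
# (Emotion Iter0, Emotion Iter3, Speaking Style Iter0, Speaking Style Iter3)
AVERAGE: Dict[str, Tuple[float, float, float, float]] = {
    "MiniMax-2.6-hd": (63.3, 74.9, 44.2, 65.8),
    "Doubao-Seed-TTS-2.0": (60.6, 74.5, 42.6, 63.6),
    "GPT-4o-mini-TTS": (59.7, 73.5, 49.1, 66.6),
    "ElevenLabs-v2": (55.7, 72.2, 47.4, 67.4),
}


def gains(row: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Absolute improvement from Iter0 to Iter3 for emotion and speaking style."""
    emo0, emo3, sty0, sty3 = row
    return round(emo3 - emo0, 1), round(sty3 - sty0, 1)


for model, row in AVERAGE.items():
    emo_gain, sty_gain = gains(row)
    print(f"{model}: emotion +{emo_gain}, speaking style +{sty_gain}")
# MiniMax-2.6-hd: emotion +11.6, speaking style +21.6
# Doubao-Seed-TTS-2.0: emotion +13.9, speaking style +21.0
# GPT-4o-mini-TTS: emotion +13.8, speaking style +17.5
# ElevenLabs-v2: emotion +16.5, speaking style +20.0
```

Speaking style gains (17.5 to 21.6 points) are consistently larger than emotion gains (11.6 to 16.5 points), and the voice with the lowest starting emotion score, ElevenLabs-v2, gains the most.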

Part of the code and data for this project comes from:

Thank you to all the open-source projects for their contributions to this project!

- The code in this open-source repository is licensed under the Apache 2.0 License.

```bibtex
@misc{yan2025stepaudioeditxtechnicalreport,
  title={Step-Audio-EditX Technical Report},
  author={Chao Yan and Boyong Wu and Peng Yang and Pengfei Tan and Guoqiang Hu and Yuxin Zhang and Xiangyu and Zhang and Fei Tian and Xuerui Yang and Xiangyu Zhang and Daxin Jiang and Gang Yu},
  year={2025},
  eprint={2511.03601},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2511.03601},
}
```

- Do not use this model for any unauthorized activities, including but not limited to:

- Voice cloning without permission

- Identity impersonation

- Fraud

- Deepfakes or any other illegal purposes

- Ensure compliance with local laws and regulations, and adhere to ethical guidelines when using this model.

- The model developers are not responsible for any misuse or abuse of this technology.

We advocate for responsible generative AI research and urge the community to uphold safety and ethical standards in AI development and application. If you have any concerns regarding the use of this model, please feel free to contact us.