
Migrate from fastmcp to official modelcontextprotocol/python-sdk #13


base: copilot/fix-041ae223-9eb2-4b3b-a57b-a4de19237dc0


Co-authored-by: primeinc <4395149+primeinc@users.noreply.github.com>



MCP Python SDK


Overview

The Model Context Protocol allows applications to provide context for LLMs in a standardized way, separating the concerns of providing context from the actual LLM interaction. This Python SDK implements the full MCP specification, making it easy to:

- Build MCP clients that can connect to any MCP server

- Create MCP servers that expose resources, prompts and tools

- Use standard transports like stdio, SSE, and Streamable HTTP

- Handle all MCP protocol messages and lifecycle events

Installation

Adding MCP to your Python project

We recommend using uv to manage your Python projects.

If you haven't created a uv-managed project yet, create one:

```bash
uv init mcp-server-demo
cd mcp-server-demo
```

Then add MCP to your project dependencies:

```bash
uv add "mcp[cli]"
```

Alternatively, for projects using pip for dependencies:

```bash
pip install "mcp[cli]"
```

Running the standalone MCP development tools

To run the `mcp` command with uv:

```bash
uv run mcp
```

Quickstart

Let's create a simple MCP server that exposes a calculator tool and some data:

```python
# server.py
from mcp.server.fastmcp import FastMCP

# Create an MCP server
mcp = FastMCP("Demo")


# Add an addition tool
@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


# Add a dynamic greeting resource
@mcp.resource("greeting://{name}")
def get_greeting(name: str) -> str:
    """Get a personalized greeting"""
    return f"Hello, {name}!"
```

You can install this server in Claude Desktop and interact with it right away by running:

```bash
mcp install server.py
```

Alternatively, you can test it with the MCP Inspector:

```bash
mcp dev server.py
```

What is MCP?

The Model Context Protocol (MCP) lets you build servers that expose data and functionality to LLM applications in a secure, standardized way. Think of it like a web API, but specifically designed for LLM interactions. MCP servers can:

- Expose data through Resources (think of these sort of like GET endpoints; they are used to load information into the LLM's context)

- Provide functionality through Tools (sort of like POST endpoints; they are used to execute code or otherwise produce a side effect)

- Define interaction patterns through Prompts (reusable templates for LLM interactions)

- And more!
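To make the GET/POST analogy concrete: on the wire, reading a resource and calling a tool are both JSON-RPC 2.0 requests. The stdlib-only sketch below builds the two payloads; the `resources/read` and `tools/call` method names come from the MCP specification, while `jsonrpc_request`, the ids, the URI, and the tool arguments are illustrative placeholders. In practice the SDK builds and sends these messages for you.

```python
import json


def jsonrpc_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


# Resource read: the data is identified only by a URI, much like a GET.
read_msg = jsonrpc_request(1, "resources/read", {"uri": "greeting://Alice"})

# Tool call: a named action plus arguments, much like a POST with a body.
call_msg = jsonrpc_request(
    2, "tools/call", {"name": "add", "arguments": {"a": 1, "b": 2}}
)

print(read_msg)
print(call_msg)
```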

Core Concepts

Server

The FastMCP server is your core interface to the MCP protocol. It handles connection management, protocol compliance, and message routing:

```python
# Add lifespan support for startup/shutdown with strong typing
from contextlib import asynccontextmanager
from collections.abc import AsyncIterator
from dataclasses import dataclass

from fake_database import Database  # Replace with your actual DB type

from mcp.server.fastmcp import FastMCP

# Each constructor call below is an alternative configuration; the last one wins.
# Create a named server
mcp = FastMCP("My App")

# Specify dependencies for deployment and development
mcp = FastMCP("My App", dependencies=["pandas", "numpy"])


@dataclass
class AppContext:
    db: Database


@asynccontextmanager
async def app_lifespan(server: FastMCP) -> AsyncIterator[AppContext]:
    """Manage application lifecycle with type-safe context"""
    # Initialize on startup
    db = await Database.connect()
    try:
        yield AppContext(db=db)
    finally:
        # Cleanup on shutdown
        await db.disconnect()


# Pass lifespan to server
mcp = FastMCP("My App", lifespan=app_lifespan)


# Access type-safe lifespan context in tools
@mcp.tool()
def query_db() -> str:
    """Tool that uses initialized resources"""
    ctx = mcp.get_context()
    # lifespan_context is the AppContext yielded above, so use attribute access
    db = ctx.request_context.lifespan_context.db
    return db.query()
```

Resources

Resources are how you expose data to LLMs. They're similar to GET endpoints in a REST API - they provide data but shouldn't perform significant computation or have side effects:

```python
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("My App")


@mcp.resource("config://app", title="Application Configuration")
def get_config() -> str:
    """Static configuration data"""
    return "App configuration here"


@mcp.resource("users://{user_id}/profile", title="User Profile")
def get_user_profile(user_id: str) -> str:
    """Dynamic user data"""
    return f"Profile data for user {user_id}"
```

Tools

Tools let LLMs take actions through your server. Unlike resources, tools are expected to perform computation and have side effects:

```python
import httpx

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("My App")


@mcp.tool(title="BMI Calculator")
def calculate_bmi(weight_kg: float, height_m: float) -> float:
    """Calculate BMI given weight in kg and height in meters"""
    return weight_kg / (height_m**2)


@mcp.tool(title="Weather Fetcher")
async def fetch_weather(city: str) -> str:
    """Fetch current weather for a city"""
    async with httpx.AsyncClient() as client:
        response = await client.get(f"https://api.weather.com/{city}")
        return response.text
```

Prompts

Prompts are reusable templates that help LLMs interact with your server effectively:

```python
from mcp.server.fastmcp import FastMCP
from mcp.server.fastmcp.prompts import base

mcp = FastMCP("My App")


@mcp.prompt(title="Code Review")
def review_code(code: str) -> str:
    return f"Please review this code:\n\n{code}"


@mcp.prompt(title="Debug Assistant")
def debug_error(error: str) -> list[base.Message]:
    return [
        base.UserMessage("I'm seeing this error:"),
        base.UserMessage(error),
        base.AssistantMessage("I'll help debug that. What have you tried so far?"),
    ]
```

Images

FastMCP provides an Image class that automatically handles image data:

```python
import io

from mcp.server.fastmcp import FastMCP, Image
from PIL import Image as PILImage

mcp = FastMCP("My App")


@mcp.tool()
def create_thumbnail(image_path: str) -> Image:
    """Create a thumbnail from an image"""
    img = PILImage.open(image_path)
    img.thumbnail((100, 100))
    # Encode as PNG; img.tobytes() would return raw pixels, not PNG data
    buffer = io.BytesIO()
    img.save(buffer, format="PNG")
    return Image(data=buffer.getvalue(), format="png")
```

Context

The Context object gives your tools and resources access to MCP capabilities:

```python
from mcp.server.fastmcp import FastMCP, Context

mcp = FastMCP("My App")


@mcp.tool()
async def long_task(files: list[str], ctx: Context) -> str:
    """Process multiple files with progress tracking"""
    for i, file in enumerate(files):
        # Context logging helpers are coroutines and must be awaited
        await ctx.info(f"Processing {file}")
        await ctx.report_progress(i, len(files))
        # read_resource returns an iterable of resource contents
        contents = await ctx.read_resource(f"file://{file}")
    return "Processing complete"
```

Completions

MCP supports providing completion suggestions for prompt arguments and resource template parameters. With the context parameter, servers can provide completions based on previously resolved values:

Client usage:

```python
from mcp.client.session import ClientSession
from mcp.types import ResourceTemplateReference


async def use_completion(session: ClientSession):
    # Complete without context
    result = await session.complete(
        ref=ResourceTemplateReference(
            type="ref/resource", uri="github://repos/{owner}/{repo}"
        ),
        argument={"name": "owner", "value": "model"},
    )

    # Complete with context - repo suggestions based on owner
    result = await session.complete(
        ref=ResourceTemplateReference(
            type="ref/resource", uri="github://repos/{owner}/{repo}"
        ),
        argument={"name": "repo", "value": "test"},
        context_arguments={"owner": "modelcontextprotocol"},
    )
```

Server implementation:

```python
from mcp.server import Server
from mcp.types import (
    Completion,
    CompletionArgument,
    CompletionContext,
    PromptReference,
    ResourceTemplateReference,
)

server = Server("example-server")


@server.completion()
async def handle_completion(
    ref: PromptReference | ResourceTemplateReference,
    argument: CompletionArgument,
    context: CompletionContext | None,
) -> Completion | None:
    if isinstance(ref, ResourceTemplateReference):
        if ref.uri == "github://repos/{owner}/{repo}" and argument.name == "repo":
            # Use context to provide owner-specific repos
            if context and context.arguments:
                owner = context.arguments.get("owner")
                if owner == "modelcontextprotocol":
                    repos = ["python-sdk", "typescript-sdk", "specification"]
                    # Filter based on partial input
                    filtered = [r for r in repos if r.startswith(argument.value)]
                    return Completion(values=filtered)
    return None
```

Elicitation

Request additional information from users during tool execution:

```python
from mcp.server.fastmcp import FastMCP, Context
from mcp.server.elicitation import (
    AcceptedElicitation,
    DeclinedElicitation,
    CancelledElicitation,
)
from pydantic import BaseModel, Field

mcp = FastMCP("Booking System")


@mcp.tool()
async def book_table(date: str, party_size: int, ctx: Context) -> str:
    """Book a table with confirmation"""

    # Schema must only contain primitive types (str, int, float, bool)
    class ConfirmBooking(BaseModel):
        confirm: bool = Field(description="Confirm booking?")
        notes: str = Field(default="", description="Special requests")

    result = await ctx.elicit(
        message=f"Confirm booking for {party_size} on {date}?", schema=ConfirmBooking
    )

    match result:
        case AcceptedElicitation(data=data):
            if data.confirm:
                return f"Booked! Notes: {data.notes or 'None'}"
            return "Booking cancelled"
        case DeclinedElicitation():
            return "Booking declined"
        case CancelledElicitation():
            return "Booking cancelled"
```

The `elicit()` method returns an `ElicitationResult` with:

- `action`: "accept", "decline", or "cancel"
- `data`: The validated response (only when accepted)
- `validation_error`: Any validation error message
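The comment in the example says elicitation schemas may only contain primitive types. As a hedged, stdlib-only illustration of that restriction, the sketch below checks a JSON Schema dict (the form a Pydantic model such as `ConfirmBooking` serializes to) for flat, primitive-typed properties. `is_primitive_schema` is a hypothetical helper, not an SDK function, and the SDK's own validation may be stricter or differ in detail.

```python
PRIMITIVE_JSON_TYPES = {"string", "integer", "number", "boolean"}


def is_primitive_schema(schema: dict) -> bool:
    """Return True if every top-level property has a primitive JSON type."""
    properties = schema.get("properties", {})
    return all(
        prop.get("type") in PRIMITIVE_JSON_TYPES for prop in properties.values()
    )


# Roughly the JSON Schema of ConfirmBooking, trimmed by hand (titles removed)
confirm_booking = {
    "type": "object",
    "properties": {
        "confirm": {"type": "boolean", "description": "Confirm booking?"},
        "notes": {"type": "string", "default": "", "description": "Special requests"},
    },
    "required": ["confirm"],
}

# A nested object property would not be allowed
nested = {"type": "object", "properties": {"guest": {"type": "object"}}}

print(is_primitive_schema(confirm_booking), is_primitive_schema(nested))
```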

Authentication

Authentication can be used by servers that want to expose tools accessing protected resources.

`mcp.server.auth` implements an OAuth 2.0 server interface, which servers can use by providing an implementation of the `OAuthAuthorizationServerProvider` protocol.

```python
from mcp.server.fastmcp import FastMCP
from mcp.server.auth.provider import OAuthAuthorizationServerProvider
from mcp.server.auth.settings import (
    AuthSettings,
    ClientRegistrationOptions,
    RevocationOptions,
)


class MyOAuthServerProvider(OAuthAuthorizationServerProvider):
    # See `examples/servers/simple-auth` for an example implementation
    ...


mcp = FastMCP(
    "My App",
    auth_server_provider=MyOAuthServerProvider(),
    auth=AuthSettings(
        issuer_url="https://myapp.com",
        revocation_options=RevocationOptions(
            enabled=True,
        ),
        client_registration_options=ClientRegistrationOptions(
            enabled=True,
            valid_scopes=["myscope", "myotherscope"],
            default_scopes=["myscope"],
        ),
        required_scopes=["myscope"],
    ),
)
```

See `OAuthAuthorizationServerProvider` for more details.

Running Your Server

Development Mode

The fastest way to test and debug your server is with the MCP Inspector:

```bash
mcp dev server.py

# Add dependencies
mcp dev server.py --with pandas --with numpy

# Mount local code
mcp dev server.py --with-editable .
```

Claude Desktop Integration

Once your server is ready, install it in Claude Desktop:

```bash
mcp install server.py

# Custom name
mcp install server.py --name "My Analytics Server"

# Environment variables
mcp install server.py -v API_KEY=abc123 -v DB_URL=postgres://...
mcp install server.py -f .env
```

Direct Execution

For advanced scenarios like custom deployments:

```python
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("My App")

if __name__ == "__main__":
    mcp.run()
```

Run it with:

```bash
python server.py
# or
mcp run server.py
```

Note that `mcp run` and `mcp dev` only support servers built with FastMCP, not the low-level server variant.

Streamable HTTP Transport

Note: Streamable HTTP transport is superseding SSE transport for production deployments.

```python
from mcp.server.fastmcp import FastMCP

# Stateful server (maintains session state)
mcp = FastMCP("StatefulServer")

# Stateless server (no session persistence)
mcp = FastMCP("StatelessServer", stateless_http=True)

# Stateless server (no session persistence, no SSE stream with supported client)
mcp = FastMCP("StatelessServer", stateless_http=True, json_response=True)

# Run server with streamable_http transport
mcp.run(transport="streamable-http")
```

You can mount multiple FastMCP servers in a FastAPI application:

```python
# echo.py
from mcp.server.fastmcp import FastMCP

mcp = FastMCP(name="EchoServer", stateless_http=True)


@mcp.tool(description="A simple echo tool")
def echo(message: str) -> str:
    return f"Echo: {message}"
```

```python
# math.py
from mcp.server.fastmcp import FastMCP

mcp = FastMCP(name="MathServer", stateless_http=True)


@mcp.tool(description="A simple add tool")
def add_two(n: int) -> int:
    return n + 2
```

```python
# main.py
import contextlib

from fastapi import FastAPI

# Assumption: echo.py and math.py live in a local package (here called
# "servers"), so they clash neither with the `mcp` SDK package nor with
# the standard-library `math` module.
from servers import echo, math


# Create a combined lifespan to manage both session managers
@contextlib.asynccontextmanager
async def lifespan(app: FastAPI):
    async with contextlib.AsyncExitStack() as stack:
        await stack.enter_async_context(echo.mcp.session_manager.run())
        await stack.enter_async_context(math.mcp.session_manager.run())
        yield


app = FastAPI(lifespan=lifespan)
app.mount("/echo", echo.mcp.streamable_http_app())
app.mount("/math", math.mcp.streamable_http_app())
```

For low-level server implementations of Streamable HTTP, see:

- Stateful server: examples/servers/simple-streamablehttp/
- Stateless server: examples/servers/simple-streamablehttp-stateless/

The streamable HTTP transport supports:

- Stateful and stateless operation modes

- Resumability with event stores

- JSON or SSE response formats

- Better scalability for multi-node deployments
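At the wire level, a Streamable HTTP client sends each JSON-RPC message as an HTTP POST to the server's single MCP endpoint. The stdlib sketch below only builds (and does not send) an `initialize` request; the `http://127.0.0.1:8000/mcp` URL assumes a local server on port 8000 with the default `/mcp` path, and the client name is a hypothetical placeholder. The `Accept` header lists both formats because the server may answer with a plain JSON body or an SSE stream.

```python
import json
import urllib.request

MCP_ENDPOINT = "http://127.0.0.1:8000/mcp"  # assumption: local server, default path

initialize = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-03-26",  # spec revision that introduced Streamable HTTP
        "capabilities": {},
        "clientInfo": {"name": "sketch-client", "version": "0.1.0"},  # hypothetical
    },
}

request = urllib.request.Request(
    MCP_ENDPOINT,
    data=json.dumps(initialize).encode("utf-8"),
    method="POST",
    headers={
        "Content-Type": "application/json",
        # The server may reply with JSON or with an SSE stream.
        "Accept": "application/json, text/event-stream",
    },
)

# A stateful server answers with an Mcp-Session-Id header that the client
# echoes on later requests; a stateless server does not track sessions.
# urllib.request.urlopen(request) would actually send it.
print(request.get_method(), request.full_url)
```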

Mounting to an Existing ASGI Server

Note: SSE transport is being superseded by Streamable HTTP transport.

By default, SSE servers are mounted at /sse and Streamable HTTP servers are mounted at /mcp. You can customize these paths using the methods described below.

You can mount the SSE server to an existing ASGI server using the sse_app method. This allows you to integrate the SSE server with other ASGI applications.

```python
from starlette.applications import Starlette
from starlette.routing import Mount, Host

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("My App")

# Mount the SSE server to the existing ASGI server
app = Starlette(
    routes=[
        Mount("/", app=mcp.sse_app()),
    ]
)

# or dynamically mount as host
app.router.routes.append(Host("mcp.acme.corp", app=mcp.sse_app()))
```

When mounting multiple MCP servers under different paths, you can configure the mount path in several ways:

```python
from starlette.applications import Starlette
from starlette.routing import Mount

from mcp.server.fastmcp import FastMCP

# Create multiple MCP servers
github_mcp = FastMCP("GitHub API")
browser_mcp = FastMCP("Browser")
curl_mcp = FastMCP("Curl")
search_mcp = FastMCP("Search")

# Method 1: Configure mount paths via settings (recommended for persistent configuration)
github_mcp.settings.mount_path = "/github"
browser_mcp.settings.mount_path = "/browser"

# Method 2: Pass mount path directly to sse_app (preferred for ad-hoc mounting)
# This approach doesn't modify the server's settings permanently

# Create Starlette app with multiple mounted servers
app = Starlette(
    routes=[
        # Using settings-based configuration
        Mount("/github", app=github_mcp.sse_app()),
        Mount("/browser", app=browser_mcp.sse_app()),
        # Using direct mount path parameter
        Mount("/curl", app=curl_mcp.sse_app("/curl")),
        Mount("/search", app=search_mcp.sse_app("/search")),
    ]
)

# Method 3: For direct execution, you can also pass the mount path to run()
if __name__ == "__main__":
    search_mcp.run(transport="sse", mount_path="/search")
```

For more information on mounting applications in Starlette, see the Starlette documentation.
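Why does the server need to know its mount path at all? An SSE server exposes two routes: a stream the client opens with GET and a message endpoint the client POSTs to, and the server tells the client where that message endpoint lives. The sketch below just computes the resulting paths; `sse_routes` is a hypothetical helper, and the `/sse` and `/messages/` defaults are assumptions based on FastMCP's settings.

```python
def sse_routes(
    mount_path: str = "/", sse_path: str = "/sse", message_path: str = "/messages/"
) -> dict[str, str]:
    """Return the full paths a client uses for an SSE server mounted at mount_path."""
    prefix = mount_path.rstrip("/")
    return {
        "sse": prefix + sse_path,  # GET: long-lived event stream
        "messages": prefix + message_path,  # POST: client-to-server messages
    }


for mount in ["/", "/github", "/search"]:
    print(mount, sse_routes(mount))
```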

Examples

Echo Server

A simple server demonstrating resources, tools, and prompts:

```python
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Echo")


@mcp.resource("echo://{message}")
def echo_resource(message: str) -> str:
    """Echo a message as a resource"""
    return f"Resource echo: {message}"


@mcp.tool()
def echo_tool(message: str) -> str:
    """Echo a message as a tool"""
    return f"Tool echo: {message}"


@mcp.prompt()
def echo_prompt(message: str) -> str:
    """Create an echo prompt"""
    return f"Please process this message: {message}"
```

SQLite Explorer

A more complex example showing database integration:

```python
import sqlite3

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("SQLite Explorer")


@mcp.resource("schema://main")
def get_schema() -> str:
    """Provide the database schema as a resource"""
    conn = sqlite3.connect("database.db")
    try:
        schema = conn.execute("SELECT sql FROM sqlite_master WHERE type='table'").fetchall()
        return "\n".join(sql[0] for sql in schema if sql[0])
    finally:
        conn.close()


@mcp.tool()
def query_data(sql: str) -> str:
    """Execute a SQL query and return the rows as text"""
    # Runs arbitrary SQL from the caller; consider a read-only connection
    conn = sqlite3.connect("database.db")
    try:
        result = conn.execute(sql).fetchall()
        return "\n".join(str(row) for row in result)
    except Exception as e:
        return f"Error: {str(e)}"
    finally:
        conn.close()
```

Advanced Usage

Low-Level Server

For more control, you can use the low-level server implementation directly. This gives you full access to the protocol and allows you to customize every aspect of your server, including lifecycle management through the lifespan API:

```python
from contextlib import asynccontextmanager
from collections.abc import AsyncIterator

from fake_database import Database  # Replace with your actual DB type

from mcp.server import Server


@asynccontextmanager
async def server_lifespan(server: Server) -> AsyncIterator[dict]:
    """Manage server startup and shutdown lifecycle."""
    # Initialize resources on startup
    db = await Database.connect()
    try:
        yield {"db": db}
    finally:
        # Clean up on shutdown
        await db.disconnect()


# Pass lifespan to server
server = Server("example-server", lifespan=server_lifespan)


# Access lifespan context in handlers
@server.call_tool()
async def query_db(name: str, arguments: dict) -> list:
    ctx = server.request_context
    db = ctx.lifespan_context["db"]
    return await db.query(arguments["query"])
```

The lifespan API provides:

- A way to initialize resources when the server starts and clean them up when it stops

- Access to initialized resources through the request context in handlers

- Type-safe context passing between lifespan and request handlers
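The lifespan pattern itself is plain `contextlib`. The stdlib-only sketch below mimics what the server does with a lifespan: enter the context manager once at startup, hand the yielded object to every handler, and run the `finally` cleanup at shutdown. `FakeDatabase`, `handle_request`, and `serve` are hypothetical stand-ins, not SDK APIs.

```python
import asyncio
from collections.abc import AsyncIterator
from contextlib import asynccontextmanager

events: list[str] = []


class FakeDatabase:
    """Hypothetical stand-in for a real async database client."""

    async def query(self, sql: str) -> str:
        return f"result of {sql!r}"


@asynccontextmanager
async def lifespan() -> AsyncIterator[dict]:
    events.append("startup")
    db = FakeDatabase()
    try:
        yield {"db": db}  # plays the role of the lifespan context
    finally:
        events.append("shutdown")


async def handle_request(lifespan_context: dict, sql: str) -> str:
    # Handlers read already-initialized resources from the shared context.
    return await lifespan_context["db"].query(sql)


async def serve(requests: list[str]) -> list[str]:
    # Enter the lifespan once, handle every request, then clean up.
    async with lifespan() as ctx:
        results = [await handle_request(ctx, sql) for sql in requests]
        events.append("served")
    return results


results = asyncio.run(serve(["SELECT 1", "SELECT 2"]))
print(events, results)
```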

```python
import mcp.server.stdio
import mcp.types as types
from mcp.server.lowlevel import NotificationOptions, Server
from mcp.server.models import InitializationOptions

# Create a server instance
server = Server("example-server")


@server.list_prompts()
async def handle_list_prompts() -> list[types.Prompt]:
    return [
        types.Prompt(
            name="example-prompt",
            description="An example prompt template",
            arguments=[
                types.PromptArgument(
                    name="arg1", description="Example argument", required=True
                )
            ],
        )
    ]


@server.get_prompt()
async def handle_get_prompt(
    name: str, arguments: dict[str, str] | None
) -> types.GetPromptResult:
    if name != "example-prompt":
        raise ValueError(f"Unknown prompt: {name}")

    return types.GetPromptResult(
        description="Example prompt",
        messages=[
            types.PromptMessage(
                role="user",
                content=types.TextContent(type="text", text="Example prompt text"),
            )
        ],
    )


async def run():
    async with mcp.server.stdio.stdio_server() as (read_stream, write_stream):
        await server.run(
            read_stream,
            write_stream,
            InitializationOptions(
                server_name="example",
                server_version="0.1.0",
                capabilities=server.get_capabilities(
                    notification_options=NotificationOptions(),
                    experimental_capabilities={},
                ),
            ),
        )


if __name__ == "__main__":
    import asyncio

    asyncio.run(run())
```

Caution: The `mcp run` and `mcp dev` tools don't support the low-level server.

Writing MCP Clients

The SDK provides a high-level client interface for connecting to MCP servers using various transports:

```python
from pydantic import AnyUrl

from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client
from mcp.shared.context import RequestContext

# Create server parameters for stdio connection
server_params = StdioServerParameters(
    command="python",  # Executable
    args=["example_server.py"],  # Optional command line arguments
    env=None,  # Optional environment variables
)


# Optional: create a sampling callback (receives the request context and params)
async def handle_sampling_message(
    context: RequestContext, params: types.CreateMessageRequestParams
) -> types.CreateMessageResult:
    return types.CreateMessageResult(
        role="assistant",
        content=types.TextContent(
            type="text",
            text="Hello, world! from model",
        ),
        model="gpt-3.5-turbo",
        stopReason="endTurn",
    )


async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(
            read, write, sampling_callback=handle_sampling_message
        ) as session:
            # Initialize the connection
            await session.initialize()

            # List available prompts
            prompts = await session.list_prompts()

            # Get a prompt
            prompt = await session.get_prompt(
                "example-prompt", arguments={"arg1": "value"}
            )

            # List available resources
            resources = await session.list_resources()

            # List available tools
            tools = await session.list_tools()

            # Read a resource (returns a ReadResourceResult with a `contents` list)
            resource = await session.read_resource(AnyUrl("file://some/path"))

            # Call a tool
            result = await session.call_tool("tool-name", arguments={"arg1": "value"})


if __name__ == "__main__":
    import asyncio

    asyncio.run(run())
```

Clients can also connect using Streamable HTTP transport:

```python
import asyncio

from mcp.client.streamable_http import streamablehttp_client
from mcp import ClientSession


async def main():
    # Connect to a streamable HTTP server (FastMCP serves /mcp on port 8000 by default)
    async with streamablehttp_client("http://localhost:8000/mcp") as (
        read_stream,
        write_stream,
        _,
    ):
        # Create a session using the client streams
        async with ClientSession(read_stream, write_stream) as session:
            # Initialize the connection
            await session.initialize()
            # Call a tool
            tool_result = await session.call_tool("echo", {"message": "hello"})


if __name__ == "__main__":
    asyncio.run(main())
```

Client Display Utilities

When building MCP clients, the SDK provides utilities to help display human-readable names for tools, resources, and prompts:

```python
from mcp.shared.metadata_utils import get_display_name
from mcp.client.session import ClientSession


async def display_tools(session: ClientSession):
    """Display available tools with human-readable names"""
    tools_response = await session.list_tools()

    for tool in tools_response.tools:
        # get_display_name() returns the title if available, otherwise the name
        display_name = get_display_name(tool)
        print(f"Tool: {display_name}")
        if tool.description:
            print(f" {tool.description}")


async def display_resources(session: ClientSession):
    """Display available resources with human-readable names"""
    resources_response = await session.list_resources()

    for resource in resources_response.resources:
        display_name = get_display_name(resource)
        print(f"Resource: {display_name} ({resource.uri})")
```

The `get_display_name()` function implements the proper precedence rules for displaying names:

- For tools: `title` > `annotations.title` > `name`
- For other objects: `title` > `name`

This ensures your client UI shows the most user-friendly names that servers provide.
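The precedence rule is simple enough to restate without the SDK. The sketch below reimplements it over hypothetical dataclasses rather than the real `mcp.types` models, so it only illustrates the ordering; in a real client, call `get_display_name()` itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ToolAnnotations:
    title: str | None = None


@dataclass
class Tool:
    name: str
    title: str | None = None
    annotations: ToolAnnotations | None = None


@dataclass
class Resource:
    name: str
    uri: str
    title: str | None = None


def display_name(obj: Tool | Resource) -> str:
    """title, then annotations.title (tools only), then name."""
    if obj.title:
        return obj.title
    if isinstance(obj, Tool) and obj.annotations and obj.annotations.title:
        return obj.annotations.title
    return obj.name


print(display_name(Tool(name="calc", annotations=ToolAnnotations(title="Calculator"))))
```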

OAuth Authentication for Clients

The SDK includes authorization support for connecting to protected MCP servers:

```python
from mcp.client.auth import OAuthClientProvider, TokenStorage
from mcp.client.session import ClientSession
from mcp.client.streamable_http import streamablehttp_client
from mcp.shared.auth import OAuthClientInformationFull, OAuthClientMetadata, OAuthToken


class CustomTokenStorage(TokenStorage):
    """Simple in-memory token storage implementation."""

    def __init__(self) -> None:
        self._tokens: OAuthToken | None = None
        self._client_info: OAuthClientInformationFull | None = None

    async def get_tokens(self) -> OAuthToken | None:
        return self._tokens

    async def set_tokens(self, tokens: OAuthToken) -> None:
        self._tokens = tokens

    async def get_client_info(self) -> OAuthClientInformationFull | None:
        return self._client_info

    async def set_client_info(self, client_info: OAuthClientInformationFull) -> None:
        self._client_info = client_info


async def handle_redirect(auth_url: str) -> None:
    # Send the user to the authorization page
    print(f"Visit: {auth_url}")


async def handle_callback() -> tuple[str, str | None]:
    # Return (authorization_code, state) from your redirect endpoint; placeholders here
    return "auth_code", None


async def main():
    # Set up OAuth authentication (the handlers are awaited, so they must be async)
    oauth_auth = OAuthClientProvider(
        server_url="https://api.example.com",
        client_metadata=OAuthClientMetadata(
            client_name="My Client",
            redirect_uris=["http://localhost:3000/callback"],
            grant_types=["authorization_code", "refresh_token"],
            response_types=["code"],
        ),
        storage=CustomTokenStorage(),
        redirect_handler=handle_redirect,
        callback_handler=handle_callback,
    )

    # Use with streamable HTTP client
    async with streamablehttp_client(
        "https://api.example.com/mcp", auth=oauth_auth
    ) as (read, write, _):
        async with ClientSession(read, write) as session:
            await session.initialize()
            # Authenticated session ready
```

For a complete working example, see examples/clients/simple-auth-client/.
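The authorization-code flow above relies on PKCE (RFC 7636), which OAuth 2.1 and the MCP authorization spec require; `OAuthClientProvider` takes care of it for you. For reference only, the stdlib sketch below shows the `S256` computation: the client keeps a random `code_verifier` secret and sends only its hashed `code_challenge` with the authorization request. The helper names are hypothetical.

```python
import base64
import hashlib
import secrets


def make_code_verifier() -> str:
    """A high-entropy, URL-safe verifier (RFC 7636 allows 43-128 characters)."""
    return secrets.token_urlsafe(64)  # 64 random bytes -> 86 URL-safe characters


def code_challenge_s256(verifier: str) -> str:
    """BASE64URL(SHA256(verifier)) with the '=' padding stripped."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


verifier = make_code_verifier()
challenge = code_challenge_s256(verifier)
print(len(verifier), challenge)
```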

MCP Primitives

The MCP protocol defines three core primitives that servers can implement:

| Primitive | Control | Description | Example Use |
|---|---|---|---|
| Prompts | User-controlled | Interactive templates invoked by user choice | Slash commands, menu options |
| Resources | Application-controlled | Contextual data managed by the client application | File contents, API responses |
| Tools | Model-controlled | Functions exposed to the LLM to take actions | API calls, data updates |

Server Capabilities

MCP servers declare capabilities during initialization:

| Capability | Feature Flag | Description |
|---|---|---|
| `prompts` | `listChanged` | Prompt template management |
| `resources` | `subscribe`, `listChanged` | Resource exposure and updates |
| `tools` | `listChanged` | Tool discovery and execution |
| `logging` | - | Server logging configuration |
| `completion` | - | Argument completion suggestions |

Documentation

- Model Context Protocol documentation

- Model Context Protocol specification

- Officially supported servers

Contributing

We are passionate about supporting contributors of all levels of experience and would love to see you get involved in the project. See the contributing guide to get started.

License

This project is licensed under the MIT License - see the LICENSE file for details.

Introduction

Get started with the Model Context Protocol (MCP)

MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

Why MCP?

MCP helps you build agents and complex workflows on top of LLMs. LLMs frequently need to integrate with data and tools, and MCP provides:

- A growing list of pre-built integrations that your LLM can directly plug into

- The flexibility to switch between LLM providers and vendors

- Best practices for securing your data within your infrastructure

General architecture

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers:

```mermaid
flowchart LR
    subgraph "Your Computer"
        Host["Host with MCP Client\n(Claude, IDEs, Tools)"]
        S1["MCP Server A"]
        S2["MCP Server B"]
        S3["MCP Server C"]
        Host <-->|"MCP Protocol"| S1
        Host <-->|"MCP Protocol"| S2
        Host <-->|"MCP Protocol"| S3
        S1 <--> D1[("Local\nData Source A")]
        S2 <--> D2[("Local\nData Source B")]
    end
    subgraph "Internet"
        S3 <-->|"Web APIs"| D3[("Remote\nService C")]
    end
```

- MCP Hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP

- MCP Clients: Protocol clients that maintain 1:1 connections with servers

- MCP Servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol

- Local Data Sources: Your computer's files, databases, and services that MCP servers can securely access

- Remote Services: External systems available over the internet (e.g., through APIs) that MCP servers can connect to

Get started

Choose the path that best fits your needs:

Quick Starts

- Get started building your own server to use in Claude for Desktop and other clients
- Get started building your own client that can integrate with all MCP servers
- Get started using pre-built servers in Claude for Desktop

Examples

- Check out our gallery of official MCP servers and implementations
- View the list of clients that support MCP integrations

Tutorials

- Learn how to use LLMs like Claude to speed up your MCP development
- Learn how to effectively debug MCP servers and integrations
- Test and inspect your MCP servers with our interactive debugging tool

Explore MCP

Dive deeper into MCP's core concepts and capabilities:

- Understand how MCP connects clients, servers, and LLMs
- Expose data and content from your servers to LLMs
- Create reusable prompt templates and workflows
- Enable LLMs to perform actions through your server
- Let your servers request completions from LLMs
- Learn about MCP's communication mechanism

Contributing

Want to contribute? Check out our Contributing Guide to learn how you can help improve MCP.

Support and Feedback

Here's how to get help or provide feedback:

- For bug reports and feature requests related to the MCP specification, SDKs, or documentation (open source), please create a GitHub issue

- For discussions or Q&A about the MCP specification, use the specification discussions

- For discussions or Q&A about other MCP open source components, use the organization discussions

- For bug reports, feature requests, and questions related to Claude.app and claude.ai's MCP integration, please see Anthropic's guide on How to Get Support

For Server Developers

Get started building your own server to use in Claude for Desktop and other clients.

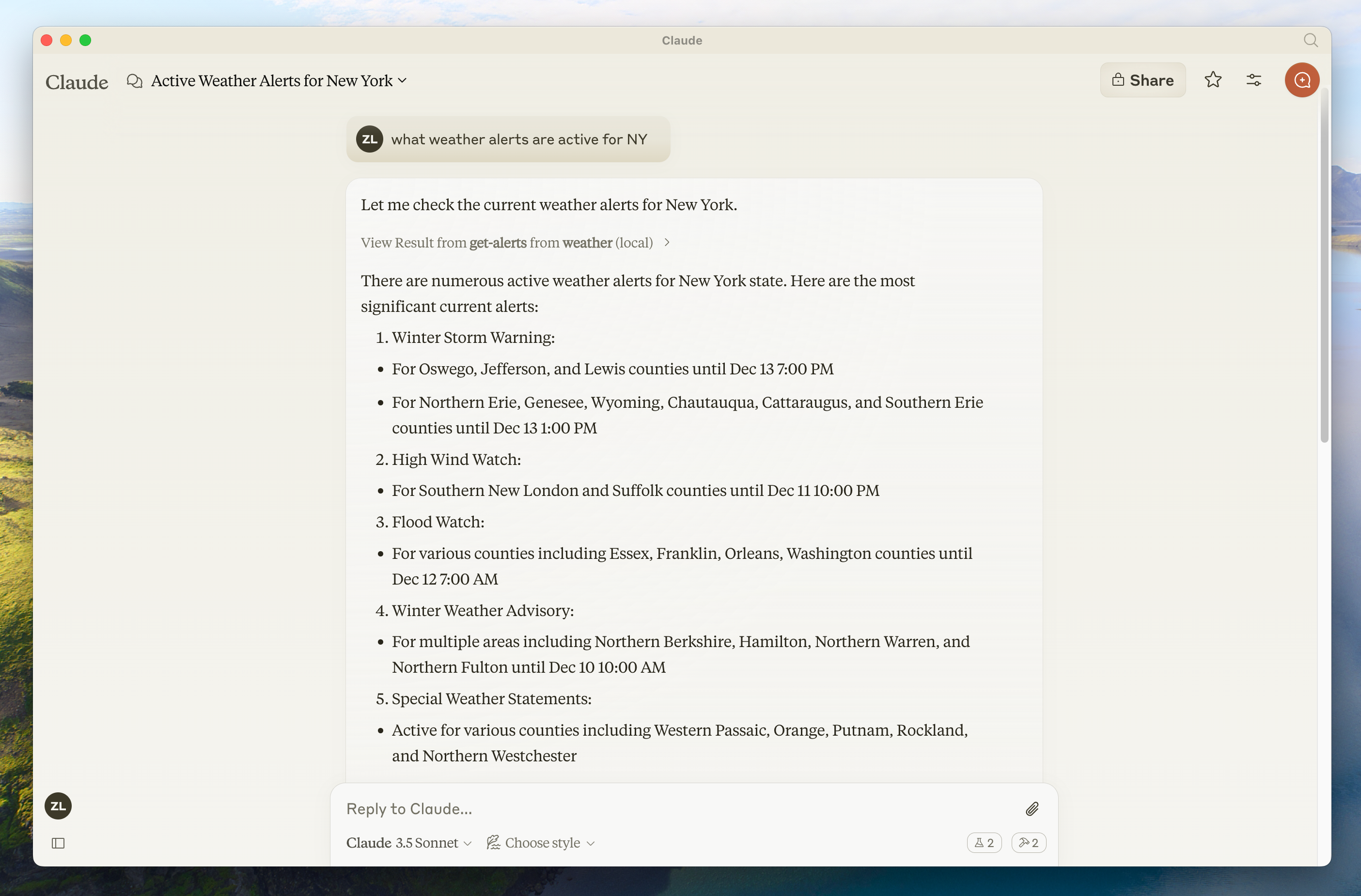

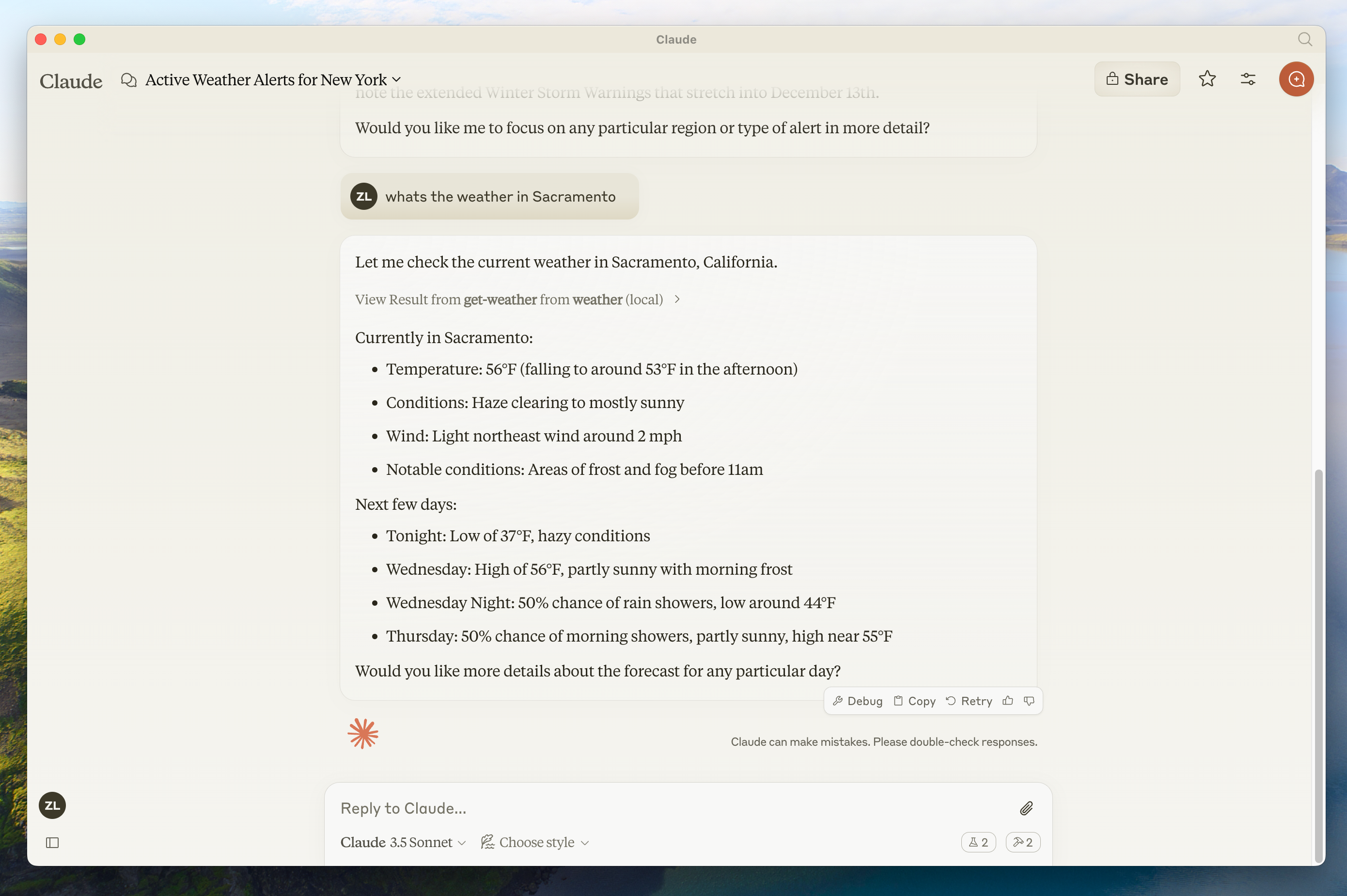

In this tutorial, we'll build a simple MCP weather server and connect it to a host, Claude for Desktop. We'll start with a basic setup, and then progress to more complex use cases.

What we'll be building

Many LLMs do not currently have the ability to fetch the forecast and severe weather alerts. Let's use MCP to solve that!

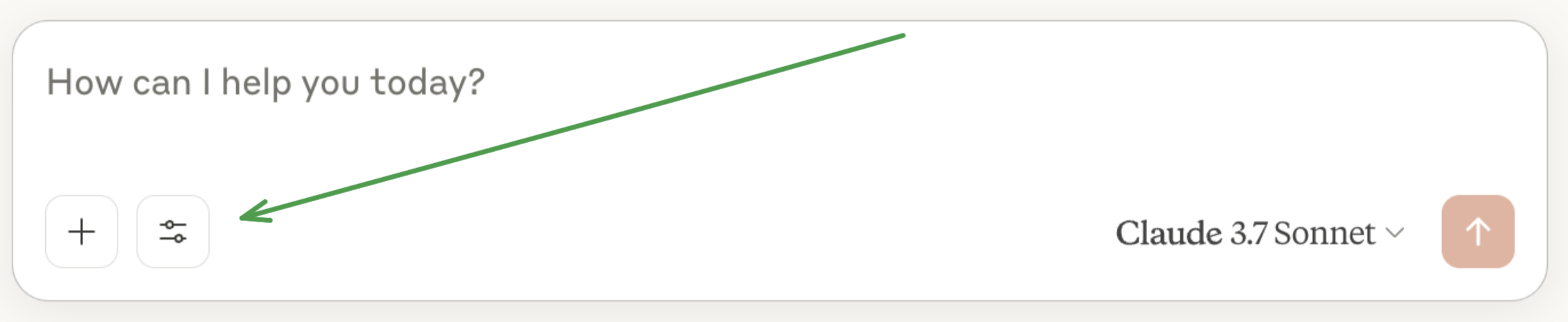

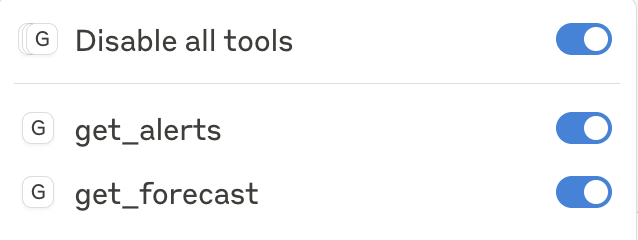

We'll build a server that exposes two tools: get-alerts and get-forecast. Then we'll connect the server to an MCP host (in this case, Claude for Desktop).

Core MCP Concepts

MCP servers can provide three main types of capabilities:

- Resources: File-like data that can be read by clients (like API responses or file contents)

- Tools: Functions that can be called by the LLM (with user approval)

- Prompts: Pre-written templates that help users accomplish specific tasks

This tutorial will primarily focus on tools.

Let's get started with building our weather server! [You can find the complete code for what we'll be building here.](https://github.com/modelcontextprotocol/quickstart-resources/tree/main/weather-server-python)

### Prerequisite knowledge

This quickstart assumes you have familiarity with:

* Python

* LLMs like Claude

### System requirements

* Python 3.10 or higher installed.

* You must use the Python MCP SDK 1.2.0 or higher.

### Set up your environment

First, let's install `uv` and set up our Python project and environment:

<CodeGroup>

```bash MacOS/Linux

curl -LsSf https://astral.sh/uv/install.sh | sh

```

```powershell Windows

powershell -ExecutionPolicy ByPass -c "irm https://astral.sh/uv/install.ps1 | iex"

```

</CodeGroup>

Make sure to restart your terminal afterwards to ensure that the `uv` command gets picked up.

Now, let's create and set up our project:

<CodeGroup>

```bash MacOS/Linux

# Create a new directory for our project

uv init weather

cd weather

# Create virtual environment and activate it

uv venv

source .venv/bin/activate

# Install dependencies

uv add "mcp[cli]" httpx

# Create our server file

touch weather.py

```

```powershell Windows

# Create a new directory for our project

uv init weather

cd weather

# Create virtual environment and activate it

uv venv

.venv\Scripts\activate

# Install dependencies

uv add "mcp[cli]" httpx

# Create our server file

new-item weather.py

```

</CodeGroup>

Now let's dive into building your server.

## Building your server

### Importing packages and setting up the instance

Add these to the top of your `weather.py`:

```python

from typing import Any

import httpx

from mcp.server.fastmcp import FastMCP

# Initialize FastMCP server

mcp = FastMCP("weather")

# Constants

NWS_API_BASE = "https://api.weather.gov"

USER_AGENT = "weather-app/1.0"

```

The FastMCP class uses Python type hints and docstrings to automatically generate tool definitions, making it easy to create and maintain MCP tools.
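To make the idea concrete, here is a deliberately simplified, stdlib-only sketch of turning a function's signature and docstring into a tool definition with a JSON Schema `inputSchema`. It is not FastMCP's actual implementation, which supports far more types and validation; the type mapping and the `sketch_tool_definition` helper are assumptions for illustration.

```python
import inspect
from typing import get_type_hints

# Simplified mapping from Python annotations to JSON Schema types (illustrative only)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def sketch_tool_definition(func) -> dict:
    """Derive a rough tool definition from a function's signature and docstring.

    Not FastMCP's implementation; it only illustrates the idea.
    """
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are required
    doc = inspect.getdoc(func) or ""
    return {
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


async def get_forecast(latitude: float, longitude: float) -> str:
    """Get weather forecast for a location."""
    return ""


print(sketch_tool_definition(get_forecast))
```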

### Helper functions

Next, let's add our helper functions for querying and formatting the data from the National Weather Service API:

```python
async def make_nws_request(url: str) -> dict[str, Any] | None:
    """Make a request to the NWS API with proper error handling."""
    headers = {
        "User-Agent": USER_AGENT,
        "Accept": "application/geo+json",
    }
    async with httpx.AsyncClient() as client:
        try:
            response = await client.get(url, headers=headers, timeout=30.0)
            response.raise_for_status()
            return response.json()
        except Exception:
            # Network errors, non-2xx statuses, and bad JSON are all reported as "no data"
            return None


def format_alert(feature: dict) -> str:
    """Format an alert feature into a readable string."""
    props = feature["properties"]
    # NWS may send "instruction" as null, so fall back with `or` rather than a .get() default
    return f"""
Event: {props.get('event', 'Unknown')}
Area: {props.get('areaDesc', 'Unknown')}
Severity: {props.get('severity', 'Unknown')}
Description: {props.get('description', 'No description available')}
Instructions: {props.get('instruction') or 'No specific instructions provided'}
"""
```
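One subtlety in `format_alert`: `props.get(key, default)` only falls back when the key is absent. If the API sends the key with a `null` value (the Kotlin version of this server models `instruction` as nullable for that reason), `.get()` returns `None` and the alert would print `None`. A small illustration with made-up alert properties; the `field_or_default` helper is hypothetical:

```python
def field_or_default(props: dict, key: str, default: str) -> str:
    """Return props[key], falling back to default when the key is missing or null."""
    # Note: `or` also treats an empty string as missing
    return props.get(key) or default


# Made-up alert properties where "instruction" is present but null
props = {"event": "Heat Advisory", "instruction": None}

print(props.get("instruction", "No specific instructions provided"))  # prints: None
print(field_or_default(props, "instruction", "No specific instructions provided"))  # prints: No specific instructions provided
print(field_or_default(props, "event", "Unknown"))  # prints: Heat Advisory
```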

### Implementing tool execution

Each function decorated with `@mcp.tool()` is registered as a tool, and FastMCP runs its body whenever a client calls that tool. Let's add the two tools:

```python
@mcp.tool()
async def get_alerts(state: str) -> str:
    """Get weather alerts for a US state.

    Args:
        state: Two-letter US state code (e.g. CA, NY)
    """
    # Normalize input such as "ca" to upper case, as the TypeScript version does
    url = f"{NWS_API_BASE}/alerts/active/area/{state.upper()}"
    data = await make_nws_request(url)

    if not data or "features" not in data:
        return "Unable to fetch alerts or no alerts found."

    if not data["features"]:
        return "No active alerts for this state."

    alerts = [format_alert(feature) for feature in data["features"]]
    return "\n---\n".join(alerts)


@mcp.tool()
async def get_forecast(latitude: float, longitude: float) -> str:
    """Get weather forecast for a location.

    Args:
        latitude: Latitude of the location
        longitude: Longitude of the location
    """
    # First get the forecast grid endpoint; coordinates are rounded to four
    # decimal places, matching the TypeScript version of this server
    points_url = f"{NWS_API_BASE}/points/{latitude:.4f},{longitude:.4f}"
    points_data = await make_nws_request(points_url)

    if not points_data:
        return "Unable to fetch forecast data for this location."

    # Get the forecast URL from the points response
    forecast_url = points_data["properties"]["forecast"]
    forecast_data = await make_nws_request(forecast_url)

    if not forecast_data:
        return "Unable to fetch detailed forecast."

    # Format the periods into a readable forecast
    periods = forecast_data["properties"]["periods"]
    forecasts = []
    for period in periods[:5]:  # Only show next 5 periods
        forecast = f"""
{period['name']}:
Temperature: {period['temperature']}°{period['temperatureUnit']}
Wind: {period['windSpeed']} {period['windDirection']}
Forecast: {period['detailedForecast']}
"""
        forecasts.append(forecast)

    return "\n---\n".join(forecasts)
```

### Running the server

Finally, let's initialize and run the server:

```python
if __name__ == "__main__":
    # Initialize and run the server
    mcp.run(transport='stdio')
```

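Your server is complete! Run `uv run weather.py` to confirm that everything's working. Because the server communicates over stdio, it will start and then wait silently for input from a client; press Ctrl+C to stop it.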
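A server started with the stdio transport speaks MCP over its standard input and output: the client writes one JSON-RPC message per line to the server's stdin and reads responses, also one per line, from its stdout. Anything else printed to stdout corrupts that stream, so diagnostics belong on stderr. The Python sketch below shows the framing for an `initialize` request; the client name and the `protocolVersion` value are assumptions, so use a protocol revision your SDK supports.

```python
import json
import sys


def frame(message: dict) -> str:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes any newline inside strings, so the message stays on one line
    return json.dumps(message, separators=(",", ":")) + "\n"


initialize = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",  # assumption: use a revision your SDK supports
        "capabilities": {},
        "clientInfo": {"name": "smoke-test", "version": "0.1.0"},  # hypothetical client
    },
}

# Protocol messages go to stdout; human-readable diagnostics go to stderr
sys.stdout.write(frame(initialize))
print("sent initialize request", file=sys.stderr)
```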

Let's now test your server from an existing MCP host, Claude for Desktop.

## Testing your server with Claude for Desktop

<Note>

Claude for Desktop is not yet available on Linux. Linux users can proceed to the [Building a client](/quickstart/client) tutorial to build an MCP client that connects to the server we just built.

</Note>

First, make sure you have Claude for Desktop installed. [You can install the latest version here.](https://claude.ai/download) If you already have Claude for Desktop, **make sure it's updated to the latest version.**

We'll need to configure Claude for Desktop for whichever MCP servers you want to use. To do this, open your Claude for Desktop App configuration in a text editor: `~/Library/Application Support/Claude/claude_desktop_config.json` on macOS, or `%APPDATA%\Claude\claude_desktop_config.json` on Windows. Make sure to create the file if it doesn't exist.

For example, if you have [VS Code](https://code.visualstudio.com/) installed:

<Tabs>

<Tab title="MacOS/Linux">

```bash

code ~/Library/Application\ Support/Claude/claude_desktop_config.json

```

</Tab>

<Tab title="Windows">

```powershell

code $env:AppData\Claude\claude_desktop_config.json

```

</Tab>

</Tabs>

You'll then add your servers in the `mcpServers` key. The MCP UI elements will only show up in Claude for Desktop if at least one server is properly configured.

In this case, we'll add our single weather server like so:

<Tabs>

<Tab title="MacOS/Linux">

```json Python

{

"mcpServers": {

"weather": {

"command": "uv",

"args": [

"--directory",

"/ABSOLUTE/PATH/TO/PARENT/FOLDER/weather",

"run",

"weather.py"

]

}

}

}

```

</Tab>

<Tab title="Windows">

```json Python

{

"mcpServers": {

"weather": {

"command": "uv",

"args": [

"--directory",

"C:\\ABSOLUTE\\PATH\\TO\\PARENT\\FOLDER\\weather",

"run",

"weather.py"

]

}

}

}

```

</Tab>

</Tabs>

<Warning>

You may need to put the full path to the `uv` executable in the `command` field. You can get this by running `which uv` on MacOS/Linux or `where uv` on Windows.

</Warning>

<Note>

Make sure you pass in the absolute path to your server.

</Note>

This tells Claude for Desktop:

1. There's an MCP server named "weather"

2. To launch it by running `uv --directory /ABSOLUTE/PATH/TO/PARENT/FOLDER/weather run weather.py`

Save the file, and restart **Claude for Desktop**.
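If you prefer to script the configuration step, the sketch below merges a `weather` entry into an existing config file (or creates one), resolving the full path to `uv` with `shutil.which` as the warning above suggests. The `add_weather_server` function is hypothetical; you pass in the config path for your platform and the path to your `weather` project folder. Any other servers already listed under `mcpServers` are kept.

```python
import json
import shutil
from pathlib import Path


def add_weather_server(config_path: Path, project_dir: Path) -> dict:
    """Add or replace the "weather" entry in claude_desktop_config.json and return the config."""
    text = config_path.read_text(encoding="utf-8") if config_path.exists() else ""
    config = json.loads(text) if text.strip() else {}

    # Use the absolute path to uv when it is on PATH; otherwise fall back to the bare name
    uv_command = shutil.which("uv") or "uv"

    config.setdefault("mcpServers", {})["weather"] = {
        "command": uv_command,
        # --directory must be an absolute path, so resolve it here
        "args": ["--directory", str(project_dir.resolve()), "run", "weather.py"],
    }

    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

For example, on macOS you could call `add_weather_server(Path.home() / "Library/Application Support/Claude/claude_desktop_config.json", Path("weather"))` from the folder that contains your project, then restart Claude for Desktop.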

### Prerequisite knowledge

This quickstart assumes you have familiarity with:

* TypeScript

* LLMs like Claude

### System requirements

For TypeScript, make sure you have the latest version of Node installed.

### Set up your environment

First, let's install Node.js and npm if you haven't already. You can download them from [nodejs.org](https://nodejs.org/).

Verify your Node.js installation:

```bash

node --version

npm --version

```

For this tutorial, you'll need Node.js version 18 or higher, since the server code uses the built-in `fetch` API.

Now, let's create and set up our project:

<CodeGroup>

```bash MacOS/Linux

# Create a new directory for our project

mkdir weather

cd weather

# Initialize a new npm project

npm init -y

# Install dependencies

npm install @modelcontextprotocol/sdk zod

npm install -D @types/node typescript

# Create our files

mkdir src

touch src/index.ts

```

```powershell Windows

# Create a new directory for our project

md weather

cd weather

# Initialize a new npm project

npm init -y

# Install dependencies

npm install @modelcontextprotocol/sdk zod

npm install -D @types/node typescript

# Create our files

md src

new-item src\index.ts

```

</CodeGroup>

Update your `package.json` to add `"type": "module"` and a build script, merging these keys into the file that `npm init` generated:

```json package.json

{

"type": "module",

"bin": {

"weather": "./build/index.js"

},

"scripts": {

"build": "tsc && chmod 755 build/index.js"

},

"files": ["build"]

}

```

Create a `tsconfig.json` in the root of your project:

```json tsconfig.json

{

"compilerOptions": {

"target": "ES2022",

"module": "Node16",

"moduleResolution": "Node16",

"outDir": "./build",

"rootDir": "./src",

"strict": true,

"esModuleInterop": true,

"skipLibCheck": true,

"forceConsistentCasingInFileNames": true

},

"include": ["src/**/*"],

"exclude": ["node_modules"]

}

```

Now let's dive into building your server.

## Building your server

### Importing packages and setting up the instance

Add these to the top of your `src/index.ts`:

```typescript

import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";

import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";

import { z } from "zod";

const NWS_API_BASE = "https://api.weather.gov";

const USER_AGENT = "weather-app/1.0";

// Create server instance

const server = new McpServer({

name: "weather",

version: "1.0.0",

capabilities: {

resources: {},

tools: {},

},

});

```

### Helper functions

Next, let's add our helper functions for querying and formatting the data from the National Weather Service API:

```typescript

// Helper function for making NWS API requests

async function makeNWSRequest<T>(url: string): Promise<T | null> {

const headers = {

"User-Agent": USER_AGENT,

Accept: "application/geo+json",

};

try {

const response = await fetch(url, { headers });

if (!response.ok) {

throw new Error(`HTTP error! status: ${response.status}`);

}

return (await response.json()) as T;

} catch (error) {

console.error("Error making NWS request:", error);

return null;

}

}

interface AlertFeature {

properties: {

event?: string;

areaDesc?: string;

severity?: string;

status?: string;

headline?: string;

};

}

// Format alert data

function formatAlert(feature: AlertFeature): string {

const props = feature.properties;

return [

`Event: ${props.event || "Unknown"}`,

`Area: ${props.areaDesc || "Unknown"}`,

`Severity: ${props.severity || "Unknown"}`,

`Status: ${props.status || "Unknown"}`,

`Headline: ${props.headline || "No headline"}`,

"---",

].join("\n");

}

interface ForecastPeriod {

name?: string;

temperature?: number;

temperatureUnit?: string;

windSpeed?: string;

windDirection?: string;

shortForecast?: string;

}

interface AlertsResponse {

features: AlertFeature[];

}

interface PointsResponse {

properties: {

forecast?: string;

};

}

interface ForecastResponse {

properties: {

periods: ForecastPeriod[];

};

}

```

### Implementing tool execution

The tool execution handler is responsible for actually executing the logic of each tool. Let's add it:

```typescript

// Register weather tools

server.tool(

"get-alerts",

"Get weather alerts for a state",

{

state: z.string().length(2).describe("Two-letter state code (e.g. CA, NY)"),

},

async ({ state }) => {

const stateCode = state.toUpperCase();

const alertsUrl = `${NWS_API_BASE}/alerts/active?area=${stateCode}`;

const alertsData = await makeNWSRequest<AlertsResponse>(alertsUrl);

if (!alertsData) {

return {

content: [

{

type: "text",

text: "Failed to retrieve alerts data",

},

],

};

}

const features = alertsData.features || [];

if (features.length === 0) {

return {

content: [

{

type: "text",

text: `No active alerts for ${stateCode}`,

},

],

};

}

const formattedAlerts = features.map(formatAlert);

const alertsText = `Active alerts for ${stateCode}:\n\n${formattedAlerts.join("\n")}`;

return {

content: [

{

type: "text",

text: alertsText,

},

],

};

},

);

server.tool(

"get-forecast",

"Get weather forecast for a location",

{

latitude: z.number().min(-90).max(90).describe("Latitude of the location"),

longitude: z

.number()

.min(-180)

.max(180)

.describe("Longitude of the location"),

},

async ({ latitude, longitude }) => {

// Get grid point data

const pointsUrl = `${NWS_API_BASE}/points/${latitude.toFixed(4)},${longitude.toFixed(4)}`;

const pointsData = await makeNWSRequest<PointsResponse>(pointsUrl);

if (!pointsData) {

return {

content: [

{

type: "text",

text: `Failed to retrieve grid point data for coordinates: ${latitude}, ${longitude}. This location may not be supported by the NWS API (only US locations are supported).`,

},

],

};

}

const forecastUrl = pointsData.properties?.forecast;

if (!forecastUrl) {

return {

content: [

{

type: "text",

text: "Failed to get forecast URL from grid point data",

},

],

};

}

// Get forecast data

const forecastData = await makeNWSRequest<ForecastResponse>(forecastUrl);

if (!forecastData) {

return {

content: [

{

type: "text",

text: "Failed to retrieve forecast data",

},

],

};

}

const periods = forecastData.properties?.periods || [];

if (periods.length === 0) {

return {

content: [

{

type: "text",

text: "No forecast periods available",

},

],

};

}

// Format forecast periods

const formattedForecast = periods.map((period: ForecastPeriod) =>

[

`${period.name || "Unknown"}:`,

`Temperature: ${period.temperature ?? "Unknown"}°${period.temperatureUnit || "F"}`,

`Wind: ${period.windSpeed || "Unknown"} ${period.windDirection || ""}`,

`${period.shortForecast || "No forecast available"}`,

"---",

].join("\n"),

);

const forecastText = `Forecast for ${latitude}, ${longitude}:\n\n${formattedForecast.join("\n")}`;

return {

content: [

{

type: "text",

text: forecastText,

},

],

};

},

);

```
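Each handler above returns an object of the form `{ content: [{ type: "text", text }] }`, which the SDK sends back to the client as the `result` of the JSON-RPC response to `tools/call`. The protocol also defines an optional `isError` flag on that result for tool-level failures; the handlers above report failures as ordinary text instead. A Python sketch of the envelope, with a made-up request id and text (the `text_result` helper is hypothetical):

```python
import json


def text_result(request_id: int, text: str, is_error: bool = False) -> dict:
    """Build a JSON-RPC response carrying an MCP tool result with one text item."""
    result = {"content": [{"type": "text", "text": text}]}
    if is_error:
        result["isError"] = True  # signals a tool-level failure to the client
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


print(json.dumps(text_result(2, "No active alerts for CA")))
```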

### Running the server

Finally, implement the main function to run the server:

```typescript

async function main() {

const transport = new StdioServerTransport();

await server.connect(transport);

console.error("Weather MCP Server running on stdio");

}

main().catch((error) => {

console.error("Fatal error in main():", error);

process.exit(1);

});

```

Make sure to run `npm run build` to build your server! This is a very important step in getting your server to connect.

Let's now test your server from an existing MCP host, Claude for Desktop.

## Testing your server with Claude for Desktop

<Note>

Claude for Desktop is not yet available on Linux. Linux users can proceed to the [Building a client](/quickstart/client) tutorial to build an MCP client that connects to the server we just built.

</Note>

First, make sure you have Claude for Desktop installed. [You can install the latest version here.](https://claude.ai/download) If you already have Claude for Desktop, **make sure it's updated to the latest version.**

We'll need to configure Claude for Desktop for whichever MCP servers you want to use. To do this, open your Claude for Desktop App configuration in a text editor: `~/Library/Application Support/Claude/claude_desktop_config.json` on macOS, or `%APPDATA%\Claude\claude_desktop_config.json` on Windows. Make sure to create the file if it doesn't exist.

For example, if you have [VS Code](https://code.visualstudio.com/) installed:

<Tabs>

<Tab title="MacOS/Linux">

```bash

code ~/Library/Application\ Support/Claude/claude_desktop_config.json

```

</Tab>

<Tab title="Windows">

```powershell

code $env:AppData\Claude\claude_desktop_config.json

```

</Tab>

</Tabs>

You'll then add your servers in the `mcpServers` key. The MCP UI elements will only show up in Claude for Desktop if at least one server is properly configured.

In this case, we'll add our single weather server like so:

<Tabs>

<Tab title="MacOS/Linux">

<CodeGroup>

```json Node

{

"mcpServers": {

"weather": {

"command": "node",

"args": ["/ABSOLUTE/PATH/TO/PARENT/FOLDER/weather/build/index.js"]

}

}

}

```

</CodeGroup>

</Tab>

<Tab title="Windows">

<CodeGroup>

```json Node

{

"mcpServers": {

"weather": {

"command": "node",

"args": ["C:\\PATH\\TO\\PARENT\\FOLDER\\weather\\build\\index.js"]

}

}

}

```

</CodeGroup>

</Tab>

</Tabs>
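The Windows paths in these configs use doubled backslashes because `\` starts an escape sequence inside a JSON string. If you generate the file from a script, let a JSON encoder do the escaping rather than doubling backslashes by hand. A short Python illustration with a placeholder path:

```python
import json

# A raw string keeps the backslashes literal in Python source (placeholder path)
windows_path = r"C:\ABSOLUTE\PATH\TO\PARENT\FOLDER\weather\build\index.js"

encoded = json.dumps({"args": [windows_path]})
print(encoded)  # each backslash appears doubled in the JSON text

# Decoding restores the original single-backslash path
assert json.loads(encoded)["args"][0] == windows_path
```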

This tells Claude for Desktop:

1. There's an MCP server named "weather"

2. Launch it by running `node /ABSOLUTE/PATH/TO/PARENT/FOLDER/weather/build/index.js`

Save the file, and restart **Claude for Desktop**.

Let's get started with building our weather server!

[You can find the complete code for what we'll be building here.](https://github.com/spring-projects/spring-ai-examples/tree/main/model-context-protocol/weather/starter-stdio-server)

For more information, see the [MCP Server Boot Starter](https://docs.spring.io/spring-ai/reference/api/mcp/mcp-server-boot-starter-docs.html) reference documentation.

For manual MCP Server implementation, refer to the [MCP Server Java SDK documentation](/sdk/java/mcp-server).

### System requirements

* Java 17 or higher installed.

* [Spring Boot 3.3.x](https://docs.spring.io/spring-boot/installing.html) or higher

### Set up your environment

Use the [Spring Initializr](https://start.spring.io/) to bootstrap the project.

You will need to add the following dependencies:

<Tabs>

<Tab title="Maven">

```xml

<dependencies>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-starter-mcp-server</artifactId>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

</dependency>

</dependencies>

```

</Tab>

<Tab title="Gradle">

```groovy

dependencies {

implementation "org.springframework.ai:spring-ai-starter-mcp-server"

implementation "org.springframework:spring-web"

}

```

</Tab>

</Tabs>

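Then configure your application by setting the application properties. With the STDIO transport, the server's stdout carries MCP protocol messages, so the startup banner and console logging are turned off to keep that stream clean: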

<CodeGroup>

```properties application.properties

spring.main.bannerMode=off

logging.pattern.console=

```

```yaml application.yml
logging:
  pattern:
    console:
spring:
  main:
    # Quoted because YAML would otherwise read off as the boolean false
    banner-mode: "off"
```

</CodeGroup>

The [Server Configuration Properties](https://docs.spring.io/spring-ai/reference/api/mcp/mcp-server-boot-starter-docs.html#_configuration_properties) documents all available properties.
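To see why console output has to be suppressed, consider a deliberately strict client-side reader that treats every non-empty stdout line as a JSON-RPC message. The Python sketch below is hypothetical, and real clients may handle stray output differently, but a log line printed before the first message would break this kind of parser:

```python
import json


def parse_stdio_stream(raw: str) -> list[dict]:
    """Parse newline-delimited JSON-RPC messages, rejecting any non-JSON line."""
    messages = []
    for lineno, line in enumerate(raw.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines in this sketch
        try:
            messages.append(json.loads(line))
        except json.JSONDecodeError as exc:
            raise ValueError(f"line {lineno} is not a JSON-RPC message: {line!r}") from exc
    return messages


clean = '{"jsonrpc":"2.0","id":1,"result":{}}\n'
noisy = "Started McpServerApplication in 1.2 seconds\n" + clean  # made-up log line on stdout

print(parse_stdio_stream(clean))
try:
    parse_stdio_stream(noisy)
except ValueError as err:
    print(err)
```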

Now let's dive into building your server.

## Building your server

### Weather Service

Let's implement a [WeatherService.java](https://github.com/spring-projects/spring-ai-examples/blob/main/model-context-protocol/weather/starter-stdio-server/src/main/java/org/springframework/ai/mcp/sample/server/WeatherService.java) that uses a REST client to query the data from the National Weather Service API:

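```java
import org.springframework.ai.tool.annotation.Tool;
import org.springframework.ai.tool.annotation.ToolParam;
import org.springframework.stereotype.Service;
import org.springframework.web.client.RestClient;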

@Service

public class WeatherService {

private final RestClient restClient;

public WeatherService() {

this.restClient = RestClient.builder()

.baseUrl("https://api.weather.gov")

.defaultHeader("Accept", "application/geo+json")

.defaultHeader("User-Agent", "WeatherApiClient/1.0 (your@email.com)")

.build();

}

@Tool(description = "Get weather forecast for a specific latitude/longitude")

public String getWeatherForecastByLocation(

double latitude, // Latitude coordinate

double longitude // Longitude coordinate

) {

// Returns detailed forecast including:

// - Temperature and unit

// - Wind speed and direction

// - Detailed forecast description

}

@Tool(description = "Get weather alerts for a US state")

public String getAlerts(

@ToolParam(description = "Two-letter US state code (e.g. CA, NY)") String state

) {

// Returns active alerts including:

// - Event type

// - Affected area

// - Severity

// - Description

// - Safety instructions

}

// ......

}

```

The `@Service` annotation will auto-register the service in your application context.

The Spring AI `@Tool` annotation marks a method as a tool, making it easy to create and maintain MCP tools.

The auto-configuration will automatically register these tools with the MCP server.

### Create your Boot Application

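```java
import org.springframework.ai.tool.ToolCallbackProvider;
import org.springframework.ai.tool.method.MethodToolCallbackProvider;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;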

@SpringBootApplication

public class McpServerApplication {

public static void main(String[] args) {

SpringApplication.run(McpServerApplication.class, args);

}

@Bean

public ToolCallbackProvider weatherTools(WeatherService weatherService) {

return MethodToolCallbackProvider.builder().toolObjects(weatherService).build();

}

}

```

This uses the `MethodToolCallbackProvider` utility to convert the `@Tool`-annotated methods into actionable callbacks used by the MCP server.

### Running the server

Finally, let's build the server:

```bash

./mvnw clean install

```

This will generate an `mcp-weather-stdio-server-0.0.1-SNAPSHOT.jar` file within the `target` folder.

Let's now test your server from an existing MCP host, Claude for Desktop.

## Testing your server with Claude for Desktop

<Note>

Claude for Desktop is not yet available on Linux.

</Note>

First, make sure you have Claude for Desktop installed.

[You can install the latest version here.](https://claude.ai/download) If you already have Claude for Desktop, **make sure it's updated to the latest version.**

We'll need to configure Claude for Desktop for whichever MCP servers you want to use.

To do this, open your Claude for Desktop App configuration in a text editor: `~/Library/Application Support/Claude/claude_desktop_config.json` on macOS, or `%APPDATA%\Claude\claude_desktop_config.json` on Windows.

Make sure to create the file if it doesn't exist.

For example, if you have [VS Code](https://code.visualstudio.com/) installed:

<Tabs>

<Tab title="MacOS/Linux">

```bash

code ~/Library/Application\ Support/Claude/claude_desktop_config.json

```

</Tab>

<Tab title="Windows">

```powershell

code $env:AppData\Claude\claude_desktop_config.json

```

</Tab>

</Tabs>

You'll then add your servers in the `mcpServers` key.

The MCP UI elements will only show up in Claude for Desktop if at least one server is properly configured.

In this case, we'll add our single weather server like so:

<Tabs>

<Tab title="MacOS/Linux">

```json java

{

"mcpServers": {

"spring-ai-mcp-weather": {

"command": "java",

"args": [

"-Dspring.ai.mcp.server.stdio=true",

"-jar",

"/ABSOLUTE/PATH/TO/PARENT/FOLDER/mcp-weather-stdio-server-0.0.1-SNAPSHOT.jar"

]

}

}

}

```

</Tab>

<Tab title="Windows">

```json java

{
  "mcpServers": {
    "spring-ai-mcp-weather": {
      "command": "java",
      "args": [
        "-Dspring.ai.mcp.server.stdio=true",
        "-jar",
        "C:\\ABSOLUTE\\PATH\\TO\\PARENT\\FOLDER\\weather\\mcp-weather-stdio-server-0.0.1-SNAPSHOT.jar"
      ]
    }
  }
}

```

</Tab>

</Tabs>

<Note>

Make sure you pass in the absolute path to your server.

</Note>

This tells Claude for Desktop:

1. There's an MCP server named "spring-ai-mcp-weather"

2. To launch it by running `java -Dspring.ai.mcp.server.stdio=true -jar /ABSOLUTE/PATH/TO/PARENT/FOLDER/mcp-weather-stdio-server-0.0.1-SNAPSHOT.jar`

Save the file, and restart **Claude for Desktop**.

## Testing your server with a Java client

### Create an MCP client manually

Use the `McpClient` to connect to the server:

```java

var stdioParams = ServerParameters.builder("java")

.args("-jar", "/ABSOLUTE/PATH/TO/PARENT/FOLDER/mcp-weather-stdio-server-0.0.1-SNAPSHOT.jar")

.build();

var stdioTransport = new StdioClientTransport(stdioParams);

var mcpClient = McpClient.sync(stdioTransport).build();

mcpClient.initialize();

ListToolsResult toolsList = mcpClient.listTools();

CallToolResult weather = mcpClient.callTool(

new CallToolRequest("getWeatherForecastByLocation",

Map.of("latitude", "47.6062", "longitude", "-122.3321")));

CallToolResult alert = mcpClient.callTool(

new CallToolRequest("getAlerts", Map.of("state", "NY")));

mcpClient.closeGracefully();

```

### Use MCP Client Boot Starter

Create a new boot starter application using the `spring-ai-starter-mcp-client` dependency:

```xml

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-starter-mcp-client</artifactId>

</dependency>

```

Then set the `spring.ai.mcp.client.stdio.servers-configuration` property to point to your `claude_desktop_config.json`.

You can reuse the existing Claude for Desktop configuration:

```properties

spring.ai.mcp.client.stdio.servers-configuration=file:PATH/TO/claude_desktop_config.json

```

When you start your client application, the auto-configuration will automatically create MCP clients from `claude_desktop_config.json`.

For more information, see the [MCP Client Boot Starters](https://docs.spring.io/spring-ai/reference/api/mcp/mcp-server-boot-client-docs.html) reference documentation.

## More Java MCP Server examples

The [starter-webflux-server](https://github.com/spring-projects/spring-ai-examples/tree/main/model-context-protocol/weather/starter-webflux-server) demonstrates how to create an MCP server using SSE transport.

It showcases how to define and register MCP Tools, Resources, and Prompts using Spring Boot's auto-configuration capabilities.

### Prerequisite knowledge

This quickstart assumes you have familiarity with:

* Kotlin

* LLMs like Claude

### System requirements

* Java 17 or higher installed.

### Set up your environment

First, let's install `java` and `gradle` if you haven't already.

You can download `java` from the [official Oracle JDK website](https://www.oracle.com/java/technologies/downloads/).

Verify your `java` installation:

```bash

java --version

```

Now, let's create and set up your project:

<CodeGroup>

```bash MacOS/Linux

# Create a new directory for our project

mkdir weather

cd weather

# Initialize a new kotlin project

gradle init

```

```powershell Windows

# Create a new directory for our project

md weather

cd weather

# Initialize a new kotlin project

gradle init

```

</CodeGroup>

After running `gradle init`, you will be presented with options for creating your project.

Select **Application** as the project type, **Kotlin** as the programming language, and **Java 17** as the Java version.

Alternatively, you can create a Kotlin application using the [IntelliJ IDEA project wizard](https://kotlinlang.org/docs/jvm-get-started.html).

After creating the project, add the following dependencies:

<CodeGroup>

```kotlin build.gradle.kts

val mcpVersion = "0.4.0"

val slf4jVersion = "2.0.9"

val ktorVersion = "3.1.1"

dependencies {

implementation("io.modelcontextprotocol:kotlin-sdk:$mcpVersion")

implementation("org.slf4j:slf4j-nop:$slf4jVersion")

implementation("io.ktor:ktor-client-content-negotiation:$ktorVersion")

implementation("io.ktor:ktor-serialization-kotlinx-json:$ktorVersion")

}

```

```groovy build.gradle

def mcpVersion = '0.4.0'

def slf4jVersion = '2.0.9'

def ktorVersion = '3.1.1'

dependencies {

implementation "io.modelcontextprotocol:kotlin-sdk:$mcpVersion"

implementation "org.slf4j:slf4j-nop:$slf4jVersion"

implementation "io.ktor:ktor-client-content-negotiation:$ktorVersion"

implementation "io.ktor:ktor-serialization-kotlinx-json:$ktorVersion"

}

```

</CodeGroup>

Also, add the following plugins to your build script:

<CodeGroup>

```kotlin build.gradle.kts

plugins {

kotlin("plugin.serialization") version "your_version_of_kotlin"

id("com.github.johnrengelman.shadow") version "8.1.1"

}

```

```groovy build.gradle

plugins {

id 'org.jetbrains.kotlin.plugin.serialization' version 'your_version_of_kotlin'

id 'com.github.johnrengelman.shadow' version '8.1.1'

}

```

</CodeGroup>

Now let’s dive into building your server.

## Building your server

### Setting up the instance

Add a server initialization function:

```kotlin

// Main function to run the MCP server

fun `run mcp server`() {

// Create the MCP Server instance with a basic implementation

val server = Server(

Implementation(

name = "weather", // Server name reported to clients during initialization

version = "1.0.0" // Version of the implementation

),

ServerOptions(

capabilities = ServerCapabilities(tools = ServerCapabilities.Tools(listChanged = true))

)

)

// Create a transport using standard IO for server communication

val transport = StdioServerTransport(

System.`in`.asInput(),

System.out.asSink().buffered()

)

runBlocking {

server.connect(transport)

val done = Job()

server.onClose {

done.complete()

}

done.join()

}

}

```

### Weather API helper functions

Next, let's add functions and data classes for querying and converting responses from the National Weather Service API:

```kotlin

// Extension function to fetch forecast information for given latitude and longitude

suspend fun HttpClient.getForecast(latitude: Double, longitude: Double): List<String> {

val points = this.get("/points/$latitude,$longitude").body<Points>()

val forecast = this.get(points.properties.forecast).body<Forecast>()

return forecast.properties.periods.map { period ->

"""

${period.name}:

Temperature: ${period.temperature} ${period.temperatureUnit}

Wind: ${period.windSpeed} ${period.windDirection}

Forecast: ${period.detailedForecast}

""".trimIndent()

}

}

// Extension function to fetch weather alerts for a given state

suspend fun HttpClient.getAlerts(state: String): List<String> {

val alerts = this.get("/alerts/active/area/$state").body<Alert>()

return alerts.features.map { feature ->

"""

Event: ${feature.properties.event}

Area: ${feature.properties.areaDesc}

Severity: ${feature.properties.severity}

Description: ${feature.properties.description}

Instruction: ${feature.properties.instruction}

""".trimIndent()

}

}

@Serializable

data class Points(

val properties: Properties

) {

@Serializable

data class Properties(val forecast: String)

}

@Serializable

data class Forecast(

val properties: Properties

) {

@Serializable

data class Properties(val periods: List<Period>)

@Serializable

data class Period(

val number: Int, val name: String, val startTime: String, val endTime: String,

val isDaytime: Boolean, val temperature: Int, val temperatureUnit: String,

val temperatureTrend: String? = null, val probabilityOfPrecipitation: JsonObject,

val windSpeed: String, val windDirection: String,

val shortForecast: String, val detailedForecast: String,

)

}

@Serializable

data class Alert(

val features: List<Feature>

) {

@Serializable

data class Feature(

val properties: Properties

)

@Serializable

data class Properties(

val event: String, val areaDesc: String, val severity: String,

val description: String, val instruction: String?,

)

}

```

### Implementing tool execution

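The tool execution handler is responsible for actually executing the logic of each tool. Add the following inside the `run mcp server` function, after `server` is created and before the transport is connected, since it registers tools on that local `server` instance: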

```kotlin

// Create an HTTP client with a default request configuration and JSON content negotiation

val httpClient = HttpClient {

defaultRequest {

url("https://api.weather.gov")

headers {

append("Accept", "application/geo+json")

append("User-Agent", "WeatherApiClient/1.0")

}

contentType(ContentType.Application.Json)

}

// Install content negotiation plugin for JSON serialization/deserialization

install(ContentNegotiation) { json(Json { ignoreUnknownKeys = true }) }

}

// Register a tool to fetch weather alerts by state

server.addTool(

name = "get_alerts",

description = """

Get weather alerts for a US state. Input is Two-letter US state code (e.g. CA, NY)

""".trimIndent(),

inputSchema = Tool.Input(

properties = buildJsonObject {

putJsonObject("state") {

put("type", "string")

put("description", "Two-letter US state code (e.g. CA, NY)")

}

},

required = listOf("state")

)

) { request ->

val state = request.arguments["state"]?.jsonPrimitive?.content

if (state == null) {

return@addTool CallToolResult(

content = listOf(TextContent("The 'state' parameter is required."))

)

}

val alerts = httpClient.getAlerts(state)

CallToolResult(content = alerts.map { TextContent(it) })

}

// Register a tool to fetch weather forecast by latitude and longitude

server.addTool(

name = "get_forecast",

description = """

Get weather forecast for a specific latitude/longitude

""".trimIndent(),

inputSchema = Tool.Input(

properties = buildJsonObject {

putJsonObject("latitude") { put("type", "number") }

putJsonObject("longitude") { put("type", "number") }

},

required = listOf("latitude", "longitude")

)

) { request ->

val latitude = request.arguments["latitude"]?.jsonPrimitive?.doubleOrNull

val longitude = request.arguments["longitude"]?.jsonPrimitive?.doubleOrNull

if (latitude == null || longitude == null) {

return@addTool CallToolResult(

content = listOf(TextContent("The 'latitude' and 'longitude' parameters are required."))

)

}

val forecast = httpClient.getForecast(latitude, longitude)

CallToolResult(content = forecast.map { TextContent(it) })

}

```
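The `inputSchema` tells the client which arguments are required, but the handlers above still check for missing values because the server receives whatever arguments the client actually sends. A Python sketch of that check against the same `get_forecast` schema (the `missing_required` helper is hypothetical):

```python
def missing_required(input_schema: dict, arguments: dict) -> list[str]:
    """Return required property names that are absent or null in a tools/call arguments object."""
    return [name for name in input_schema.get("required", []) if arguments.get(name) is None]


# Mirrors the Kotlin get_forecast input schema above
forecast_schema = {
    "type": "object",
    "properties": {"latitude": {"type": "number"}, "longitude": {"type": "number"}},
    "required": ["latitude", "longitude"],
}

print(missing_required(forecast_schema, {"latitude": 47.6}))  # prints: ['longitude']
```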

### Running the server

Finally, implement the main function to run the server:

```kotlin

fun main() = `run mcp server`()

```

Make sure to run `./gradlew build` to build your server. This is a very important step in getting your server to connect.

Let's now test your server from an existing MCP host, Claude for Desktop.

## Testing your server with Claude for Desktop

<Note>

Claude for Desktop is not yet available on Linux. Linux users can proceed to the [Building a client](/quickstart/client) tutorial to build an MCP client that connects to the server we just built.

</Note>

First, make sure you have Claude for Desktop installed. [You can install the latest version here.](https://claude.ai/download) If you already have Claude for Desktop, **make sure it's updated to the latest version.**

Next, configure Claude for Desktop with the MCP servers you want to use.

To do this, open the Claude for Desktop configuration file in a text editor: `~/Library/Application Support/Claude/claude_desktop_config.json` on macOS, or `%APPDATA%\Claude\claude_desktop_config.json` on Windows.

Make sure to create the file if it doesn't exist.

For example, if you have [VS Code](https://code.visualstudio.com/) installed:

<CodeGroup>

```bash MacOS/Linux

code ~/Library/Application\ Support/Claude/claude_desktop_config.json

```

```powershell Windows

code $env:AppData\Claude\claude_desktop_config.json

```

</CodeGroup>

You'll then add your servers in the `mcpServers` key.

The MCP UI elements will only show up in Claude for Desktop if at least one server is properly configured.

In this case, we'll add our single weather server like so:

<CodeGroup>

```json MacOS/Linux

{
  "mcpServers": {
    "weather": {
      "command": "java",
      "args": [
        "-jar",
        "/ABSOLUTE/PATH/TO/PARENT/FOLDER/weather/build/libs/weather-0.1.0-all.jar"
      ]
    }
  }
}

```

```json Windows

{
  "mcpServers": {
    "weather": {
      "command": "java",
      "args": [
        "-jar",
        "C:\\PATH\\TO\\PARENT\\FOLDER\\weather\\build\\libs\\weather-0.1.0-all.jar"
      ]
    }
  }
}

```

</CodeGroup>

This tells Claude for Desktop:

1. There's an MCP server named "weather"

2. Launch it by running `java -jar /ABSOLUTE/PATH/TO/PARENT/FOLDER/weather/build/libs/weather-0.1.0-all.jar`
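If you would rather script this edit than make it by hand, the Python sketch below merges one server entry into `claude_desktop_config.json` while keeping any servers and settings already in the file. The helper names `default_config_path` and `add_mcp_server` are hypothetical, and the paths are the macOS and Windows locations given above.

```python
import json
import os
import sys
from pathlib import Path
from typing import Any


def default_config_path() -> Path:
    """Location of claude_desktop_config.json on macOS and Windows."""
    if sys.platform == "darwin":
        return Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"
    if sys.platform == "win32":
        return Path(os.environ["APPDATA"]) / "Claude" / "claude_desktop_config.json"
    raise RuntimeError("Claude for Desktop is not available on this platform")


def add_mcp_server(config_path: Path, name: str, command: str, args: list[str]) -> dict[str, Any]:
    """Insert or replace mcpServers[name], preserving everything else in the file."""
    config: dict[str, Any] = {}
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8")
        if text.strip():
            config = json.loads(text)
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": list(args)}
    # Create the Claude directory and the file if they don't exist yet.
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, `add_mcp_server(default_config_path(), "weather", "java", ["-jar", "/ABSOLUTE/PATH/TO/PARENT/FOLDER/weather/build/libs/weather-0.1.0-all.jar"])` writes the same `weather` entry as the JSON above.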

Save the file and restart **Claude for Desktop**.
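If the weather server doesn't show up after the restart, it can help to check the JAR outside Claude for Desktop. A stdio host launches `command` with `args` and exchanges newline-delimited JSON-RPC messages over the process's stdin and stdout, so you can send an `initialize` request yourself. The Python sketch below is a hypothetical helper, not an official tool: it sends only `initialize` (a real client would follow up with `notifications/initialized`), assumes protocol version `2024-11-05`, and returns the first line the server writes to stdout.

```python
import json
import subprocess
from typing import Any


def smoke_test(argv: list[str], timeout: float = 30.0) -> dict[str, Any]:
    """Start an MCP stdio server, send one initialize request, return its first reply."""
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "smoke-test", "version": "0.1.0"},
        },
    }
    proc = subprocess.Popen(argv, stdin=subprocess.PIPE, stdout=subprocess.PIPE,
                            stderr=subprocess.DEVNULL, text=True)
    try:
        # Writes the request, closes stdin, and waits for the process to exit.
        out, _ = proc.communicate(json.dumps(request) + "\n", timeout=timeout)
    except subprocess.TimeoutExpired:
        # Still running after the timeout: stop it and keep what it already printed.
        proc.kill()
        out, _ = proc.communicate()
    first_line = next((line for line in out.splitlines() if line.strip()), "")
    if not first_line:
        raise RuntimeError("server produced no output on stdout")
    return json.loads(first_line)


# argv is the "command" followed by the "args" from the configuration above:
# reply = smoke_test(["java", "-jar", "/ABSOLUTE/PATH/TO/PARENT/FOLDER/weather/build/libs/weather-0.1.0-all.jar"])
# print(reply.get("result", {}).get("serverInfo"))
```

A `json.JSONDecodeError` from this helper usually means the server printed something other than a JSON-RPC message to stdout.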

### Prerequisite knowledge

This quickstart assumes you have familiarity with:

* C#

* LLMs like Claude

* .NET 8 or higher

### System requirements

* [.NET 8 SDK](https://dotnet.microsoft.com/download/dotnet/8.0) or higher installed.

### Set up your environment

First, install the .NET SDK if you haven't already; you can download it from the [official Microsoft .NET website](https://dotnet.microsoft.com/download/). Then verify your `dotnet` installation:

```bash

dotnet --version

```
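`dotnet --version` prints the version of the SDK that will be used, for example `8.0.100`; this quickstart needs a major version of 8 or higher. If you'd like to automate that check in a setup script, here is a small Python sketch (the function names are hypothetical):

```python
import re
import subprocess


def dotnet_major_version(version_output: str) -> int:
    """Return the major version from `dotnet --version` output, e.g. 8 for '8.0.100'."""
    match = re.match(r"\s*(\d+)\.", version_output)
    if match is None:
        raise ValueError(f"unrecognized `dotnet --version` output: {version_output!r}")
    return int(match.group(1))


def check_dotnet(min_major: int = 8) -> int:
    """Run `dotnet --version` and raise if the SDK major version is below min_major."""
    result = subprocess.run(["dotnet", "--version"], capture_output=True, text=True, check=True)
    major = dotnet_major_version(result.stdout)
    if major < min_major:
        raise RuntimeError(f".NET SDK {result.stdout.strip()} found; {min_major}.0 or higher is required")
    return major
```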

Now, let's create and set up your project:

<CodeGroup>

```bash MacOS/Linux

# Create a new directory for our project
mkdir weather
cd weather

# Initialize a new C# project
dotnet new console

```

```powershell Windows

# Create a new directory for our project
mkdir weather
cd weather

# Initialize a new C# project
dotnet new console

```

</CodeGroup>

Running `dotnet new console` creates a new C# console project in the current directory.

You can open the project in your favorite IDE, such as [Visual Studio](https://visualstudio.microsoft.com/) or [Rider](https://www.jetbrains.com/rider/).

Alternatively, you can create a C# application using the [Visual Studio project wizard](https://learn.microsoft.com/en-us/visualstudio/get-started/csharp/tutorial-console?view=vs-2022).

After creating the project, add the NuGet packages for the Model Context Protocol SDK and for .NET hosting:

```bash

# Add the Model Context Protocol SDK NuGet package
dotnet add package ModelContextProtocol --prerelease

# Add the .NET Hosting NuGet package
dotnet add package Microsoft.Extensions.Hosting

```

Now let’s dive into building your server.

## Building your server

Open the `Program.cs` file in your project and replace its contents with the following code:

```csharp

using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using ModelContextProtocol;
using System.Net.Http.Headers;

var builder = Host.CreateEmptyApplicationBuilder(settings: null);
builder.Services.AddMcpServer()
    .WithStdioServerTransport()
    .WithToolsFromAssembly();

builder.Services.AddSingleton(_ =>
{
    var client = new HttpClient() { BaseAddress = new Uri("https://api.weather.gov") };
    client.DefaultRequestHeaders.UserAgent.Add(new ProductInfoHeaderValue("weather-tool", "1.0"));
    return client;
});

var app = builder.Build();

await app.RunAsync();

```

<Note>

When creating the `HostApplicationBuilder`, use `Host.CreateEmptyApplicationBuilder` instead of `CreateDefaultBuilder`. The empty builder doesn't register default services such as the console logger, so the server writes nothing to standard output except MCP messages. This is only necessary for servers that use the stdio transport.

</Note>

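This code sets up a console application that hosts an MCP server over the stdio transport. `WithToolsFromAssembly()` registers every class in the project marked with `[McpServerToolType]`, and the singleton `HttpClient` is preconfigured with the National Weather Service base address and a `User-Agent` header for the tools to use.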
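The advice to use `CreateEmptyApplicationBuilder` matters because, with the stdio transport, standard output is the protocol channel: each JSON-RPC message is one line of JSON, and the MCP specification reserves stdout for those messages while allowing logs on stderr. A single stray line, such as a logger's startup banner, is something the client cannot parse. This Python sketch, using hypothetical helper names and only the standard library, illustrates the framing:

```python
import json
import sys
from typing import Any, Iterator, TextIO


def write_message(message: dict[str, Any], out: TextIO = sys.stdout) -> None:
    """Write one JSON-RPC message as a single line (newline-delimited framing)."""
    # json.dumps escapes newlines inside strings, so a message never spans lines.
    out.write(json.dumps(message, separators=(",", ":")) + "\n")
    out.flush()


def log(text: str, err: TextIO = sys.stderr) -> None:
    """Diagnostics go to stderr so they never mix with protocol messages."""
    print(text, file=err, flush=True)


def read_messages(stream: TextIO) -> Iterator[dict[str, Any]]:
    """Parse each non-empty line as one JSON-RPC message; a stray log line raises."""
    for line in stream:
        if line.strip():
            yield json.loads(line)
```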

### Weather API helper functions

Create an extension class for `HttpClient` that sends a GET request and parses the response body as a `JsonDocument`:

```csharp

using System.Text.Json;

internal static class HttpClientExt
{
    public static async Task<JsonDocument> ReadJsonDocumentAsync(this HttpClient client, string requestUri)
    {
        using var response = await client.GetAsync(requestUri);
        response.EnsureSuccessStatusCode();
        return await JsonDocument.ParseAsync(await response.Content.ReadAsStreamAsync());
    }
}

```
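For comparison, the same fetch-and-parse step can be sketched in Python with only the standard library. This is not part of the C# quickstart; `read_json_document`, `build_request`, and the two constants are hypothetical names chosen to mirror `ReadJsonDocumentAsync` and the `HttpClient` configured in `Program.cs`.

```python
import json
import urllib.request
from typing import Any
from urllib.parse import urljoin

# Assumed values mirroring the C# setup: same base address and User-Agent product.
BASE_URL = "https://api.weather.gov"
USER_AGENT = "weather-tool/1.0"


def build_request(base_url: str, request_uri: str) -> urllib.request.Request:
    """Resolve request_uri against base_url, as HttpClient does with BaseAddress."""
    return urllib.request.Request(urljoin(base_url, request_uri),
                                  headers={"User-Agent": USER_AGENT})


def read_json_document(request_uri: str, base_url: str = BASE_URL, timeout: float = 10.0) -> Any:
    """GET the URL, fail on an error status, and parse the body as JSON."""
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses,
    # playing the role of EnsureSuccessStatusCode().
    with urllib.request.urlopen(build_request(base_url, request_uri), timeout=timeout) as response:
        return json.load(response)
```

For example, `read_json_document("/alerts/active/area/CA")` would fetch the same document that `GetAlerts` requests for California.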

Next, define a class with the tool execution handlers for querying and converting responses from the National Weather Service API:

```csharp

using ModelContextProtocol.Server;
using System.ComponentModel;
using System.Globalization;
using System.Text.Json;

namespace QuickstartWeatherServer.Tools;

[McpServerToolType]
public static class WeatherTools
{
    [McpServerTool, Description("Get weather alerts for a US state.")]
    public static async Task<string> GetAlerts(
        HttpClient client,
        [Description("The US state to get alerts for.")] string state)
    {
        using var jsonDocument = await client.ReadJsonDocumentAsync($"/alerts/active/area/{state}");
        var jsonElement = jsonDocument.RootElement;
        var alerts = jsonElement.GetProperty("features").EnumerateArray();

        if (!alerts.Any())
        {