This is a mono repository for my home infrastructure and Kubernetes cluster. I try to adhere to Infrastructure as Code (IaC) and GitOps practices using tools like Ansible, Terraform, Kubernetes, Flux, Renovate, and GitHub Actions.

My Kubernetes cluster is deployed with Talos. This is a semi-hyper-converged cluster: workloads and block storage share the same available resources on my nodes, while a separate server with ZFS provides NFS/SMB shares, bulk file storage, and backups.

There is a template over at onedr0p/cluster-template if you want to try and follow along with some of the practices I use here.

- actions-runner-controller: Self-hosted GitHub runners.

- cert-manager: Creates SSL certificates for services in my cluster.

- cilium: Internal Kubernetes container networking interface.

- cloudflared: Enables Cloudflare secure access to certain ingresses.

- external-dns: Automatically syncs ingress DNS records to a DNS provider.

- external-secrets: Managed Kubernetes secrets using 1Password Connect.

- ingress-nginx: Kubernetes ingress controller using NGINX as a reverse proxy and load balancer.

- rook: Distributed block storage for persistent storage.

- sops: Managed secrets for Kubernetes and Terraform which are committed to Git.

- spegel: Stateless cluster local OCI registry mirror.

- volsync: Backup and recovery of persistent volume claims.

Flux watches the clusters in my kubernetes folder (see Directories below) and makes the changes to my clusters based on the state of my Git repository.

Flux recursively searches the kubernetes/apps folder until it finds the top-most kustomization.yaml in each directory, then applies all the resources listed in it. That kustomization.yaml generally contains only a namespace resource and one or more Flux Kustomizations (ks.yaml). Each of those Flux Kustomizations in turn applies a HelmRelease or other resources related to the application.
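As a rough illustration of that layout, the two files might look like the sketch below. The directory names (`default`, `atuin`), the `flux-system` GitRepository name, and the interval are assumptions made for the example, not values taken from this repository.

```yaml
# kubernetes/apps/default/kustomization.yaml (hypothetical path)
# Top-level kustomization.yaml: a namespace plus one or more ks.yaml files.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ./namespace.yaml
  - ./atuin/ks.yaml
---
# kubernetes/apps/default/atuin/ks.yaml (hypothetical path)
# Flux Kustomization that points at the directory holding the app's
# HelmRelease and related resources.
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: atuin
  namespace: flux-system
spec:
  interval: 1h                 # assumed reconcile interval
  path: ./kubernetes/apps/default/atuin/app
  prune: true                  # remove resources that are deleted from Git
  sourceRef:
    kind: GitRepository
    name: flux-system          # assumed name of the Git source
  targetNamespace: default
```

Keeping the top-level file this small means adding an application is mostly a matter of adding one more `ks.yaml` entry to `resources`.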

Renovate watches my entire repository for dependency updates; when one is found, a PR is automatically created. Once a PR is merged, Flux applies the changes to my cluster.

This Git repository contains the following directories under kubernetes.

📁 kubernetes

├── 📁 apps # applications

├── 📁 components # re-useable kustomize components

└── 📁 flux # flux system configuration

This is a high-level look at how Flux deploys my applications with dependencies. In most cases a HelmRelease will depend on other HelmReleases, in other cases a Kustomization will depend on other Kustomizations, and in rare situations an app can depend on both a HelmRelease and a Kustomization. The example below shows that atuin won't be deployed or upgraded until the rook-ceph-cluster HelmRelease is installed and in a healthy state.

graph TD

A>Kustomization: rook-ceph] -->|Creates| B[HelmRelease: rook-ceph]

A>Kustomization: rook-ceph] -->|Creates| C[HelmRelease: rook-ceph-cluster]

C>HelmRelease: rook-ceph-cluster] -->|Depends on| B>HelmRelease: rook-ceph]

D>Kustomization: atuin] -->|Creates| E(HelmRelease: atuin)

E>HelmRelease: atuin] -->|Depends on| C>HelmRelease: rook-ceph-cluster]
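In manifest form, the atuin dependency in the graph above could be expressed roughly as follows. This is a minimal sketch: the chart name, the HelmRepository name, the `rook-ceph` namespace, and the interval are placeholders assumed for illustration.

```yaml
apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: atuin
spec:
  interval: 1h                     # assumed reconcile interval
  chart:
    spec:
      chart: atuin                 # hypothetical chart name
      sourceRef:
        kind: HelmRepository
        name: example-charts       # hypothetical chart repository
        namespace: flux-system
  # Flux holds back the install or upgrade of this release until the
  # listed HelmRelease reports Ready.
  dependsOn:
    - name: rook-ceph-cluster
      namespace: rook-ceph         # assumed namespace of the dependency
```

A Flux Kustomization accepts a `dependsOn` list in its `spec` in the same way, which covers the Kustomization-to-Kustomization case.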

While most of my infrastructure and workloads are self-hosted, I do rely upon the cloud for certain key parts of my setup. This saves me from having to worry about three things: (1) dealing with chicken/egg scenarios, (2) services I critically need whether my cluster is online or not, and (3) the "hit by a bus" factor: what happens to critical apps (e.g. email, password manager, photos) that my family relies on when I am no longer around.

Alternative solutions to the first two of these problems would be to host a Kubernetes cluster in the cloud and deploy applications like HCVault, Vaultwarden, ntfy, and Gatus; however, maintaining another cluster and monitoring another group of workloads would be more work and would probably cost as much as or more than the services described below.

| Service | Use | Cost |
|---|---|---|
| 1Password | Secrets with External Secrets | ~$65/yr |
| Cloudflare | Domain and S3 | ~$30/yr |
| GCP | Voice interactions with Home Assistant over Google Assistant | Free |
| GitHub | Hosting this repository and continuous integration/deployments | Free |
| Migadu | Email hosting | ~$20/yr |
| Pushover | Kubernetes Alerts and application notifications | $5 OTP |
| UptimeRobot | Monitoring internet connectivity and external facing applications | ~$58/yr |
| | | Total: ~$20/mo |

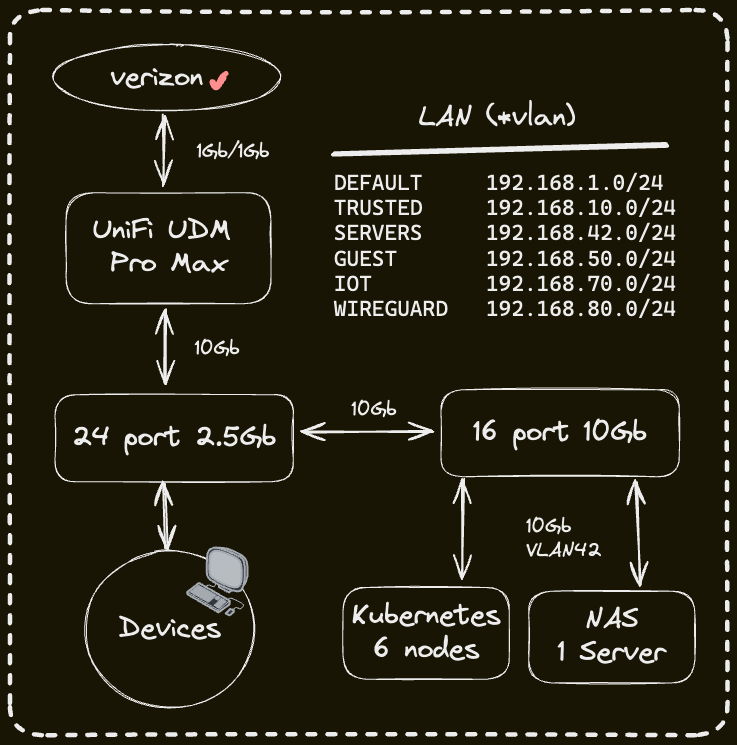

In my cluster there are two instances of ExternalDNS running. One syncs private DNS records to my UDM Pro Max using the ExternalDNS webhook provider for UniFi, while the other syncs public DNS records to Cloudflare. This setup is managed by creating ingresses with two specific classes: internal for private DNS and external for public DNS. Each external-dns instance then syncs the DNS records to its respective platform.
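For illustration, an ingress meant for private DNS might look like the sketch below. The application name, hostname, and port are placeholders; only the `internal` and `external` class names come from the description above.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-app                # hypothetical application
spec:
  # "internal" is picked up by the ExternalDNS instance for private DNS;
  # switching this to "external" would hand the record to the instance
  # that syncs to Cloudflare instead.
  ingressClassName: internal
  rules:
    - host: app.example.com        # placeholder hostname
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-app
                port:
                  number: 80
```

Choosing where a record is published then comes down to a single field on the ingress rather than separate DNS configuration per application.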

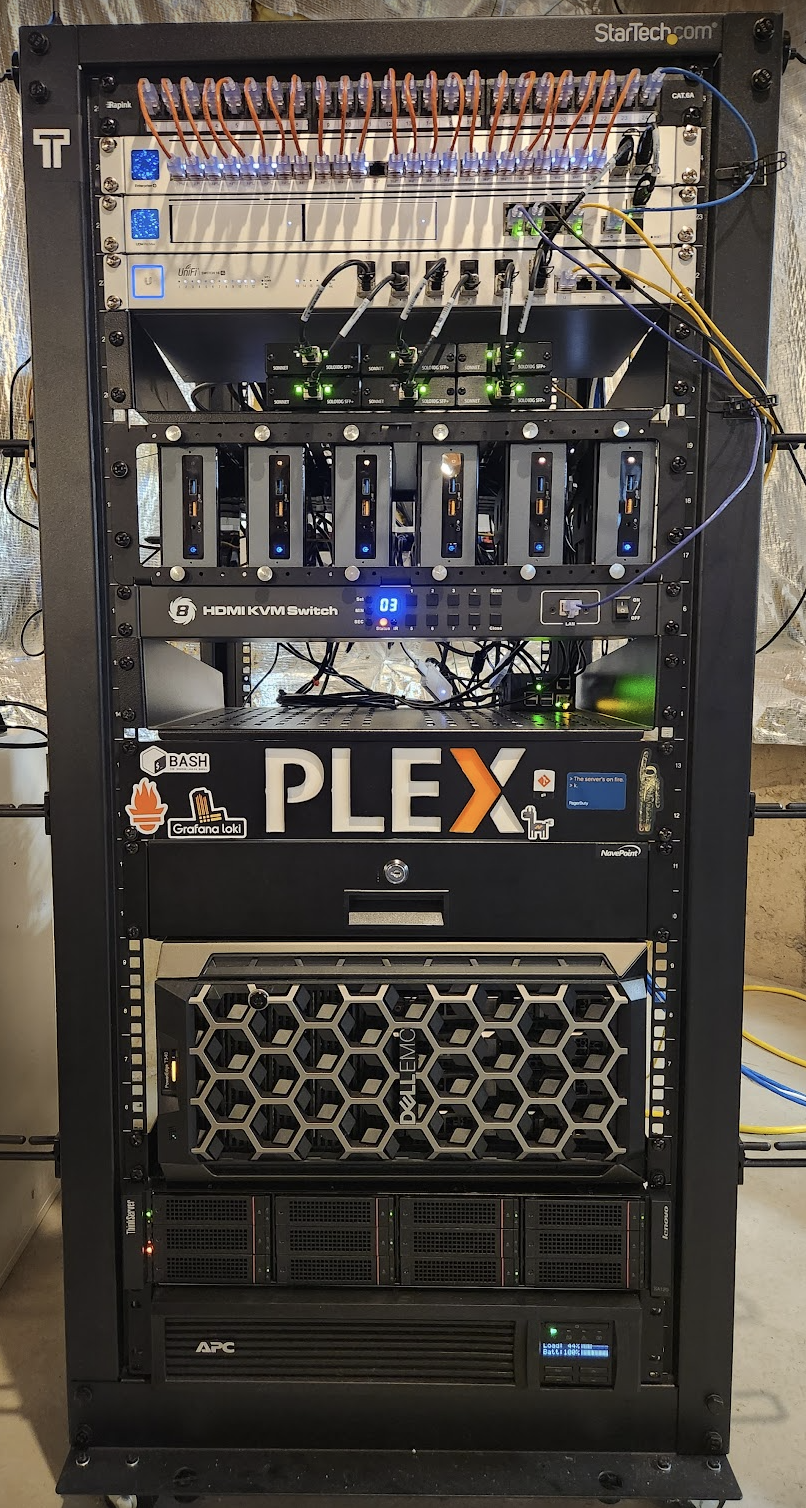

| Device | Count | OS Disk Size | Data Disk Size | RAM | OS | Function |
|---|---|---|---|---|---|---|
| ASUS NUC 14 Pro CU 5 125H | 3 | 1TB SSD | 1TB (local) / 800GB (rook-ceph) | 96GB | Talos | Kubernetes |
| PowerEdge T340 | 1 | 1TB SSD | 8x22TB ZFS (mirrored vdevs) | 64GB | TrueNAS SCALE | NFS + Backup Server |
| PiKVM (RasPi 4) | 1 | 64GB (SD) | - | 4GB | PiKVM | KVM |
| TESmart 8 Port KVM Switch | 1 | - | - | - | - | Network KVM (for PiKVM) |
| UniFi UDMP Max | 1 | - | 2x4TB HDD | - | - | Router & NVR |
| UniFi US-16-XG | 1 | - | - | - | - | 10Gb Core Switch |
| UniFi USW-Enterprise-24-PoE | 1 | - | - | - | - | 2.5Gb PoE Switch |
| UniFi USP PDU Pro | 1 | - | - | - | - | PDU |
| APC SMT1500RM2U | 1 | - | - | - | - | UPS |

Thanks to all the people who donate their time to the Home Operations Discord community. Be sure to check out kubesearch.dev for ideas on what you could deploy and how to deploy it.