5G-Monarch is a network slice monitoring architecture for cloud-native 5G network deployments. This repository contains the source code and configuration files for setting up 5G-Monarch alongside a 5G network deployment.

The figure above shows the conceptual architecture of Monarch. Monarch is designed for cloud-native 5G deployments and focuses on network slice monitoring and per-slice KPI computation.

- 5G-Monarch
  - Requirements
  - Deployment
    - Step 1: Create a namespace for deploying Monarch
    - Step 2: Deploy the Data Store
    - Step 3: Deploy the NSSDC
    - Step 4: Deploy the Data Distribution Component
    - Step 5: Deploy the Data Visualization Component
    - Step 6: Deploy Monarch External Components
    - Step 7: Deploy the Monitoring Manager
    - Step 8: Deploy the Request Translator
    - Step 9: Configure Datasources and Dashboards in Grafana
    - Step 10: Submit a Slice Monitoring Request
    - Step 11: Generate Traffic and View KPIs in Grafana
  - Visualizing network slices KPIs using Monarch
  - Citation
  - Contributions

- Supported OS: Ubuntu 22.04 LTS (recommended) or Ubuntu 20.04 LTS

- Minimum Specifications: 8 cores, 8 GB RAM

The open5gs-k8s repository contains the source code and configuration files for deploying a 5G network using Open5GS on Kubernetes. Please follow the detailed instructions in the open5gs-k8s repository to set up your 5G network.

Note

To enable metrics collection for monitoring purposes, select the Deployment with Monarch option while deploying open5gs-k8s.

After deploying the 5G network, ensure that two network slices have been successfully configured by performing a ping test to verify connectivity. This step confirms that the network is functioning correctly and is ready for Monarch deployment.

Once the 5G network is deployed, follow the steps below to deploy Monarch.

We will create a namespace for deploying all Monarch components.

kubectl create namespace monarch

You can verify that the namespace was created as follows:

kubectl get namespaces

The data_store component in Monarch is an abstraction of long-term persistent storage and is responsible for storing monitoring data as well as configuration data (e.g., templates for KPI computation modules).

Follow the steps below to deploy it.

Most Monarch components come with install.sh and uninstall.sh scripts for easy setup and teardown. To deploy the data_store component, navigate to its directory and run the install.sh script:

cd data_store

./install.sh

Verify the deployment with:

kubectl get pods -n monarch

You should see output similar to the following:

NAME                               READY   STATUS    RESTARTS   AGE
datastore-minio-695cb778d5-tjw44   1/1     Running   0          54s
datastore-mongodb-0                1/1     Running   0          43s

Monarch's data store includes a MinIO object storage service, which requires an access key and secret key for secure access. To configure these credentials:

- Open the MinIO GUI in your browser at http://localhost:30713.

- Log in using the default credentials:

  - Username: admin
  - Password: monarch-operator

After logging in, create the access key and secret key as follows:

- In the left sidebar, navigate to Access Keys.

- Click Create to generate a new access key and secret key.

You should see the credentials generated and displayed as shown below:

Note

Be sure to note down these credentials, as you will need them in the next configuration steps.

The third step is the deployment of the Network Slice Segment Data Collector (NSSDC) component.

This component is instantiated per network slice segment (NSS) and interacts with the NSS management function (NSSMF) (e.g., service management and orchestration (SMO) in the RAN and network function virtualization orchestration (NFVO) in the core) to instantiate monitoring data exporters (MDEs) specific to that network slice segment.

Our NSSDC uses Prometheus, an open-source systems monitoring toolkit.
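Because an NSSDC is instantiated per network slice segment, it can help to see how each slice's network functions split across segments. The Python sketch below reuses the slice information that the Service Orchestrator logs in Step 6 as sample data and groups the network functions by NSS. It only illustrates the per-segment view; `group_by_segment` is a hypothetical helper, not part of Monarch.

```python
from collections import defaultdict

# Sample data copied from the Service Orchestrator log in Step 6:
# S-NSSAI -> network functions and the slice segment (NSS) that hosts each one.
SLICE_INFO = {
    "1-000001": [{"nf": "upf1", "nss": "edge"}, {"nf": "smf1", "nss": "core"}],
    "2-000002": [{"nf": "upf2", "nss": "edge"}, {"nf": "smf2", "nss": "core"}],
}


def group_by_segment(slice_info):
    """Hypothetical helper: map each NSS to the (S-NSSAI, NF) pairs it hosts."""
    segments = defaultdict(list)
    for snssai, nfs in slice_info.items():
        for entry in nfs:
            segments[entry["nss"]].append((snssai, entry["nf"]))
    return dict(segments)
```

With the sample data, `group_by_segment(SLICE_INFO)` returns `{'edge': [('1-000001', 'upf1'), ('2-000002', 'upf2')], 'core': [('1-000001', 'smf1'), ('2-000002', 'smf2')]}`, i.e. one group per segment, each spanning both slices.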

Begin by creating a .env file in the root of the repository to store important environment variables, such as the MinIO access key and secret key:

~/5g-monarch$ touch .env

Open the .env file in a text editor and add the following environment variables. Make sure to replace <node_ip> with the actual IP address of your Kubernetes host.

MONARCH_MINIO_ENDPOINT="<node_ip>:30712" # Replace with the node IP of the Kubernetes host
MONARCH_MINIO_ACCESS_KEY="" # Access key from the MinIO GUI
MONARCH_MINIO_SECRET_KEY="" # Secret key from the MinIO GUI
MONARCH_MONITORING_INTERVAL="1s"

From the repository root, navigate to the nssdc directory and execute the install.sh script to deploy the NSSDC:

cd nssdc

./install.sh

Monitor the status of the prometheus-nssdc-prometheus-0 pod to ensure it is running correctly. Once the pod shows READY 3/3, you can proceed to the next step:

kubectl get pods -n monarch

You should see output similar to the following:

NAME                               READY   STATUS    RESTARTS   AGE
datastore-minio-695cb778d5-tjw44   1/1     Running   0          21m
datastore-mongodb-0                1/1     Running   0          20m
nssdc-operator-5555d675fd-g29lv    1/1     Running   0          92s
prometheus-nssdc-prometheus-0      3/3     Running   0          91s

Note

If the prometheus-nssdc-prometheus-0 pod is crashing, it may indicate an issue with the IP address specified in the .env file. Ensure that you are using the correct IP address for the primary network interface (e.g., enp0s3) if you are running on a VM.
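Before reinstalling, it can be worth ruling out a malformed .env file. The following is a minimal Python sketch, not part of Monarch, that parses the simple KEY="value" lines used in this guide (a simplified parser, not a full dotenv implementation) and flags empty values or endpoints that are not in host:port form, such as ones still containing the <node_ip> placeholder. All helper names are hypothetical.

```python
import re
from pathlib import Path

# Variables this guide asks you to set in .env for the NSSDC (Step 3).
REQUIRED_VARS = (
    "MONARCH_MINIO_ENDPOINT",
    "MONARCH_MINIO_ACCESS_KEY",
    "MONARCH_MINIO_SECRET_KEY",
    "MONARCH_MONITORING_INTERVAL",
)
# Variables from this guide whose values should look like host:port.
HOST_PORT_VARS = ("MONARCH_MINIO_ENDPOINT", "MONARCH_THANOS_STORE_GRPC", "MONARCH_THANOS_STORE_HTTP")
HOST_PORT_RE = re.compile(r"^[A-Za-z0-9.-]+:\d{1,5}$")


def parse_env(text):
    """Parse KEY="value" lines; skip blanks and full-line comments, drop trailing comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, rest = line.partition("=")
        rest = rest.strip()
        if rest.startswith('"'):
            # Quoted value: keep everything up to the closing quote.
            value = rest[1:].split('"', 1)[0]
        else:
            # Unquoted value: cut at an inline comment.
            value = rest.split("#", 1)[0].strip()
        values[key.strip()] = value
    return values


def check_env(values, required=REQUIRED_VARS):
    """Return a list of problems; an empty list means all checks passed."""
    problems = [f"{key} is missing or empty" for key in required if not values.get(key)]
    for key in HOST_PORT_VARS:
        if key in values and not HOST_PORT_RE.match(values[key]):
            problems.append(f"{key}={values[key]!r} is not a host:port value (placeholder left in?)")
    return problems


def check_env_file(path=".env"):
    """Convenience wrapper: read and check a .env file."""
    return check_env(parse_env(Path(path).read_text()))
```

From the repository root, `print(check_env_file())` prints an empty list when the checks pass. You can extend `REQUIRED_VARS` as you add the variables from later steps.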

In this step, we will deploy the data distribution component. The data distribution component is responsible for collecting processed monitoring data from different NSSDCs.

Our data distribution component uses Thanos, which extends Prometheus with high availability and long-term storage.

Follow the instructions below to deploy this component.

First, populate the following environment variables in your .env file, replacing <node_ip> with the actual IP address of your Kubernetes host:

MONARCH_THANOS_STORE_GRPC="<node_ip>:30905"
MONARCH_THANOS_STORE_HTTP="<node_ip>:30906"

Tip

These ports are specified in the nssdc/values.yaml file.

Next, from the repository root, navigate to the data_distribution directory and run the install.sh script to deploy the component:

cd data_distribution

./install.sh

Verify the deployment with:

kubectl get pods -n monarch

You should see four datadist pods deployed:

NAME                                              READY   STATUS    RESTARTS   AGE
datadist-thanos-query-689589fcf4-g5lf9            1/1     Running   0          89s
datadist-thanos-query-frontend-65cb6b64df-58hd2   1/1     Running   0          89s
datadist-thanos-receive-0                         1/1     Running   0          89s
datadist-thanos-storegateway-0                    1/1     Running   0          89s

Note

It may take some time for these pods to reach the READY state.
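Rather than re-reading the kubectl table by eye, the readiness check can be scripted. The sketch below is a hypothetical helper, assuming kubectl is on your PATH and configured for the cluster. It parses the default `kubectl get pods` table (columns NAME, READY, STATUS, ...) and reports pods that are not yet Running with all containers ready.

```python
import subprocess


def kubectl_pods(namespace="monarch"):
    """Return the raw table printed by `kubectl get pods -n <namespace>`."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_pods(output):
    """Parse the table into {pod_name: (ready_containers, total_containers, status)}."""
    pods = {}
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines and the "NAME READY STATUS RESTARTS AGE" header.
        if len(fields) < 3 or fields[0] == "NAME":
            continue
        ready, _, total = fields[1].partition("/")
        pods[fields[0]] = (int(ready), int(total), fields[2])
    return pods


def not_ready(pods, prefix=""):
    """Sorted names of matching pods that are not Running with all containers ready."""
    return sorted(
        name
        for name, (ready, total, status) in pods.items()
        if name.startswith(prefix) and (status != "Running" or ready != total)
    )
```

For example, `not_ready(parse_pods(kubectl_pods()), prefix="datadist-")` returns an empty list once all four datadist pods are ready. The parser also accepts `grep`-filtered output that has no header line.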

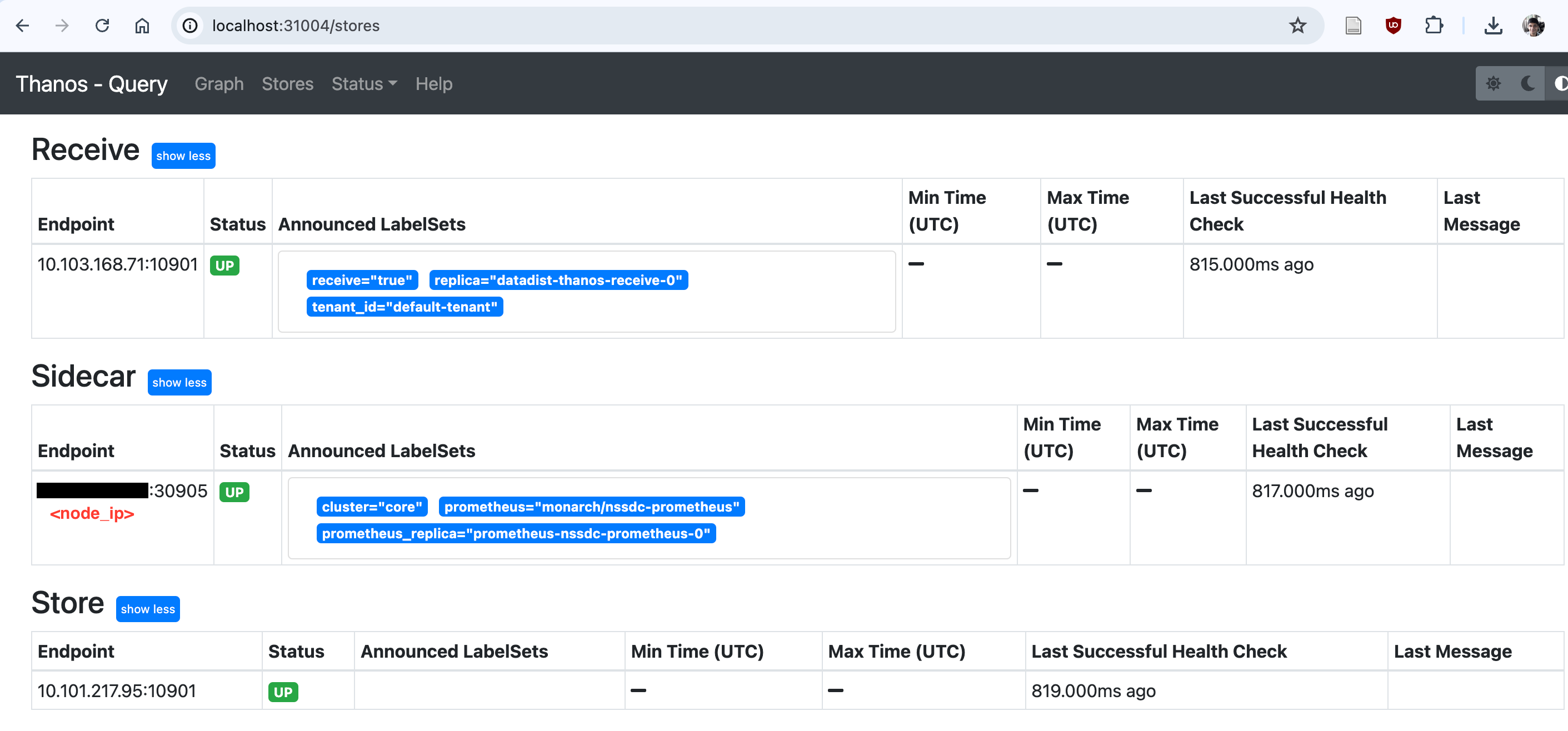

Once the pods are running, you can access the Thanos GUI at http://localhost:31004. From there, verify that the nssdc sidecar has been successfully discovered, as shown in the figure.
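Thanos Query serves the Prometheus HTTP API, so besides the GUI you can query the data distribution component directly. This Python sketch assumes that the endpoint on port 31004 (the one used for the GUI here and for the Grafana datasource in Step 9) accepts `/api/v1/query` requests. It queries `up`, the standard Prometheus metric that reports whether each scrape target is reachable. The helper names are illustrative, not part of Monarch.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the Thanos endpoint from this step, reachable on localhost.
THANOS_URL = "http://localhost:31004"


def instant_query(promql, base_url=THANOS_URL, timeout=10):
    """Run a PromQL instant query against the Prometheus-compatible HTTP API."""
    url = f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": promql})
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def vector_values(payload):
    """Flatten an instant-query (vector) response into [(labels, float_value), ...]."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    # Each sample's "value" is [unix_timestamp, "value as string"].
    return [
        (sample["metric"], float(sample["value"][1]))
        for sample in payload["data"]["result"]
    ]
```

For example, `vector_values(instant_query("up"))` returns one `(labels, value)` pair per target visible through Thanos, with `1.0` meaning the target was reachable at the last scrape.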

In this step, we will deploy the data_visualization component, which leverages Grafana.

To deploy the data visualization component, navigate from the repository root to the data_visualization directory and run the install.sh script:

cd data_visualization

./install.sh

Verify the deployment:

kubectl get pods -n monarch

After some time, you should see the dataviz-grafana pod in the READY (2/2) state:

dataviz-grafana-747d778c9c-xkhhv   2/2     Running   0          45s

Once the data_visualization component has been successfully deployed, you can access the Grafana GUI at http://localhost:32005. Log in with the default credentials:

- Username: admin

- Password: prom-operator

To deploy Monarch's external components, follow the steps below. These components are required for the request_translator and monitoring_manager modules to function correctly.

Several components depend on Python libraries like flask and requests. Install these dependencies by running:

pip3 install -r requirements.txt

The Service Orchestrator is a mock component that simulates the basic functionality of an actual service orchestrator (e.g., ONAP). To deploy the Service Orchestrator, run:

cd service_orchestrator

./install.sh

You can verify that it is running with:

kubectl get pods -n monarch | grep service-orchestrator

Expected output:

service-orchestrator-7b9ffd8c5b-5twq7   1/1     Running   0          2m50s

Once it is running, you can check the logs (replace the pod name with the one shown in your output):

kubectl logs service-orchestrator-7b9ffd8c5b-5twq7 -n monarch

You should see output similar to the following, indicating a successful startup:

2024-11-01 12:03:14,409 - service_orchestrator - INFO - Service Orchestrator started
2024-11-01 12:03:14,410 - service_orchestrator - INFO - Slice info loaded: {'1-000001': [{'nf': 'upf1', 'nss': 'edge'}, {'nf': 'smf1', 'nss': 'core'}], '2-000002': [{'nf': 'upf2', 'nss': 'edge'}, {'nf': 'smf2', 'nss': 'core'}]}

Verify the Service Orchestrator Health

Open a new terminal and verify that the Service Orchestrator is running correctly by executing the following test script:

./service_orchestrator/test-orchestrator.sh

A successful response will resemble:

{
  "message": "Service Orchestrator is healthy",
  "status": "success"
}
{
  "pods": [
    {
      "name": "open5gs-smf1-6d8bcc6789-tkmbm",
      "nf": "smf",
      "nss": "edge",
      "pod_ip": "10.244.0.105"
    },

Next, deploy the NFV Orchestrator, another mock component that simulates NFV orchestration functionality. It must keep running in the background to ensure continuous communication with Monarch components, so we recommend leaving it running in a separate terminal. Run it with:

cd nfv_orchestrator

python3 run.py

Warning

Keep the NFV Orchestrator running in this terminal, as it is essential for interactions with the monitoring_manager and other Monarch modules!
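Before moving on, you may want to confirm that the NFV Orchestrator is actually answering requests. The minimal sketch below polls the health endpoint used in Step 7 (`/api/health` on port 6001) until it reports `"status": "success"`. The polling parameters and function names are assumptions, not part of Monarch.

```python
import json
import time
import urllib.request

# Health endpoint from Step 7 (curl -X GET http://localhost:6001/api/health).
NFV_HEALTH_URL = "http://localhost:6001/api/health"


def is_healthy(payload):
    """True when a health response body reports "status": "success"."""
    return isinstance(payload, dict) and payload.get("status") == "success"


def wait_for_health(url=NFV_HEALTH_URL, attempts=10, delay=2.0):
    """Poll the health endpoint until it reports success or the attempts run out."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if is_healthy(json.load(resp)):
                    return True
        except (OSError, ValueError):
            # OSError covers refused connections, timeouts and HTTP errors
            # (urllib's URLError subclasses it); ValueError covers non-JSON bodies.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

`wait_for_health()` returns `True` as soon as the orchestrator answers successfully, or `False` after ten failed attempts with the defaults.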

In Step 7, we deploy the monitoring_manager component.

First, add the following environment variable to your .env file, replacing <node_ip> with the actual IP address:

NFV_ORCHESTRATOR_URI="http://<node_ip>:6001"

Note

Make sure that the NFV orchestrator is running at the specified URI. We can check this as follows:

curl -X GET http://localhost:6001/api/health

You should see the following:

{
  "message": "NFV Orchestrator is healthy",
  "status": "success"
}

From the repository root, navigate to the monitoring_manager directory and run the install.sh script to deploy the component:

cd monitoring_manager

./install.sh

Next, we will deploy the request_translator component.

Add the following environment variable to your .env file, replacing <node_ip> with the actual IP address:

SERVICE_ORCHESTRATOR_URI="http://<node_ip>:30501"

From the repository root, navigate to the request_translator directory and run the install.sh script:

cd request_translator

./install.sh

In this step, we’ll configure datasources and add dashboards in Grafana, using the Grafana GUI from Step 5.

First, add the Monarch datadist (Thanos) datasource:

- In Grafana, navigate to Home > Connections > Data Sources and select Add new data source.
- Configure the datasource by filling in the following fields:
  - Name: monarch-thanos
  - Prometheus server URL: http://<node_ip>:31004
  - Prometheus type: Thanos
  - Thanos version: 0.31.x
  - HTTP method: POST
- Click Save & test.
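The same datasource can also be created through Grafana's HTTP API (`POST /api/datasources`) instead of the GUI, which is handy when redeploying. This Python sketch assumes the Grafana URL and default credentials from Step 5 and maps the GUI fields above onto the Prometheus datasource's `jsonData` options. The exact `prometheusVersion` string that Grafana stores for the "0.31.x" option is an assumption, so compare it against a datasource created in the GUI. The helper names are hypothetical.

```python
import base64
import json
import urllib.request

GRAFANA_URL = "http://localhost:32005"     # Grafana GUI from Step 5
GRAFANA_AUTH = ("admin", "prom-operator")  # default credentials from Step 5


def thanos_datasource(node_ip):
    """Build a Grafana datasource definition mirroring the GUI fields above."""
    return {
        "name": "monarch-thanos",
        "type": "prometheus",   # the Prometheus datasource plugin
        "access": "proxy",      # the Grafana server forwards the queries
        "url": f"http://{node_ip}:31004",
        "jsonData": {
            "httpMethod": "POST",
            "prometheusType": "Thanos",
            # Assumption: the value Grafana stores for the "0.31.x" option.
            "prometheusVersion": "0.31.0",
        },
    }


def create_datasource(definition, base_url=GRAFANA_URL, auth=GRAFANA_AUTH):
    """POST the definition to Grafana's /api/datasources endpoint using basic auth."""
    token = base64.b64encode(f"{auth[0]}:{auth[1]}".encode()).decode()
    req = urllib.request.Request(
        f"{base_url}/api/datasources",
        data=json.dumps(definition).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

For example, call `create_datasource(thanos_datasource("<node_ip>"))` with your node IP substituted for the placeholder.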

To import a pre-configured dashboard for monitoring the two network slices:

- Go to Home > Dashboards > New > Import.
- Select the monarch-dashboard.json file located in the dashboards directory and follow the prompts to complete the import.

In this step, you’ll submit a slice monitoring request to the request_translator component. Upon successful submission, the Monitoring Data Exporters (MDEs) and KPI computation processes will be triggered, and the results will appear on the Grafana dashboard.

First, add the following environment variable to your .env file, replacing <node_ip> with the actual IP address of your Kubernetes host:

MONARCH_THANOS_URL="http://<node_ip>:31004"

To submit a slice monitoring request, we can use the test_api.py script in the request_translator directory:

cd request_translator

python3 test_api.py --url "http://localhost:30700" --json_file requests/request_slice.json submit

Expected output:

Status Code: 200

Response: {'request_id': 'HqYQa4ZMSZaS5PGYVntAJZ', 'status': 'success'}

Tip

Note the request_id for future reference; you can use it later to delete the request.
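If you script submissions, you can capture the request_id instead of copying it by hand. The sample output above is printed as a Python dict literal (single quotes, `True`), so this sketch parses it with `ast.literal_eval` rather than `json.loads`. It assumes test_api.py keeps printing a single `Response: ...` line as shown; the helper names are hypothetical.

```python
import ast
import subprocess
import sys


def parse_response_line(line):
    """Convert a "Response: {...}" line printed by test_api.py into a dict."""
    prefix = "Response:"
    if not line.startswith(prefix):
        raise ValueError(f"not a Response line: {line!r}")
    # The printed response uses Python literal syntax, not JSON.
    return ast.literal_eval(line[len(prefix):].strip())


def request_ids(response):
    """IDs from a submit response ("request_id") or a list response ("data" map)."""
    if "request_id" in response:
        return [response["request_id"]]
    return list(response.get("data", {}))


def list_request_ids(url="http://localhost:30700"):
    """Run `test_api.py --url <url> list` (from request_translator/) and return its IDs."""
    out = subprocess.run(
        [sys.executable, "test_api.py", "--url", url, "list"],
        capture_output=True, text=True, check=True,
    ).stdout
    for line in out.splitlines():
        if line.startswith("Response:"):
            return request_ids(parse_response_line(line))
    return []
```

Run from the request_translator directory, `list_request_ids()` returns the IDs reported by the `list` command, which you can then pass to `delete --request_id`.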

Upon submission, you should see the creation of metrics services for the AMF, SMF, and UPF network functions:

kubectl get service -n open5gs | grep metrics

Example output:

amf-metrics-service   ClusterIP   10.105.19.40   <none>   9090/TCP   8m34s
smf-metrics-service   ClusterIP   None           <none>   9090/TCP   8m34s
upf-metrics-service   ClusterIP   None           <none>   9090/TCP   8m34s

You should also see the KPI computation component running:

kubectl get pods -n monarch | grep kpi

Example output:

kpi-calculator-fc599b544-pqd5b   1/1     Running   0          9m49s

To list all submitted requests, run:

cd request_translator

python3 test_api.py --url "http://localhost:30700" list

Example output:

Status Code: 200

Response: {'data': {'HqYQa4ZMSZaS5PGYVntAJZ': {'api_version': '1.0', 'duration': {'end_time': '2023-12-01T00:05:00Z', 'start_time': '2023-12-01T00:00:00Z'}, 'kpi': {'kpi_description': 'Throughput of the network slice', 'kpi_name': 'slice_throughput', 'sub_counter': {'sub_counter_ids': ['1-000001', '2-000002'], 'sub_counter_type': 'SNSSAI'}, 'units': 'Mbps'}, 'monitoring_interval': {'adaptive': True, 'interval_seconds': 1}, 'request_description': 'Monitoring request for slice throughput', 'scope': {'scope_id': 'NSI01', 'scope_type': 'slice'}}, 'KvaF952C4vsNKQf4Aywc4o': {'api_version': '1.0', 'duration': {'end_time': '2023-12-01T00:05:00Z', 'start_time': '2023-12-01T00:00:00Z'}, 'kpi': {'kpi_description': 'Throughput of the network slice', 'kpi_name': 'slice_throughput', 'sub_counter': {'sub_counter_ids': ['1-000001', '2-000002'], 'sub_counter_type': 'SNSSAI'}, 'units': 'Mbps'}, 'monitoring_interval': {'adaptive': True, 'interval_seconds': 1}, 'request_description': 'Monitoring request for slice throughput', 'scope': {'scope_id': 'NSI01', 'scope_type': 'slice'}}, 'TDpUDNjAh5XSwPbmKNBaQZ': {'api_version': '1.0', 'duration': {'end_time': '2023-12-01T00:05:00Z', 'start_time': '2023-12-01T00:00:00Z'}, 'kpi': {'kpi_description': 'Throughput of the network slice', 'kpi_name': 'slice_throughput', 'sub_counter': {'sub_counter_ids': ['1-000001', '2-000002'], 'sub_counter_type': 'SNSSAI'}, 'units': 'Mbps'}, 'monitoring_interval': {'adaptive': True, 'interval_seconds': 1}, 'request_description': 'Monitoring request for slice throughput', 'scope': {'scope_id': 'NSI01', 'scope_type': 'slice'}}, 'hLHJDHjkEE7dBydukLVCyJ': {'api_version': '1.0', 'duration': {'end_time': '2023-12-01T00:05:00Z', 'start_time': '2023-12-01T00:00:00Z'}, 'kpi': {'kpi_description': 'Throughput of the network slice', 'kpi_name': 'slice_throughput', 'sub_counter': {'sub_counter_ids': ['1-000001', '2-000002'], 'sub_counter_type': 'SNSSAI'}, 'units': 'Mbps'}, 'monitoring_interval': {'adaptive': True, 'interval_seconds': 1}, 'request_description': 'Monitoring request for slice throughput', 'scope': {'scope_id': 'NSI01', 'scope_type': 'slice'}}}, 'status': 'success'}

To delete a specific request by request_id:

cd request_translator

python3 test_api.py --url "http://localhost:30700" delete --request_id <request_id>

Expected output:

Status Code: 200

Response: {'message': 'Monitoring request deleted', 'status': 'success'}

At this point, all Monarch components are set up and ready for use.
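The list output above echoes back the fields of each monitoring request. The Python sketch below assembles a request with that same shape, for example to monitor a different set of S-NSSAIs. It is an assumption that the request_translator accepts exactly this structure; requests/request_slice.json remains the authoritative template, and `build_request` and `write_request` are hypothetical helpers.

```python
import json


def build_request(snssais, interval_seconds=1,
                  start_time="2023-12-01T00:00:00Z", end_time="2023-12-01T00:05:00Z"):
    """Assemble a slice-throughput request using the fields echoed by `test_api.py list`."""
    return {
        "api_version": "1.0",
        "request_description": "Monitoring request for slice throughput",
        "scope": {"scope_type": "slice", "scope_id": "NSI01"},
        "kpi": {
            "kpi_name": "slice_throughput",
            "kpi_description": "Throughput of the network slice",
            "units": "Mbps",
            "sub_counter": {"sub_counter_type": "SNSSAI", "sub_counter_ids": list(snssais)},
        },
        "duration": {"start_time": start_time, "end_time": end_time},
        "monitoring_interval": {"adaptive": True, "interval_seconds": interval_seconds},
    }


def write_request(path, request):
    """Write the request as pretty-printed JSON for use with --json_file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(request, f, indent=2)
```

You could then write it with `write_request("requests/my_request.json", build_request(["1-000001"]))` and submit it with `python3 test_api.py --url "http://localhost:30700" --json_file requests/my_request.json submit`, where `my_request.json` is a hypothetical file name.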

To start monitoring KPIs in real time, generate traffic through the UEs. Once traffic is flowing, you will see the computed KPIs for the network slices in the Grafana dashboard configured earlier.
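The slice_throughput KPI requested in Step 10 is reported in Mbps at a 1-second interval. How Monarch's kpi-calculator derives it is not described here; the sketch below only illustrates the usual calculation for a cumulative byte counter (for example, a per-slice traffic counter exported by a UPF): the byte delta over the interval, times 8 bits per byte, divided by the elapsed seconds and by 10^6. The function names and the counter source are assumptions.

```python
def throughput_mbps(bytes_start, bytes_end, t_start, t_end):
    """Average throughput in Mbps between two samples of a cumulative byte counter."""
    elapsed = t_end - t_start
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    delta = bytes_end - bytes_start
    if delta < 0:
        # Counter reset (e.g. the exporting pod restarted): count bytes since the
        # reset, similar to how Prometheus' rate() treats a counter decrease.
        delta = bytes_end
    return delta * 8 / elapsed / 1e6


def per_slice_throughput(prev, curr, interval_s):
    """prev/curr: {snssai: cumulative_bytes} sampled interval_s seconds apart."""
    return {
        snssai: throughput_mbps(prev.get(snssai, 0), total, 0.0, interval_s)
        for snssai, total in curr.items()
    }
```

For example, 1,250,000 bytes counted over one second gives `throughput_mbps(0, 1_250_000, 0.0, 1.0) == 10.0` Mbps.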


Note

For testing, you can use the uesimtun0 interface on the UEs to send ping traffic through the network slices, as described in the ping test instructions of the open5gs-k8s repository.

The dashboard below shows Monarch being used to monitor network slices for a cloud-gaming use case during a demo at the University of Waterloo.

If you use the code in this repository in your research work or project, please consider citing the following publication.

N. Saha, N. Shahriar, R. Boutaba and A. Saleh. (2023). MonArch: Network Slice Monitoring Architecture for Cloud Native 5G Deployments. In Proceedings of the IEEE/IFIP Network Operations and Management Symposium (NOMS). Miami, Florida, USA, 08 - 12 May, 2023.

Contributions, improvements to documentation, and bug-fixes are always welcome! See first-contributions.