GSNet: Joint Vehicle Pose and Shape Reconstruction with Geometrical and Scene-aware Supervision [ECCV'20]

Code and 3D car mesh models for the ECCV 2020 paper "GSNet: Joint Vehicle Pose and Shape Reconstruction with Geometrical and Scene-aware Supervision". GSNet jointly estimates vehicle pose and reconstructs vehicle shape from a single RGB image.

[arXiv]|[Paper]|[Project Page]

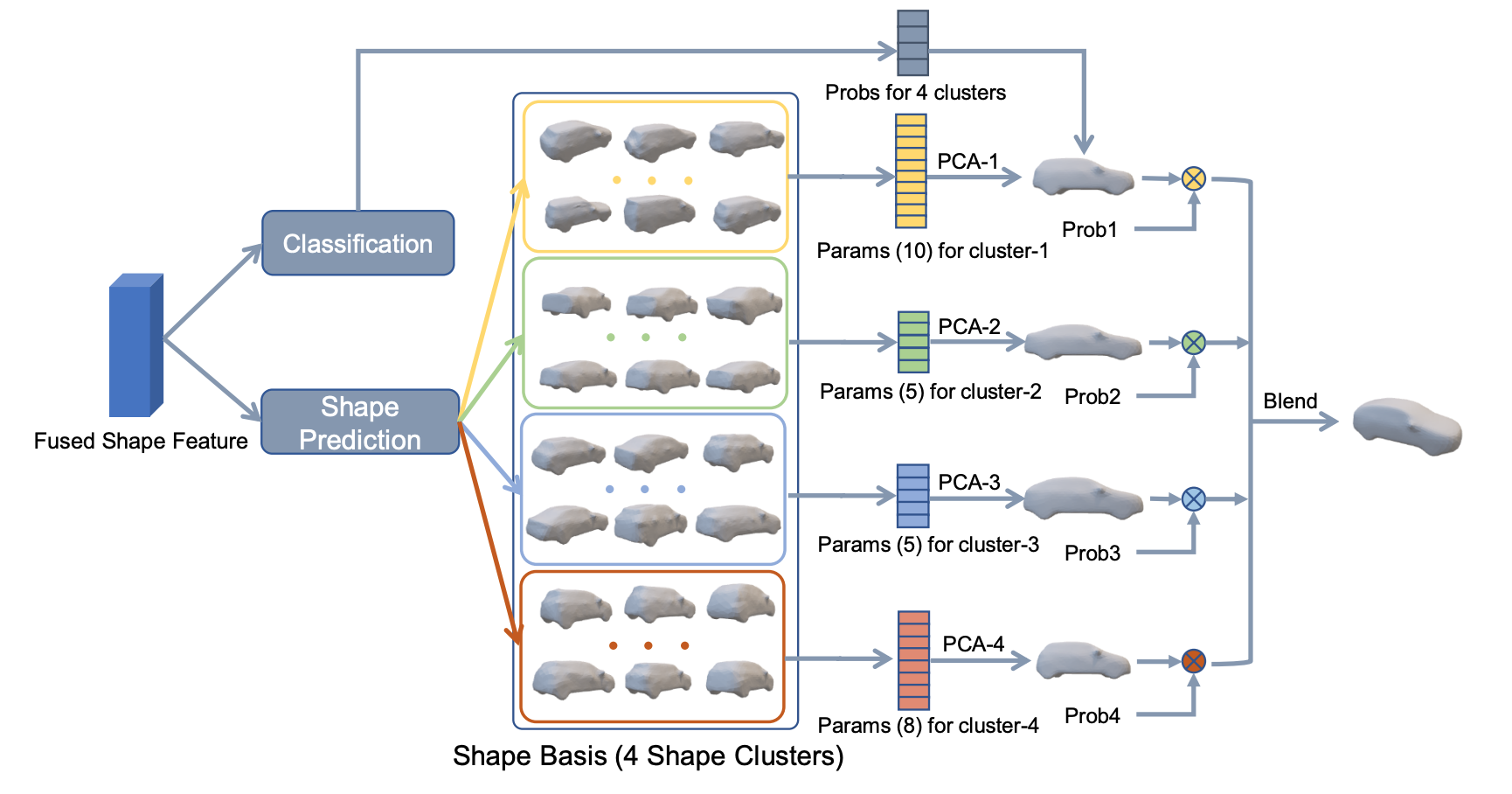

We present a novel end-to-end framework named GSNet (Geometric and Scene-aware Network), which jointly estimates 6DoF poses and reconstructs detailed 3D car shapes from a single urban street-view image. GSNet uses a unique four-way feature extraction and fusion scheme and directly regresses 6DoF vehicle poses and shapes in a single forward pass. Extensive experiments show that this diverse feature extraction and fusion scheme greatly improves model performance. Based on a divide-and-conquer 3D shape representation strategy, GSNet reconstructs 3D vehicle shapes in great detail (1352 vertices and 2700 faces). This dense mesh representation further leads us to consider geometrical consistency and scene context, and inspires a new multi-objective loss function to regularize network training, which in turn improves the accuracy of 6DoF pose estimation and validates the merit of performing both tasks jointly.
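
To make the term "6DoF pose" concrete, the following is a minimal sketch of how a rotation (3 degrees of freedom) and a translation (3 degrees of freedom) place mesh vertices in camera space. The Euler-angle order (`Rz @ Ry @ Rx`) and the function names are assumptions made for illustration; they are not taken from the GSNet code, which may use a different convention.

```python
import numpy as np

def euler_to_rotation(rx, ry, rz):
    """Rotation matrix from Euler angles in radians.

    Assumed convention: rotate about x, then y, then z (R = Rz @ Ry @ Rx).
    """
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    rot_y = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    rot_z = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return rot_z @ rot_y @ rot_x

def apply_pose(vertices, rotation, translation):
    """Apply a rigid transform to an (N, 3) array of vertices."""
    return vertices @ rotation.T + translation

# Hypothetical example: one vertex, a 90-degree turn about z, 10 units of depth.
rotation = euler_to_rotation(0.0, 0.0, np.pi / 2)
posed = apply_pose(np.array([[1.0, 0.0, 0.0]]), rotation, np.array([0.0, 0.0, 10.0]))
```

The same `apply_pose` call works unchanged for a full car mesh, since it operates on any `(N, 3)` vertex array.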

(Check Table 3 of the paper for full results)

| Method | A3DP-Rel-mean | A3DP-Abs-mean |
|---|---|---|
| DeepMANTA (CVPR'17) | 16.04 | 20.1 |
| 3D-RCNN (CVPR'18) | 10.79 | 16.44 |
| Kpt-based (CVPR'19) | 16.53 | 20.4 |
| Direct-based (CVPR'19) | 11.49 | 15.15 |
| GSNet (ECCV'20) | 20.21 | 18.91 |

GSNet is built on Detectron2, developed by FAIR. Please follow its readme file first. We recommend the pre-built Detectron2 (Linux only) with PyTorch 1.5, which can be installed with the following command:

    python -m pip install detectron2 -f \
      https://dl.fbaipublicfiles.com/detectron2/wheels/cu101/torch1.5/index.html
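
If your environment uses a different CUDA or PyTorch version, the wheel index URL changes accordingly. The sketch below builds the install command from those two values. The URL pattern is inferred from the command above, the helper names are hypothetical, and not every CUDA/PyTorch combination has a published wheel, so check the Detectron2 installation page before relying on it.

```python
import sys
from typing import List

WHEEL_ROOT = "https://dl.fbaipublicfiles.com/detectron2/wheels"

def detectron2_wheel_index(cuda_tag: str, torch_version: str) -> str:
    """Build the wheel index URL, e.g. cuda_tag="cu101", torch_version="1.5"."""
    return f"{WHEEL_ROOT}/{cuda_tag}/torch{torch_version}/index.html"

def pip_install_args(cuda_tag: str, torch_version: str) -> List[str]:
    """Argument list suitable for subprocess.run; nothing is executed here."""
    return [
        sys.executable, "-m", "pip", "install", "detectron2",
        "-f", detectron2_wheel_index(cuda_tag, torch_version),
    ]

if __name__ == "__main__":
    # Prints the command for the configuration recommended in this README.
    print(" ".join(pip_install_args("cu101", "1.5")))
```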

The ApolloCar3D dataset is described in the ApolloCar3D paper, and the corresponding images can be obtained from link. We provide our converted car meshes (same topology), keypoints, bounding boxes, 3D pose annotations, etc. in COCO format under the car_deform_result and datasets/apollo/annotations folders.
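
Since the annotations are in COCO format, they can be read with the standard library alone. The sketch below groups annotations by image using only the standard COCO keys (`images`, `annotations`, `image_id`, `bbox`). The in-memory example stands in for a real annotation file, and the `car_id` and `pose` fields are hypothetical placeholders; inspect the actual JSON files for the real field names.

```python
import json
from collections import defaultdict

def group_annotations_by_image(coco):
    """Map each image id to the list of annotations that reference it."""
    by_image = defaultdict(list)
    for ann in coco["annotations"]:
        by_image[ann["image_id"]].append(ann)
    return dict(by_image)

# Tiny stand-in for a real file; with a file on disk you would use
# json.load(open(path)) instead. "car_id" and "pose" are hypothetical fields.
example = json.loads("""
{
  "images": [{"id": 1, "file_name": "a.jpg", "width": 3384, "height": 2710}],
  "annotations": [
    {"id": 10, "image_id": 1, "category_id": 1, "bbox": [100, 200, 50, 40],
     "car_id": 5, "pose": [0.1, 0.2, 0.3, 1.0, 2.0, 30.0]},
    {"id": 11, "image_id": 1, "category_id": 1, "bbox": [300, 220, 80, 60],
     "car_id": 7, "pose": [0.0, 0.1, 0.0, -2.0, 1.5, 45.0]}
  ],
  "categories": [{"id": 1, "name": "car"}]
}
""")

grouped = group_annotations_by_image(example)
for image in example["images"]:
    cars = grouped.get(image["id"], [])
    print(image["file_name"], "has", len(cars), "annotated cars")
```

COCO bounding boxes are stored as `[x, y, width, height]` in pixels, with `(x, y)` the top-left corner.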

car_deform_result: We provide 79 types of ground truth car meshes with the same topology (1352 vertices and 2700 faces) converted using SoftRas (https://github.com/ShichenLiu/SoftRas)

The file car_models.py gives a detailed description of the correspondence between car ids and car types.

merge_mean_car_shape: The mean car shapes of the four shape bases used by the four independent PCA models.

pca_components: The learned weights of the four PCA models.
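
As a rough illustration of how a mean shape and PCA components combine into a mesh, the sketch below reconstructs vertices as the mean shape plus a weighted sum of components, for one of four bases. The array layouts, the number of components, and the function name are assumptions, and random data stands in for the provided files; the actual loading and reconstruction logic lives in roi_heads.py.

```python
import numpy as np

NUM_VERTICES = 1352  # vertex count stated in this README
NUM_BASES = 4        # four independent PCA models
NUM_COMPONENTS = 10  # hypothetical number of components per model

def reconstruct_vertices(mean_shapes, components, basis_id, coeffs):
    """Standard PCA reconstruction for one shape basis.

    mean_shapes: (NUM_BASES, V * 3) flattened mean vertices per basis
    components:  (NUM_BASES, K, V * 3) principal components per basis
    coeffs:      (K,) shape coefficients for the chosen basis
    Returns an (V, 3) array of vertex positions.
    """
    flat = mean_shapes[basis_id] + coeffs @ components[basis_id]
    return flat.reshape(-1, 3)

# Random stand-ins for the contents of merge_mean_car_shape and pca_components.
rng = np.random.default_rng(0)
mean_shapes = rng.normal(size=(NUM_BASES, NUM_VERTICES * 3))
components = rng.normal(size=(NUM_BASES, NUM_COMPONENTS, NUM_VERTICES * 3))

# Zero coefficients give back the mean shape of the selected basis.
mean_mesh = reconstruct_vertices(mean_shapes, components, 2, np.zeros(NUM_COMPONENTS))
# Non-zero coefficients deform the mean shape along the components.
deformed = reconstruct_vertices(mean_shapes, components, 2, rng.normal(size=NUM_COMPONENTS))
```

Because all meshes share one topology, only the vertex positions change between shapes; the 2700 faces can be reused as-is.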

How to use our car mesh models? Please refer to the class StandardROIHeads in roi_heads.py, which contains the core inference code for the ROI head of GSNet. It relies on SoftRas to load and manipulate the car meshes.

Please follow the readme page (including the pretrained model).

Please star this repository and cite the following paper in your publications if it helps your research:

    @InProceedings{gsnet2020ke,
      author = {Ke, Lei and Li, Shichao and Sun, Yanan and Tai, Yu-Wing and Tang, Chi-Keung},
      title = {GSNet: Joint Vehicle Pose and Shape Reconstruction with Geometrical and Scene-aware Supervision},
      booktitle = {European Conference on Computer Vision (ECCV)},
      year = {2020}
    }

Related repo based on Detectron2: BCNet (CVPR'21), a bilayer instance segmentation method

Related work reading: EgoNet, CVPR'21

An MIT license is used for this repository. However, certain third-party datasets, such as ApolloCar3D, are subject to their respective licenses and may not permit commercial use.