PROJECT NOT UNDER ACTIVE MANAGEMENT

This project will no longer be maintained by Intel.

Intel has ceased development and contributions including, but not limited to, maintenance, bug fixes, new releases, or updates, to this project.

Intel no longer accepts patches to this project.

If you have an ongoing need to use this project, are interested in independently developing it, or would like to maintain patches for the open source software community, please create your own fork of this project.

Contact: webadmin@linux.intel.com

This reference use case is an end-to-end reference solution for building an AI-augmented multi-modal semantic search system for document images (for example, scanned documents). This solution can help enterprises gain more insights from their document archives more quickly and easily using natural language queries.

Enterprises are accumulating a vast quantity of documents, a large portion of which is in image formats such as scanned documents. These documents contain a large amount of valuable information, but it is a challenge for enterprises to index, search and gain insights from the document images due to the reasons below:

- The end-to-end (e2e) solution involves many components that are not easy to integrate together.

- Indexing a large document image collection is very time consuming. Distributed indexing capability is needed, but there is no open-source solution that is ready to use.

- Querying document image collections with natural language requires multi-modality AI that understands both images and languages. Building such multi-modality AI models requires deep expertise in machine learning.

- Deploying multi-modality AI models together with databases and a user interface is not an easy task.

- The majority of multi-modality AI models can only comprehend English; developing non-English models takes time and requires ML experience.

In this reference use case, we implement and demonstrate a complete end-to-end solution that helps enterprises tackle these challenges and jump-start the customization of the reference solution for their own document archives. The architecture of the reference use case is shown in the figure below. It is composed of 3 pipelines, which are described in detail in the How It Works section:

- Single-node Dense Passage Retriever (DPR) fine tuning pipeline

- Image-to-document indexing pipeline (can be run on either single node or distributed on multiple nodes)

- Single-node deployment pipeline

- Developer productivity: The 3 pipelines in this reference use case are all containerized and allow customization through either command line arguments or config files. Developers can bring their own data and jump start development very easily.

- New state-of-the-art (SOTA) retrieval recall & mean reciprocal rank (MRR) on the benchmark dataset: better than the SOTA reported in this paper on Dureader-vis, the largest open-source document visual retrieval dataset (158k raw images in total). We demonstrate that the AI-augmented ensemble retrieval method achieves higher recall and MRR than the non-AI retrieval method (see the table below).

- Performance: distributed capability significantly accelerates the indexing process to shorten the development time.

- Deployment made easy: using two Docker containers from Intel's open domain question answering workflow and two other open-source containers, you can easily deploy the retrieval solution by customizing the config files and running the launch script provided in this reference use case.

- Multilingual customizable models: you can use our pipelines to develop and deploy your own models in many different languages.

| Method | Top-5 Recall | Top-5 MRR | Top-10 Recall | Top-10 MRR |
|---|---|---|---|---|
| BM25 only (ours) | 0.7665 | 0.6310 | 0.8333 | 0.6402 |
| DPR only (ours) | 0.6362 | 0.4997 | 0.7097 | 0.5094 |
| Ensemble (ours) | 0.7983 | 0.6715 | 0.8452 | 0.6780 |
| SOTA reported by Baidu | 0.7633 | did not report | 0.8180 | 0.6508 |
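For readers unfamiliar with the two metrics in the table, the sketch below shows one common way to compute Top-K recall and Top-K MRR. It assumes exactly one gold passage per query; the function names and the toy data are illustrative and are not taken from this repository's evaluation script.

```python
from typing import Dict, List


def recall_at_k(ranked: Dict[str, List[str]], gold: Dict[str, str], k: int) -> float:
    """Fraction of queries whose gold passage appears in the top-k results."""
    hits = sum(1 for query, docs in ranked.items() if gold[query] in docs[:k])
    return hits / len(ranked)


def mrr_at_k(ranked: Dict[str, List[str]], gold: Dict[str, str], k: int) -> float:
    """Mean of 1/rank of the gold passage; a query contributes 0 if the gold
    passage is not within the top-k results."""
    total = 0.0
    for query, docs in ranked.items():
        top = docs[:k]
        if gold[query] in top:
            total += 1.0 / (top.index(gold[query]) + 1)
    return total / len(ranked)


# Hypothetical toy data: passage ids returned per query, best first.
ranked = {"q1": ["a", "b", "c"], "q2": ["d", "e", "f"], "q3": ["g", "h", "i"]}
gold = {"q1": "a", "q2": "e", "q3": "z"}

print(recall_at_k(ranked, gold, k=2))
print(mrr_at_k(ranked, gold, k=2))
```

Recall only asks whether the answer was retrieved, while MRR also rewards placing it near the top, which is why the table reports both.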

Please note that indexing of the entire Dureader-vis dataset can take days depending on the type and the number of CPU nodes that you are using for the indexing pipeline. This reference use case provides a multi-node distributed indexing pipeline that accelerates the indexing process. It is recommended to use at least 2 nodes with the hardware specifications listed in the table below. A network file system (NFS) is needed for the distributed indexing pipeline.

To try out this reference use case in a shorter time frame, you can download only one part of the Dureader-vis dataset (the entire dataset has 10 parts) and follow the instructions below.

| Supported Hardware | Specifications |
|---|---|
| Intel® 1st, 2nd, 3rd, and 4th Gen Xeon® Scalable Performance processors | FP32 |
| Memory | larger is better, recommend >376 GB |
| Storage | >250 GB |

We present some technical background on the three pipelines of this use case. We recommend running our reference solution first and then customizing the reference solution to your own use case by following the How to customize this reference kit section.

Dense passage retriever is a dual-encoder retriever based on transformers. Please refer to the original DPR paper for an in-depth description of the DPR model architecture and the fine-tuning algorithms. Briefly, DPR consists of two encoders, one for the query and one for the documents. DPR encoders can be fine tuned with custom datasets using the in-batch negative method, where the answer documents of the other queries in the mini-batch serve as the negative samples. Hard negatives can be added to further improve the retrieval performance (recall and MRR).
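The in-batch negative method can be illustrated with a small, dependency-free sketch. It assumes dot-product similarity and a softmax negative log-likelihood loss, as described in the DPR paper; the function names are hypothetical and this is not the training code used in this repository.

```python
import math
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot-product similarity between two vectors."""
    return sum(a * b for a, b in zip(u, v))


def in_batch_negative_loss(
    query_vecs: List[Sequence[float]], passage_vecs: List[Sequence[float]]
) -> float:
    """Mean negative log-likelihood of the positive passage for each query.

    passage_vecs[i] is the positive passage for query_vecs[i]; every other
    passage in the mini-batch is treated as a negative for that query.
    """
    losses = []
    for i, query in enumerate(query_vecs):
        scores = [dot(query, passage) for passage in passage_vecs]
        peak = max(scores)  # subtract the max for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(s - peak) for s in scores))
        losses.append(log_sum_exp - scores[i])
    return sum(losses) / len(losses)


# Hypothetical 2-dimensional embeddings for a batch of two query/passage pairs.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]     # each query matches its own passage
misaligned = [[0.0, 1.0], [1.0, 0.0]]  # each query matches the other passage

print(in_batch_negative_loss(queries, aligned))
print(in_batch_negative_loss(queries, misaligned))
```

The loss is lower when each query embedding is closest to its own passage, which is the signal fine-tuning uses to pull matching pairs together without needing separately mined negatives.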

In this reference use case, we used a pretrained cross-lingual language model open-sourced on the Hugging Face model hub, namely, the infoxlm-base model pretrained by Microsoft, as the starting point for both the query encoder and document encoder. We fine tuned the encoders with in-batch negatives. However, we did not include hard negatives in our fine-tuning pipeline. This can be future work in our later releases. We showcase that ensembling our fine-tuned DPR with a BM25 retriever (a widely used type of non-AI retriever) can improve the retrieval recall and MRR compared to BM25 only.

The stock haystack library only supports BERT-based DPR models. We have modified the haystack APIs to allow any encoder architecture (e.g., RoBERTa, xlm-RoBERTa, etc.) that you can load via the from_pretrained method of the Hugging Face transformers library. By using our containers, you can fine tune a diverse array of custom DPR models by setting the xlm_roberta flag to true when initializing the DensePassageRetriever object. (Note: although the flag is called "xlm_roberta", it supports any model architecture that can be loaded with the from_pretrained method.)

In order to retrieve documents in response to queries, we first need to index the documents: the raw document images are converted into text passages and stored in databases with indices. In this reference use case, we demonstrate that using an ensemble retrieval method (BM25 + DPR) improves the retrieval recall and MRR over the BM25-only and DPR-only retrieval methods. In order to conduct the ensemble retrieval, we need to build two databases: 1) an ElasticSearch database for BM25 retrieval, and 2) a PostgreSQL database plus a FAISS index file for DPR retrieval.

The architecture of the indexing pipeline is shown in the diagram below. There are 3 tasks in the indexing pipeline:

- Preprocessing task: this task consists of 3 steps - image preprocessing, text extraction with OCR (optical character recognition), post processing of OCR outputs. This task converts images into text passages.

- Indexing task: this task writes the text passages produced by the Preprocessing task into one or two databases, depending on the retrieval method that the user specified.

- Embedding task: this task generates dense vector representations of text passages using the DPR document encoder and then generates a FAISS index file with the vectors. FAISS is a similarity search engine for dense vectors. When it comes to retrieval, the query will be turned into its vector representation by the DPR query encoder, and the query vector will be used to search against the FAISS index to find the vectors of text passages with the highest similarity to the query vector. The embedding task is required for the DPR retrieval or the ensemble retrieval method, but is not required for BM25 retrieval.
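To make the embedding task's search step concrete, the sketch below performs a brute-force nearest-neighbor search in plain Python. It only illustrates what a dense vector search returns; FAISS does this far more efficiently, and the inner-product similarity, the function name, and the toy vectors are assumptions rather than details taken from this repository.

```python
from typing import List, Sequence, Tuple


def top_k_by_inner_product(
    query_vec: Sequence[float], passage_vecs: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Return (passage index, score) for the k passages with the highest
    inner product with the query vector, best first."""
    scored = [
        (sum(q * p for q, p in zip(query_vec, vec)), idx)
        for idx, vec in enumerate(passage_vecs)
    ]
    # Sort by score, highest first (assumed similarity: inner product).
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(idx, score) for score, idx in scored[:k]]


# Hypothetical passage embeddings, as the document encoder might produce.
passages = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
# Hypothetical query embedding, as the query encoder might produce.
query = [0.9, 0.1]

for idx, score in top_k_by_inner_product(query, passages, k=2):
    print(idx, round(score, 3))
```

The returned indices would then be used to look up the passage text and metadata, which in this use case live in the PostgreSQL database.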

After the DPR encoders are fine-tuned and the document images are indexed into databases as text passages, we can deploy a retrieval system on a server with Docker containers and retrieve documents in response to user queries. Once the deployment pipeline is successfully launched, users can interact with the retrieval system through a web user interface (UI) and submit queries in natural language. The retrievers will search for the most relevant text passages in the databases and return those passages to be displayed on the web UI. The diagram below shows how the BM25 and DPR retrievers retrieve top-K passages and how the ensembler reranks the passages with weighting factors to improve on the recall and MRR of the individual retrievers.
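One simple way to rerank with weighting factors is a weighted sum of normalized scores, sketched below. The min-max normalization, the equal default weights, and the function names are assumptions made for illustration; the ensembler shipped with this use case may combine scores differently.

```python
from typing import Dict, List, Tuple


def min_max_normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores to [0, 1] so that BM25 and DPR scores are comparable."""
    if not scores:
        return {}
    low, high = min(scores.values()), max(scores.values())
    if high == low:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - low) / (high - low) for doc, s in scores.items()}


def ensemble_rerank(
    bm25_scores: Dict[str, float],
    dpr_scores: Dict[str, float],
    w_bm25: float = 0.5,  # assumed weight, not the repository default
    w_dpr: float = 0.5,   # assumed weight, not the repository default
    top_k: int = 5,
) -> List[Tuple[str, float]]:
    """Merge the top-K lists of both retrievers and rerank by weighted score.
    A passage missing from one retriever's list contributes 0 for it."""
    bm25_n = min_max_normalize(bm25_scores)
    dpr_n = min_max_normalize(dpr_scores)
    combined = {
        doc: w_bm25 * bm25_n.get(doc, 0.0) + w_dpr * dpr_n.get(doc, 0.0)
        for doc in set(bm25_n) | set(dpr_n)
    }
    # Highest combined score first; ties are broken by passage id.
    return sorted(combined.items(), key=lambda item: (-item[1], item[0]))[:top_k]


# Hypothetical retriever outputs: passage id -> raw score.
bm25 = {"p1": 12.0, "p2": 8.0, "p3": 4.0}
dpr = {"p2": 0.9, "p4": 0.8, "p1": 0.5}

print(ensemble_rerank(bm25, dpr, top_k=3))
```

In this toy example p2 ranks first because both retrievers score it well, which is the intuition behind the ensemble outperforming either retriever alone.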

Set up environment variables on the head node, and if planning to run distributed indexing pipeline, set up the environment variables on the worker nodes as well.

export HEAD_IP=<your head node ip address>

export MODEL_NAME=my_dpr_model # the default name for the fine-tuned dpr model

Append "haystack-api" to $NO_PROXY:

export NO_PROXY=<your original NO_PROXY>,haystack-api

Define an environment variable named NFS_DIR that stores the workspace path. This can be an existing directory or one to be created in later steps. This variable is used by all the commands that rely on absolute paths.

export NFS_DIR=/mydisk/mtw/work

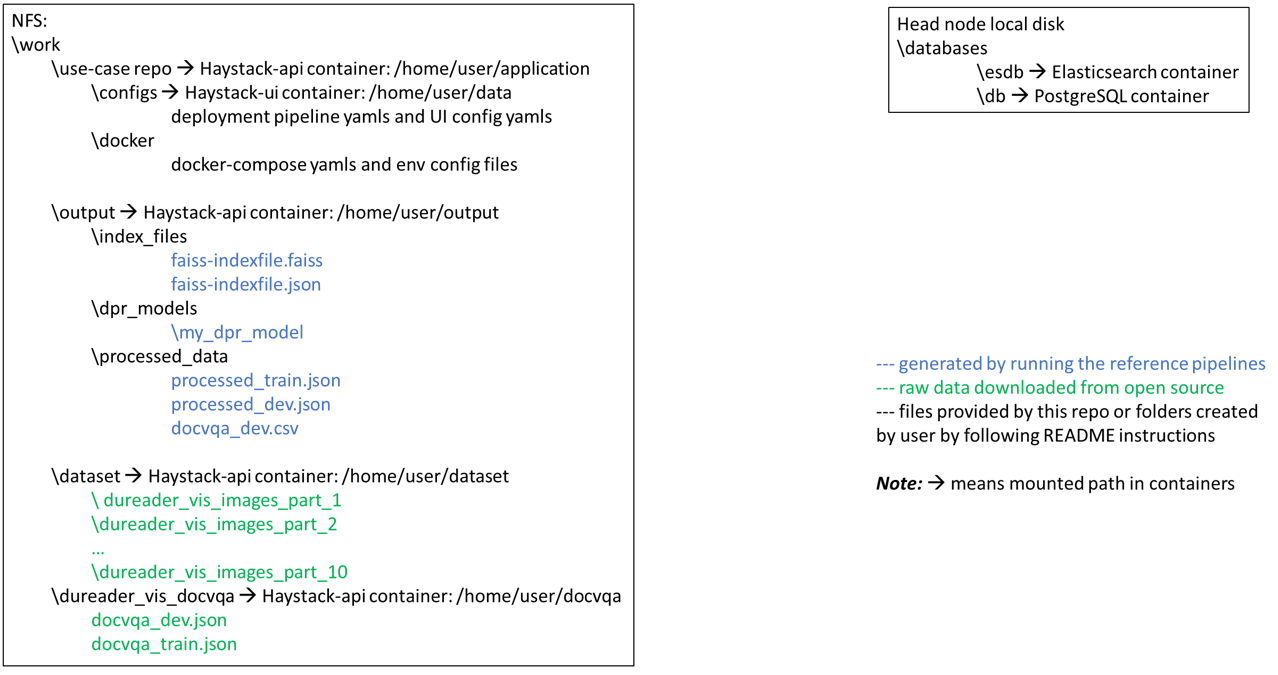

Set up the directories as shown in the diagram below. Some directories will be mounted to containers when you run the pipelines later on. You don't need to worry about mounting the directories when running our reference solution as mounting will be done automatically by our scripts. We want to show the mounting architecture here to make it clear and easy for you to customize the pipelines later on.

Note: If you want to run the distributed indexing pipeline with multiple nodes, please set up the directories on a network file system (NFS) to store raw image data and repos as well as outputs from the pipelines. However, if you only want to run single-node pipelines, you can set up the directories on a local disk.

# On NFS for distributed indexing

# or localdisk if only running single-node pipelines

mkdir -p $NFS_DIR

cd $NFS_DIR

mkdir $NFS_DIR/dataset $NFS_DIR/output

mkdir $NFS_DIR/output/dpr_models $NFS_DIR/output/index_files $NFS_DIR/output/processed_data

On the local disk of the head node, make a database directory to store the files generated by the indexing pipeline.

# set up env variable for databases directory

export LOCAL_DIR=/mydisk/mtw/databases

# On the local disk of the head node, create database directory

mkdir -p $LOCAL_DIR

Double check the environment variables are set correctly with commands below:

echo $HEAD_IP

echo $LOCAL_DIR

echo $MODEL_NAME

Important Notes:

- Users need to have adequate write permissions to the folders on NFS. IT policies of NFS may prevent you from mounting volumes on NFS to containers. Please refer to the Troubleshooting section if you run into a "permission denied" error when running the pipelines.

- It is important to set up the $LOCAL_DIR variable. Make sure you have it set up.

- You may want to double check your http and https proxies on your machines to make sure you can download models from external internet, more specifically, from Hugging Face model hub and from PaddleOCR.

cd $NFS_DIR

git clone https://github.com/intel/document-automation.git

The Dureader-vis dataset is the largest open-source dataset for document visual retrieval (retrieval of images that contain answers to natural language queries). The entire dataset has 158k raw images. Refer to the dataset source for more details.

# Note: Make sure Anaconda or Miniconda is already installed

cd $NFS_DIR/document-automation

. scripts/run_download_dataset.sh

First, get the raw images for the indexing pipeline. There are 10 parts of images in total; you can download all or a subset of them to try out this reference use case.

Note: Indexing the entire Dureader-vis dataset will take a long time. Download only one part of the Dureader-vis dataset (for example, dureader_vis_images_part_2.tar.gz) to run through this reference use case in a shorter period of time. Each part contains about 16k images.

# Download the raw images into dataset folder.

cd $NFS_DIR/dataset

wget https://dataset-bj.cdn.bcebos.com/qianyan/dureader_vis_images_part_2.tar.gz

tar -xzf dureader_vis_images_part_2.tar.gz

Then get the training and dev json files for the fine-tuning pipeline. You will get docvqa_train.json and docvqa_dev.json after you run the commands below.

cd $NFS_DIR # come back to the workspace directory

# download dureader_vis_docvqa.tar.gz to workspace

wget https://dataset-bj.cdn.bcebos.com/qianyan/dureader_vis_docvqa.tar.gz

tar -xzf dureader_vis_docvqa.tar.gz

For data scientists and developers, we provide the following:

- Single-node step-by-step preprocessing, fine-tuning, indexing and deployment pipelines with Docker compose,

- Containerized multi-node distributed indexing pipeline to accelerate the indexing process.

For MLOps engineers, we provide a template to run this use case with Argo. For advanced users, we will explain how you can bring your own scripts and run containers interactively.

In addition, for users who want to test the reference solution without going into a lot of technical details, we provide instructions to execute the single-node fine-tuning and indexing pipelines with a single docker compose command.

You can run the reference pipelines using the following environments:

- Docker

- Argo

You'll need to install Docker Engine on your development system. Note that while Docker Engine is free to use, Docker Desktop may require you to purchase a license. See the Docker Engine Server installation instructions for details.

If the Docker image is run on a cloud service, you may also need credentials to perform training and inference related operations (such as these for Azure):

- Set up the Azure Machine Learning Account

- Configure the Azure credentials using the Command-Line Interface

- Compute targets in Azure Machine Learning

- Virtual Machine Products Available in Your Region

Ensure you have Docker Compose installed on your machine. If you don't have this tool installed, consult the official Docker Compose installation documentation.

DOCKER_CONFIG=${DOCKER_CONFIG:-$HOME/.docker}

mkdir -p $DOCKER_CONFIG/cli-plugins

curl -SL https://github.com/docker/compose/releases/download/v2.7.0/docker-compose-linux-x86_64 -o $DOCKER_CONFIG/cli-plugins/docker-compose

chmod +x $DOCKER_CONFIG/cli-plugins/docker-compose

docker compose version

Build or pull the provided docker image.

cd $NFS_DIR/document-automation/docker

docker compose build

OR

docker pull intel/ai-workflows:doc-automation-fine-tuning

docker pull intel/ai-workflows:doc-automation-indexing

We preprocess the docvqa_train.json and docvqa_dev.json files from Dureader-vis that contain questions, answers and document text extracted by PaddleOCR. Refer to the dataset source for more details on the json files. The preprocessing is needed to satisfy the requirements of the haystack APIs for DPR training. By default, the preprocessing splits the document text into passages with max_length=500, overlap=10, min_length=5, and identifies the positive passage for each question. Please refer to this section on how to customize this step.
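The passage-splitting step can be pictured as a sliding window over the document text. The sketch below is a minimal illustration using the default values quoted above; it assumes lengths are measured in characters, which the source does not state, and the function name is hypothetical rather than the one used in the preprocessing script.

```python
from typing import List


def split_into_passages(
    text: str, max_length: int = 500, overlap: int = 10, min_length: int = 5
) -> List[str]:
    """Split text into windows of up to max_length characters, where each
    window starts `overlap` characters before the previous one ends.
    Windows shorter than min_length are dropped."""
    if overlap >= max_length:
        raise ValueError("overlap must be smaller than max_length")
    step = max_length - overlap
    passages = []
    for start in range(0, len(text), step):
        passage = text[start:start + max_length]
        if len(passage) >= min_length:
            passages.append(passage)
        if start + max_length >= len(text):
            break  # the current window already reached the end of the text
    return passages


# Hypothetical document text of 1000 characters.
document = "".join(str(i % 10) for i in range(1000))
passages = split_into_passages(document)

print([len(p) for p in passages])
print(passages[0][-10:] == passages[1][:10])
```

The overlap keeps an answer that straddles a window boundary intact in at least one passage, and the minimum length filters out fragments too short to be useful for retrieval.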

You will see progress bars showing the dataset processing status when you run the commands below.

# go inside the docker folder of the use case repo

cd $NFS_DIR/document-automation/docker

docker compose run pre-process

To run the reference fine-tuning pipeline with Docker compose, please follow the steps below. To customize the fine-tuning pipeline, please refer to the Customize Fine Tuning Pipeline section.

Our reference docker-compose.yml is set up to use the default directories shown in the Get Started section. If you want to set up your directories in a different way, you can export the environment variables listed in the table below before you run the docker compose commands.

| Environment Variable Name | Default Value | Description |
|---|---|---|
| DATASET | $PWD/../../dureader_vis_docvqa | Path to Dureader-vis VQA Dataset |
| MODEL_NAME | my_dpr_model | Name of the model folder |
| SAVEPATH | $PWD/../../output | Path to processed data and fine-tuned DPR models |

Run the fine-tuning pipeline using Docker compose as shown below. You will see progress bars showing the training status. The default hyperparameters used for fine tuning are listed here.

cd $NFS_DIR/document-automation/docker

docker compose run fine-tuning

To run single-node indexing, go to this section. To run multi-node distributed indexing, go to this section.

We provide 3 options for indexing: bm25, dpr, all. Our reference use case uses the all option, which will index the document images for both BM25 and DPR retrieval.

The default methods/models/parameters used for indexing are shown in table below. To customize the indexing pipeline, please refer to the Customize Indexing Pipeline section.

| Methods/Models/Parameters | Default |
|---|---|
| Image preprocessing method | grayscale |
| OCR engine | paddleocr |
| post processing of OCR outputs | splitting into passages |
| max passage length | 500 |
| passage overlap | 10 |
| min passage length | 5 |
| DPR model | fine-tuned from microsoft/infoxlm-base with the fine-tuning pipeline above |

Both single-node and multi-node distributed indexing pipelines use Ray in the backend to parallelize and accelerate the process.

Tune the following Ray params in scripts/run_distributed_indexing.sh based on your system (number of CPU cores and memory size). You can start with the default values and refer to the Troubleshooting section if you get an out-of-memory error.

RAY_WRITING_BS=10000

RAY_EMBED_BS=50

RAY_PREPROCESS_MIN_ACTORS=8

RAY_PREPROCESS_MAX_ACTORS=20

RAY_EMBED_MIN_ACTORS=4

RAY_EMBED_MAX_ACTORS=8

RAY_PREPROCESS_CPUS_PER_ACTOR=4

RAY_WRITING_CPUS_PER_ACTOR=4

RAY_EMBED_CPUS_PER_ACTOR=10
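When adapting these values, a rough back-of-the-envelope check can help. The sketch below assumes that each Ray actor reserves its CPUS_PER_ACTOR logical CPUs and that an actor pool scales between its MIN_ACTORS and MAX_ACTORS settings; the helper name and the 64-CPU cluster size are hypothetical, and memory limits are not modeled.

```python
def actors_that_fit(
    total_cpus: int, cpus_per_actor: int, min_actors: int, max_actors: int
) -> int:
    """Number of actors, capped at max_actors, that total_cpus can host.
    Returns 0 when even min_actors do not fit."""
    fit = min(max_actors, total_cpus // cpus_per_actor)
    return fit if fit >= min_actors else 0


TOTAL_CPUS = 64  # hypothetical cluster size, replace with your own

# Default values from scripts/run_distributed_indexing.sh, as listed above.
preprocess = actors_that_fit(TOTAL_CPUS, cpus_per_actor=4, min_actors=8, max_actors=20)
embed = actors_that_fit(TOTAL_CPUS, cpus_per_actor=10, min_actors=4, max_actors=8)

print("preprocessing actors:", preprocess)
print("embedding actors:", embed)
```

If the result is 0 for a stage, lowering that stage's CPUS_PER_ACTOR or MIN_ACTORS value is a reasonable first adjustment to try.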

Note: if you plan to run this use case with a small subset using the --toy_example option, the retrieval recall may be very low or even zero because the subset may not include any images that contain answers to the queries in the dev set of Dureader-vis. Improving the toy example is future work for our later releases. To run the indexing toy example, just uncomment the --toy_example flag in the last line of the scripts/run_distributed_indexing.sh.

You will see print-outs on your head-node terminal as the indexing process progresses. When the indexing process is finished, you will see the embedding time and save time being printed out on the terminal.

Our reference docker-compose.yml is set up to work with the setup described in the Get Started section. If you want to set up your directories in a different way, you can export the environment variables listed in the table below before you run the docker compose commands for the indexing pipeline.

| Environment Variable Name | Default Value | Description |
|---|---|---|
| HEAD_IP | localhost | IP Address of the Head Node |
| DATASET | $PWD/../../dataset | Path to Dureader-vis images |
| SAVEPATH | $PWD/../../output | Path to index files, fine-tuned DPR models |

Run the elasticsearch and postgresql database containers using Docker compose as follows:

# add permission for esdb

mkdir -p $LOCAL_DIR/esdb && chmod -R 777 $LOCAL_DIR/esdb

# if not in the docker folder,

# go inside the docker folder of the use case repo

cd $NFS_DIR/document-automation/docker

docker compose up postgresql -d

docker compose up elasticsearch -d

Check that both the postgresql and elasticsearch containers are started with the command below:

docker ps

If you do not see both containers, refer to the Troubleshooting section.

Below is a diagram showing the ports and directories connected to the two database containers.

%%{init: {'theme': 'dark'}}%%

flowchart RL

VESDB{{"${ESDB"}} x-. "-$LOCAL_DIR}/esdb" .-x elasticsearch

VDB{{"/${DB"}} x-. "-$LOCAL_DIR}/db" .-x postgresql[(postgresql)]

VPWDpsqlinitsql{{"/${PWD}/psql_init.sql"}} x-. /docker-entrypoint-initdb.d/psql_init.sql .-x postgresql

P0((9200)) -.-> elasticsearch

P1((5432)) -.-> postgresql

classDef volumes fill:#0f544e,stroke:#23968b

class VESDB,VDB,VPWDpsqlinitsql volumes

classDef ports fill:#5a5757,stroke:#b6c2ff

class P0,P1 ports

cd $NFS_DIR/document-automation/docker

docker compose run indexing

You can evaluate the performance (Top-K recall and MRR) of 3 different retrieval methods:

- BM25 only

- DPR only

- BM25 + DPR ensemble

To run each of the three retrieval methods, specify the following variable in the scripts/run_retrieval_eval.sh script.

RETRIEVAL_METHOD="ensemble" #options: bm25, dpr, ensemble (default)

To evaluate the retrieval performance, run the following command:

cd $NFS_DIR/document-automation/docker

docker compose run performance-retrieval

If you did not get the results listed in the table above, please refer to the Troubleshooting section.

Use the following command to stop and remove all the services at any time.

cd $NFS_DIR/document-automation/docker

docker compose down

The Run Single-Node Preprocessing Pipeline and Run Single-Node DPR Fine-Tuning Pipeline sections need to be completed before running this section.

- Stop and remove all running docker containers from previous runs.

# in the root directory of this use case repo on NFS

# if you were inside docker folder, come back one level

cd $NFS_DIR/document-automation

bash scripts/stop_and_cleanup_containers.sh

- Start doc-automation-indexing, elasticsearch and postgresql containers on the head node.

Set the following variables in scripts/startup_ray_head_and_db.sh. Note: num_threads_db + num_threads_indexing <= total number of threads of the head node.

num_threads_db=<num of threads to be used for databases>

num_threads_indexing=<num_threads to be used for indexing>

Then run the command:

# on head node

cd $NFS_DIR/document-automation

bash scripts/startup_ray_head_and_db.sh

Once you successfully run the command above, you will be taken inside the doc-automation-indexing container on the head node. Note: If you get a "mkdir permission denied" error from the Docker daemon when running the distributed indexing pipeline, the NFS policy does not allow mounting folders on NFS to Docker containers. Contact your IT department to get permission, or switch to an NFS that allows container volume mounting.

- Start doc-automation-indexing container on all the worker nodes for distributed indexing.

Set the following variable in scripts/startup_workers_.sh. Note: num_threads <= total number of threads of the worker node.

num_threads=<# of threads you want to use for indexing on worker node>

Then run the command below:

# on worker node

cd $NFS_DIR/document-automation

bash scripts/startup_workers_.sh

You can start the indexing pipeline with the commands below on the head node.

# inside the doc-automation-indexing container on the head node

cd /home/user/application

bash scripts/run_distributed_indexing.sh

You will see print-outs on your head-node terminal as the indexing process progresses. When the indexing process is finished you will see the embedding time and save time being printed out on the terminal.

You can evaluate the performance (Top-K recall and MRR) of 3 different retrieval methods:

- BM25 only

- DPR only

- BM25 + DPR ensemble

To run each retrieval method, specify the following variable in the scripts/run_retrieval_eval.sh script.

RETRIEVAL_METHOD="ensemble" #options: bm25, dpr, ensemble (default)

Then run the command below inside the doc-automation-indexing container on the head node to evaluate performance of the retrieval methods.

cd /home/user/application

bash scripts/run_retrieval_eval.sh

If you get a connection error at the end of retrieval evaluation when using the multi-node distributed indexing pipeline, you can ignore it. This error does not have any impact on the indexing pipeline or the evaluation results.

If you did not get the results listed in the table above, please refer to the Troubleshooting section.

On head node, exit from the indexing container by typing exit. And then on both the head node and all worker nodes, run the command below.

cd $NFS_DIR/document-automation

bash scripts/stop_and_cleanup_containers.sh

- What if I want to run multiple indexing experiments? Do I need to stop/remove/restart containers?

  - No. You can specify a different INDEXNAME and a different INDEXFILE for each experiment in scripts/run_distributed_indexing.sh.

- I got a lot of chmod error messages when I restarted the containers. Do I need to worry?

  - No. These errors will not cause problems for the indexing pipeline.

Follow the instructions below to quickly launch the two pipelines with a single docker compose command. After the process finishes, you can run the deployment pipeline. Please make sure you have started the database containers according to this section prior to executing the following command.

cd $NFS_DIR/document-automation/docker

docker compose run indexing-performance-retrieval &

This will run the pipelines in the background. To view the status:

fg

For MLOps engineers, the diagram below shows the interactions between different containers when running the single-node preprocessing, fine-tuning and indexing pipelines.

%%{init: {'theme': 'dark'}}%%

flowchart RL

VDATASET{{"/${DATASET"}} x-. "-$PWD/../../dureader_vis_docvqa}" .-x preprocess[pre-process]

VSAVEPATH{{"/${SAVEPATH"}} x-. "-$PWD/../../output}/processed_data" .-x preprocess

VPWDdocumentautomationrefkit{{"/${PWD}/../document_automation_ref_kit"}} x-. /home/user/application .-x preprocess

VPWDdocumentautomationrefkit x-. /home/user/application .-x finetuning[fine-tuning]

VSAVEPATH x-. "-$PWD/../../output}" .-x finetuning

VSAVEPATH x-. "-$PWD/../../output}/dpr_models" .-x finetuning

VPWDdocumentautomationrefkit x-. /home/user/application .-x indexing

VSAVEPATH x-. "-$PWD/../../output}/index_files" .-x indexing

VDATASET x-. "-$PWD/../../dataset}" .-x indexing

VSAVEPATH x-. "-$PWD/../../output}" .-x indexing

VPWDdocumentautomationrefkit x-. /home/user/application .-x performanceretrieval[performance-retrieval]

VSAVEPATH x-. "-$PWD/../../output}" .-x performanceretrieval

VDATASET x-. "-$PWD/../../dureader_vis_docvqa}" .-x performanceretrieval

finetuning --> preprocess

indexing --> finetuning

performanceretrieval --> indexing

P0((9200)) -.-> indexing

P1((8265)) -.-> indexing

P2((5432)) -.-> indexing

classDef volumes fill:#0f544e,stroke:#23968b

class VDATASET,VSAVEPATH,VPWDdocumentautomationrefkit,VPWDdocumentautomationrefkit,VSAVEPATH,VSAVEPATH,VPWDdocumentautomationrefkit,VSAVEPATH,VDATASET,VSAVEPATH,VPWDdocumentautomationrefkit,VSAVEPATH,VDATASET volumes

classDef ports fill:#5a5757,stroke:#b6c2ff

class P0,P1,P2 ports

Before you run this pipeline, please make sure you have completed all the steps in the Get Started, Run DPR Fine Tuning Pipeline and Run Indexing Pipeline sections.

The deployment pipeline is a no-code, config-driven, containerized pipeline. There are 4 config files:

- docker-compose yaml to launch the pipeline

- env config file that specifies environment variables for docker-compose

- pipeline config yaml that specifies the haystack components and properties

- UI config yaml

Please refer to this section on how to customize the deployment pipeline through config files.

In this reference use case, we have implemented deployment pipelines for the three retrieval methods: bm25, dpr, ensemble. Please refer to this section for more technical details. We have prepared 3 sets of configs, one for each retrieval method. Please note that each retrieval method needs to be deployed independently from start to end.

To deploy bm25 retrieval, use the following commands:

# make sure you are in the docker folder of this use case repo

cd $NFS_DIR/document-automation/docker

docker compose --env-file env.bm25 -f docker-compose-bm25.yml up

To deploy dpr retrieval, use the following commands:

# make sure you are in the docker folder of this use case repo

# if not, go into the docker folder

cd $NFS_DIR/document-automation/docker

docker compose --env-file env.dpr -f docker-compose-dpr.yml up

To deploy ensemble retrieval, use the following commands:

# make sure you are in the docker folder of this use case repo

# if not, go into the docker folder

cd $NFS_DIR/document-automation/docker

docker compose --env-file env.ensemble -f docker-compose-ensemble.yml up

Once any of the deployments is launched successfully, you can open up a browser (Chrome recommended) and type in the following address:

<head node ip>:8501

You should see a webpage that looks like the one below.

If you want to deploy the pipeline on a different machine than the one you used for indexing, you need to do the following:

- Set up the directories, the repo of this use case and Docker images on the new machine as described in the Get Started section.

- If the new machine does not have access to NFS or if you saved outputs in a local disk, then you need to copy the DPR models and FAISS index files to the new machine.

- Copy the Elasticsearch and PostgreSQL database files to the new machine.

- Modify the faiss-indexfile.json: change the IP address to the IP address of the new machine.

- Double check and modify folder paths in the 4 config files if needed.

- Install Helm

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 && \

chmod 700 get_helm.sh && \

./get_helm.sh

- Install Argo Workflows and Argo CLI

- Configure your Artifact Repository

- Ensure that your dataset and config files are present in your chosen artifact repository.

Ensure that you have reviewed the Hardware Requirements and each of your nodes has sufficient memory for the workflow. Follow Helm Chart README to properly configure the values.yaml file.

export NAMESPACE=argo

helm install --namespace ${NAMESPACE} --set proxy=${http_proxy} document-automation ./chart

argo submit --from wftmpl/document-automation --namespace=${NAMESPACE}

To view your workflow progress:

argo logs @latest -f

Once all the steps are successful, open a port-forward so you can access the UI:

kubectl -n argo port-forward pod/`kubectl -n argo get pods|grep ui-phase|awk '{print $1}'` 8501:8501 --address '0.0.0.0'

You can open up a browser (Chrome recommended) and type in the following address:

<k8s node ip>:8501

You have run through the three pipelines of this reference use case that builds and deploys an AI-enhanced multi-modal retrieval solution for Dureader-vis images, with which you can use natural language queries to retrieve images. The three pipelines are 1) DPR fine tuning pipeline, 2) image-to-document indexing pipeline, 3) deployment pipeline.

Next you can customize the reference solution to your own use case by following the customization sections below.

Haystack APIs are used for fine tuning DPR models, which require a specific format for training data. The data processing script in our reference fine-tuning pipeline is tailored to the data format of the Dureader-vis dataset. Please refer to this haystack tutorial on how to prepare custom datasets for DPR fine tuning with haystack.

If you just want to try different passage lengths/overlaps with the Dureader-vis dataset, you can change the following params in scripts/run_process_dataset.sh:

MAX_LEN_PASSAGE=500

OVERLAP=10

MINCHARS=5
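To build intuition for how these three parameters interact, here is a minimal sketch of overlapping passage splitting. It assumes that MAX_LEN_PASSAGE is the window length in characters, OVERLAP is the number of characters shared by consecutive windows, and MINCHARS is the minimum length below which a passage is dropped. The actual logic in scripts/run_process_dataset.sh may differ. The function name split_passages is hypothetical, and the small values are chosen only to keep the output readable.

```shell
#!/bin/sh
# Illustrative sketch only: split_passages is a hypothetical helper, not part of
# the reference scripts. It assumes character-based windows; whether awk counts
# characters or bytes for non-ASCII text depends on the awk implementation.
split_passages() {
  # $1 = max passage length, $2 = overlap, $3 = minimum passage length
  awk -v max_len="$1" -v overlap="$2" -v min_chars="$3" '
    BEGIN {
      step = max_len - overlap
      if (step <= 0) {
        print "overlap must be smaller than max_len" > "/dev/stderr"
        exit 1
      }
    }
    {
      # Slide a window of max_len characters forward by step each time.
      for (start = 1; start <= length($0); start += step) {
        chunk = substr($0, start, max_len)
        # Drop trailing fragments that are shorter than min_chars.
        if (length(chunk) >= min_chars) print chunk
      }
    }
  '
}

# Small values for readability; the script defaults are 500, 10 and 5.
printf '%s\n' "abcdefghijklmnopqrstuvwxyz" | split_passages 10 2 5
```

With these example values the sketch prints three passages (abcdefghij, ijklmnopqr, qrstuvwxyz). Consecutive passages share two characters, and the final two-character fragment is dropped because it is shorter than the minimum.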

Once you have processed your data according to the format required by haystack APIs, you can use the fine-tuning script implemented in this reference use case. You can customize the training hyperparameters listed here. To save your fine-tuned model under a custom name, set the MODEL_NAME variable with export MODEL_NAME=<your model name>.

You can bring your own images: just put your raw images in the dataset folder.

You can use command line arguments in the scripts/run_distributed_indexing.sh to customize the indexing pipeline with the various functionalities already implemented in our use case. A detailed description of the command line arguments is here. The following components can be customized through command line arguments:

- Image preprocessing methods

- OCR engine

- Post processing methods for OCR outputs

- Certain database settings

- DPR model for embedding document passages. You can either:

  - use any pretrained model on the Hugging Face model hub that supports the AutoModel.from_pretrained method, or

  - use your own DPR model fine-tuned on your own data. Refer to the Customize fine tuning pipeline section on how to leverage our pipeline to fine tune your own model.

Advanced users can write their own methods and incorporate more customized functionality into src/gen-sods-doc-image-ray.py for more flexibility.

This pipeline can be customized through config files. There are 4 config files:

- docker-compose yaml to launch the pipeline

- env config file that specifies environment variables for docker-compose

- pipeline config yaml that specifies the haystack components and properties

- UI config yaml

Please refer to this section to learn more about how to customize the config files.

Once you have completed customizing the config files, you can edit the following 2 variables in the scripts/launch_query_retrieval_ui_pipeline.sh script:

config=docker/<your-env-config>

yaml_file=docker/<your-docker-compose.yml>

Then run the command below to launch the customized pipeline.

bash scripts/launch_query_retrieval_ui_pipeline.sh
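Before launching, it can help to confirm that the two files referenced by config and yaml_file actually exist. The sketch below is one way to do that. Note that check_files is a hypothetical helper and the two file names are placeholders, not files shipped with this use case.

```shell
#!/bin/sh
# Hypothetical helper: print every missing path to stderr and return
# non-zero if at least one of the given files does not exist.
check_files() {
  missing=0
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "missing: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Placeholder names: use the values you assigned to config and yaml_file.
config=docker/my-env-config
yaml_file=docker/my-docker-compose.yml

if check_files "$config" "$yaml_file"; then
  echo "config files found; run: bash scripts/launch_query_retrieval_ui_pipeline.sh"
else
  echo "fix the paths above before launching" >&2
fi
```

Run it from the repository root so that the relative docker/ paths resolve the same way they do for the launch script.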

Currently, generating the document index file in the indexing pipeline may take days, depending on the server type and the number of nodes you are using.

On bare metal, we provide a multi-node distributed indexing pipeline with Ray to accelerate the indexing process. In a Kubernetes environment, only single-node indexing is supported for now.

KubeRay is a powerful, open-source Kubernetes operator that simplifies the deployment and management of Ray applications on Kubernetes.

KubeRay will enhance the capabilities of Indexing Pipeline in the k8s environment.

To learn about KubeRay, see these resources:

You can run your own script or run a standalone service using Docker compose with the dev service defined in our docker-compose.yml as follows:

cd $NFS_DIR/document-automation/docker

docker compose run dev

| Environment Variable Name | Default Value | Description |
|---|---|---|
| IMAGE_DATASET | $PWD/../../dataset | Path to Dureader Image Dataset |
| VQA_DATASET | $PWD/../../dureader_vis_docvqa | Path to Dureader VQA Dataset |
| SAVEPATH | $PWD/../../output | Path to index files and fine-tuned models |
| WORKFLOW | bash scripts/run_dpr_training.sh | Name of the workflow |
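As a sketch of how these variables are typically used, you can export any of them before starting the dev service to override its default. The paths and the show_effective helper below are hypothetical, and the fallback expressions simply mirror the defaults listed in the table. Check docker-compose.yml for the authoritative definitions.

```shell
#!/bin/sh
# Hypothetical locations: replace them with your own directories.
export IMAGE_DATASET=/data/my_images
export SAVEPATH=/data/my_output

# Print the value each variable would take, falling back to the default
# from the table when the variable is unset or empty.
show_effective() {
  echo "IMAGE_DATASET=${IMAGE_DATASET:-$PWD/../../dataset}"
  echo "VQA_DATASET=${VQA_DATASET:-$PWD/../../dureader_vis_docvqa}"
  echo "SAVEPATH=${SAVEPATH:-$PWD/../../output}"
  echo "WORKFLOW=${WORKFLOW:-bash scripts/run_dpr_training.sh}"
}
show_effective

# Then start the service from the docker directory:
#   docker compose run dev
```

Variables you do not export keep the defaults from the table, so you only need to set the ones that differ in your environment.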

Make sure to initialize databases by following this section if your script requires database interactions.

Instead of using Docker compose to run the pipelines, you can use docker run commands to go inside Docker containers and run the pipelines interactively. We provide an example below to show how this can be done.

If your environment requires a proxy to access the internet, export your development system's proxy settings to the docker environment:

export DOCKER_RUN_ENVS="-e ftp_proxy=${ftp_proxy} \
-e FTP_PROXY=${FTP_PROXY} -e http_proxy=${http_proxy} \
-e HTTP_PROXY=${HTTP_PROXY} -e https_proxy=${https_proxy} \
-e HTTPS_PROXY=${HTTPS_PROXY} -e no_proxy=${no_proxy} \
-e NO_PROXY=${NO_PROXY} -e socks_proxy=${socks_proxy} \
-e SOCKS_PROXY=${SOCKS_PROXY}"

For example, you can run the pre-process workflow with the docker run command, as shown:

export DATASET=$PWD/../../dureader_vis_docvqa

export SAVEPATH=$PWD/../../output/processed_data

docker run -a stdout ${DOCKER_RUN_ENVS} \
-v $PWD/../../document-automation:/home/user/application \
-v ${DATASET}:/home/user/docvqa \
-v ${SAVEPATH}:/home/user/output/processed_data \
--privileged --network host --init -it --rm --pull always \
-w /home/user/application \
intel/ai-workflows:doc-automation-fine-tuning \
/bin/bash

This takes you inside the container, where you can run the command below for pre-processing:

bash scripts/run_process_dataset.sh

Note: unset the environment variables after finishing if you want to try another execution method.
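A minimal sketch of that cleanup, assuming you exported only the variables shown in this section. Extend the list with any others you set, for example IMAGE_DATASET, VQA_DATASET or WORKFLOW.

```shell
#!/bin/sh
# Remove the variables exported for the docker run example so that they
# do not leak into a later Docker Compose or Argo run in the same shell.
unset DOCKER_RUN_ENVS DATASET SAVEPATH

# Verify: collect any of these variables that are still defined.
leftover=$(env | grep -E '^(DOCKER_RUN_ENVS|DATASET|SAVEPATH)=' || true)
if [ -z "$leftover" ]; then
  echo "environment is clean"
else
  echo "still set: $leftover"
fi
```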

To read about other use cases and workflows examples, see these resources:

- Intel's Open Domain Question Answering workflow

- Developer Catalog

- Intel® AI Analytics Toolkit (AI Kit)

- If you get a "permissions denied" error when writing to the output folder in the preprocessing or fine-tuning pipeline, you most likely lack write permissions for that folder on NFS. Use chmod to change the permissions of the output folder. If you cannot change the permissions, set up the work directory on a local disk where you have write permissions.

- If you get errors about not being able to write to databases, or if either the postgresql or elasticsearch container fails to start with docker-compose commands, the likely cause is that the postgresql and elasticsearch containers run as different users, which have different or inadequate permissions to write to the database directory that you set up on your machine. Change the write permissions of $LOCAL_DIR with chmod so that both the postgresql and elasticsearch containers can write to it.

- If you get a "mkdir permission denied" error from the docker daemon when running the distributed indexing pipeline, the NFS policy does not allow mounting NFS folders into docker containers. Contact your IT department to get permission, or switch to an NFS that allows container volume mounting.

- If you get an out of memory (OOM) error from Ray actors, or the Ray process is stuck for a very long time, try reducing the number of actors and increasing the number of CPUs per actor. As a rule of thumb, max_num_ray_actors * num_cpus_per_actor should not exceed the total number of threads of your CPU. For example, if your system has 48 CPU cores with hyperthreading turned on, you have 48 * 2 = 96 threads in total, so max_num_ray_actors * num_cpus_per_actor should not exceed 96.

- If you see different retrieval performance: first, what we reported here is retrieval performance on the entire Dureader-vis dataset, not a subset, so make sure you have indexed the entire Dureader-vis dataset. Second, due to the stochastic nature of the DPR fine tuning process, you might have a slightly different DPR model than ours, so you will likely see a different recall and MRR with your DPR model.

- If you get a connection error at the end of retrieval evaluation when using the multi-node distributed indexing pipeline, you can ignore it. This error does not have any impact on the indexing pipeline or the evaluation results.

- If you get errors regarding connection to the Hugging Face model hub or to PaddleOCR models, check your proxy settings and set the appropriate proxies as follows:

export http_proxy=<your http proxy>

export https_proxy=<your https proxy>

- If you find that CPU utilization of the Ray cluster is low during the indexing pipeline, tune the parameters RAY_PREPROCESS_CPUS_PER_ACTOR and RAY_EMBED_CPUS_PER_ACTOR in scripts/run_distributed_indexing.sh. If your CPUs are powerful, reduce the CPUS_PER_ACTOR values and increase the number of RAY_ACTORS.
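The rule of thumb for Ray actors in the list above can be checked with a few lines of shell before launching the indexing pipeline. This is a sketch: ray_budget_ok is a hypothetical helper, and the actor counts are example values rather than recommended settings.

```shell
#!/bin/sh
# Hypothetical helper: succeed when actors * cpus_per_actor fits within
# the number of hardware threads.
# $1 = max_num_ray_actors, $2 = num_cpus_per_actor, $3 = total threads
ray_budget_ok() {
  [ $(( $1 * $2 )) -le "$3" ]
}

# Example from the text: 48 cores with hyperthreading give 96 threads.
if ray_budget_ok 24 4 96; then
  echo "24 actors x 4 CPUs fits in 96 threads"
fi
if ! ray_budget_ok 32 4 96; then
  echo "32 actors x 4 CPUs exceeds 96 threads"
fi

# On your own machine, take the thread count from the system instead.
# nproc reports the logical CPUs available to the current process;
# getconf is used as a fallback where nproc is not installed.
threads=$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN)
echo "threads available: $threads"
```

When a combination exceeds the budget, the text above suggests lowering the number of actors first and then adjusting the CPUs per actor.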

The Document Automation team tracks both bugs and enhancement requests using GitHub issues.

These materials are intended to assist designers who are developing applications within their scope. These materials do not purport to provide all of the requirements for a commercial, productions, or other solution. Any commercial or productions use of solution based on or derived from these materials is beyond their scope. You are solely responsible for the engineering, testing, safety, qualification, validation, and applicable approvals for any solution you build or use based on these materials. Intel bears no responsibility or liability for such use. You are solely responsible for using your independent analysis, evaluation and judgment in designing your applications and have full and exclusive responsibility to assure the safety of your applications and compliance of your applications with all applicable regulations, laws and other applicable requirements. You further understand that you are solely responsible for obtaining any licenses to third-party intellectual property rights that may be necessary for your applications or the use of these materials.

To the extent that any public or non-Intel datasets or models are referenced by or accessed using these materials those datasets or models are provided by the third party indicated as the content source. Intel does not create the content and does not warrant its accuracy or quality. By accessing the public content, or using materials trained on or with such content, you agree to the terms associated with that content and that your use complies with the applicable license.

Intel expressly disclaims the accuracy, adequacy, or completeness of any such public content, and is not liable for any errors, omissions, or defects in the content, or for any reliance on the content. Intel is not liable for any liability or damages relating to your use of public content.

Intel’s provision of these resources does not expand or otherwise alter Intel’s applicable published warranties or warranty disclaimers for Intel products or solutions, and no additional obligations, indemnifications, or liabilities arise from Intel providing such resources. Intel reserves the right, without notice, to make corrections, enhancements, improvements, and other changes to its materials.

Intel technologies may require enabled hardware, software or service activation. Performance varies by use, configuration and other factors. No product or component can be absolutely secure.

Intel is committed to respecting human rights and avoiding complicity in human rights abuses. See Intel's Global Human Rights Principles. Intel's content is intended only to be used in applications that do not cause or contribute to a violation of an internationally recognized human right.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.


Trademarks.

*FFmpeg is an open source project licensed under LGPL and GPL. See https://www.ffmpeg.org/legal.html. You are solely responsible for determining if your use of FFmpeg requires any additional licenses. Intel is not responsible for obtaining any such licenses, nor liable for any licensing fees due, in connection with your use of FFmpeg.