🚨 This repository contains information about our work "Diffusion Models for Monocular Depth Estimation: Overcoming Challenging Conditions", ECCV 2024

by Fabio Tosi, Pierluigi Zama Ramirez, and Matteo Poggi

University of Bologna

Note: 🚧 This repository is under active development. We are still adding and refining features and documentation, and we apologize for any incomplete or missing elements in the meantime.

- Introduction

- Method

- Dataset

- Qualitative Results

- Quantitative Results

- Models

- Potential for Enhancing State-of-the-Art Models

- Citation

- Contacts

- Acknowledgements

We present a novel approach designed to address the complexities posed by challenging, out-of-distribution data in the single-image depth estimation task. Our method leverages cutting-edge text-to-image diffusion models with depth-aware control to generate new, user-defined scenes with a comprehensive set of challenges and associated depth information.

Key Contributions:

- Pioneering use of text-to-image diffusion models with depth-aware control to address single-image depth estimation challenges

- A knowledge distillation approach that enhances the robustness of existing monocular depth estimation models in challenging out-of-distribution settings

- The first unified framework that simultaneously tackles multiple challenges (e.g., adverse weather and non-Lambertian surfaces)

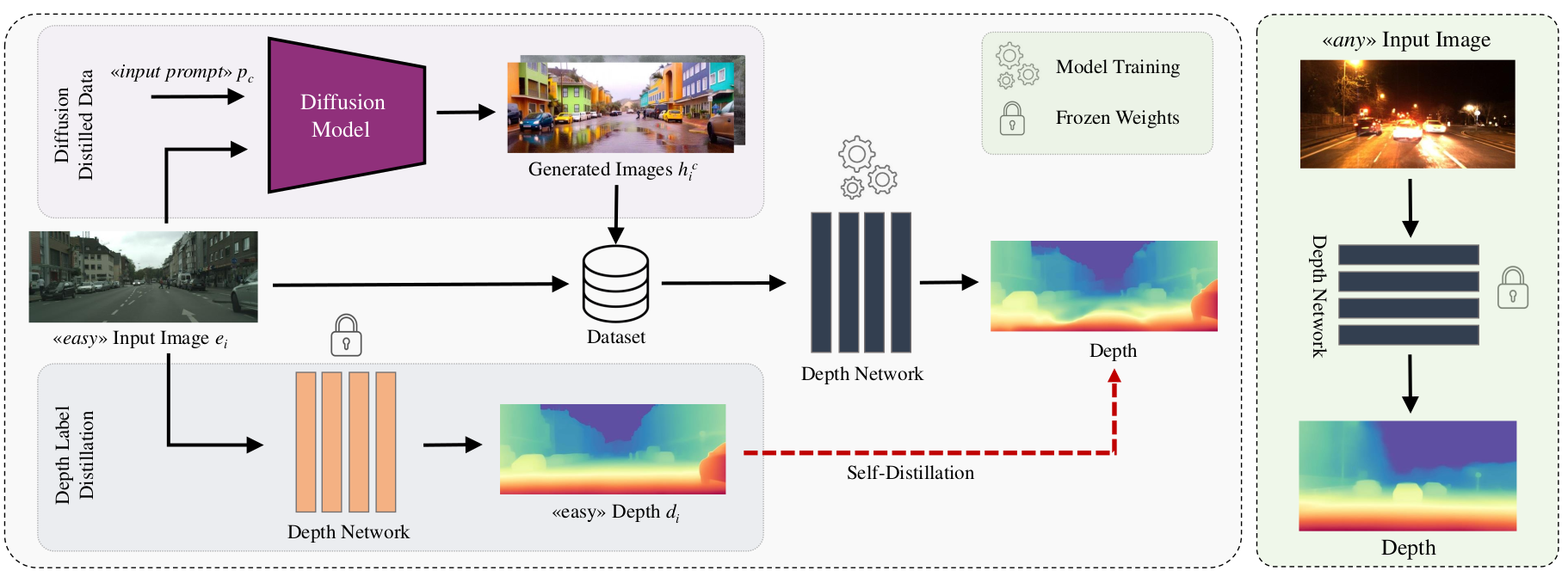

Our approach addresses the challenge of monocular depth estimation in complex, out-of-distribution scenarios by leveraging the power of diffusion models. Here's how our method works:

- Scene Generation with Diffusion Models: We start with images that are easy for depth estimation (e.g., clear daylight scenes). Using state-of-the-art text-to-image diffusion models (e.g., ControlNet, T2I-Adapter), we transform these into challenging scenarios (like rainy nights or scenes with reflective surfaces). The novelty here is that we preserve the underlying 3D structure while dramatically altering the visual appearance. This allows us to generate a vast array of challenging scenes with known depth information.

- Depth Estimation on Simple Scenes: We use a pre-trained monocular depth network, such as DPT, ZoeDepth, or Depth Anything, to estimate depth for the original, unchallenging scenes. This provides us with reliable depth estimates for the base scenarios.

- Self-Distillation Protocol: We then fine-tune the depth network using a self-distillation protocol. This process involves:

- Using the generated challenging images as input

- Employing the depth estimates from the simple scenes as pseudo ground truth

- Applying a scale-and-shift-invariant loss to account for the global changes introduced by the diffusion process

This approach allows the depth network to learn robust depth estimation across a wide range of challenging conditions.
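To make the last step more concrete, here is a minimal, dependency-free sketch of one common form of scale-and-shift-invariant loss: the prediction is first aligned to the pseudo ground truth with a least-squares scale and shift, and the mean absolute error is then computed. The function names (`align_scale_shift`, `ssi_loss`) and the flat lists of depth values are illustrative assumptions; the exact formulation used for fine-tuning in our work may differ.

```python
from typing import Sequence, Tuple


def align_scale_shift(pred: Sequence[float], target: Sequence[float]) -> Tuple[float, float]:
    """Return (scale, shift) minimizing sum((scale * pred + shift - target) ** 2)."""
    n = len(pred)
    if n == 0 or n != len(target):
        raise ValueError("pred and target must be non-empty and equally sized")
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    # A constant prediction carries no scale information; fall back to scale = 0.
    scale = cov / var_p if var_p > 1e-12 else 0.0
    shift = mean_t - scale * mean_p
    return scale, shift


def ssi_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error after least-squares scale-and-shift alignment."""
    scale, shift = align_scale_shift(pred, target)
    return sum(abs(scale * p + shift - t) for p, t in zip(pred, target)) / len(pred)


# Hypothetical values: the prediction has the same structure as the pseudo
# ground truth but a different global scale and shift, so the loss vanishes.
pseudo_gt = [1.0, 2.0, 3.0, 4.0]
rescaled = [2.0 * d + 5.0 for d in pseudo_gt]
distorted = [1.0, 2.0, 3.0, 10.0]

print(ssi_loss(rescaled, pseudo_gt))       # approximately 0
print(ssi_loss(distorted, pseudo_gt) > 0)  # structural errors are still penalized
```

Because the alignment absorbs any global scale and offset, only differences in relative depth structure contribute to the loss.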

Key advantages of our method:

- Flexibility: By using text prompts to guide the diffusion model, we can generate an almost unlimited variety of challenging scenarios.

- Scalability: Once set up, our pipeline can generate large amounts of training data with minimal human intervention.

- Domain Generalization: By exposing the network to a wide range of challenging conditions during training, we improve its ability to generalize to unseen challenging real-world scenarios.
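The data-generation loop behind these advantages can be sketched roughly as follows. This is only an illustration under stated assumptions: `TrainingSample`, `build_samples`, and the two stand-in callables are hypothetical names, the nested-list image type is a placeholder, and the generator is assumed to be conditioned on a depth map and a text prompt. No real depth network or diffusion model is called.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List

Image = List[List[float]]     # placeholder image type, for illustration only
DepthMap = List[List[float]]  # placeholder depth-map type


@dataclass
class TrainingSample:
    challenging_image: Image  # input to the depth network during fine-tuning
    pseudo_depth: DepthMap    # depth estimated on the original easy scene
    prompt: str               # text prompt that produced the challenging image


def build_samples(
    easy_images: Iterable[Image],
    prompts: List[str],
    estimate_depth: Callable[[Image], DepthMap],
    generate_scene: Callable[[DepthMap, str], Image],
) -> Iterator[TrainingSample]:
    """Pair every generated challenging image with the depth of its easy source."""
    for image in easy_images:
        depth = estimate_depth(image)  # computed once per easy image
        for prompt in prompts:
            yield TrainingSample(generate_scene(depth, prompt), depth, prompt)


# Stand-in callables so the sketch runs without any model.
def fake_depth(image: Image) -> DepthMap:
    return [[1.0 for _ in row] for row in image]


def fake_generator(depth: DepthMap, prompt: str) -> Image:
    return [[0.0 for _ in row] for row in depth]


easy = [[[0.2, 0.4]], [[0.6, 0.8]]]  # two tiny hypothetical "images"
samples = list(build_samples(easy, ["rainy night", "dense fog"], fake_depth, fake_generator))
print(len(samples))  # 4: two easy images times two prompts
```

Adding a new condition only requires adding a prompt to the list, which is where the flexibility and scalability come from.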

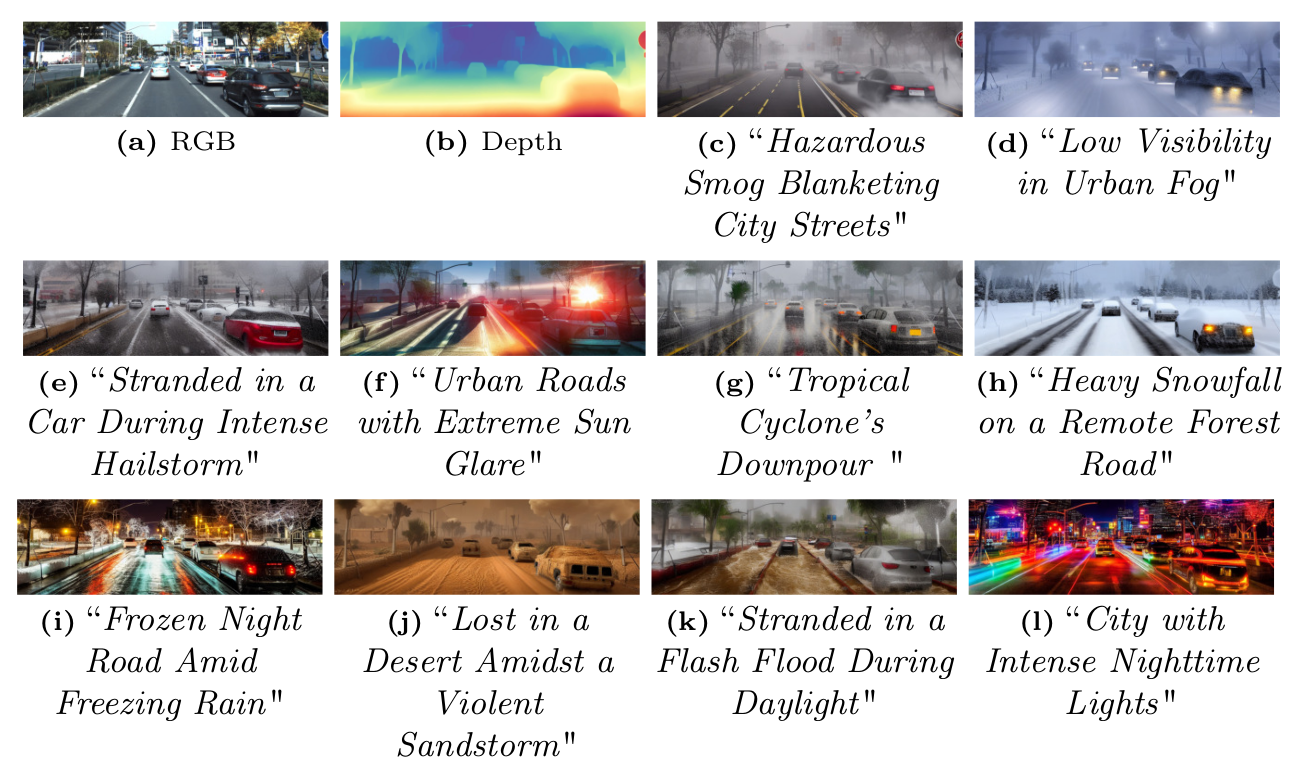

Our approach can generate datasets covering a variety of challenging conditions, producing diverse, realistic scenarios for training robust depth estimation models:

- Adverse weather (rain, snow, fog)

- Low-light conditions (night)

- Non-Lambertian surfaces (transparent and reflective objects)

- Other user-defined conditions
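As a rough illustration of how such conditions might be turned into text prompts, the sketch below combines a base scene description with one or more condition phrases. The phrases, the `build_prompts` helper, and the scene description are hypothetical and are not the prompts used to build our dataset.

```python
from itertools import combinations
from typing import Dict, List

# Hypothetical condition phrases, chosen only for illustration.
CONDITION_PHRASES: Dict[str, str] = {
    "rain": "in heavy rain",
    "snow": "during a snowstorm",
    "fog": "in dense fog",
    "night": "at night",
}


def build_prompts(scene: str, phrases: Dict[str, str], max_combined: int = 1) -> List[str]:
    """Build prompts that append up to `max_combined` condition phrases to a scene."""
    prompts = []
    for size in range(1, max_combined + 1):
        for names in combinations(phrases, size):
            suffix = ", ".join(phrases[name] for name in names)
            prompts.append(f"{scene}, {suffix}")
    return prompts


prompts = build_prompts("a street seen from a car", CONDITION_PHRASES, max_combined=2)
print(len(prompts))  # 10: four single conditions plus six pairs
print(prompts[0])    # a street seen from a car, in heavy rain
```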

This image demonstrates our ability to generate driving scenes with arbitrarily chosen challenging weather conditions.

This figure illustrates our process of transforming easy scenes into challenging ones:

- Left: Original "easy" images, typically clear and well-lit scenes.

- Center: Depth maps estimated from the easy scenes, which serve as a guide for maintaining 3D structure.

- Right: Generated "challenging" images, showcasing various difficult conditions while preserving the underlying depth structure.

🚧 The generated dataset will be made available soon, providing a valuable resource for training and evaluating depth estimation models under challenging conditions.

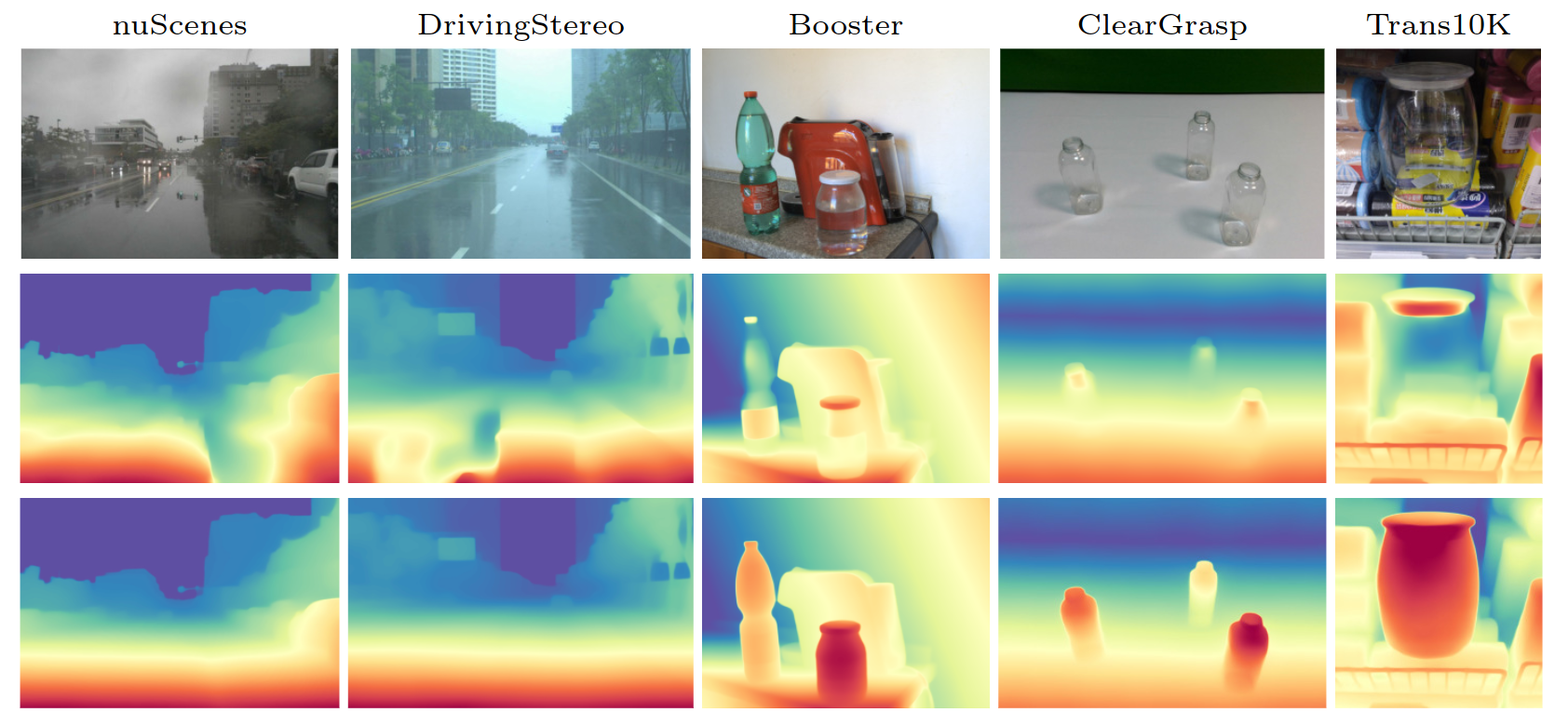

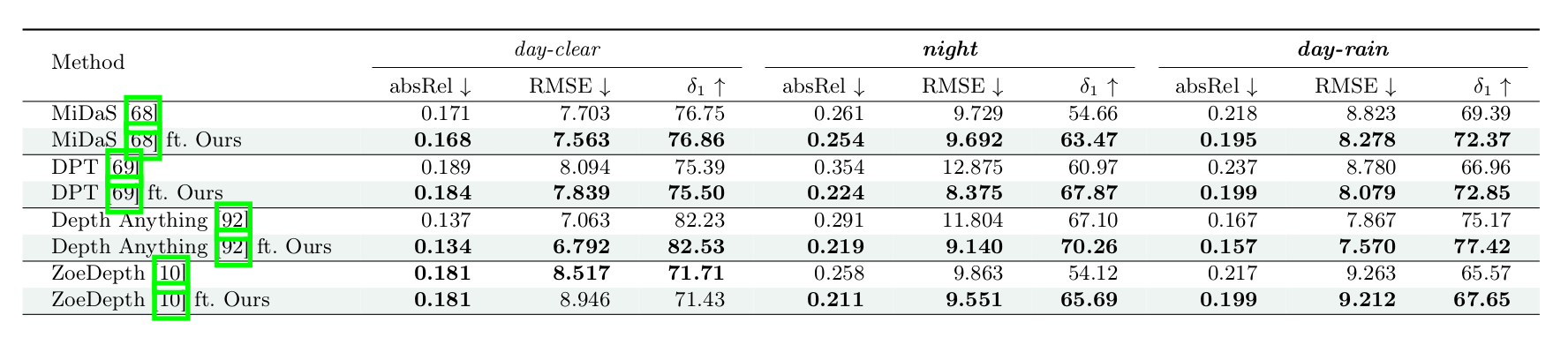

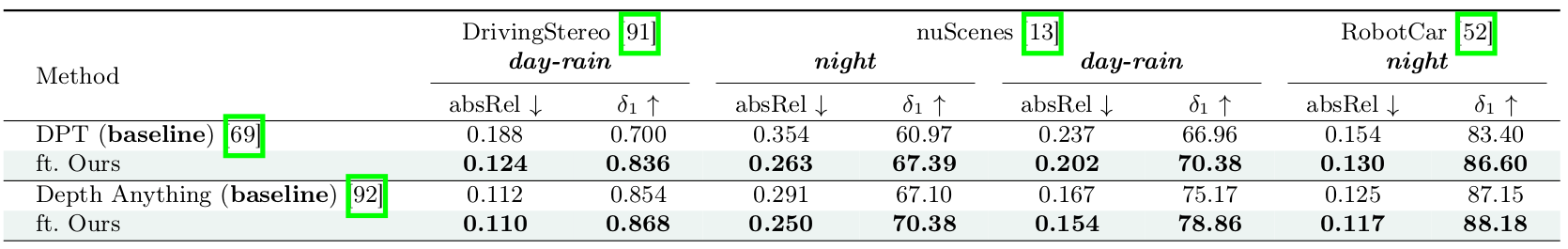

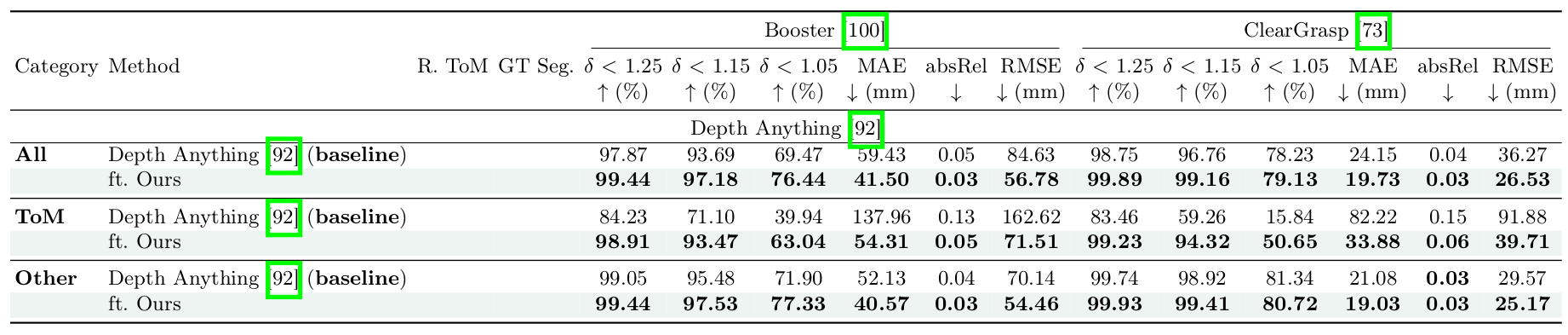

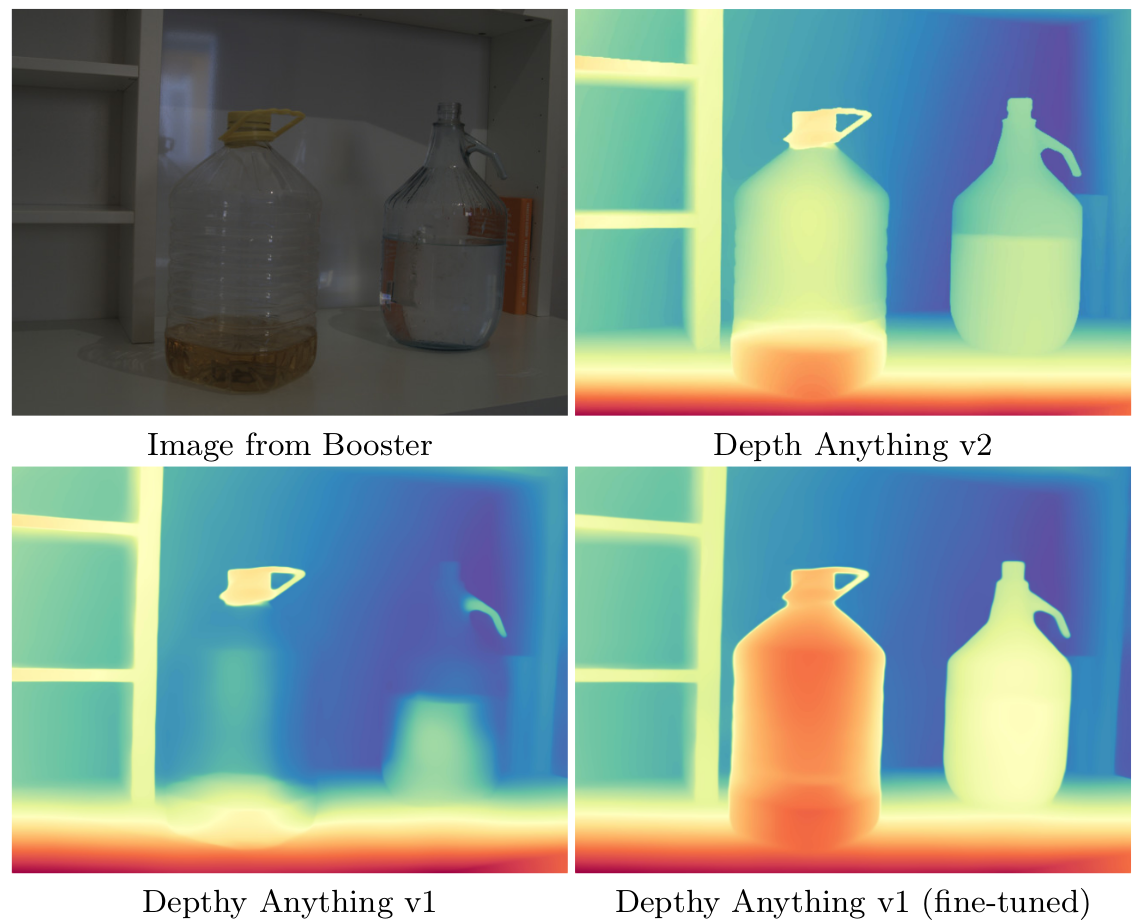

Our method significantly improves the performance of state-of-the-art monocular depth estimation networks. The following picture shows how our approach boosts an existing network, Depth Anything, across various challenging real-world datasets:

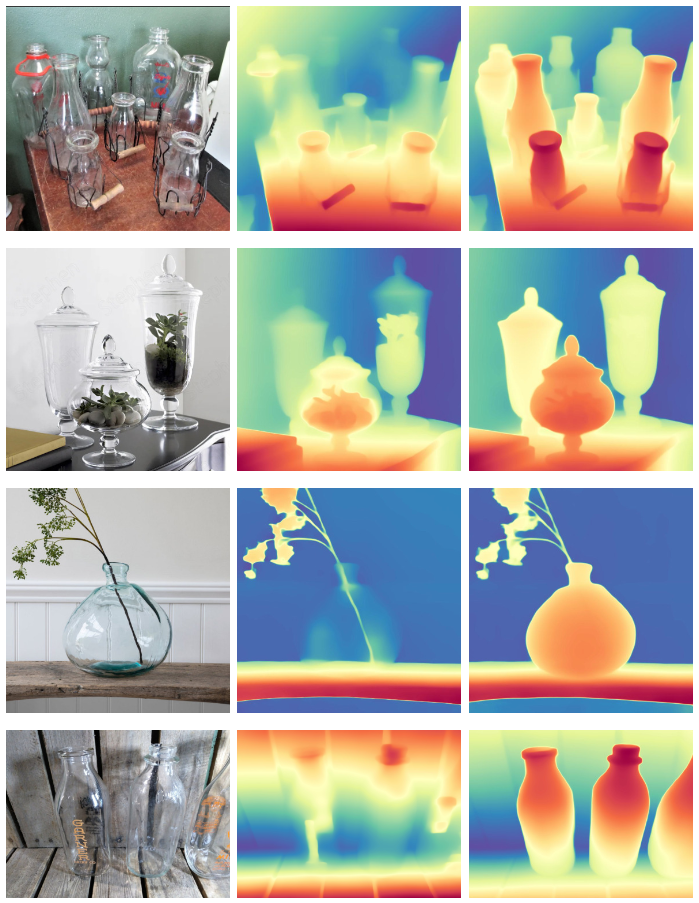

To further demonstrate the effectiveness of our approach, we took the Depth Anything model fine-tuned on our generated dataset and tested it on a diverse set of challenging web-sourced images featuring ToM (transparent or mirror) surfaces:

- Left: Original RGB images from various real-world scenarios.

- Center: Depth maps estimated by the original Depth Anything model.

- Right: Depth maps produced by our fine-tuned Depth Anything model.

Our approach improves performance across various scenarios and datasets:

- Trained using only "easy" condition images (KITTI, Mapillary, etc.)

COMING SOON

We are currently preparing our trained models for release. Please check back soon for updates.

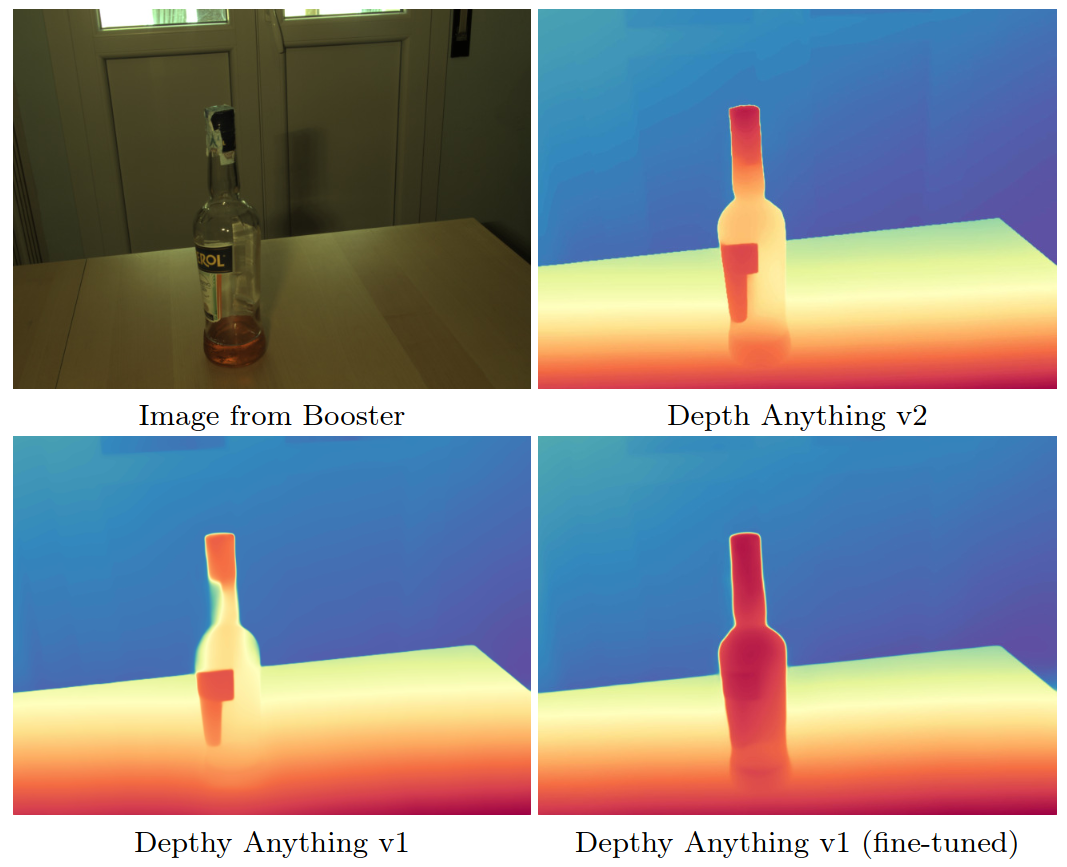

Note: Our work was submitted before the release of Depth Anything v2, which appeared on arXiv during the ECCV 2024 decision period.

Our framework shows potential for enhancing even state-of-the-art models like Depth Anything v2, which already performs impressively in challenging scenarios. The following example demonstrates how our method can improve depth estimation in specific cases:

If you find our work useful in your research, please consider citing:

@inproceedings{tosi2024diffusion,
  title={Diffusion Models for Monocular Depth Estimation: Overcoming Challenging Conditions},
  author={Tosi, Fabio and Zama Ramirez, Pierluigi and Poggi, Matteo},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2024}
}

For questions, please send an email to fabio.tosi5@unibo.it, pierluigi.zama@unibo.it, or m.poggi@unibo.it.

We would like to extend our sincere appreciation to the authors of the following projects for making their code available, which we have utilized in our work:

We also extend our gratitude to the creators of the datasets used in our experiments: nuScenes, RobotCar, KITTI, Mapillary, DrivingStereo, Booster and ClearGrasp.