The objective of this assignment was sentiment analysis of tweets using an ETL process and Elasticsearch. We were instructed to create an account on AWS (or any other cloud service provider) and on Twitter so that we could extract tweets and perform sentiment analysis on them. We used Python 3 for this assignment. After the analysis, we load the results into Elasticsearch running on Microsoft Azure Cloud. The assignment gives an idea of how ETL works.

After creating a Twitter account, we used its credentials to authenticate ourselves and extracted more than 100 tweets.

To run this program, you need the following:

- Python 3 (install it if not present)

- pip3 (install it if not present)

- pandas

- tweepy

- vaderSentiment library

- Elasticsearch

- Elasticsearch DSL

- more_itertools library

To install Python 3 together with pip3, execute this command.

sudo apt-get install -y python3-pip

The other libraries are installed with pip. To install tweepy, execute this command.

pip3 install tweepy

Install pandas next by executing this command.

sudo -H pip3 install pandas

Install vaderSentiment, which is required for performing sentiment analysis.

pip3 install vaderSentiment

Lastly, install more_itertools by executing this command.

pip3 install more-itertools

Now, to be able to load the data into Elasticsearch, we need to install the software that enables us to do that. Execute the following commands, one after another, to install Elasticsearch.

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

sudo apt-get install apt-transport-https

echo "deb https://artifacts.elastic.co/packages/6.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-6.x.list

sudo apt-get update && sudo apt-get install elasticsearch
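Before running the program, it can help to confirm that the Python libraries listed above are importable. The following is a minimal sketch, not part of the original program. It assumes the import names shown in `REQUIRED_MODULES`, which can differ from the pip package names (for example, `more-itertools` is imported as `more_itertools`).

```python
import importlib.util

# Import names of the libraries listed in the requirements above.
# These are assumptions about how each package is imported.
REQUIRED_MODULES = [
    "pandas",
    "tweepy",
    "vaderSentiment",
    "elasticsearch",
    "elasticsearch_dsl",
    "more_itertools",
]


def missing_modules(names):
    """Return the subset of `names` that Python cannot find for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing libraries:", ", ".join(missing))
    else:
        print("All required libraries are installed.")
```

Any name reported as missing can then be installed with the matching pip3 command.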

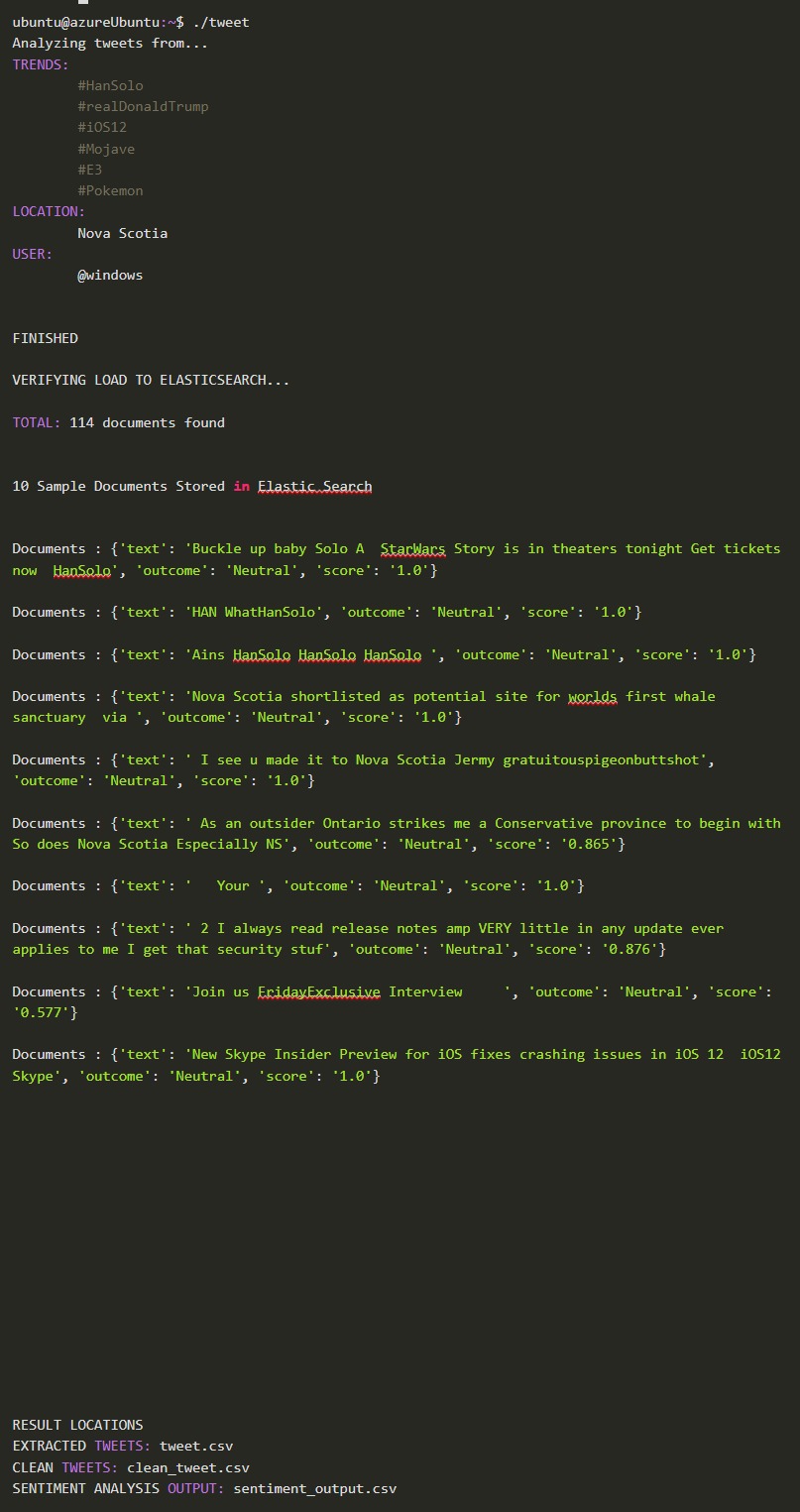

To run this program from a bash shell, use the following command.

./tweet

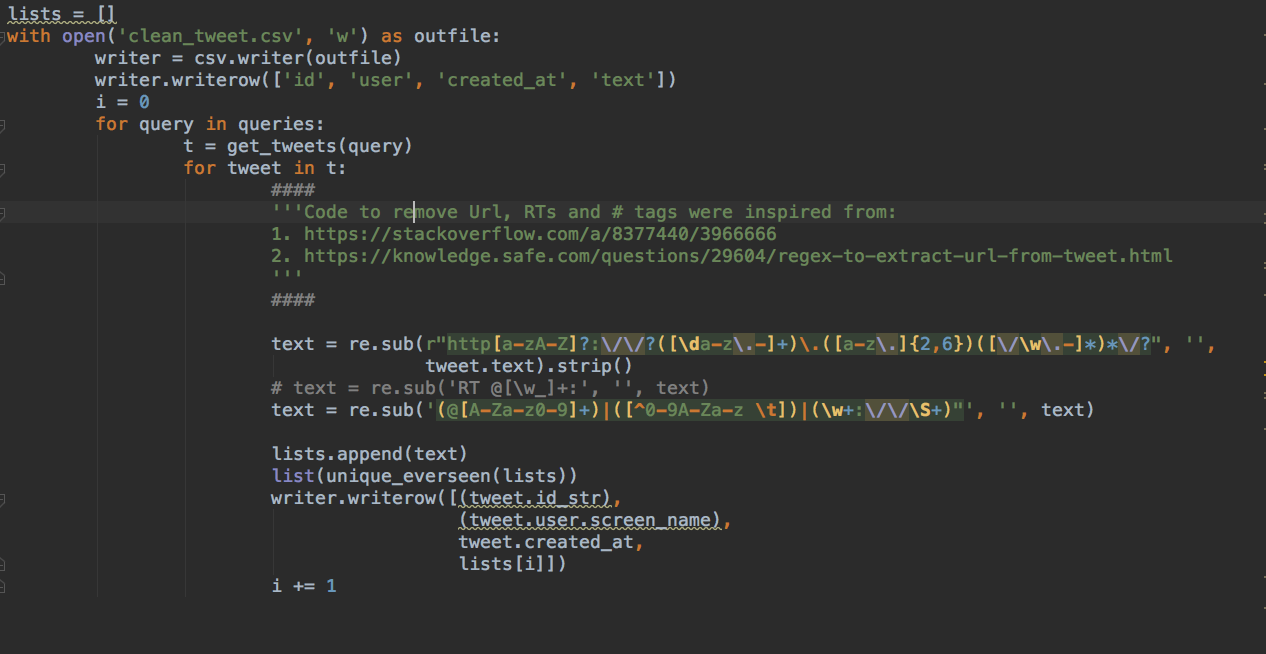

We use tweepy to run a query and extract the matching tweets into a CSV file. We then clean the tweets and create a second CSV file that contains only plain text. To clean the tweets we use several regular expressions, and we encode the tweets as "utf-8" because users add various emojis to their tweets.

We ran our query to find tweets for the following hashtags, keywords and usernames:

- #HanSolo

- Nova Scotia

- @Windows

- #realDonaldTrump

- #iOS12

- #Mojave

- #E3

- #Pokemon
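The extraction step for the search terms above could look roughly like the sketch below. It is an illustration under stated assumptions, not the code used in the assignment: the function names `build_api`, `fetch_tweets` and `write_tweets_csv` and the CSV column names are hypothetical, and the search method is called `search` in tweepy 3.x but `search_tweets` in tweepy 4.x, so the sketch looks up whichever one exists. Access to the Twitter search endpoint depends on the account's API tier.

```python
import csv


def build_api(consumer_key, consumer_secret, access_token, access_token_secret):
    """Return an authenticated tweepy.API object from the four Twitter credentials."""
    import tweepy  # imported lazily so the CSV helper works without tweepy

    auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
    auth.set_access_token(access_token, access_token_secret)
    return tweepy.API(auth)


def fetch_tweets(api, query, limit=100):
    """Yield up to `limit` English, non-retweet tweets matching `query`."""
    import tweepy

    # tweepy 3.x exposes `search`; tweepy 4.x renamed it to `search_tweets`.
    search = getattr(api, "search_tweets", None) or api.search
    # "-filter:retweets" is a Twitter search operator that drops retweets;
    # lang="en" restricts the results to English tweets.
    cursor = tweepy.Cursor(search, q=query + " -filter:retweets", lang="en")
    yield from cursor.items(limit)


def write_tweets_csv(tweets, path):
    """Write id, author and text of each tweet to a CSV file; return the row count."""
    count = 0
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["id", "user", "text"])  # hypothetical column names
        for tweet in tweets:
            writer.writerow([tweet.id, tweet.user.screen_name, tweet.text])
            count += 1
    return count
```

With valid credentials, one query would be run as `write_tweets_csv(fetch_tweets(api, "#HanSolo"), "tweets.csv")`, where `api` comes from `build_api` and the file name is only an example.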

The following code cleans the tweets.

It removes the following things from each tweet:

- URLs

- RTs and @

- Different language scripts

We also apply filters, such as a language filter and a retweet filter, to retrieve only tweets in English and to drop redundant tweets.
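The removal rules listed above can be sketched with a few regular expressions. This is a minimal illustration and not the code from the screenshot: the exact patterns are assumptions, whole @ mentions are removed rather than only the "@" character, and every non-ASCII character is stripped, which removes emojis as well as non-Latin scripts.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")   # links
RETWEET_RE = re.compile(r"\bRT\b:?")            # the "RT" retweet marker
MENTION_RE = re.compile(r"@\w+:?")              # @username, with optional colon
NON_ASCII_RE = re.compile(r"[^\x00-\x7F]+")     # emojis and non-Latin scripts
WHITESPACE_RE = re.compile(r"\s+")


def clean_tweet(text):
    """Return `text` without URLs, RT markers, mentions and non-ASCII characters."""
    text = URL_RE.sub(" ", text)
    text = RETWEET_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    text = NON_ASCII_RE.sub(" ", text)
    # Collapse the gaps left behind by the removals above.
    return WHITESPACE_RE.sub(" ", text).strip()
```

URLs are removed first so that an "@" inside a link is not treated as a mention.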

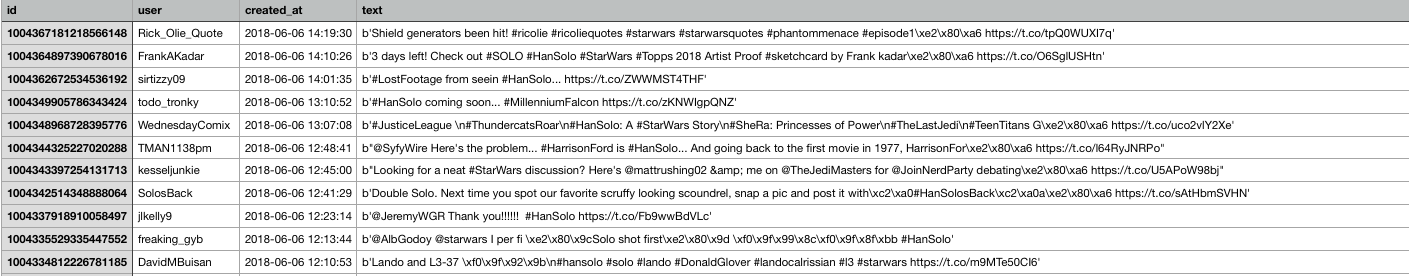

Raw tweets before cleaning

Tweets after cleaning

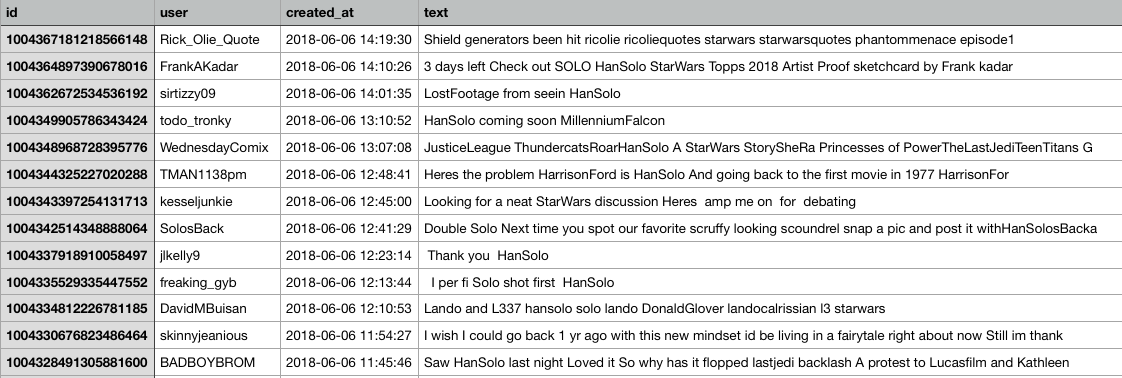

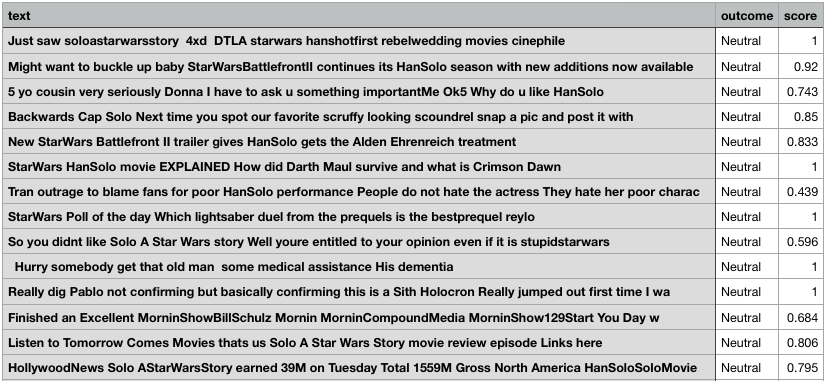

For sentiment analysis we use the CSV file that contains the cleaned tweets. We chose the Python library vaderSentiment to carry out the analysis. VADER takes an entire sentence as input and returns its polarity scores. vaderSentiment also uses "boosters": intensifier words such as "very" or "slightly" that raise or lower the intensity of the sentiment word they modify.

Screenshot of the boosters in the vaderSentiment library

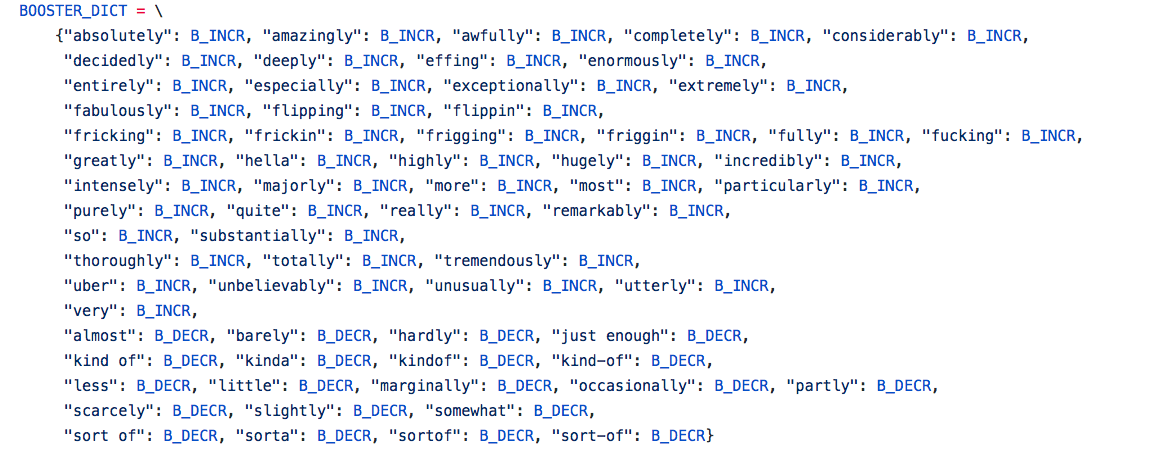

After performing the sentiment analysis, we store the output in a file named "sentiment_output.csv", which has three columns: "Positive", "Negative" and "Neutral". These columns give the scores for how positive, negative or neutral each tweet is.
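The scoring and output steps described above could be sketched as follows. This is an illustration under assumptions rather than the original code: the helper names `make_analyzer`, `score_tweets` and `write_scores` are hypothetical. The vaderSentiment call itself, `SentimentIntensityAnalyzer().polarity_scores(text)`, returns a dictionary with the keys `pos`, `neg`, `neu` and `compound`.

```python
import csv

COLUMNS = ["Positive", "Negative", "Neutral"]


def make_analyzer():
    """Create the VADER analyzer (imported lazily so the helpers work without it)."""
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

    return SentimentIntensityAnalyzer()


def score_tweets(texts, analyzer):
    """Return one row of Positive/Negative/Neutral scores per input text."""
    rows = []
    for text in texts:
        scores = analyzer.polarity_scores(text)
        rows.append(
            {
                "Positive": scores["pos"],
                "Negative": scores["neg"],
                "Neutral": scores["neu"],
            }
        )
    return rows


def write_scores(rows, path):
    """Write the score rows to a CSV file with the three column headers."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=COLUMNS)
        writer.writeheader()
        writer.writerows(rows)
```

A run over the cleaned tweets would then be `write_scores(score_tweets(texts, make_analyzer()), "sentiment_output.csv")`, where `texts` is the list of cleaned tweet strings.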

Screenshot of Sentiment Analysis

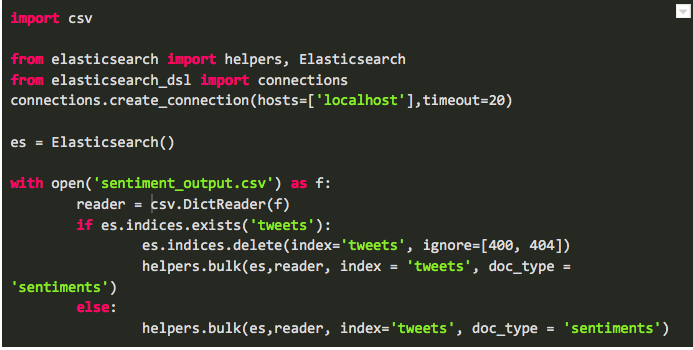

After the sentiment analysis is complete, we load the output stored in "sentiment_output.csv" into Elasticsearch using the Elasticsearch bulk helper function. The bulk function takes the Elasticsearch instance, a DictReader, the index name ("tweets") and the document type ("sentiments"). If the index already exists, the program deletes it before loading the data.
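A load step along these lines could be sketched as below. It is not the original code: the sketch builds explicit bulk actions instead of passing the DictReader directly, the host `localhost:9200` and the function names are assumptions, and document types (`_type`) apply to the 6.x client installed above but were removed in later Elasticsearch versions.

```python
import csv


def build_actions(reader, index_name="tweets", doc_type="sentiments"):
    """Turn each CSV row into one bulk action for the given index and type."""
    for row in reader:
        yield {"_index": index_name, "_type": doc_type, "_source": dict(row)}


def load_csv(path, host="localhost:9200", index_name="tweets", doc_type="sentiments"):
    """Recreate the index and bulk-load the CSV rows; return the bulk result."""
    from elasticsearch import Elasticsearch, helpers  # 6.x Python client assumed

    es = Elasticsearch([host])
    # Drop the index if it already exists so the load starts from scratch.
    if es.indices.exists(index=index_name):
        es.indices.delete(index=index_name)
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        return helpers.bulk(es, build_actions(reader, index_name, doc_type))
```

`helpers.bulk` returns the number of successfully indexed documents together with a list of errors, which is useful for a quick check after the load.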

Loading data into ElasticSearch

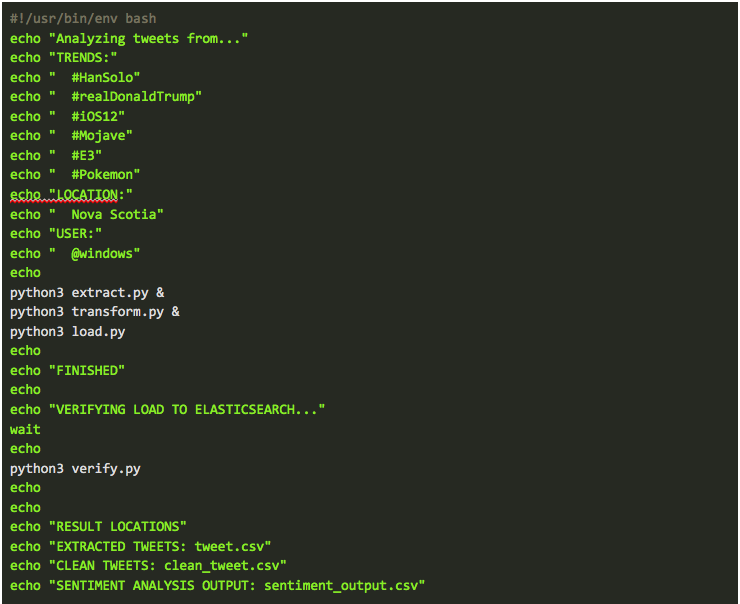

To run all three processes (extracting data from Twitter, performing sentiment analysis and loading the output into Elasticsearch), we created a shell script using Linux shell commands (./tweet). It runs the three processes as a single batch job in the background, with no user intervention required.
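The batch idea can also be illustrated in Python. The sketch below is a stand-in for the shell script, not a copy of it: it runs each step in order and stops at the first failure. The three script names are hypothetical, since the text does not give the original file names.

```python
import subprocess
import sys

# Hypothetical script names for the three stages of the pipeline.
STEPS = [
    [sys.executable, "extract_tweets.py"],
    [sys.executable, "sentiment_analysis.py"],
    [sys.executable, "load_elasticsearch.py"],
]


def run_pipeline(steps):
    """Run each command in order; return the exit code of the first failure, else 0."""
    for command in steps:
        result = subprocess.run(command)
        if result.returncode != 0:
            return result.returncode
    return 0
```

Calling `run_pipeline(STEPS)` runs the extract, analyze and load stages in sequence, so a failed extraction never feeds stale data into the later stages.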

Shell Script

- The license of vaderSentiment

The MIT License (MIT)

Copyright (c) 2016 C.J. Hutto

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.