Service Function Chaining Application for Ryu SDN controller

This project is a prototype of a Service Function Chaining (SFC) application written in Python for the Ryu SDN controller. It operates on a flat network, which is referred to here as the SFC-enabled domain.

The only function performed by the Service Functions (which I also refer to as Virtual Network Functions, VNFs) is forwarding traffic onward, so these VNFs are referred to here as forwarders.

The application installs forwarding rules for a particular flow in the OpenFlow-enabled network so that the traffic passes through the defined chain of Service Functions.

Several organizations are working on SDN and NFV. Two worth mentioning are the Internet Engineering Task Force Service Function Chaining Working Group (IETF SFC WG) and the European Telecommunications Standards Institute Industry Specification Group for NFV (ETSI ISG NFV). They tend to describe the same things with different terms. Where the IETF calls the intended path a flow traverses a Service Function Chain, ETSI calls it a Forwarding Graph. Likewise, a Service Function (IETF) and a (Virtual) Network Function (ETSI) both denote an instance of something that processes data flows. Terms from both standardization bodies are used here interchangeably. Although the virtual nature of a VNF is not important in this project, I use the acronym VNF widely.

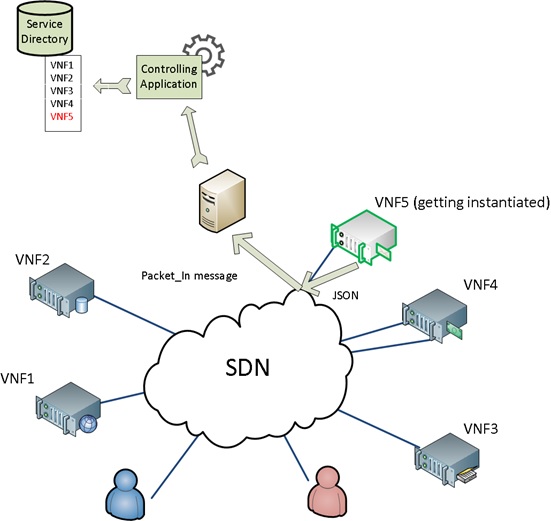

A Network Function Forwarding Graph defines the sequence of NFs that packets traverse; a VNF Forwarding Graph provides the logical connectivity between VNFs. The controller application reads from the Service Catalog a description of the service intended for a packet flow generated by a tenant. The service description is provisioned by the OSS/BSS, or manually in the test environment, and is expected to be a list of VNFs. Together with the VNF descriptions, this information is sufficient to formulate OpenFlow rules and install them in the network. The actual graphs are realized by manipulating address information in packet headers and then forwarding the packets.
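The idea of realizing a graph through address rewriting followed by ordinary forwarding can be sketched in plain Python. Everything here is an illustrative assumption rather than the data model of sfc_app.py: the `Vnf` record, its field names, the `steering_rules` helper, and the rule dictionaries are hypothetical, and each VNF is treated as having a single "inout" interface.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Vnf:
    """Hypothetical VNF record; field names are illustrative only."""
    name: str
    mac: str   # address locator of the VNF interface
    dpid: int  # switch the VNF is attached to
    port: int  # switch port facing the VNF (treated as "inout")


def steering_rules(flow_match: Dict[str, str], ingress: Tuple[int, int],
                   chain: List[Vnf]) -> List[dict]:
    """Return one address-rewrite rule per VNF in the chain.

    Each rule sits where the current segment starts (the ingress point, then
    the switch of the previous VNF) and rewrites the destination MAC so that
    ordinary forwarding delivers the packet to the next VNF.
    """
    rules = []
    dpid, in_port = ingress
    for vnf in chain:
        # Match the flow only on the port where this segment begins.
        match = dict(flow_match, in_port=in_port)
        rules.append({
            "dpid": dpid,
            "match": match,
            "actions": [("set_eth_dst", vnf.mac)],
        })
        # The next segment starts where this VNF hands the packet back.
        dpid, in_port = vnf.dpid, vnf.port
    return rules
```

The sketch stops at the last VNF; restoring the original destination address for the final segment, and translating the dictionaries into OpenFlow messages, are left out.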

VNF self-registration is a service function discovery mechanism.

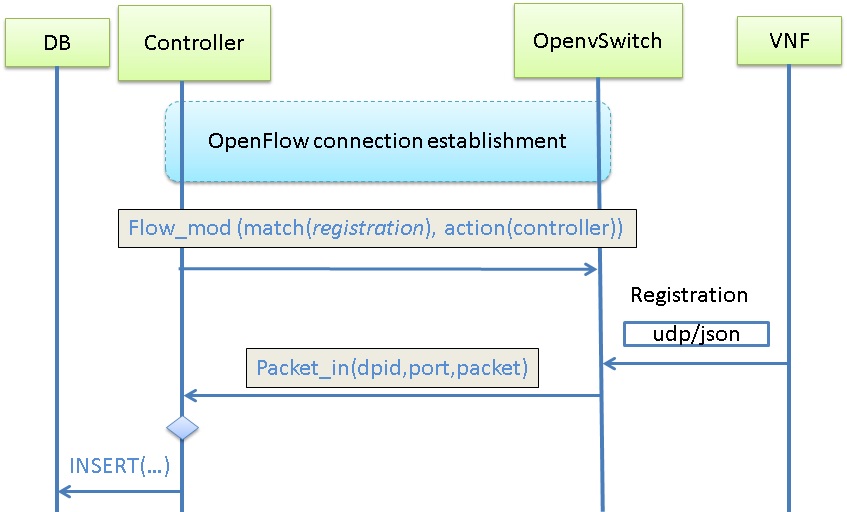

A cattle approach implies that a service is instantiated from a resource pool comprising compute, storage, and network resources. The virtual infrastructure manager is responsible for scheduling and spinning up a virtual machine. In this case, the location of a service (called a service function here) is known only to the granularity of the resource pool, which is not enough to build a Forwarding Graph. To address this, the project introduces self-registration, which lets a service announce its presence in the network. An assistant process on the VM emits a registration message to the network on behalf of the service. The message carries descriptive information: what kind of service it is, what role the emitting interface plays (in, out, inout), whether the service is bidirectional or asymmetric, and so on. The service itself, however, does not have all the necessary information. This is where an OpenFlow-capable switch steps in.

The switch wraps the registration packet into an OpenFlow packet_in message and sends it to the SDN controller. The packet_in message includes network-specific information: the service address locator, which is its MAC address; the datapath identifier, which is a unique switch ID; and the ID of the port through which the registration message was received. The controller application parses the packet_in message, extracts this information, decapsulates and decodes the registration message, and passes all the retrieved information to the database.
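A minimal sketch of how the two sources of information could be combined is shown below. The JSON wire format, the field names, and both helper functions are assumptions made for illustration; the actual encoding of the registration message in this project may differ, and the packet_in parsing done through Ryu is replaced here by plain function arguments.

```python
import json
from typing import Any, Dict

# Interface roles named in the text above.
VALID_ROLES = {"in", "out", "inout"}


def encode_registration(service_type: str, role: str, bidirectional: bool) -> bytes:
    """What the assistant process on the VM might emit (hypothetical format)."""
    if role not in VALID_ROLES:
        raise ValueError(f"unknown interface role: {role!r}")
    message = {"type": service_type, "role": role, "bidirectional": bidirectional}
    return json.dumps(message).encode("utf-8")


def build_vnf_record(payload: bytes, src_mac: str, dpid: int, in_port: int) -> Dict[str, Any]:
    """Merge the decoded registration with what the switch contributed.

    src_mac, dpid and in_port stand for the values the controller would take
    from the packet_in message; payload is the decapsulated registration.
    """
    message = json.loads(payload.decode("utf-8"))
    if message.get("role") not in VALID_ROLES:
        raise ValueError(f"unknown interface role: {message.get('role')!r}")
    return {
        # Supplied by the service itself.
        "type": message["type"],
        "role": message["role"],
        "bidirectional": bool(message["bidirectional"]),
        # Supplied by the network.
        "mac": src_mac,
        "dpid": dpid,
        "in_port": in_port,
    }
```

The resulting dictionary is the kind of row that would then be written to the database.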

In this setup, an SDN network is controlled by an SDN controller, on top of which the SFC application runs. The application exposes a REST API to accept directives from an OSS/BSS system. It is integrated with a database that stores information on registered service functions, services, and flows; this database represents the Service Catalog. There is no OSS/BSS system, so I play its role: service definitions and flow-to-service bindings are prepared manually, and requests to the application's REST API are sent manually as well.
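To make the role of the Service Catalog concrete, here is a small sketch using Python's built-in sqlite3 module. The three tables mirror the three kinds of information named above, but the table names, column names, and the `chain_for_flow` helper are hypothetical and are not the schema shipped with this project.

```python
import sqlite3
from typing import List

# Hypothetical schema: registered VNFs, services as ordered VNF lists,
# and flows bound to a service.
SCHEMA = """
CREATE TABLE vnf (
    vnf_id        INTEGER PRIMARY KEY,
    name          TEXT NOT NULL,
    mac           TEXT NOT NULL,
    dpid          INTEGER NOT NULL,
    in_port       INTEGER NOT NULL,
    bidirectional INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE service (
    service_id INTEGER NOT NULL,
    position   INTEGER NOT NULL,
    vnf_id     INTEGER NOT NULL REFERENCES vnf(vnf_id),
    PRIMARY KEY (service_id, position)
);
CREATE TABLE flows (
    flow_id    INTEGER PRIMARY KEY,
    ipv4_src   TEXT,
    ipv4_dst   TEXT,
    service_id INTEGER NOT NULL
);
"""


def open_catalog() -> sqlite3.Connection:
    """Create an empty in-memory catalog for experimentation."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def chain_for_flow(conn: sqlite3.Connection, flow_id: int) -> List[str]:
    """Return the VNF names a flow must traverse, in chain order."""
    rows = conn.execute(
        """
        SELECT vnf.name
        FROM flows
        JOIN service ON service.service_id = flows.service_id
        JOIN vnf ON vnf.vnf_id = service.vnf_id
        WHERE flows.flow_id = ?
        ORDER BY service.position
        """,
        (flow_id,),
    ).fetchall()
    return [name for (name,) in rows]
```

Keeping the chain order in an explicit `position` column is one possible design choice; it lets a service be edited without rewriting the VNF table.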

The process goes like this:

- SF discovery: VNF self-registration. The rightmost DB table (see picture) is populated with VNF characteristics.

- The OSS/BSS populates the DB tables describing the service and the flow-to-service binding, which includes the flow specification.

- The OSS/BSS requests to start SFC for a flow. The flow is referenced by ID.

- The SFC application queries the DB for the flow specification...

- ... and installs a catching rule in the network. It is assumed that the ingress point of the flow is not known.

- As soon as the traffic of interest appears in the SFC-enabled domain, the event is reported to the SFC application. At this moment the ingress point is revealed.

- The catching rule is removed and steering rules are installed along the path of the service chain.

- Traffic is steered.
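The transitions in the list above (idle, catching, steering) can be sketched as a small state machine. The class and method names are hypothetical and only model the bookkeeping; installing and removing the actual OpenFlow rules is not shown.

```python
from enum import Enum
from typing import Optional, Tuple


class FlowState(Enum):
    IDLE = "idle"          # no rules installed for the flow
    CATCHING = "catching"  # catching rule installed, ingress unknown
    STEERING = "steering"  # steering rules installed along the chain


class FlowLifecycle:
    """Tracks one flow from the start request to steering (illustrative)."""

    def __init__(self, flow_id: int) -> None:
        self.flow_id = flow_id
        self.state = FlowState.IDLE
        self.ingress: Optional[Tuple[int, int]] = None  # (dpid, in_port)

    def start(self) -> None:
        """Start request received: the catching rule goes in."""
        if self.state is not FlowState.IDLE:
            raise RuntimeError(f"flow {self.flow_id} is already active")
        self.state = FlowState.CATCHING

    def traffic_seen(self, dpid: int, in_port: int) -> bool:
        """Traffic of interest reported: the ingress point is revealed.

        Returns True when the catching rule should now be replaced with
        steering rules, False when the report is not expected.
        """
        if self.state is not FlowState.CATCHING:
            return False
        self.ingress = (dpid, in_port)
        self.state = FlowState.STEERING
        return True

    def stop(self) -> None:
        """Delete request received: all rules for the flow are removed."""
        self.state = FlowState.IDLE
        self.ingress = None
```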

The application can be improved by adding support for the following functionalities:

- Interface type support. Currently, all interfaces are treated as inout type. Refining the requests to the Service Catalog database will allow implementing multi-homed service functions with a defined flow direction (e.g., a firewall with inside and outside interfaces). [DONE]

- Symmetric flow support is a convenient way to add the reverse forwarding graph through symmetric service functions automagically. Symmetric, or bidirectional, functions are those that need traffic to pass in both directions, uplink and downlink, in order to provide the service (example: NAT). Lacking this feature is not critical, but it then requires an explicit definition of the reverse forwarding graph. [DONE]

- Group support is required when several instances of a service function are deployed. The absence of it can be worked around by using Load Balancers in front of actual Network Functions.

- Etc.: A richer set of protocol fields, wildcard logic, VNF statuses and other enhancements.
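As an illustration of the symmetric flow item above, a reverse forwarding graph could be derived from the forward one by keeping only the bidirectional functions, in reverse order. This is a sketch of the idea under that assumption, with hypothetical names, and not necessarily how the application computes it.

```python
from typing import List, NamedTuple


class ChainHop(NamedTuple):
    """Hypothetical chain element: a VNF name and its symmetry flag."""
    name: str
    bidirectional: bool


def reverse_chain(forward: List[ChainHop]) -> List[ChainHop]:
    """Derive the downlink chain from the uplink chain.

    Only bidirectional functions need the return traffic, and they are
    visited in the opposite order.
    """
    return [hop for hop in reversed(forward) if hop.bidirectional]
```

With a forward chain in which only the first function is bidirectional, the reverse chain contains that single function; with no bidirectional functions it is empty, so return traffic is not steered.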

Initially, the environment was based on Ubuntu 14.04. It has since been tried on Ubuntu 20.04 LTS as well. Other Linux distributions should work too, though I have not tried them. The following software should be installed. Detailed installation instructions for each component can be found on the related web pages.

To avoid errors when running the Ryu app, install an older eventlet version:

pip install eventlet==0.30.2

Strictly speaking, this tool is not required as long as any SQLite client is available, but I found it very convenient for inspecting and editing the SQLite database. The project uses the database as the Service Catalog.

My demonstration environment is based on Mininet, a network emulator that creates a network of virtual hosts, switches, controllers, and links. The switches used in the demonstration are Open vSwitch instances. For simplicity, the environment consists of five linearly connected switches; five hosts, each linked to one switch, except that h2 and h3 have additional links to switches s3 and s4 respectively; and a Ryu controller running OpenFlow 1.3. The example.py script sets up the environment (see Demonstration Instructions). Hosts h2 and h3 are VNFs with two interfaces, each of type "in" or "out". Type "inout" is supported as well.

The traffic goes from h1 to h5 and, once flow rules are installed in the network, passes through h2 and h3 according to the service description.

To run the demonstration smoothly, make sure that IP forwarding is enabled in the OS (sudo sysctl net.ipv4.ip_forward=1), traceroute is installed, and the following lines are added to /etc/hosts:

10.0.0.1 h1

10.0.0.2 h2-in

10.0.0.3 h3-in

10.0.0.4 h4

10.0.0.5 h5

10.0.0.12 h2-out

10.0.0.13 h3-out
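Before launching the demonstration, the hosts entries can be checked with a few lines of Python. This is an optional convenience sketch; the helper names are hypothetical, and the expected mapping simply repeats the lines listed above.

```python
from typing import Dict, List

# Name-to-address mapping taken from the /etc/hosts lines above.
EXPECTED = {
    "h1": "10.0.0.1",
    "h2-in": "10.0.0.2",
    "h3-in": "10.0.0.3",
    "h4": "10.0.0.4",
    "h5": "10.0.0.5",
    "h2-out": "10.0.0.12",
    "h3-out": "10.0.0.13",
}


def parse_hosts(text: str) -> Dict[str, str]:
    """Map every host name in hosts-file text to its first address."""
    entries: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            entries.setdefault(name, address)
    return entries


def missing_entries(text: str, expected: Dict[str, str] = EXPECTED) -> List[str]:
    """Return the expected names that are absent or point elsewhere."""
    found = parse_hosts(text)
    return sorted(name for name, addr in expected.items() if found.get(name) != addr)
```

Calling `missing_entries(open("/etc/hosts").read())` returns an empty list when all seven entries are in place.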

The demonstration can be launched in Docker containers as well. See the instructions here.

The demonstration includes a prepopulated flow table in the database, which can of course be modified. Flow 3 is used here; its specification describes a flow from h1 (10.0.0.1) to h5 (10.0.0.5). The example.py script does all the magic of running Mininet, interconnecting the hosts, and running self-registration on them.

- Open four terminals

- Start Ryu application in the 1st terminal:

- 1st terminal:

ryu-manager --verbose ./sfc_app.py

- Start test topology in the 2nd terminal:

- 2nd terminal:

sudo ./example.py

- Check default OpenFlow rules before SFC applied:

- 2nd terminal:

mininet> h1 traceroute -I h5

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- One hop can be seen; the default rules are installed.

- Apply Service Function Chain and check catching rules being installed on OF switches:

- 3rd terminal:

curl -v http://127.0.0.1:8080/add_flow/3

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- After flow application, catching rules for both directions are seen on the OF switch. Flow 3 has a bidirectional VNF in it.

- Start traffic running, check steering rules:

- 2nd terminal:

mininet> h1 traceroute -I h5

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- Now traffic passes several hops; the catching rule has been replaced with a steering rule.

- Check traffic running in reverse direction, check steering rules:

- 2nd terminal:

mininet> h5 traceroute -I h1

- Reverse traffic flow passes several hops as one of the VNFs is bidirectional.

- Delete flows

- 3rd terminal:

curl -v http://127.0.0.1:8080/delete_flow/3

- 2nd terminal:

mininet> h1 traceroute -I h5

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- Data flow passes one hop again; no related rules are seen on the OF switch.

- Apply Service Function Chain and check catching rules being installed on OF switches:

- 3rd terminal:

curl -v http://127.0.0.1:8080/add_flow/4

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- After flow application, a catching rule is seen on the OF switch. Flow 4 doesn't have a bidirectional VNF in it.

- Start traffic running, check steering rules:

- 2nd terminal:

mininet> h1 traceroute -I h4

- 2nd terminal:

mininet> h4 traceroute -I h1

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- Now traffic passes several hops from h1 to h4; the catching rule has been replaced with a steering rule. Traffic from h4 to h1 still passes one hop as there are no bidirectional VNFs on the way.

- Delete flows

- 3rd terminal:

curl -v http://127.0.0.1:8080/delete_flow/4

- 2nd terminal:

mininet> h1 traceroute -I h4

- 4th terminal:

for i in {1..5}; do echo s$i; sudo ovs-ofctl -O OpenFlow13 dump-flows s$i ; done

- Data flow passes one hop again; no related rules are seen on the OF switch.