
InterpretDL, short for interpretations of deep learning models, is a model interpretation toolkit for PaddlePaddle models. This toolkit contains implementations of many interpretation algorithms, including LIME, Grad-CAM, Integrated Gradients and more. Some SOTA and new interpretation algorithms are also implemented.

InterpretDL is under active development and all contributions are welcome!

As deep learning models grow more complex, it becomes increasingly hard for people to understand their inner workings. Interpretability of black-box models has therefore become an active research focus. InterpretDL provides a collection of both classical and new algorithms for interpreting models.

By utilizing these helpful methods, people can better understand why models work and why they don't, thus contributing to the model development process.

For researchers designing new interpretation algorithms, InterpretDL gives easy access to existing methods that they can compare their work with.

-

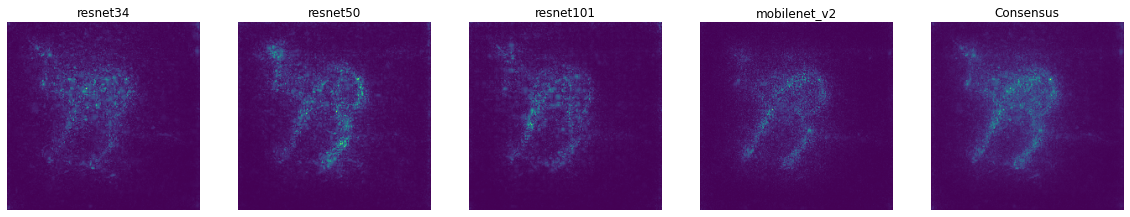

(2022/01/06) Implmented the Cross-Model Consensus Explanation method. In brief, this method averages the explanation results from several models. Instead of interpreting individual models, this method is able to identify the discriminative features in the input data with accurate localization. See the paper for details.

Consensus: Xuhong Li, Haoyi Xiong, Siyu Huang, Shilei Ji, Dejing Dou. Cross-Model Consensus of Explanations and Beyond for Image Classification Models: An Empirical Study. arXiv:2109.00707.

The demo uses four models; using more models (around 15) could give a much better result. See the tutorial for details.
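As a rough illustration of the averaging idea described above, the sketch below rescales each model's explanation map to [0, 1] and averages the maps element-wise. It is a minimal pure-Python sketch under stated assumptions, not InterpretDL's implementation: the function names `min_max_normalize` and `consensus` are hypothetical, and min-max rescaling is an assumed normalization choice.

```python
from typing import List

Map = List[List[float]]  # a 2-D explanation map stored as nested lists


def min_max_normalize(m: Map) -> Map:
    """Rescale a map to [0, 1]; a constant map becomes all zeros."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / span for v in row] for row in m]


def consensus(maps: List[Map]) -> Map:
    """Average same-shaped explanation maps element-wise after normalization."""
    if not maps:
        raise ValueError("need at least one explanation map")
    normalized = [min_max_normalize(m) for m in maps]
    rows, cols = len(maps[0]), len(maps[0][0])
    return [
        [sum(n[r][c] for n in normalized) / len(normalized) for c in range(cols)]
        for r in range(rows)
    ]


# Toy 2x2 "explanations" from three hypothetical models.
maps = [
    [[0.0, 1.0], [2.0, 4.0]],
    [[0.0, 0.0], [5.0, 10.0]],
    [[1.0, 1.0], [1.0, 3.0]],
]
result = consensus(maps)
```

In this toy case all three maps peak at the bottom-right cell, so that cell keeps the maximum value after averaging, while cells on which the models disagree are damped.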

- (2021/10/20) Implemented the Transition Attention Maps (TAM) explanation method for PaddlePaddle Vision Transformers. As always, a few lines of code are enough to call this interpreter. See the tutorial notebook and the paper for details:

TAM: Tingyi Yuan, Xuhong Li, Haoyi Xiong, Hui Cao, Dejing Dou. Explaining Information Flow Inside Vision Transformers Using Markov Chain. In NeurIPS 2021 XAI4Debugging Workshop.

| image | elephant | zebra |
|---|---|---|
| | | |

Interpretation algorithms give a hint of why a black-box model makes its decision.

The following table gives visualizations of several interpretation algorithms applied to the original image to tell us why the model predicts "bull_mastiff."

| Original Image | IntGrad (demo) | SG (demo) | LIME (demo) | Grad-CAM (demo) |
|---|---|---|---|---|
| | | | | |

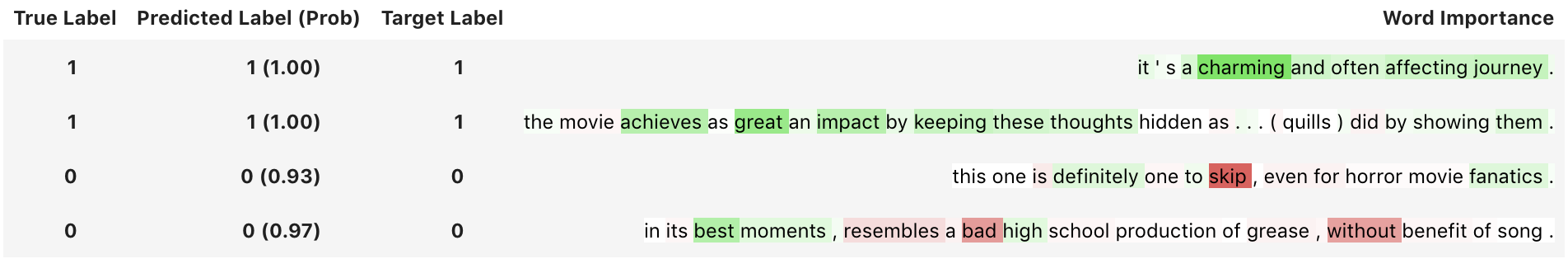

For sentiment classification tasks, the reason why a model gives positive or negative predictions can be visualized as follows. A quick demo can be found here. Samples in Chinese are also available here.

- InterpretDL: Interpretation of Deep Learning Models based on PaddlePaddle

- Why InterpretDL

- 🔥 🔥 🔥 News 🔥 🔥 🔥

- Demo

- Contents

- Installation

- Documentation

- Usage Guideline

- Roadmap

- Copyright and License

- Recent News

InterpretDL requires the deep learning framework PaddlePaddle; versions with CUDA support are recommended.

```bash
pip install interpretdl

# or with tsinghua mirror
pip install interpretdl -i https://pypi.tuna.tsinghua.edu.cn/simple
```

```bash
git clone https://github.com/PaddlePaddle/InterpretDL.git
# ... fix bugs or add new features
cd InterpretDL && pip install -e .
# welcome to propose pull request and contribute
```

```bash
# run gradcam unit tests
python -m unittest -v tests.interpreter.test_gradcam

# run all unit tests
python -m unittest -v
```

Online link: interpretdl.readthedocs.io.

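Or generate the docs locally:

```bash
git clone https://github.com/PaddlePaddle/InterpretDL.git
# the docs folder lives inside the cloned repository
cd InterpretDL/docs
make html
# "open" is the macOS command; on Linux, xdg-open does the same job
open _build/html/index.html
```

All interpreters inherit from the abstract class Interpreter, whose interpret(**kwargs) method is the function to call.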

```python
# an example of SmoothGradient Interpreter.
import interpretdl as it
from paddle.vision.models import resnet50

paddle_model = resnet50(pretrained=True)
sg = it.SmoothGradInterpreter(paddle_model, use_cuda=True)
gradients = sg.interpret("test.jpg", visual=True, save_path=None)
```

Details of the usage can be found under the tutorials folder.
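The inheritance pattern described above can be pictured with a small stand-alone sketch. It is only an illustration under stated assumptions, not the library's source: the base class here is a simplified stand-in, `ToySmoothGrad` and `linear_model` are hypothetical names, and the gradient is estimated by finite differences on a plain Python function rather than by backpropagation through a PaddlePaddle model.

```python
from abc import ABC, abstractmethod
import random


class Interpreter(ABC):
    """Simplified stand-in for an abstract interpreter base class."""

    def __init__(self, model, use_cuda=False):
        self.model = model
        self.use_cuda = use_cuda  # kept only to mirror the constructor shape

    @abstractmethod
    def interpret(self, **kwargs):
        """Return an explanation; each subclass defines its own arguments."""


class ToySmoothGrad(Interpreter):
    """SmoothGrad-style averaging of gradients over noisy copies of the input."""

    def interpret(self, inputs, n_samples=50, noise=0.1, eps=1e-4, seed=0):
        rng = random.Random(seed)
        totals = [0.0] * len(inputs)
        for _ in range(n_samples):
            noisy = [x + rng.gauss(0.0, noise) for x in inputs]
            for i in range(len(noisy)):
                # Central finite difference along feature i.
                up = noisy[:i] + [noisy[i] + eps] + noisy[i + 1:]
                down = noisy[:i] + [noisy[i] - eps] + noisy[i + 1:]
                totals[i] += (self.model(up) - self.model(down)) / (2 * eps)
        return [t / n_samples for t in totals]


def linear_model(x):
    """Hypothetical model: a fixed linear score over two features."""
    return 3.0 * x[0] - 2.0 * x[1]


sg_toy = ToySmoothGrad(linear_model)
grads = sg_toy.interpret(inputs=[1.0, 1.0])
```

For a linear model the averaged gradient simply recovers the weights (about 3 and -2 here), which makes the sketch easy to sanity-check; the base class itself cannot be instantiated because `interpret` is abstract.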

We aim to build a useful toolkit that offers model interpretations as well as evaluations. The interpretation algorithms listed below are already implemented, and we plan to add more. Contributions are welcome; you can also just tell us which algorithms you would like to see.

- Target at Input Features
  - SmoothGrad
  - IntegratedGradients
  - Occlusion
  - GradientSHAP
  - LIME
  - GLIME (LIMEPrior)
  - NormLIME/FastNormLIME
  - LRP
- Target at Intermediate Features
  - CAM
  - GradCAM
  - ScoreCAM
  - Rollout
  - TAM
- Dataset-level Interpretation Algorithms
  - Forgetting Event
  - SGDNoise
  - TrainIng Data analYzer (TIDY)
- Cross-Model Explanation
  - Consensus
- Dataset-level Interpretation Algorithms
  - Influence Function
- Evaluations
  - Perturbation Tests
  - Deletion & Insertion
  - Localization Ability
  - Local Fidelity
  - Sensitivity
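To give a feel for what an evaluation such as Deletion measures, here is a minimal sketch: features are removed one at a time in decreasing order of attribution, the model score is recorded after each removal, and the area under the resulting curve is reported (a lower area suggests a better attribution). This is an assumed, simplified reading of the deletion idea on a toy vector input; `deletion_curve`, `area_under_curve` and `toy_model` are hypothetical names, not InterpretDL functions.

```python
def deletion_curve(model, inputs, attributions, baseline=0.0):
    """Model scores after removing features one by one, most important first."""
    order = sorted(range(len(inputs)), key=lambda i: attributions[i], reverse=True)
    current = list(inputs)
    scores = [model(current)]
    for i in order:
        current[i] = baseline  # "delete" the feature by setting it to the baseline
        scores.append(model(current))
    return scores


def area_under_curve(scores):
    """Trapezoidal area with the x-axis scaled to [0, 1]."""
    steps = len(scores) - 1
    return sum((scores[k] + scores[k + 1]) / 2 for k in range(steps)) / steps


def toy_model(x):
    """Hypothetical model whose true feature importances are 0.6, 0.3 and 0.1."""
    return 0.6 * x[0] + 0.3 * x[1] + 0.1 * x[2]


inputs = [1.0, 1.0, 1.0]
good = deletion_curve(toy_model, inputs, attributions=[0.6, 0.3, 0.1])
bad = deletion_curve(toy_model, inputs, attributions=[0.1, 0.3, 0.6])
good_auc = area_under_curve(good)
bad_auc = area_under_curve(bad)
```

The attribution that ranks the features correctly makes the score drop faster, so `good_auc` comes out smaller than `bad_auc`.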

We plan to provide at least one example for each interpretation algorithm, and hopefully cover applications for both CV and NLP.

Current tutorials can be accessed under the tutorials folder.

- SGDNoise: On the Noisy Gradient Descent that Generalizes as SGD, Wu et al. 2019
- IntegratedGradients: Axiomatic Attribution for Deep Networks, Mukund Sundararajan et al. 2017
- CAM, GradCAM: Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization, Ramprasaath R. Selvaraju et al. 2017
- SmoothGrad: SmoothGrad: removing noise by adding noise, Daniel Smilkov et al. 2017
- GradientShap: A Unified Approach to Interpreting Model Predictions, Scott M. Lundberg et al. 2017
- Occlusion: Visualizing and Understanding Convolutional Networks, Matthew D Zeiler and Rob Fergus 2013
- Lime: "Why Should I Trust You?": Explaining the Predictions of Any Classifier, Marco Tulio Ribeiro et al. 2016
- NormLime: NormLime: A New Feature Importance Metric for Explaining Deep Neural Networks, Isaac Ahern et al. 2019
- ScoreCAM: Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks, Haofan Wang et al. 2020
- ForgettingEvents: An Empirical Study of Example Forgetting during Deep Neural Network Learning, Mariya Toneva et al. 2019
- LRP: On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation, Bach et al. 2015
- Rollout: Quantifying Attention Flow in Transformers, Abnar et al. 2020
- TAM: Explaining Information Flow Inside Vision Transformers Using Markov Chain, Yuan et al. 2021
- Consensus: Cross-Model Consensus of Explanations and Beyond for Image Classification Models: An Empirical Study, Li et al. 2021
- Perturbation: Evaluating the visualization of what a deep neural network has learned.
- Deletion&Insertion: RISE: Randomized Input Sampling for Explanation of Black-box Models.
- PointGame: Top-down Neural Attention by Excitation Backprop.

InterpretDL is provided under the Apache-2.0 license.

- (2021/10/20) Implemented the Transition Attention Maps (TAM) explanation method for PaddlePaddle Vision Transformers. As always, a few lines of code are enough to call this interpreter. See the tutorial notebook and the paper for details:

TAM: Tingyi Yuan, Xuhong Li, Haoyi Xiong, Hui Cao, Dejing Dou. Explaining Information Flow Inside Vision Transformers Using Markov Chain. In NeurIPS 2021 XAI4Debugging Workshop.

```python
import paddle
import interpretdl as it

# load vit model and weights
# !wget -c https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/ViT_base_patch16_224_pretrained.pdparams -P assets/
from assets.vision_transformer import ViT_base_patch16_224

paddle_model = ViT_base_patch16_224()
MODEL_PATH = 'assets/ViT_base_patch16_224_pretrained.pdparams'
paddle_model.set_dict(paddle.load(MODEL_PATH))

# Call the interpreter.
tam = it.TAMInterpreter(paddle_model, use_cuda=True)
img_path = 'samples/el1.png'
heatmap = tam.interpret(
    img_path,
    start_layer=4,
    label=None,  # elephant
    visual=True,
    save_path=None)
heatmap = tam.interpret(
    img_path,
    start_layer=4,
    label=340,  # zebra
    visual=True,
    save_path=None)
```

| image | elephant | zebra |
|---|---|---|
| | | |

- (2021/07/22) Implemented Rollout Explanations for PaddlePaddle Vision Transformers. See the notebook for the visualization.

```python
import paddle
import interpretdl as it

# wget -c https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/ViT_small_patch16_224_pretrained.pdparams -P assets/
from assets.vision_transformer import ViT_small_patch16_224

paddle_model = ViT_small_patch16_224()
MODEL_PATH = 'assets/ViT_small_patch16_224_pretrained.pdparams'
paddle_model.set_dict(paddle.load(MODEL_PATH))

img_path = 'assets/catdog.png'
rollout = it.RolloutInterpreter(paddle_model, use_cuda=True)
heatmap = rollout.interpret(img_path, start_layer=0, visual=True)
```
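For readers unfamiliar with attention rollout (Abnar et al. 2020), the following pure-Python sketch shows the core computation under simplifying assumptions: each layer's attention is already averaged over heads into one row-normalized matrix, the residual connection is modeled as 0.5·A + 0.5·I, and the per-layer matrices are multiplied from `start_layer` onward. The helper names are hypothetical, and InterpretDL's RolloutInterpreter may differ in its details.

```python
def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def add_residual(attn):
    """Mix attention with the identity (0.5*A + 0.5*I); rows still sum to 1."""
    n = len(attn)
    return [
        [0.5 * attn[i][j] + (0.5 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]


def attention_rollout(attentions, start_layer=0):
    """Multiply residual-augmented attention matrices from start_layer upward."""
    n = len(attentions[0])
    rollout = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for attn in attentions[start_layer:]:
        rollout = matmul(add_residual(attn), rollout)
    return rollout


# Two toy layers over three tokens; token 0 plays the role of the class token.
attentions = [
    [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.2, 0.3, 0.5]],
    [[0.6, 0.2, 0.2], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]],
]
rollout_matrix = attention_rollout(attentions)
cls_row = rollout_matrix[0]  # how much the class token draws from each input token
identity = attention_rollout(attentions, start_layer=2)  # no layers left to apply
```

In a Vision Transformer, the class-token row (minus the class token itself) would be reshaped to the patch grid to form a heatmap; here it stays a three-element list.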