Utilities and scripts for collecting social network data and finding insights in it.

Store a local cache of data from social media sites in a Postgres database for analysis, then report on interesting trends, user patterns, etc.
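As a rough illustration of the caching idea, here is a minimal sketch of an idempotent insert. Comments are keyed by their Reddit id, and repeats are skipped so re-running a collection doesn't duplicate rows. The table name and columns are hypothetical, not the project's actual schema. To keep the sketch runnable without a server, it uses Python's built-in `sqlite3` as a stand-in for Postgres. The `INSERT ... ON CONFLICT (id) DO NOTHING` statement is also valid in Postgres, though with psycopg2 the named placeholders would be written `%(id)s` rather than `:id`.

```python
import sqlite3

# Hypothetical schema: one row per comment, keyed by Reddit's comment id.
SCHEMA = """
CREATE TABLE IF NOT EXISTS comments (
    id          TEXT PRIMARY KEY,
    author      TEXT NOT NULL,
    subreddit   TEXT NOT NULL,
    created_utc INTEGER NOT NULL,
    body        TEXT
)
"""

INSERT = (
    "INSERT INTO comments (id, author, subreddit, created_utc, body) "
    "VALUES (:id, :author, :subreddit, :created_utc, :body) "
    "ON CONFLICT (id) DO NOTHING"  # skip comments that are already cached
)


def count_cached(conn):
    return conn.execute("SELECT COUNT(*) FROM comments").fetchone()[0]


def cache_comments(conn, comments):
    """Insert comment dicts, ignoring ids already in the cache.

    Returns how many new rows were added."""
    before = count_cached(conn)
    with conn:  # commits on success, rolls back on error
        conn.executemany(INSERT, comments)
    return count_cached(conn) - before
```

Because duplicates are ignored rather than raising, a collector can re-fetch overlapping pages of a user's history and only the unseen comments end up in the cache.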

Let's see what a user has been doing lately:

    (env)~/reddit$ ./user_stats spez
    INFO:main:obtaining user history: spez

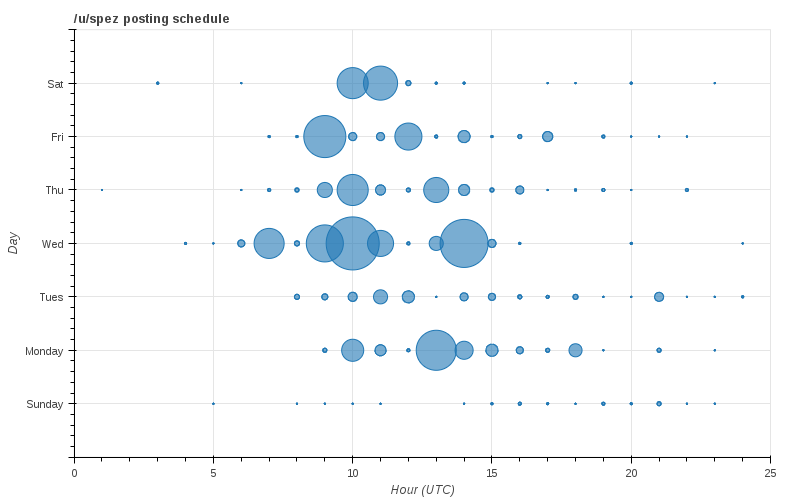

    INFO:main:querying user schedule
    INFO:main:querying user_comments
    INFO:main:generate schedule graph
    INFO:main:obtaining user history
    /u/spez history has 966 posts
    announcements 475 (49.2%)
    reddit.com 76 ( 7.9%)
    IAmA 52 ( 5.4%)
    cscareerquestions 47 ( 4.9%)
    programming 34 ( 3.5%)
    modnews 34 ( 3.5%)
    technology 26 ( 2.7%)
    [...snip...]
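The subreddit breakdown and the schedule graph look like two simple aggregations over a user's posts. The sketch below reproduces them under stated assumptions: each post is a dict with hypothetical `subreddit` and `created_utc` (Unix seconds) keys, and the schedule is bucketed by UTC weekday and hour, which may not match how the actual report or its graph handles time zones.

```python
from collections import Counter
from datetime import datetime, timezone


def subreddit_breakdown(posts, top=7):
    """Return lines like 'announcements 475 (49.2%)' for the most active subreddits."""
    counts = Counter(post["subreddit"] for post in posts)
    total = len(posts)
    return [
        f"{name} {n} ({100 * n / total:4.1f}%)"  # 4.1f pads 7.9 to ' 7.9'
        for name, n in counts.most_common(top)
    ]


def posting_schedule(posts):
    """Count posts per (weekday, hour) in UTC.

    Row 0 is Monday (datetime.weekday()), column 0 is the 00:00-00:59 hour."""
    grid = [[0] * 24 for _ in range(7)]
    for post in posts:
        ts = datetime.fromtimestamp(post["created_utc"], tz=timezone.utc)
        grid[ts.weekday()][ts.hour] += 1
    return grid
```

With 966 posts of which 475 are in announcements, `subreddit_breakdown` yields `announcements 475 (49.2%)`, the same line as in the output above. The 7x24 grid from `posting_schedule` is the kind of matrix a heatmap-style schedule graph could be drawn from.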

To get started:

- Clone the repo

- Get a Postgres database running

- Change into a platform-specific directory (e.g. Reddit or Twitter)

- Follow the instructions in that directory's README

- Run some of the scripts under ./collect, then see what's available under ./report
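As a hypothetical illustration of the kind of work a collect script does when obtaining a user's history, the sketch below pages through a Reddit-style listing. Reddit's listing JSON nests items under `data.children` and supplies an `after` cursor for the next page. The `fetch_page` callable is an assumption, injected so the sketch runs offline; in practice it might request a user's listing from Reddit's API or go through a wrapper library. `max_items` is an arbitrary safety cap, not a value taken from this project.

```python
from typing import Callable, Iterator, Optional

# A page is assumed to look like a Reddit listing:
# {"data": {"children": [{"data": {...}}, ...], "after": "t1_abc" or None}}
FetchPage = Callable[[Optional[str]], dict]


def iter_history(fetch_page: FetchPage, max_items: int = 1000) -> Iterator[dict]:
    """Yield items from successive listing pages until the 'after' cursor runs out."""
    after: Optional[str] = None
    seen = 0
    while True:
        listing = fetch_page(after)["data"]
        children = listing["children"]
        if not children:  # guard against an empty page with a dangling cursor
            return
        for child in children:
            yield child["data"]
            seen += 1
            if seen >= max_items:
                return
        after = listing["after"]
        if after is None:
            return
```

Keeping the HTTP call behind `fetch_page` separates pagination from transport, so the same loop could sit on top of raw requests or a client library.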

The project is no longer under active development. Reddit changed its search functionality, so cloudsearch queries no longer work, which leaves ./reddit/collect/subreddit_comments.py broken. As far as I know, everything else still works, so streaming and fetching a redditor's history should be fine. TODO: explore pushshift.io, which looks like a better source of historical Reddit data.