SORSA: Singular Values and Orthonormal Regularized Singular Vectors Adaptation of Large Language Models

This repository contains the code for the experiments in the paper SORSA: Singular Values and Orthonormal Regularized Singular Vectors Adaptation of Large Language Models.

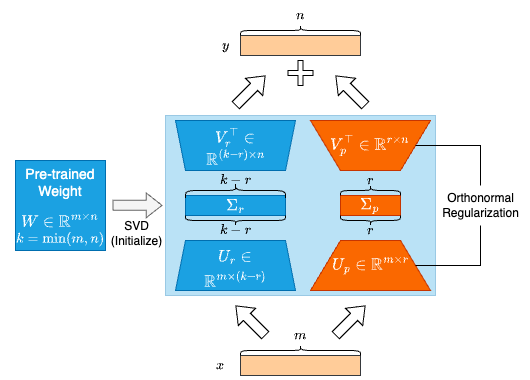

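Singular Values and Orthonormal Regularized Singular Vectors Adaptation, or SORSA, is a novel parameter-efficient fine-tuning (PEFT) method. Each SORSA layer consists of two main parts: trainable principal singular weights $W_p = U_p \text{diag}(S_p) V_p^\top$ and frozen residual weights $W_r = U_r \text{diag}(S_r) V_r^\top$, both initialized by performing singular value decomposition (SVD) on the pre-trained weights.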
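As a rough illustration of the idea, the sketch below splits a weight matrix by SVD into a rank-`r` principal part (the part that would be trained) and a residual (the part that would stay frozen), following the description in the paper. It uses NumPy rather than the repository's training code, and the function names, the toy matrix shape, and the exact form of the orthonormality penalty are assumptions made for illustration, not the implementation in `run.py`.

```python
import numpy as np


def sorsa_init(weight, rank):
    """Split `weight` into principal factors and a residual via SVD (illustrative)."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    u_p, s_p, vt_p = u[:, :rank], s[:rank], vt[:rank, :]
    # Residual: everything the top-`rank` singular triplets do not capture.
    w_r = weight - (u_p * s_p) @ vt_p
    return u_p, s_p, vt_p, w_r


def orthonormal_penalty(u_p, vt_p):
    """Assumed penalty: Frobenius distance of U_p^T U_p and V_p^T V_p from identity."""
    eye = np.eye(u_p.shape[1])
    return np.linalg.norm(u_p.T @ u_p - eye) + np.linalg.norm(vt_p @ vt_p.T - eye)


rng = np.random.default_rng(0)
w = rng.standard_normal((16, 12))  # toy stand-in for a pre-trained weight
u_p, s_p, vt_p, w_r = sorsa_init(w, rank=4)

# Principal part plus residual reproduces the original weight.
w_rebuilt = (u_p * s_p) @ vt_p + w_r
```

Right after initialization the singular vectors are orthonormal, so the penalty is numerically zero; during training a term of this kind would discourage them from drifting away from orthonormality.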

| Method | Trainable Parameters | MATH | GSM-8K |
|---|---|---|---|
| Full FT | 6738M | 7.22 | 49.05 |
| LoRA | 320M | 5.50 | 42.30 |
| PiSSA | 320M | 7.44 | 53.07 |
| SORSA | 320M | 10.36 | 56.03 |
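The 320M figure can be sanity-checked with a little arithmetic. The sketch below assumes that this table reports Llama 2 7B (the model named in the training command in this README) at rank 128, that all seven linear projections in every transformer block are adapted, and that each adapted layer trains `U_p`, `S_p` and `V_p`. The layer shapes are the published Llama 2 7B dimensions; the choice of adapted modules is an assumption, not something read from `run.py`.

```python
# Assumed Llama 2 7B dimensions; not taken from this repository.
HIDDEN, INTERMEDIATE, NUM_LAYERS = 4096, 11008, 32

# (out_features, in_features) for q, k, v, o, gate, up, down projections.
ADAPTED_SHAPES = (
    [(HIDDEN, HIDDEN)] * 4
    + [(INTERMEDIATE, HIDDEN)] * 2
    + [(HIDDEN, INTERMEDIATE)]
)


def trainable_params(shapes, rank, num_layers):
    """Count parameters in U_p (out x r), V_p (r x in) and S_p (r) per layer."""
    per_block = sum(rank * (out_f + in_f) + rank for out_f, in_f in shapes)
    return per_block * num_layers


total = trainable_params(ADAPTED_SHAPES, rank=128, num_layers=NUM_LAYERS)
print(f"{total / 1e6:.1f}M trainable parameters")
```

Under these assumptions the count comes to about 319.8M, which rounds to the 320M listed in the table.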

| Method | Trainable Parameters | MATH | GSM-8K |
|---|---|---|---|
| Full FT | 7242M | 18.60 | 67.02 |
| LoRA | 168M | 19.68 | 67.70 |
| PiSSA | 168M | 21.54 | 72.86 |
| SORSA | 168M | 21.86 | 73.09 |

First, install the packages via Anaconda:

    conda env create -f environment.yml

Download the MetaMathQA dataset from Hugging Face and put it into the ./datasets folder.
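One possible way to fetch the dataset is with the `huggingface_hub` package, as sketched below. The helper name `download_metamathqa` and the `./datasets/MetaMathQA` subdirectory are hypothetical; the layout that `run.py` actually expects inside `./datasets` is not stated here, so check it before training.

```python
def download_metamathqa(target_dir="./datasets/MetaMathQA"):
    """Fetch a snapshot of the MetaMathQA dataset repository into `target_dir`."""
    # Imported lazily so this module loads even before huggingface_hub is installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id="meta-math/MetaMathQA",  # dataset repository on the Hugging Face Hub
        repo_type="dataset",
        local_dir=target_dir,  # assumed location; adjust to what run.py expects
    )
```

Calling `download_metamathqa()` once returns the local path of the downloaded files.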

Run run.py with the hyperparameters from the paper to train:

    python3 run.py --run-path ./runs --name llama2_sorsa_r128 --model meta-llama/Llama-2-7b-hf --lr 3e-5 --wd 0.00 --batch-size 2 --accum-step 64 --gamma 4e-4 --rank 128 --epochs 1 --train --bf16 --tf32

After training, run the following command to merge the adapter into the base model:

    python3 run.py --run-path ./runs --name llama2_sorsa_r128 --merge

Run the following command to evaluate on GSM-8K:

    python3 run.py --run-path ./runs --name llama2_sorsa_r128 --test --gsm-8k --bf16

Run the following command to evaluate on MATH:

    python3 run.py --run-path ./runs --name llama2_sorsa_r128 --test --math --bf16

You can cite this work using the following BibTeX entry:

    @misc{cao2024sorsasingularvaluesorthonormal,
          title={SORSA: Singular Values and Orthonormal Regularized Singular Vectors Adaptation of Large Language Models},
          author={Yang Cao},
          year={2024},
          eprint={2409.00055},
          archivePrefix={arXiv},
          primaryClass={cs.LG},
          url={https://arxiv.org/abs/2409.00055},
    }