Event Stream-based Visual Object Tracking: A High-Resolution Benchmark Dataset and A Novel Baseline. Xiao Wang, Shiao Wang, Chuanming Tang, Lin Zhu, Bo Jiang, Yonghong Tian, Jin Tang (2023). arXiv preprint arXiv:2309.14611. [Paper] [Code] [DemoVideo]

Tracking using bio-inspired event cameras draws more and more attention in recent years. Existing works either utilize aligned RGB and event data for accurate tracking or directly learn an event-based tracker. The first category needs more cost for inference and the second one may be easily influenced by noisy events or sparse spatial resolution. In this paper, we propose a novel hierarchical knowledge distillation framework that can fully utilize multi-modal / multi-view information during training to facilitate knowledge transfer, enabling us to achieve high-speed and low-latency visual tracking during testing by using only event signals. Specifically, a teacher Transformer based multi-modal tracking framework is first trained by feeding the RGB frame and event stream simultaneously. Then, we design a new hierarchical knowledge distillation strategy which includes pairwise similarity, feature representation and response maps based knowledge distillation to guide the learning of the student Transformer network. Moreover, since existing event-based tracking datasets are all low-resolution (

- 🔥 [2024.03.12] A new long-term RGB-Event based visual object tracking benchmark dataset (termed FELT) is available at [Paper] [Code] [DemoVideo]

- 🔥 [2024.02.28] Our code, visualizations and other experimental results have been updated.

- 🔥 [2024.02.27] Our work is accepted by CVPR-2024!

- 🔥 [2023.12.04] EventVOT_eval_toolkit is released, available from EventVOT_eval_toolkit (Passcode:wsad)

- 🔥 [2023.09.26] arXiv paper, dataset, pre-trained models, and benchmark results are all released [arXiv]

A demo video is available on YouTube; click the image below to watch it:

A distillation framework for Event Stream-based Visual Object Tracking.
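The three distillation signals named in the abstract (pairwise similarity, feature representation, and response maps) can be illustrated with a small pure-Python sketch. This is not the authors' implementation: the choice of cosine similarity, mean squared error, and a softmax-based KL divergence, as well as every function name, the loss weights, and the temperature, are assumptions made for illustration only.

```python
import math


def cosine_similarity_matrix(tokens):
    """Pairwise cosine similarity between token feature vectors."""
    # A zero vector gets norm 1.0 to avoid division by zero.
    norms = [math.sqrt(sum(v * v for v in t)) or 1.0 for t in tokens]
    return [
        [sum(a * b for a, b in zip(ti, tj)) / (ni * nj)
         for tj, nj in zip(tokens, norms)]
        for ti, ni in zip(tokens, norms)
    ]


def mse(a, b):
    """Mean squared error between two equally shaped 2-D lists."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a flat list of scores."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(t_feat, s_feat, t_resp, s_resp,
                      weights=(1.0, 1.0, 1.0), temperature=1.0):
    """Weighted sum of three illustrative teacher-student terms.

    t_feat / s_feat: teacher / student token features (list of vectors).
    t_resp / s_resp: teacher / student flattened response-map scores.
    The weights and temperature are hypothetical hyperparameters.
    """
    similarity_term = mse(cosine_similarity_matrix(t_feat),
                          cosine_similarity_matrix(s_feat))
    feature_term = mse(t_feat, s_feat)
    response_term = kl_divergence(softmax(t_resp, temperature),
                                  softmax(s_resp, temperature))
    w_sim, w_feat, w_resp = weights
    return w_sim * similarity_term + w_feat * feature_term + w_resp * response_term
```

When the student reproduces the teacher exactly, all three terms vanish; any mismatch in features or response scores yields a positive loss. A real training setup would compute these terms on tensors with automatic differentiation.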

[HDETrack_S_ep0050.pth] Passcode:wsad

[Raw Results] Passcode:wsad

Install env

conda create -n hdetrack python=3.8

conda activate hdetrack

bash install.sh

Run the following command to set paths for this project

python tracking/create_default_local_file.py --workspace_dir . --data_dir ./data --save_dir ./output

After running this command, you can also modify paths by editing these two files

lib/train/admin/local.py # paths about training

lib/test/evaluation/local.py # paths about testing

Then, put the tracking datasets EventVOT in ./data.

Download the pre-trained MAE ViT-Base weights and put them under $/pretrained_models

Download the pre-trained teacher model CEUTrack_ep0050.pth and put it under $/pretrained_models

Download the trained model weights from [HDETrack_S_ep0050.pth] and put them under $/output/checkpoints/train/hdetrack/hdetrack_eventvot to run testing directly.

These weight files can also be downloaded from Dropbox.
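Before training or testing, it can help to confirm that the weight files landed where the steps above expect them. The following is a minimal sketch, assuming `$` denotes the project root; the helper name `missing_files` and the `EXPECTED` list are hypothetical, and the MAE ViT-Base file is left out because its file name is not stated here.

```python
from pathlib import Path

# Relative paths taken from the setup steps above. Add the MAE ViT-Base
# weight file under its actual name, which is not given in this README.
EXPECTED = [
    "pretrained_models/CEUTrack_ep0050.pth",
    "output/checkpoints/train/hdetrack/hdetrack_eventvot/HDETrack_S_ep0050.pth",
]


def missing_files(project_root, relative_paths):
    """Return the relative paths that do not exist as files under project_root."""
    root = Path(project_root)
    return [p for p in relative_paths if not (root / p).is_file()]
```

Calling `missing_files(".", EXPECTED)` from the project root returns an empty list when both files are in place, and otherwise lists the paths that still need to be downloaded.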

# train

python tracking/train.py --script hdetrack --config hdetrack_eventvot --save_dir ./output --mode single --nproc_per_node 1 --use_wandb 0

# test

python tracking/test.py hdetrack hdetrack_eventvot --dataset eventvot --threads 1 --num_gpus 1

Note: The speeds reported in our paper were tested on a single RTX 3090 GPU.

- Event Image version (train.zip 28.16GB, val.zip 703MB, test.zip 9.94GB)

💾 Baidu Netdisk: link:https://pan.baidu.com/s/1NLSnczJ8gnHqF-69bE7Ldg?pwd=wsad code:wsad

- Complete version (Event Image + Raw Event data, train.zip 180.7GB, val.zip 4.34GB, test.zip 64.88GB)

💾 Baidu Netdisk: link:https://pan.baidu.com/s/1ZTX7O5gWlAdpKmd4R9VhYA?pwd=wsad code:wsad

💾 Dropbox: https://www.dropbox.com/scl/fo/fv2e3i0ytrjt14ylz81dx/h?rlkey=6c2wk2z7phmbiwqpfhhe29i5p&dl=0

- If you want to download the dataset directly on the Ubuntu terminal using a script, please try this:

wget -O EventVOT_dataset.zip "https://www.dropbox.com/scl/fo/fv2e3i0ytrjt14ylz81dx/h?rlkey=6c2wk2z7phmbiwqpfhhe29i5p&dl=1"
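The wget command differs from the shared Dropbox link only in its `dl` query parameter (`dl=1` instead of `dl=0`). As a small sketch, that rewrite can be done programmatically with the standard library; the function name `direct_download_url` is hypothetical, and the sketch only builds the URL without downloading anything.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def direct_download_url(share_url):
    """Return share_url with its `dl` query parameter set to 1."""
    parts = urlsplit(share_url)
    # Keep the other query parameters (such as rlkey) in their original order.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query["dl"] = "1"
    return urlunsplit(parts._replace(query=urlencode(query)))
```

The resulting URL can then be passed, quoted, to wget or any other download tool.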

The directory should have the below format:

├── EventVOT dataset
    ├── Training Subset (841 videos, 180.7GB)
        ├── recording_2022-10-10_17-28-38
            ├── img
            ├── recording_2022-10-10_17-28-38.csv
            ├── groundtruth.txt
            ├── absent.txt
        ├── ...
    ├── Testing Subset (282 videos, 64.88GB)
        ├── recording_2022-10-10_17-28-24
            ├── img
            ├── recording_2022-10-10_17-28-24.csv
            ├── groundtruth.txt
            ├── absent.txt
        ├── ...
    ├── Validating Subset (18 videos, 4.34GB)
        ├── recording_2022-10-10_17-31-07
            ├── img
            ├── recording_2022-10-10_17-31-07.csv
            ├── groundtruth.txt
            ├── absent.txt
        ├── ...

Normally, we only need the "img" and "..._voxel" files from the EventVOT dataset for training. During testing, we only input "img" for inference, as shown in the following figure.

Note: Our EventVOT dataset is a unimodal event dataset. If you need a multimodal RGB-E dataset, please refer to [COESOT], [VisEvent], or [FELT].
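The per-sequence layout described above (an `img` folder, `groundtruth.txt`, `absent.txt`, and, in the complete version, a `.csv` file named after the recording) can be checked after extraction. The following is a minimal sketch; the function name `incomplete_sequences` and the `require_csv` switch are assumptions, and the `..._voxel` files are not checked because their exact naming is not given here.

```python
from pathlib import Path

# Entries every sequence folder is expected to contain, per the layout above.
REQUIRED = ("img", "groundtruth.txt", "absent.txt")


def incomplete_sequences(subset_dir, require_csv=False):
    """Map each sequence name to the entries it is missing.

    subset_dir: path to one subset folder (training, testing, or validating).
    require_csv: also expect '<sequence name>.csv', as in the complete version.
    Sequences with nothing missing are omitted from the result.
    """
    report = {}
    for seq in sorted(Path(subset_dir).iterdir()):
        if not seq.is_dir():
            continue
        missing = [name for name in REQUIRED if not (seq / name).exists()]
        if require_csv and not (seq / f"{seq.name}.csv").exists():
            missing.append(f"{seq.name}.csv")
        if missing:
            report[seq.name] = missing
    return report
```

An empty dictionary means every sequence folder in the subset has the expected entries.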

- Download the EventVOT_eval_toolkit from EventVOT_eval_toolki (Passcode:wsad), and open it with Matlab (over Matlab R2020).

- add your tracking results and baseline results (Passcode:wsad) in

$/eventvot_tracking_results/and modify the name in$/utils/config_tracker.m - run

Evaluate_EventVOT_benchmark_SP_PR_only.mfor the overall performance evaluation, including SR, PR, NPR. - run

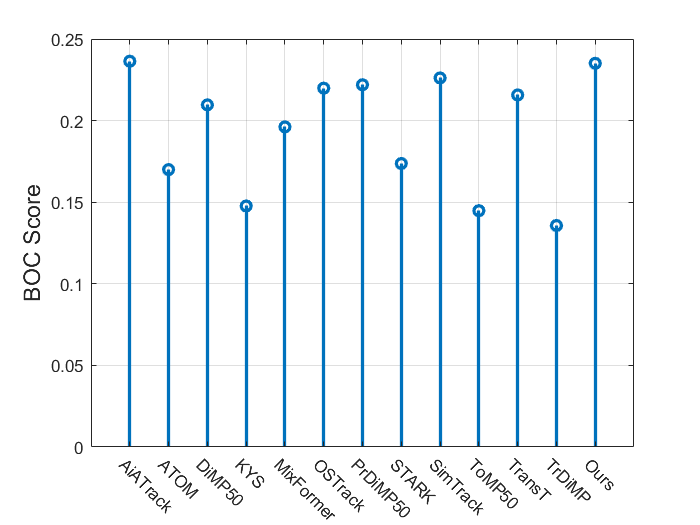

plot_BOC.mfor BOC score evaluation and figure plot. - run

plot_radar.mfor attributes radar figrue plot. - run

Evaluate_EventVOT_benchmark_attributes.mfor attributes analysis and figure saved in$/res_fig/.

The overall performance evaluation, including SR, PR, NPR.
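For readers unfamiliar with these metrics, the sketch below shows how SR, PR, and NPR are commonly computed in single-object tracking benchmarks. It is not a port of the MATLAB toolkit: the `[x, y, w, h]` box format, the 21 IoU thresholds, the 20-pixel precision threshold, and the 0.2 normalized threshold are assumptions based on common practice, and frames marked in `absent.txt` are not handled. Use the toolkit for reportable numbers.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as [x, y, w, h]."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_offset(gt, pred):
    """(dx, dy) between the centers of a ground-truth and a predicted box."""
    gx, gy, gw, gh = gt
    px, py, pw, ph = pred
    return (px + pw / 2) - (gx + gw / 2), (py + ph / 2) - (gy + gh / 2)


def success_rate(gts, preds, steps=21):
    """Area under the success curve: mean fraction of frames with IoU > t."""
    thresholds = [i / (steps - 1) for i in range(steps)]
    ious = [iou(g, p) for g, p in zip(gts, preds)]
    rates = [sum(v > t for v in ious) / len(ious) for t in thresholds]
    return sum(rates) / len(rates)


def precision_rate(gts, preds, threshold=20.0):
    """Fraction of frames whose center error is within `threshold` pixels."""
    errors = [math.hypot(*center_offset(g, p)) for g, p in zip(gts, preds)]
    return sum(e <= threshold for e in errors) / len(errors)


def normalized_precision_rate(gts, preds, threshold=0.2):
    """Like precision_rate, with the center error scaled by ground-truth size."""
    errors = []
    for g, p in zip(gts, preds):
        dx, dy = center_offset(g, p)
        errors.append(math.hypot(dx / g[2], dy / g[3]))
    return sum(e <= threshold for e in errors) / len(errors)
```

Because the success curve here uses a strict `IoU > t` comparison, a perfect tracker scores 20/21 rather than 1.0 under these assumed thresholds; other toolkits may use a different convention.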

- Thanks to the CEUTrack, OSTrack, PyTracking, and ViT libraries, which helped us implement this work quickly.

@inproceedings{wang2024event,

title={Event stream-based visual object tracking: A high-resolution benchmark dataset and a novel baseline},

author={Wang, Xiao and Wang, Shiao and Tang, Chuanming and Zhu, Lin and Jiang, Bo and Tian, Yonghong and Tang, Jin},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={19248--19257},

year={2024}

}