Abstract

Objective:

The objective of this study was to analyze bibliometric data from ISI, National Institutes of Health (NIH)–funding data, and faculty size information for Association of American Medical Colleges (AAMC) member schools during 1997 to 2007 to assess research productivity and impact.

Methods:

This study gathered and synthesized 10 metrics for almost all AAMC medical schools (n = 123): (1) total number of published articles per medical school, (2) total number of citations to published articles per medical school, (3) average number of citations per article, (4) institutional impact indices, (5) institutional percentages of articles with zero citations, (6) annual average number of faculty per medical school, (7) total amount of NIH funding per medical school, (8) average amount of NIH grant money awarded per faculty member, (9) average number of articles per faculty member, and (10) average number of citations per faculty member. Using principal components analysis, the author calculated the relationships between measures, if they existed.

Results:

Principal components analysis revealed 3 major clusters of variables that accounted for 91% of the total variance: (1) institutional research productivity, (2) research influence or impact, and (3) individual faculty research productivity. Depending on the variables in each cluster, medical school research may be appropriately evaluated in a more nuanced way. Significant correlations exist between extracted factors, indicating an interrelatedness of all variables. Total NIH funding may relate more strongly to the quality of the research than the quantity of the research. The elimination of medical schools with outliers in 1 or more indicators (n = 20) altered the analysis considerably.

Conclusions:

Though popular, ordinal rankings cannot adequately describe the multidimensional nature of a medical school's research productivity and impact. This study provides statistics that can be used in conjunction with other sound methodologies to provide a more authentic view of a medical school's research. The large variance of the collected data suggests that refining bibliometric data by discipline, peer groups, or journal information may provide a more precise assessment.

Highlights

Principal components analysis discovered three clusters of variables: (1) institutional research productivity, (2) research influence or impact, and (3) individual faculty research productivity.

The associations between size-independent measures (e.g., average number of citations/article) were more significant than associations between size-independent bibliometric measures and size-dependent (e.g., number of faculty) bibliometric measures and vice versa, except in the case of total National Institutes of Health (NIH) funding.

The factor coefficients, or loadings, for total NIH funding may associate more with the quality of research than with the quantity of research.

The removal of twenty outliers, fourteen highly productive or influential medical schools and six medical schools with relatively low research profiles, changed the results of the analysis significantly.

This study's broad institutional bibliometric data sets cannot be extrapolated to specific departments at the studied medical schools.

Implications

Librarians, administrators, and faculty should use several methodologies in tandem with bibliometric data when evaluating institutions' research impact and productivity.

Health sciences librarians should not make use of university rankings materials lacking strong methodological foundations.

This study's bibliometric data may provide a starting point or point of comparison for future assessments.

Introduction

Bibliometric statistics are used by institutions of higher education to evaluate the research quality and productivity of their faculty. On an individual level, tenure, promotion, and reappointment decisions are considerably influenced by bibliometric indicators, such as gross totals of publications and citations and journal impact factors [1–6]. At the departmental, institutional, or national level, bibliometrics inform funding decisions [1,7,8], develop benchmarks [1,9], and identify institutional strengths [1,10,11], collaborative research [1,12], and emerging areas of research [1,13,14]. Due to the important organizational and personnel decisions made from these analyses, these statistics and the concomitant rankings elicit controversy. Many scholars denounce the use of ISI's impact factor and immediacy index as well as citation counts in assessing a study's quality and influence. Major criticisms of reliance on bibliometric indicators include manipulation of impact factors by publishers, individual self-citations [15], uniqueness of disciplinary citation patterns [15,16], context of a citation [17], and deficient bibliometric analysis [18]. Many researchers condemn ISI for promoting and promulgating flawed and biased bibliometric data that rely on unsophisticated or limited methodologies [15,19,20], exclude the vast majority of the world's journals [15,19], and contain errors and inconsistency [15,21]. Conversely, other scholars point out the utility of bibliometric measures, even in light of valid criticisms, and posit that they accurately depict scholarly communication patterns [22–24], correlate with peer-review ratings [25], predict emerging fields of research [22], show disciplinary influences [26], and map various types of collaboration [22].

Assessment of medical schools and their research output has demonstrated no methodological uniformity. Arguably the most famous ranking of US medical schools is America's Best Graduate Schools, published by the magazine, U.S. News & World Report, which takes two research measures into account: total dollar amounts from National Institutes of Health (NIH) research grants and the average dollar amounts from NIH research grants per faculty member. This methodology also employs reputational assessments from medical and osteopathic school deans, residency program directors, or other school administrators; student body characteristics; and faculty-to-student ratios to formulate rankings [27]. Several methodological shortcomings have been identified with the U.S. News & World Report's rankings, including the predominance of reputational measures, omission of over half of the accredited medical schools, inadequate use of standard statistical methods, and absence of any bibliometric measure [28–30].

Although their methodologies differed, more rigorous studies addressed the issue of evaluating biomedical research. Over twenty-five years ago, McAllister and Narin appraised research at medical schools in the United States by comparing NIH funding and basic citation information [31]. More recently, McGaghie and Thompson argued that research output should be evaluated by grants and peer-reviewed publications as well [28]. Combining quantitative and qualitative methodologies, Wallin recommended sound bibliometric analysis paired with a peer review of the research's influence to evaluate research [32]. More sophisticated analyses have formulated novel bibliometric indicators from collected data. For example, British researchers recently concluded that new measures, such as “world scale values” and “relative esteem values,” used in tandem with journal impact factors, citation analysis, and participation on journal editorial boards best evaluate psychiatric researchers [33]. In assessing medical schools in Europe, Lewison used bibliographic and bibliometric data that tracked the amount of international collaboration, volume and increases of research output, “journal esteem factors,” systematic review percentages, and citations by patents [34]. Integrating several measures derived from a variety of sound methodological approaches might provide a nuanced and more accurate interpretation of a medical school's research output.

The objective of this study was to collect and examine bibliometric data, NIH-funding statistics, and faculty size information from Association of American Medical Colleges (AAMC) medical schools in the United States to identify some basic measures of research productivity and impact. In addition to presenting a general picture of a medical school's research, conducting a macro-level institutional analysis was intended to provide medical schools with potential benchmarks for future comparisons. Additionally, the author sought to analyze the multivariate relationships between the collected metrics to describe the relative association of individual measures with each other.

Methodology

Gathering Data

Employing raw citation data gathered and synthesized from ISI's online citation index, Web of Science, this study intended to provide measures that might describe research productivity of US medical schools that were AAMC members (n = 128). Web of Science contains bibliographic and bibliometric data from approximately 8,700 journals. In searching for research from specific medical schools, the author used broad searches in the Web of Science address field and limited them to the date range 1997–2007. Because the author used the search term, “Med*,” in most of the searches, he took precautions to eliminate articles dealing with veterinary medicine and media studies. The author subsequently refined the results by institution using the Analyze Results feature in Web of Science. Exercising due diligence in capturing all possible name variants of a medical school in Web of Science, the author linked proper names of medical schools (Weill Medical College) and institutional names (Cornell University) with a Boolean “OR” operator. Furthermore, Web of Science attributes the same status to all institutions listed in the address field regardless of primary authorship or total number of authors. The author did not use proportional or fractional attribution methods [35]. For example, an article with the primary author and nine coauthors from medical school A and one author from medical school B was credited equally to both medical schools in the article and citation counts.
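The difference between the whole-count attribution used here and the fractional methods that were not used can be illustrated with a minimal Python sketch. The function names and the single example article are hypothetical and are not drawn from the study's data.

```python
from collections import Counter

def whole_counts(articles):
    """Credit each school once per article, regardless of author share."""
    counts = Counter()
    for affiliations in articles:
        for school in set(affiliations):
            counts[school] += 1
    return counts

def fractional_counts(articles):
    """Credit each school in proportion to its share of the author list."""
    counts = Counter()
    for affiliations in articles:
        for school in affiliations:
            counts[school] += 1 / len(affiliations)
    return counts

# Hypothetical article: ten authors from school A, one from school B.
example = [["A"] * 10 + ["B"]]
whole = whole_counts(example)            # A and B each receive 1 article
fractional = fractional_counts(example)  # A receives 10/11, B receives 1/11
```

Under whole counting, both schools receive full credit for the article and its citations, which is the behavior described in the paragraph above.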

For each medical school search, a citation report was run in Web of Science on the refined results set to gather five measures:

the total number of published articles per medical school (a)

the total number of citations to published articles per medical school (c)

the average citations per article (a/c)

the institutional h-index (h)

the number of articles with zero citations per medical school

From these measures, the author calculated:

institutional impact indices (i)

institutional percentages of articles with zero citations (z)

The citation report feature in Web of Science limits users to results sets of fewer than 10,000 articles. Given that numerous medical schools exceeded 10,000 published articles in medicine and biomedical sciences, the author ran multiple citation reports for the most prolific institutions. Articles and the accompanying citation data from these institutions were harvested by refining the data by individual year and totaling the sums. These data were collected during the week of March 16 through March 22, 2008.

Using faculty information from AAMC [36] and institutional grant information from NIH [37], the following measures were retrieved or calculated:

total amount of NIH funding, 1997–2005, per medical school (t)

annual average number of faculty per medical school, 1997–2007 (f2007)

annual average number of faculty per medical school, 1997–2005 (f2005)

average amount of NIH grant money awarded in 1997–2005 per faculty member (t/f2005)

average number of articles per faculty member, 1997–2007 (a/f2007)

average number of citations per faculty member, 1997–2007 (c/f2007)
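The per-faculty measures listed above, along with the percentage of uncited articles (z), are simple ratios. The following Python sketch shows the arithmetic under stated assumptions: the function names and all input values are hypothetical and do not correspond to any school in the study.

```python
def per_faculty(total, average_faculty):
    """Divide an institutional total by the annual average faculty count."""
    if average_faculty <= 0:
        raise ValueError("average faculty count must be positive")
    return total / average_faculty

def percent_uncited(zero_cited_articles, total_articles):
    """Percentage of a school's articles that received no citations (z)."""
    if total_articles <= 0:
        raise ValueError("total articles must be positive")
    return 100 * zero_cited_articles / total_articles

# Hypothetical school: 12,000 articles, 240,000 citations,
# 1,800 uncited articles, and an annual average of 800 faculty.
articles_per_faculty = per_faculty(12_000, 800)    # analogous to a/f2007
citations_per_faculty = per_faculty(240_000, 800)  # analogous to c/f2007
z = percent_uncited(1_800, 12_000)
```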

Two caveats must be mentioned in regard to the NIH data. First, because NIH had published institutional funding data only through 2005 as of March 2008, the author calculated the average amount of NIH grant money awarded per faculty member (t/f2005) for only the nine-year period of 1997–2005. Second, NIH did not publish funding figures for 5 AAMC medical schools: Florida International University College of Medicine, Florida State University College of Medicine, Mayo Medical School, San Juan Bautista School of Medicine, and University of Central Florida College of Medicine. These 5 institutions were eliminated from the statistical analyses, resulting in 123 medical schools studied. Isolated data points or groups of points, such as the data from the aforementioned medical schools, exert powerful influence over variable correlations and can camouflage fundamental relationships that may exist.

Calculating Impact Index

Hirsch developed the h-index (h) originally to measure the quantitative and qualitative impact of the individual scholar's research [24]. The h-index is an indicator that is defined as the number of published papers, h, each of which has been cited by others at least h times. Universities that produce larger numbers of publications will generally command higher h-indices, demonstrating the metric's inherent size dependence. In this study, size-dependent variables included total number of publications (a), total number of citations (c), faculty size (f2007), and total NIH funding (t), which all favored larger medical schools. To allow comparative equity among variously sized medical schools, size-independent variables—such as average citations per article (a/c), institutional impact indices (i), institutional percentages of articles with zero citations (z), average amount of NIH grant money per faculty member (t/f2005), average number of articles per faculty member (a/f2007), and average number of citations per faculty member (c/f2007)—were necessary for faculty-level productivity and research impact analyses.
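The h-index definition given above can be expressed as a short Python sketch. The function name and the sample citation counts are illustrative assumptions, not values from this study.

```python
def h_index(citation_counts):
    """Largest h such that h papers have each been cited at least h times."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank   # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h

# Hypothetical publication set with five papers.
sample_h = h_index([10, 8, 5, 4, 3])
```

Because a set of papers can never have an h-index larger than the number of papers it contains, larger publication sets have more room to reach high values, which is the size dependence noted above.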

To mitigate the influence of the number of articles published, Molinari and Molinari devised a methodology to extend the utility of the h-index with a new metric, the impact index (i), to evaluate the research productivity of large groupings. This study applied Molinari and Molinari's methodology to retrieve and calculate size-independent bibliometric data. In their paper, the impact index (i) was calculated by dividing a set of published articles' h-index (h) by a factor (m) of the number of published articles (a). Molinari and Molinari computed 0.4 as the power law exponent, or the master curve (m), as it has been shown to be the universal growth rate for citations over time for large numbers of papers [38]. Thus, impact index was calculated to be:

$$ i = \frac{h}{a^{m}}, \qquad m = 0.4 $$

This equation characterizes the number of relatively high-impact articles at an institution in relation to all articles published at the same institution, producing a metric that is able to compare publication sets of differing sizes [38]. Recently, a similar study in the Proceedings of the National Academy of Sciences also applied Molinari and Molinari's methodology to calculate h-indices and impact indices of non-biomedical research for selected universities, laboratories, and government agencies in the United States [39].
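As a rough illustration of the impact index described above (the h-index divided by the number of articles raised to the power law exponent of 0.4), consider the following Python sketch. The function name and the example figures are hypothetical.

```python
def impact_index(h, articles, exponent=0.4):
    """Impact index: h-index scaled by the article count raised to `exponent`."""
    if articles <= 0:
        raise ValueError("article count must be positive")
    return h / articles ** exponent

# Hypothetical institution: h-index of 150 across 100,000 articles.
# 100,000 ** 0.4 is approximately 100, so the index is approximately 1.5.
example_index = impact_index(h=150, articles=100_000)
```

For a fixed h-index, a larger publication set yields a lower impact index, which is how the metric offsets the size advantage of prolific institutions.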

Analyzing Data

The author entered the collected and synthesized data into SPSS v.15. Because the ten indicators were dependent and interval variables, the author employed factor analysis to examine the relationships between variables. Factor analysis, a multivariate statistical technique, can ascertain the underlying structure of the variables' relationships by reducing them to a smaller number of hidden variables, known as factors. These factors, in turn, provide insight into how variables cluster together [40–42].

Problems arise with factor analysis when variables in the correlation matrix exhibit very high correlations, also known as multicollinearity. The author assumed multicollinearity existed in the data because size-independent variables were synthesized from size-dependent variables and because several significant bivariate linear correlations were present. SPSS calculated the Pearson's correlation coefficients (r) and the coefficients of determination (R²). Furthermore, statisticians suggest a very large sample size when conducting factor analysis. This study's sample size of 123 medical schools was a poor-to-fair sample size for common factor analysis [42]. SPSS was unable to calculate factors using principal axis, or common, factor analysis, so another method of factor analysis, principal components analysis, was utilized to extract factors. Analogous to common factor analysis, principal components analysis uncovers how many factors describe the set of variables, what the factors themselves are, and which variables form independent coherent groupings [41].
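The bivariate statistics mentioned above can be sketched in plain Python. This is only an illustration of how Pearson's r and the coefficient of determination are defined, not a reproduction of the SPSS output. The function name and the sample values are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson's product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical article and citation totals for five schools.
articles = [1200, 3400, 5600, 8000, 15000]
citations = [20000, 61000, 118000, 150000, 330000]
r = pearson_r(articles, citations)
r_squared = r ** 2  # coefficient of determination for a simple linear fit
```

Values of r close to 1 or −1 across many variable pairs are the kind of pattern that signals multicollinearity in a correlation matrix.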

After extracting the factor coefficients, or loadings, both orthogonal (using varimax technique) and oblique (using promax technique) rotations were performed to provide breadth to the principal components analysis. Rotational methods simplify the data interpretation by diversifying the variable loadings, thus showing more clearly which variables cluster together. Orthogonal rotations assume no correlations exist among factors, whereas oblique rotations presume that correlations do exist. When applied, the two types of rotations yield different results.
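The extraction and orthogonal rotation steps can be sketched with NumPy. This is a minimal illustration under several assumptions: the data are synthetic, the varimax routine is a commonly used SVD-based iteration and not SPSS's own implementation, and the oblique promax rotation is omitted. All function and variable names are hypothetical.

```python
import numpy as np

def pca_loadings(data, n_factors):
    """Loadings and explained-variance shares from a PCA of the correlation matrix."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)            # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_factors]      # keep the largest components
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    loadings = eigvecs * np.sqrt(eigvals)              # scale eigenvectors to loadings
    explained = eigvals / corr.shape[0]                # share of total variance
    return loadings, explained

def varimax(loadings, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation of a loading matrix (SVD-based iteration)."""
    n_vars, n_factors = loadings.shape
    rotation = np.eye(n_factors)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / n_vars
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt                              # product of orthogonal matrices
        new_criterion = s.sum()
        if new_criterion < criterion * (1 + tol):      # stop when improvement stalls
            break
        criterion = new_criterion
    return loadings @ rotation

# Synthetic data: 123 rows, six variables driven by two latent dimensions.
rng = np.random.default_rng(0)
size = rng.normal(size=(123, 1))
impact = rng.normal(size=(123, 1))
data = np.hstack([size + 0.3 * rng.normal(size=(123, 3)),
                  impact + 0.3 * rng.normal(size=(123, 3))])

loadings, explained = pca_loadings(data, n_factors=2)
rotated = varimax(loadings)
```

Because varimax is an orthogonal rotation, each variable's communality (the row sum of squared loadings) is unchanged. Only the distribution of loadings across factors shifts, which is what makes the clusters easier to read.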

Results

Collected and Synthesized Data

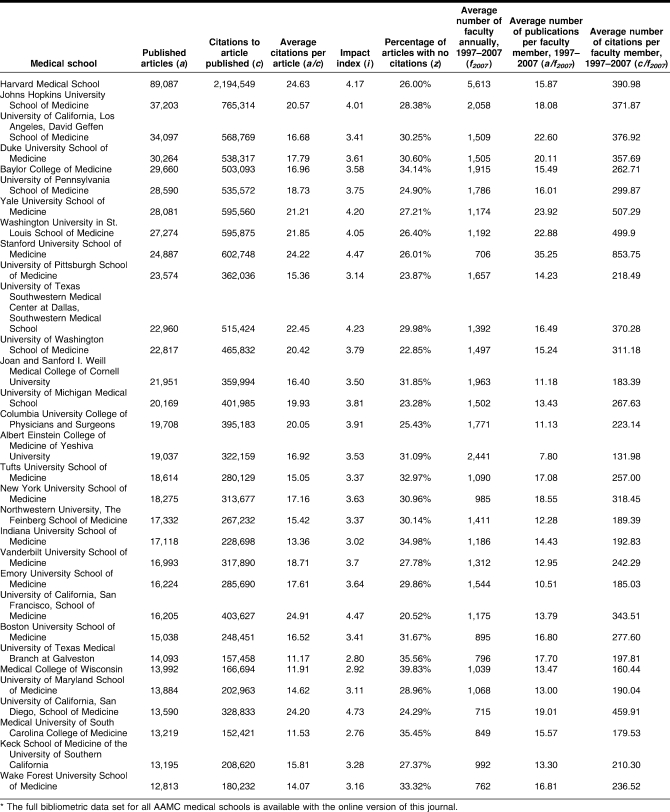

Table 1 provides the total number of published articles from 1997–2007 per medical school (a), total number of citations to those published articles per medical school (c), average number of citations per article (a/c), institutional impact index (i), and institutional percentage of articles with no citations (z) from the top quartile of medical schools in total published articles (n = 31). From 1997 to 2007, Harvard University Medical School published more than twice as many articles as any other medical school and garnered almost three times as many citations. Two of the three size-independent bibliometric measures, the institutional impact index (i) and the percentage of articles with no citations (z), were topped by the Mount Sinai School of Medicine of New York University. The University of California, San Francisco, School of Medicine had the highest average number of citations per article (a/c) with 24.91 citations per article. Additionally, dividing many of the collected metrics, such as publications (a) and citations (c), by the annual average of total faculty members (f2007) provided useful size-independent measures. Table 1 also illustrates these measures among the top quartile of the medical schools in total articles (a) (n = 31). Stanford University School of Medicine ranked highest in publications (35.25) and citations (853.75) per faculty member over 1997–2007.

Table 1.

Bibliometric data for the top quartile of Association of American Medical Colleges (AAMC) medical schools in published articles, 1997–2007 (n = 31)*

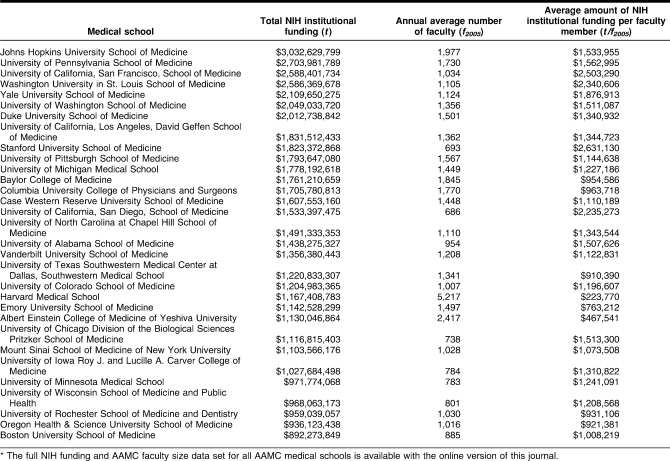

The 1997–2005 NIH funding and AAMC statistics from the top quartile of medical schools in total NIH funding (n = 31) are displayed in Table 2. From 1997 to 2005, Johns Hopkins Medical School garnered the most NIH funding with $3,032,629,799 total, and at $2,631,130 per faculty member, Stanford University Medical School faculty were awarded the most NIH funding on average. Harvard University Medical School annually averaged the highest number of faculty from 1997 to 2005 at 5,217 members. (The full bibliometric, NIH, and AAMC data sets for all 123 medical schools are available with the online version of this journal.)

Table 2.

National Institutes of Health (NIH) funding and AAMC faculty data for AAMC medical schools in the top quartile of total NIH funding, 1997–2005 (n = 31)*

Descriptive Statistics

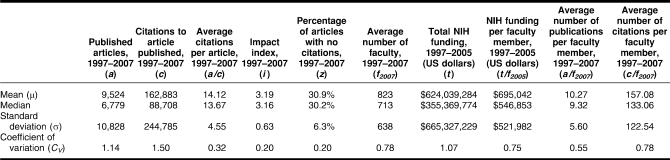

To demonstrate scale and variance of the data, Table 3 displays descriptive statistics such as the means, medians, standard deviations, and coefficients of variation for bibliometric, NIH, and AAMC data. The publication and citation histories across the studied medical schools showed a tremendous amount of variance for size-dependent variables. The standard deviations of published articles (a), citations to those articles (c), and total amounts of NIH funding (t) exceeded 100% of their means. Three size-independent variables, namely average citations per article (a/c), impact index (i), and percentage of articles with no citations (z), showed a smaller amount of variance, with relative standard deviations amounting to 32%, 20%, and 20% of their means, respectively.
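The relative standard deviation discussed above expresses the standard deviation as a percentage of the mean. A minimal Python sketch follows. It assumes the sample standard deviation is intended, and the function name and input values are hypothetical.

```python
from statistics import mean, stdev

def relative_std_dev(values):
    """Sample standard deviation as a percentage of the mean."""
    return 100 * stdev(values) / mean(values)

# Hypothetical indicator values for three schools: mean 20, sample SD 10.
example_rsd = relative_std_dev([10, 20, 30])
```

A result above 100, as reported for the size-dependent variables, means the spread of the data is larger than its average value.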

Table 3.

Means, medians, standard deviations, and coefficients of variation of the collected and synthesized bibliometric, NIH, and faculty size measures (n = 123)

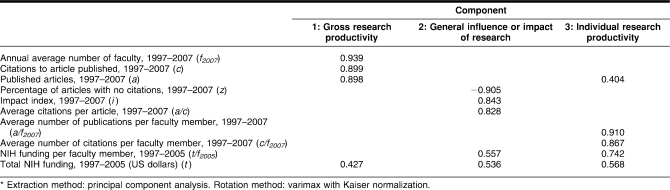

Principal Components Analysis

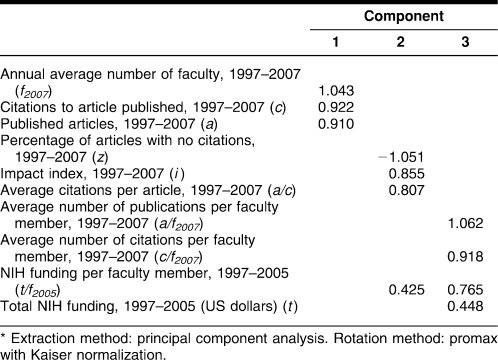

The relationships of the 10 studied indicators were analyzed via principal components analysis for 123 medical schools. For this data set, SPSS extracted 3 factors that explained 91% of the total variance. Table 4 illustrates the role of the variables in the composition of the factors rotated orthogonally using the varimax technique. The first factor, which accounted for 31% of the rotated variance, was highly associated with size-dependent measures: total articles (a), total citations (c), total number of faculty (f2007), and, to a lesser degree, total NIH funding (t). Responsible for 30% of the rotated variance, the second factor grouped citations per article (a/c), impact index (i), and percentage of articles with no citations (z), which were all size-independent measures, and to a lesser extent, total NIH funding (t). Finally, the third factor also accounted for 30% of the rotated variance and was influenced heavily by three size-independent measures: average number of published articles per faculty member (a/f2007), average number of citations per faculty member (c/f2007), and average NIH funding per faculty member (t/f2005). Total NIH funding (t) was most significantly connected with this final grouping.

Table 4.

Factor loadings from the rotated component matrix*

The oblique rotation offered a slightly different interpretation of the data. Table 5 displays the pattern matrix extracted from an oblique rotation using the promax technique. Theoretically, the extracted pattern matrix approximated the orthogonally rotated factor matrix (Table 4) as it measured one linear factor-variable relationship, the uniqueness of the relationship. The oblique pattern matrix revealed many of the same structural patterns and groupings modeled in the orthogonal factors matrix. However, the one major difference was that the total NIH funding (t) factor loadings only showed a significant association with the third grouping of variables. Oblique rotation also revealed that the three extracted factors correlated highly with each other.

Table 5.

Factor loadings from the pattern matrix*

Using both rotational analyses in tandem, the data summary provided three distinct clusters of variables. The first grouping of variables, labeled gross research productivity, comprised total articles (a), total citations (c), and total number of faculty (f2007). The second cluster reflected the general influence or impact of research and included citations per article (a/c), impact index (i), and percentage of articles with no citations (z). The variable, percentage of articles with no citations (z), displayed a negative correlation; thus, it decreased when other variables demonstrated positive growth. The third grouping described the size-independent variables that reflected research productivity on an individual level: average number of published articles per faculty member (a/f2007), average number of citations per faculty member (c/f2007), and average NIH funding per faculty member (t/f2005). Interestingly, total NIH funding (t) demonstrated a significant relationship with all three factors when the data were rotated orthogonally, but only with the individual productivity group with oblique rotation. Counterintuitively, the relationship between gross research productivity and total NIH funding (t) was the weakest relationship. Practically speaking, these data might show that the influence of research carried more weight than volume of research in NIH-funded awards to medical schools.

Discussion

Analysis of Collected and Synthesized Data

Despite methodological and data-specific limitations, the compiled data sets presented in Table 1 and Table 2 broadly characterize the research productivity and impact at AAMC medical schools from 1997 to 2007. Each metric characterizes research output distinctively. Size-dependent measures may quantify aspects of overall institutional productivity, while size-independent measures may describe a medical school's impact in the research community or productivity of individual faculty members. The principal components analysis combined with a perusal of the data reveals a general sense of the most productive or influential research faculties on an institutional and individual basis. Due to scalability and generalizability problems, a single metric synthesized from the studied indicators cannot faithfully assess the multifaceted nature of medical school research.

The most productive medical schools on an institutional level—Johns Hopkins University School of Medicine; Harvard Medical School; University of Pennsylvania School of Medicine; Washington University in St. Louis School of Medicine; University of California, Los Angeles, David Geffen School of Medicine; Yale University School of Medicine; and Stanford University School of Medicine—demonstrated high rankings in total number of published articles (a), total number of citations (c), total number of faculty (f2007), and total NIH funding (t).

Stanford University School of Medicine; Yale University School of Medicine; Washington University in St. Louis School of Medicine; University of California, San Diego, School of Medicine; University of California, Los Angeles, David Geffen School of Medicine; Johns Hopkins University School of Medicine; and Duke University School of Medicine appeared to have faculties who were among the most productive per person based on the data calculated for average number of published articles per faculty member (a/f2007), average number of citations per faculty member (c/f2007), average NIH funding per faculty member (t/f2005), and total NIH funding (t).

The size-independent measures of average citations per article (a/c), impact index (i), and percentage of articles not cited (z), together with the size-dependent measure of total NIH funding (t), might best describe the influence or impact of a medical school's research. Mount Sinai School of Medicine of New York University; University of Texas Medical School at San Antonio; University of California, San Francisco, School of Medicine; University of California, San Diego, School of Medicine; and University of Chicago Pritzker School of Medicine had faculties who produced very influential research, according to this study's data. It must be noted that the above-mentioned medical schools were not ranked or listed in any particular order.

Comparisons with Other Studies

Earlier analyses of biomedical and medical research typically focused on specific disciplines [34, 43–64], journals and the influence of journal impact factors [47,48,51,52,55,57,58,61,64,65], and national research performance of countries [44, 46–51, 53–55, 59–63,66,67]. Few studies concentrated on medical school research productivity and impact on an institutional level. The methods used by the investigators of these studies differed, so comparisons of data sets were complicated. Nevertheless, discrete correlations found in certain studies offered some points of comparisons with this research.

In 1983, McAllister and Narin found a much stronger linear correlation between total number of articles and NIH funding than this study (r = 0.95 versus r = 0.69, P<0.001). Changes in publishing behaviors, such as the enormous growth in the volume of scholarship, and in grant funding might explain this. They also discovered interconnectedness between impact metrics, size-dependent productivity metrics, and faculty perceptions of institutional quality [31]. More recently, a study on peritoneal dialysis publications found a significant correlation between article and citation counts (r = 0.63, P = 0.039) [46], though the correlation appeared somewhat weaker than this study's findings (r = 0.98, P<0.001). In concordance with this study's findings, British researchers concluded that research impact correlated positively to funding [68,69]. A recent JAMA study corroborated this relationship, discovering that funding over $20,000 generally indicated higher-quality medical education research [70]. Lewison also arrived at the same conclusion as this study: an assortment of statistical indicators is essential in institutional analyses as each indicator paints a very different picture of research [34].

Though not focused exclusively on medical schools, van Raan conducted a comprehensive institutional analysis using statistics from the 100 largest European universities. His research also found a strong correlative relationship between average citations per article (a/c) and percentage of articles that are not cited (z) (r = −0.92), which bolstered the current study's principal components analysis and basic linear correlation (r = −0.74, P<0.001). Other metrics from the current study exhibited similar associations with van Raan's power law analysis, including strong correlations between total articles (a) and total citations (c) (r = 0.98, P<0.001; van Raan, r = 0.89) and a negligible correlation between total articles (a) and percentage of articles that were not cited (z) (r = −0.255, P = 0.002; van Raan, r = 0.35) [71].

Implications for Medical Librarianship

This study's results can inform the provision of bibliometric services in health sciences libraries. Primarily, medical librarians may become aware of the nature of their institution's unique research profile in regard to gross productivity, individual productivity, research impact, and funding. Medical librarians may support administrative assessment efforts by providing benchmarks or preliminary data that can be used in intra-campus departmental analyses or larger intercampus comparisons. Furthermore, familiarity with the various evaluative methodologies of research metrics permits health sciences librarians to better evaluate their collections—particularly regarding resources dealing with the assessment of universities or medical schools. Beyond simple impact factors and citation counts, librarians may assist health sciences faculty, administrators, and departments with tenure and promotion dossiers and their analyses and provide educational offerings on the basics of bibliometrics. At the author's home institution, the health sciences library offers a workshop, “Tenure Metrics,” and a support wiki that covers cited reference searching for ten databases [72], among other services.

Methodological Limitations

Due to the nature of Web of Science's data set and capabilities, limitations to this methodology exist. For example, research linked to institution names that were misspelled or that used unfamiliar variants in the address field was not retrieved. Moreover, citation errors occur in all bibliographic fields in Web of Science; citations containing such errors were not accounted for under this methodology. In fact, Moed estimated that 7% of citations from ISI databases contain errors [21]. As stated in the methodology section, no fractional or proportional attribution techniques were applied in the case of multiple authors from different medical schools. Selective in its coverage, Web of Science does not track the citation histories of thousands of journals, proceedings, technical reports, and patents. It must be noted that the collected Web of Science data include self-citations, which may skew the resulting data. However, other bibliometric studies demonstrate that including self-citations has an insignificant effect on the overall results in macro-level studies [73,74].

NIH was the only source of grant funding data used in this study. Monies from private foundations, nongovernmental organizations, and other government departments were not taken into account. NIH grants used for programs other than research (i.e., training) were included. In regard to AAMC data, the association stresses that the numbers from its faculty roster are not the official numbers reported to the Liaison Committee on Medical Education, and its ongoing data collection methodology may report different faculty numbers [36].

Due to the outliers at both extremes, the variances of many metrics were high. The most notable outliers were data from the top-ranking medical schools for particular variables. For example, Harvard Medical School exceeded 3 standard deviations from the mean in total articles (a), total citations (c), and total number of faculty (f2007). Stanford University School of Medicine also surpassed 3 standard deviations from the mean in average NIH funding per faculty member (t/f2005), articles per faculty member (a/f2007), and citations per faculty member (c/f2007). Six schools exceeded 3 standard deviations from the mean in at least 1 indicator, and 20 schools exceeded 2 standard deviations from the mean in 1 or more indicators. The presence of these medical schools notably affected the data analysis. Eliminating the 20 schools that exceeded 2 standard deviations from the mean in at least 1 indicator allowed SPSS to run a principal axis factor analysis on the remaining 103 medical schools. This analysis extracted only 2 factors. The author observed a different pattern of variable clustering: total articles (a), total citations (c), average number of articles per faculty member (a/f2007), and average number of citations per faculty member (c/f2007) associated with the first factor, whereas average citations per article (c/a), impact index (i), percentage of articles with no citations (z), total NIH funding (t), and average NIH funding per faculty member (t/f2005) associated with the second factor.
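The outlier screen described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the study's SPSS procedure: the function name `flag_outliers`, the indicator names, and the toy values are hypothetical, and the use of the sample standard deviation is an assumption because the text does not say which form was used.

```python
from statistics import mean, stdev


def flag_outliers(table, threshold=2.0):
    """Return {school: [indicator, ...]} for values whose absolute z-score
    exceeds `threshold` standard deviations from the indicator's mean.

    table: {school: {indicator: value}}, with the same indicators per school.
    Uses the sample standard deviation (an assumption).
    """
    indicators = list(next(iter(table.values())).keys())
    flagged = {}
    for indicator in indicators:
        values = [row[indicator] for row in table.values()]
        mu, sigma = mean(values), stdev(values)
        if sigma == 0:
            continue  # no spread, so nothing can be an outlier
        for school, row in table.items():
            if abs(row[indicator] - mu) / sigma > threshold:
                flagged.setdefault(school, []).append(indicator)
    return flagged


# Hypothetical data: nine similar schools and one very large school.
table = {
    f"School {i}": {"articles": 10.0, "citations_per_article": float(i)}
    for i in range(1, 10)
}
table["School 10"] = {"articles": 100.0, "citations_per_article": 10.0}

flagged = flag_outliers(table, threshold=2.0)
kept = [school for school in table if school not in flagged]
print(flagged)
print(len(kept), "schools retained for the reduced analysis")
```

A school is dropped if it exceeds the threshold on any single indicator, which mirrors the "in at least 1 indicator" rule in the text. Raising `threshold` to 3.0 would correspond to the stricter screen that identified six schools.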

Given this study's intention of conducting a macro-analysis of bibliometric statistics, NIH funding data, and AAMC faculty data, results are necessarily broad. Furthermore, every discipline has a unique culture and its own citation rates [46], so the data cannot be extrapolated to specific departments at the medical schools studied. This limitation also applies to the calculation of impact indices, where the 0.4 m-curve may not exactly represent the universal growth rate for all biomedical disciplines. The use of indicator averages precludes the study of temporal qualities of the data, so potentially illustrative statistics, such as growth over time, have not been described.

Further Refinement and Study

To get a more focused picture of research productivity, the author suggests refining broad bibliometric data in various ways. Specifically, researchers could conduct bibliometric analyses at the school, departmental, or laboratory level. For instance, the general citation behaviors of pediatricians may be quite different from those of cell biologists. A great deal of research delves into the bibliometrics of medical specialties and subspecialties [6, 34, 43–64]. Furthermore, the author believes the high data variance, especially in size-dependent measures, requires researchers to compare medical schools with similarly sized institutions to generate valid associative assessments. This is evident in the differences between analyses when a group of the highest and lowest performing medical schools was removed from the population.

Metrics synthesized from journal prestige information, such as ISI's impact factors and Eigenfactor's Article Influence [75], were not taken into account in this study but can also shed a different light on research productivity. Mathematically, modeling bibliometric data according to a power law distribution [76] may reveal another view of the data. Commercially, ISI provides citation analysis products, University Science Indicators and Essential Science Indicators, which allow users to customize and mine their extensive data sets. ISI's web-based newsletter, ScienceWatch, discusses several bibliometric-related topics such as emerging research fronts, top researchers in specific fields, and “hottest” papers [77]. Other resources, such as Google Scholar, Scopus, Citebase, and other subject-specific databases, can also provide supplementary citation information mined from the web, journals not indexed by ISI, patents, and gray literature.
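As a rough illustration of the power law modeling mentioned above, the sketch below applies the maximum likelihood estimator for a continuous power law given in Newman [76], alpha = 1 + n / Σ ln(x_i / x_min), with approximate standard error (alpha − 1) / √n. The function name `powerlaw_alpha`, the choice of `x_min`, and the sample citation counts are assumptions for illustration only. Citation counts are discrete, so the continuous estimator is only an approximation here.

```python
import math


def powerlaw_alpha(values, x_min):
    """Estimate the power law exponent for the tail x >= x_min.

    Returns (alpha, standard_error, n_tail) using the continuous
    maximum likelihood estimator; x_min must be chosen by the analyst.
    """
    if x_min <= 0:
        raise ValueError("x_min must be positive")
    tail = [x for x in values if x >= x_min]
    log_sum = sum(math.log(x / x_min) for x in tail)
    if log_sum == 0:
        raise ValueError("need at least one value strictly above x_min")
    n = len(tail)
    alpha = 1.0 + n / log_sum
    stderr = (alpha - 1.0) / math.sqrt(n)
    return alpha, stderr, n


# Hypothetical citation counts for one school's articles.
citations = [1, 1, 2, 2, 3, 4, 5, 8, 13, 40, 120]
alpha, stderr, n_tail = powerlaw_alpha(citations, x_min=2)
print(f"alpha = {alpha:.2f} +/- {stderr:.2f} (n = {n_tail})")
```

The estimate is sensitive to `x_min`, so any real analysis would need a principled way to select it and a goodness-of-fit check before treating the data as power law distributed.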

Conclusion

Despite the commercial allure of medical school rankings, no one statistic or ranking system can distill the complexity and diversity of a medical school's research output. As this study reveals, medical schools may appear strong in a few bibliometric categories and weak in others. Thus, researchers must be cognizant of the nuances of each statistical indicator. Is it size dependent or size independent? Are the data on an ordinal scale or interval scale? Does the indicator measure institutional productivity, individual productivity, overall research impact, or reputation? What statistical tests were applied?

Applying the measures employed in this study in combination with other evaluative methodologies potentially gives a broad, robust, and reasonable overview of an institution's research. The different results from a range of statistical tests and methodologies bolster the aforementioned notion of utilizing a variety of data sources and synthesized statistics when evaluating research productivity. Overall measures as well as the individual statistics for each school, department, and researcher may provide medical school administrators, faculty, and medical librarians an estimate of their faculty's research productivity and influence or points of comparison that can be used in benchmarking. However, quantitative measures should not be used alone. Intention, subjectivity, and professional opinion remain vital parts of the analysis process.

References

- 1. Borgman C.L., Furner J. Scholarly communication and bibliometrics. Annu Rev Inform Sci Technol. 2002;36:3–72.

- 2. Garfield E. How to use citation analysis for faculty evaluations, and when is it relevant? part 1. In: Garfield E. Essays of an information scientist. v.6. Philadelphia, PA: ISI Press; 1983. pp. 354–62.

- 3. Garfield E. How to use citation analysis for faculty evaluations, and when is it relevant? part 2. In: Garfield E. Essays of an information scientist. v.6. Philadelphia, PA: ISI Press; 1983. pp. 363–72.

- 4. Cronin B., Atkins H.B. The scholar's spoor. In: Cronin B., Atkins H.B., editors. The web of knowledge: a festschrift in honor of Eugene Garfield. Medford, NJ: Information Today; 2000. pp. 1–7.

- 5. Epstein R.J. Journal impact factors do not equitably reflect academic staff performance in different medical subspecialties. J Investig Med. 2004 Dec;52(8):531–6. doi: 10.1136/jim-52-08-25.

- 6. Maunder R.G. Using publication statistics for evaluation in academic psychiatry. Can J Psychiatry. 2007 Dec;52(12):790–7. doi: 10.1177/070674370705201206.

- 7. Murphy P.S. Journal quality assessment for performance-based funding. Assess Eval High Educ. 1998 Mar;23(1):25–31.

- 8. Lewison G., Cottrell R., Dixon D. Bibliometric indicators to assist the peer review process in grant decisions. Res Eval. 1999 Apr;8(1):47–52.

- 9. Noyons E.C.M., Moed H.F., Luwel M. Combining mapping and citation analysis for evaluative bibliometric purposes: a bibliometric study. J Am Soc Inf Sci. 1999 Feb;50(2):115–31.

- 10. Huang M.H., Chang H.W., Chen D.Z. Research evaluation of research-oriented universities in Taiwan from 1993 to 2003. Scientometrics. 2006 Jun;67(3):419–35.

- 11. Schummer J. The global institutionalization of nanotechnology research: a bibliometric approach to the assessment of science policy. Scientometrics. 2007 Mar;70(3):669–92.

- 12. Garfield E. What citations tell us about Canadian research. Can J Info Libr Sci. 1993 Dec;18(4):14–35.

- 13. Leydesdorff L., Cozzens S., Vandenbesselaar P. Tracking areas of strategic importance using scientometric journal mappings. Res Pol. 1994 Mar;23(2):217–29.

- 14. Hinze S. Bibliographical cartography of an emerging interdisciplinary scientific field: the case of bioelectronics. Scientometrics. 1994 Mar;29(3):353–76.

- 15. Seglen P.O. Why the impact factor of journals should not be used for evaluating research. BMJ. 1997 Feb 15;314(7079):498–502. doi: 10.1136/bmj.314.7079.497.

- 16. Coleman R. Impact factors: use and abuse in biomedical research. Anat Rec B New Anat. 1999 Apr;257(2):54–7. doi: 10.1002/(SICI)1097-0185(19990415)257:2<54::AID-AR5>3.0.CO;2-P.

- 17. Shadish W.R., Tolliver D., Gray M., Gupta S.K.S. Author judgements about works they cite: three studies from psychology journals. Soc Stud Sci. 1995 Aug;25(3):477–98.

- 18. Weingart P. Impact of bibliometrics upon the science system: inadvertent consequences. Scientometrics. 2005 Jan;62(1):117–31.

- 19. Walter G., Bloch S., Hunt G., Fisher K. Counting on citations: a flawed way to measure quality. Med J Aust. 2003 Mar;178(6):280–1. doi: 10.5694/j.1326-5377.2003.tb05196.x.

- 20. Loonen M.P.J., Hage J.J., Kon M. Value of citation numbers and impact factors for analysis of plastic surgery research. Plast Reconstr Surg. 2007 Dec;120(7):2082–91. doi: 10.1097/01.prs.0000295971.84297.b7.

- 21. Moed H.F. The impact-factors debate: the ISI's uses and limits. Nature. 2002 Feb;415(6873):731–2. doi: 10.1038/415731a.

- 22. Raan A.F.J. Advanced bibliometric methods as quantitative core of peer review based evaluation and foresight exercises. Scientometrics. 1996 Jul;36(3):397–420.

- 23. Aksnes D.W. Citation rates and perceptions of scientific contribution. J Am Soc Inf Sci Technol. 2006 Jan;57(2):169–85.

- 24. Hirsch J.E. An index to quantify an individual's scientific research output. Proc Natl Acad Sci U S A. 2005 Nov;102(46):16569–72. doi: 10.1073/pnas.0507655102.

- 25. Oppenheim C. The correlation between citation counts and the 1992 research assessment exercise ratings for British research in genetics, anatomy and archaeology. J Doc. 1997;53(5):477–87.

- 26. Schoonbaert D., Roelants G. Citation analysis for measuring the value of scientific publications: quality assessment tool or comedy of errors. Trop Med Int Health. 1996 Dec;1(6):739–52. doi: 10.1111/j.1365-3156.1996.tb00106.x.

- 27. U.S. News & World Report. America's best graduate schools. 2007 ed. Washington, DC: U.S. News & World Report; 2007. Schools of medicine; pp. 34–6.

- 28. McGaghie W.C., Thompson J.A. America's best medical schools: a critique of the U.S. News & World Report rankings. Acad Med. 2001 Oct;76(10):985–92. doi: 10.1097/00001888-200110000-00005.

- 29. National Opinion Research Center. A review of the methodology for the U.S. News & World Report's rankings of undergraduate colleges and universities [Internet] Wash Monthly. [rev. 2003; cited 4 Apr 2008]. < http://www.washingtonmonthly.com/features/2000/norc.html>.

- 30. Webster T.J. A principal component analysis of the U.S. News & World Report tier rankings of colleges and universities. Econ Educ Rev. 2001 Jun;20(3):235–44.

- 31. McAllister P.R., Narin F. Characterization of the research papers of US medical schools. J Am Soc Inf Sci. 1983 Mar;34(2):123–31. doi: 10.1002/asi.4630340205.

- 32. Wallin J.A. Bibliometric methods: pitfalls and possibilities. Basic Clin Pharmacol Toxicol. 2005 Nov;97(5):261–75. doi: 10.1111/j.1742-7843.2005.pto_139.x.

- 33. Lewison G., Thornicroft G., Szmukler G., Tansella M. Fair assessment of the merits of psychiatric research. Br J Psychiatry. 2007 Apr;190(4):314–8. doi: 10.1192/bjp.bp.106.024919.

- 34. Lewison G. New bibliometric techniques for the evaluation of medical schools. Scientometrics. 1998 Jan;41(1–2):5–16.

- 35. Schubert A., Glanzel W., Thijs B. The weight of author self-citations. a fractional approach to self-citation counting. Scientometrics. 2006 Jun;67(3):503–14.

- 36. Association of American Medical Colleges. Washington, DC: The Association [rev. 2008; cited 24 Mar 2008]; Reports available through faculty roster, 2008 [Internet] < http://www.aamc.org/data/facultyroster/reports.htm>.

- 37. National Institutes of Health. Bethesda, MD: The Institutes [rev. 2007; cited 24 Mar 2008]; Award trends [Internet] < http://www.grants.nih.gov/grants/award/awardtr.htm>.

- 38. Molinari J.F., Molinari A. A new methodology for ranking scientific institutions. Scientometrics. 2008 Apr;75(1):163–74.

- 39. Kinney A.L. National scientific facilities and their science impact on nonbiomedical research. Proc Natl Acad Sci U S A. 2007 Nov;104(46):17943–7. doi: 10.1073/pnas.0704416104.

- 40. Child D. The essentials of factor analysis. 3rd ed. London, UK: Continuum International Publishing Group; 2006.

- 41. Kline P. An easy guide to factor analysis. London, UK: Routledge; 1994.

- 42. Comrey A.L., Lee H.B. A first course in factor analysis. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates; 1992.

- 43. Bansard J.Y., Rebholz-Schuhmann D., Cameron G., Clark D., van Mulligen E., Beltrame F., Barbolla E.D., Martin-Sanchez F., Milanesi L., Tollis I., van der Lei J., Coatrieux J.L. Medical informatics and bioinformatics: a bibliometric study. IEEE T Inf Technol Biomed. 2007 May;11(3):237–43. doi: 10.1109/TITB.2007.894795.

- 44. Horta H., Veloso F.M. Opening the box: comparing EU and US scientific output by scientific field. Technol Forecast Soc Change. 2007 Oct;74(8):1334–56.

- 45. Lewison G. The definition and calibration of biomedical subfields. Scientometrics. 1999 Nov;46(3):529–37.

- 46. Chen T.W., Chou L.F., Chen T.J. World trend of peritoneal dialysis publications. Perit Dial Int. 2007 Mar;27(2):173–8.

- 47. Garcia-Garcia P., Lopez-Munoz F., Callejo J., Martin-Agueda B., Alamo C. Evolution of Spanish scientific production in international obstetrics and gynecology journals during the period 1986–2002. Eur J Obstet Gynecol Reprod Biol. 2005 Dec;123(2):150–6. doi: 10.1016/j.ejogrb.2005.06.039.

- 48. Glover S.W., Bowen S.L. Bibliometric analysis of research published in Tropical Medicine and International Health 1996–2003. Trop Med Int Health. 2004 Dec;9(12):1327–30. doi: 10.1111/j.1365-3156.2004.01331.x.

- 49. Lazarides M.K., Nikolopoulos E.S., Antoniou G.A., Georgiadis G.S., Simopoulos C.E. Publications in vascular journals: contribution by country. Eur J Vasc Endovasc Surg. 2007 Aug;34(2):243–5. doi: 10.1016/j.ejvs.2007.03.008.

- 50. Lewison G., Grant J., Jansen P. International gastroenterology research: subject areas, impact, and funding. Gut. 2001 Aug;49(2):295–302. doi: 10.1136/gut.49.2.295.

- 51. Lopez-Illescas C., de Moya-Anegon F., Moed H.F. The actual citation impact of European oncological research. Eur J Cancer. 2008 Jan;44(2):228–36. doi: 10.1016/j.ejca.2007.10.020.

- 52. Lundberg J., Brommels M., Skar J., Tomson G. Measuring the validity of early health technology assessment: bibliometrics as a tool to indicate its scientific basis. Int J Technol Assess Health Care. 2008;24(1):70–5. doi: 10.1017/S0266462307080099.

- 53. Mela G.S., Cimmino M.A., Ugolini D. Impact assessment of oncology research in the European Union. Eur J Cancer. 1999 Aug;35(8):1182–6. doi: 10.1016/s0959-8049(99)00107-0.

- 54. Mela G.S., Mancardi G.L. Neurological research in Europe, as assessed with a four-year overview of neurological science international journals. J Neurol. 2002 Apr;249(4):390–5. doi: 10.1007/s004150200027.

- 56. Nieminen P., Isohanni M. The use of bibliometric data in evaluating research on therapeutic community for addictions and in psychiatry. Subst Use Misuse. 1997;32(5):555–70. doi: 10.3109/10826089709027312.

- 57. Nieminen P., Rucker G., Miettunen J., Carpenter J., Schumacher M. Statistically significant papers in psychiatry were cited more often than others. J Clin Epidemiol. 2007 Sep;60(9):939–46. doi: 10.1016/j.jclinepi.2006.11.014.

- 58. Oelrich B., Peters R., Jung K. A bibliometric evaluation of publications in urological journals among European Union countries between 2000–2005. Eur Urol. 2007 Oct;52(4):1238–48. doi: 10.1016/j.eururo.2007.06.050.

- 59. Schoonbaert D. Citation patterns in tropical medicine journals. Trop Med Int Health. 2004 Nov;9(11):1142–50. doi: 10.1111/j.1365-3156.2004.01327.x.

- 60. Skram U., Larsen B., Ingwersen P., Viby-Mogensen J. Scandinavian research in anaesthesiology 1981–2000: visibility and impact in EU and world context. Acta Anaesthesiol Scand. 2004 Sep;48(8):1006–13. doi: 10.1111/j.1399-6576.2004.00447.x.

- 61. Ugolini D., Casilli C., Mela G.S. Assessing oncological productivity: is one method sufficient. Eur J Cancer. 2002 May;38(8):1121–5. doi: 10.1016/s0959-8049(02)00025-4.

- 62. Ugolini D., Mela G.S. Oncological research overview in the European Union a 5-year survey. Eur J Cancer. 2003 Sep;39(13):1888–94. doi: 10.1016/s0959-8049(03)00431-3.

- 63. Falagas M.E., Karavasiou A.I., Bliziotis I.A. A bibliometric analysis of global trends of research productivity in tropical medicine. Acta Trop. 2006 Oct;99(2–3):155–9. doi: 10.1016/j.actatropica.2006.07.011.

- 64. Tsay M.Y., Chen Y.L. Journals of general & internal medicine and surgery: an analysis and comparison of citation. Scientometrics. 2005 Jul;64(1):17–30.

- 65. Lewison G., Paraje G. The classification of biomedical journals by research level. Scientometrics. 2004 Jun;60(2):145–57.

- 66. Luwel M. A bibliometric profile of Flemish research in natural, life and technical sciences. Scientometrics. 2000 Feb;47(2):281–302.

- 67. Lewison G. Beyond outputs: new measures of biomedical research impact. Aslib Proc. 2003;55(1/2):32–42.

- 68. Lewison G., Dawson G. The effect of funding on the outputs of biomedical research. Scientometrics. 1998 Jan;41(1):17–27.

- 69. Dawson G., Lucocq B., Cottrell R., Lewison G. Mapping the landscape: national biomedical research outputs 1988–95. policy report [Internet] London, UK: The Wellcome Trust [rev. 1998; cited 22 May 2008]; <http://www.wellcome.ac.uk/stellent/groups/corporatesite/@policy_communications/documents/web_document/wtd003187.pdf>.

- 70. Lee K.P., Schotland M., Bacchetti P., Bero L.A. Association of journal quality indicators with methodological quality of clinical research articles. JAMA. 2002 Jun;287(21):2805–8. doi: 10.1001/jama.287.21.2805.

- 71. van Raan A.F.J. Bibliometric statistical properties of the 100 largest European research universities: prevalent scaling rules in the science system. J Am Soc Inf Sci Technol. 2008 Feb;59(3):461–75.

- 72. Health Sciences Library. Health Sciences Library wiki: tenure metrics: cited reference searching [Internet] Buffalo, NY: State University of New York at Buffalo Health Sciences Library [rev. 2008; cited 22 May 2008]; < http://libweb.lib.buffalo.edu/dokuwiki/hslwiki/doku.phpidtenure_metrics>.

- 73. Glanzel W., Debackere K., Thijs B., Schubert A. A concise review on the role of author self-citations in information science, bibliometrics and science policy. Scientometrics. 2006 May;67(2):263–77.

- 74. Thijs B., Glanzel W. The influence of author self-citations on bibliometric meso-indicators: the case of European universities. Scientometrics. 2005 Dec;66(1):71–80.

- 75. Bergstrom C., West J., Althouse B., Rosvall M., Bergstrom T. Eigenfactor: detailed methods [Internet] Seattle, WA: Eigenfactor.org [rev. 2007; cited 15 May 2008]; < http://www.eigenfactor.org/methods.pdf>.

- 76. Newman M.E.J. Power laws, Pareto distributions and Zipf's law. Cont Phys. 2005 Sep;46(5):323–51.

- 77. ScienceWatch.com. Tracking trends & performance in basic research [Internet] Philadelphia, PA: Thomson Reuters [rev. 2008; cited 16 May 2008]; < http://www.sciencewatch.com>.
