Meta Llama 3.1: The Comprehensive Guide to the Best Open-Source AI Model

Introduction to Meta Llama 3.1

Meta Llama 3.1 is an advanced open-source language model developed by Meta, designed for flexibility, control, and superior performance. Available in 8B, 70B, and 405B parameter sizes, it supports a 128K token context length and excels in general knowledge, steerability, math, tool use, and multilingual translation. Trained on over 15 trillion tokens, it leverages synthetic data generation and model distillation to enhance its capabilities.

Meta’s commitment to open-source AI ensures Llama 3.1 is widely accessible on platforms like AWS and Hugging Face. With tools like Llama Guard 3 and Prompt Guard, it offers enhanced security and safety, enabling responsible use and innovation. This makes Llama 3.1 a powerful tool for developers and researchers seeking to leverage the latest advancements in AI.

Key Features of Llama 3.1

1、Expanded Context Length

Llama 3.1 supports a context length of up to 128K tokens, so long documents and extended multi-turn conversations can fit in a single prompt.

2、Multilingual Support

The model excels in multilingual translation, supporting languages such as English, French, German, Spanish, and more, making Llama 3.1 ideal for global applications.

3、Llama 3.1 405B Model

Llama 3.1 405B is one of the largest open-source models available, offering strong capabilities in general knowledge, steerability, and tool use.

4、Synthetic Data Generation

The model leverages synthetic data generation to improve training and performance, making Llama 3.1 highly efficient for various AI tasks.

5、Model Distillation

Llama 3.1 supports model distillation, allowing for the creation of smaller, more efficient models while maintaining high performance and accuracy.

6、Open-Source Accessibility

As an open-source model, Llama 3.1 is accessible on platforms like AWS and Hugging Face, allowing for use and customization by developers.

How to Access Llama 3.1

Llama 3.1 can be accessed directly on the Meta website and is also available on major platforms such as AWS (including Amazon Bedrock) and Hugging Face, ensuring broad support for deployment and use in various applications.

1、Accessing Llama 3.1 on Meta

Users can try Llama 3.1 directly through Meta's own products, such as the Meta AI site and WhatsApp, for example by asking it challenging math or coding questions.

2、Accessing Llama 3.1 on Hugging Face

On Hugging Face, users need to agree to the LLAMA 3.1 COMMUNITY LICENSE AGREEMENT and share contact information. Follow the platform’s guidelines to download, deploy, and integrate the models seamlessly, including the powerful Llama 3.1 405B model.

3、Accessing Llama 3.1 on AWS and Amazon Bedrock

Developers can access Llama 3.1 through AWS, specifically via Amazon Bedrock. Navigate to the Amazon Bedrock console, select the Llama 3.1 models, and follow the provided instructions to request access and begin deployment in a secure and reliable environment.
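Once access has been granted in the console, a request can also be sent from code. The sketch below is a minimal example, assuming the `boto3` SDK and its Bedrock Runtime `converse` call. The model ID and region are assumptions and should be checked against the model list in your own Bedrock console. The helper names `build_converse_request` and `ask_llama_on_bedrock` are hypothetical.

```python
def build_converse_request(prompt, model_id="meta.llama3-1-8b-instruct-v1:0",
                           max_tokens=256, temperature=0.5):
    """Build keyword arguments for the Bedrock Runtime `converse` call.

    The default model_id is an assumption; confirm it in the Bedrock console.
    """
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_llama_on_bedrock(prompt, region_name="us-west-2"):
    """Send one prompt to Llama 3.1 on Amazon Bedrock and return the reply text.

    Requires AWS credentials and approved model access in the chosen region
    (the region default here is an assumption).
    """
    import boto3  # imported lazily so the helper above works without the SDK

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]
```

Calling `ask_llama_on_bedrock("Explain the Pythagorean theorem in one sentence.")` would return the model's reply as a string, provided the account has been granted access to the model.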

Access Llama 3.1 on Hugging Face

Llama 3.1 is available on the Hugging Face Hub. To use it, first agree to the LLAMA 3.1 COMMUNITY LICENSE AGREEMENT and provide your contact information to gain access. Then find the Llama 3.1 repository on the Hub and download the model weights and supporting files. The Hugging Face Transformers library can then load and deploy any of the variants, including Llama 3.1 405B, so that your projects can take advantage of the model's 128K token context length and multilingual support.
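The download step can be scripted. The following is a minimal sketch, assuming the `huggingface_hub` package is installed and that your account has already been granted access to the gated repository. The repository naming pattern is an assumption based on the `meta-llama` organization's listing at release and should be verified on the Hub. The helper names are hypothetical.

```python
def llama31_repo_id(size="8B", instruct=True):
    """Return the assumed Hub repository name for a Llama 3.1 checkpoint."""
    size = size.upper()
    if size not in {"8B", "70B", "405B"}:
        raise ValueError(f"unknown Llama 3.1 size: {size}")
    suffix = "-Instruct" if instruct else ""
    # Naming pattern is an assumption; check the model page before relying on it.
    return f"meta-llama/Meta-Llama-3.1-{size}{suffix}"


def download_llama31(size="8B", token=None):
    """Download a checkpoint into the local Hugging Face cache and return its path.

    `token` is a Hugging Face access token; if None, the token saved by a
    previous CLI login is used.
    """
    from huggingface_hub import snapshot_download  # lazy import

    return snapshot_download(repo_id=llama31_repo_id(size), token=token)
```

Starting with the 8B variant keeps the download manageable; the 405B checkpoint needs the storage described in the system requirements.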

How to Run Llama 3.1 Locally

Running Llama 3.1 locally, especially the high-parameter models like the 405B, requires specific steps and substantial hardware resources. Here’s a guide to help you get started:

1、Access and Download

To use Llama 3.1, you need to first accept the LLAMA 3.1 COMMUNITY LICENSE AGREEMENT. This can be done either through Meta's official website or Hugging Face. After accepting the agreement, you will receive download links for the model. These links must be used within 24 hours, or they will expire. The models are available in different sizes, such as 405B, 70B, and 8B.

2、System Requirements

Running Llama 3.1 405B locally is highly demanding:

- Storage: Approximately 820GB is required.

- RAM: At least 1TB of RAM.

- GPU: Multiple high-end GPUs are recommended, ideally NVIDIA A100 or H100 series.

- VRAM: At least 640GB across all GPUs.

These requirements make it nearly impossible to run Llama 3.1 405B on consumer-grade hardware. For more manageable alternatives, consider using the 70B or 8B variants, which require fewer resources.
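The storage figure can be sanity-checked with simple arithmetic: weight memory is roughly the parameter count multiplied by the bytes used per parameter. The sketch below assumes 16-bit (bf16) weights at 2 bytes each, which gives 405 × 2 = 810 GB for the 405B model, in line with the roughly 820GB quoted above. The quantized sizes are illustrative assumptions, and activations and the KV cache need additional memory on top of the weights.

```python
# Bytes per parameter for a few common weight formats (illustrative assumptions).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params_billion, dtype="bf16"):
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    billions of params * 1e9 * bytes per param / 1e9 simplifies to the product below.
    """
    return num_params_billion * BYTES_PER_PARAM[dtype]


for size in (8, 70, 405):
    print(f"Llama 3.1 {size}B: ~{weight_memory_gb(size):.0f} GB of weights in bf16")
```

The same function shows why the smaller variants are more practical: the 8B model needs about 16 GB for bf16 weights, which is within reach of a single high-memory GPU.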

3、Installation Steps

After downloading the model, set up your environment. For instance, using an Ubuntu 22.04 system, you can:

- Create a new environment using tools like conda.

- Install prerequisites such as PyTorch and Transformers. Ensure your Transformers version is 4.43.0 or later.

- Use the Hugging Face CLI to log in and authenticate your access with a token from your Hugging Face profile.

- Initialize a Jupyter notebook or another development environment to start using the model.
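Before loading a model, it can help to confirm that the environment meets the version requirement above. The following sketch uses only the Python standard library to report the installed `torch` and `transformers` versions. The helper names `parse_version` and `check_environment` are hypothetical, and the version parsing is deliberately simple rather than a full implementation of Python's version rules.

```python
from importlib import metadata

MIN_TRANSFORMERS = (4, 43, 0)  # minimum version stated in the steps above


def parse_version(text):
    """Turn '4.43.0' or '4.44.0.dev0' into a 3-tuple of leading integers."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    while len(parts) < 3:  # pad so that '4.43' compares equal to '4.43.0'
        parts.append(0)
    return tuple(parts[:3])


def check_environment():
    """Report installed versions and whether Transformers is new enough."""
    report = {}
    for package in ("torch", "transformers"):
        try:
            report[package] = metadata.version(package)
        except metadata.PackageNotFoundError:
            report[package] = None
    installed = report["transformers"]
    report["transformers_ok"] = (
        installed is not None and parse_version(installed) >= MIN_TRANSFORMERS
    )
    return report


print(check_environment())
```

If `transformers_ok` is `False`, upgrade the package inside the conda environment before continuing.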

4、Running and Testing the Model

After setup, test the model by starting a dialogue or running specific tasks. Llama 3.1 405B excels in areas like general knowledge, coding, and multilingual tasks, but its hardware requirements make local use challenging. The 70B and 8B models are a more practical alternative for most users. For full-scale deployment of the 405B model, cloud-based solutions are recommended, since they provide the model's capabilities without extensive local resources.
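A first test dialogue might look like the sketch below. It assumes the Transformers `pipeline` API with chat-style message input, the `accelerate` package for `device_map="auto"`, and the 8B Instruct checkpoint. The repository name is an assumption to verify on the Hugging Face Hub, and the helper names are hypothetical.

```python
def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Build a chat-format message list with a system and a user turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def chat_once(user_prompt,
              model_id="meta-llama/Meta-Llama-3.1-8B-Instruct",  # assumed repo name
              max_new_tokens=256):
    """Load the model, run one chat turn, and return the assistant's reply text."""
    import torch  # lazy imports: only needed when the model is actually run
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=model_id,
        model_kwargs={"torch_dtype": torch.bfloat16},
        device_map="auto",  # spreads the weights over the available devices
    )
    outputs = generator(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text holds the full conversation;
    # the last entry is the new assistant message.
    return outputs[0]["generated_text"][-1]["content"]
```

For example, `chat_once("Write a Python function that reverses a string.")` exercises the coding ability mentioned above. Loading the pipeline once and reusing it is preferable when sending many prompts.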

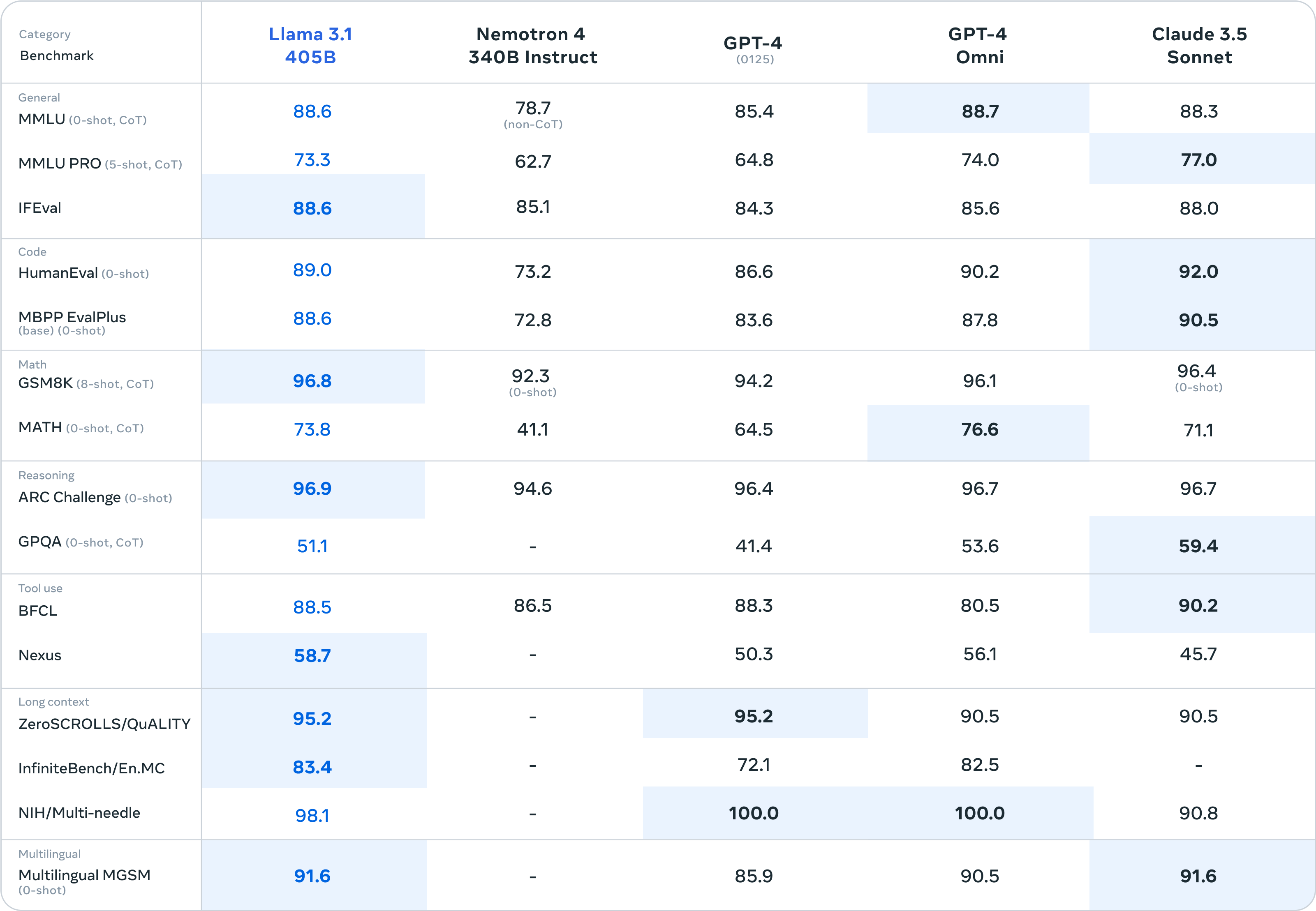

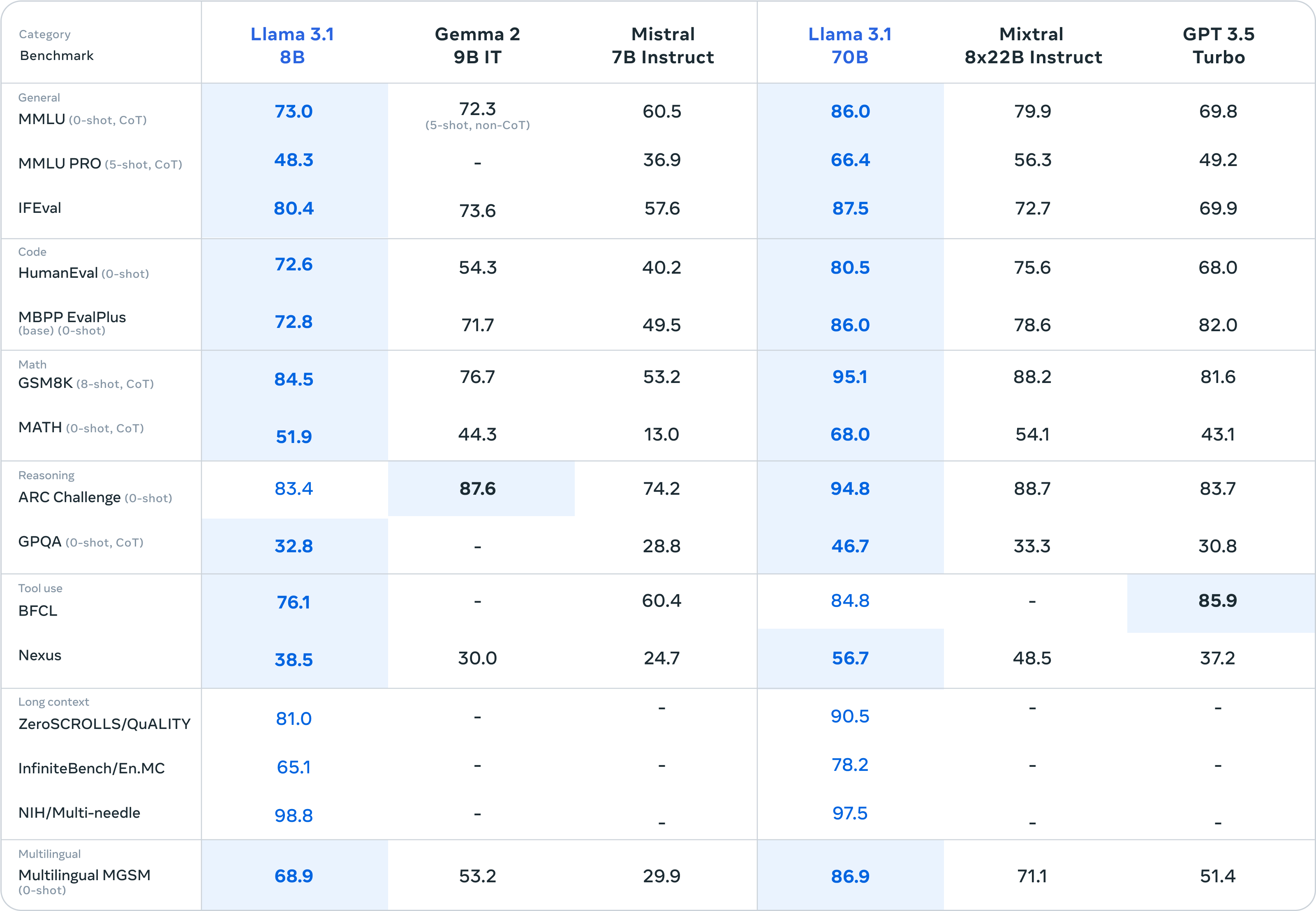

Comparison with Other Models like GPT-4 Omni

Llama 3.1 models demonstrate competitive performance compared to other leading models like GPT-4 Omni and Nemotron 4 across various benchmarks. They excel in general knowledge, coding, math, reasoning, tool use, long-context tasks, and multilingual support. The Llama 3.1 405B model is particularly notable for its advanced capabilities and flexibility in diverse AI applications. The Llama 3.1 70B and Llama 3.1 8B models also show strong performance, especially in coding and mathematical tasks, reinforcing the Llama series' status as a prominent open-source AI model.

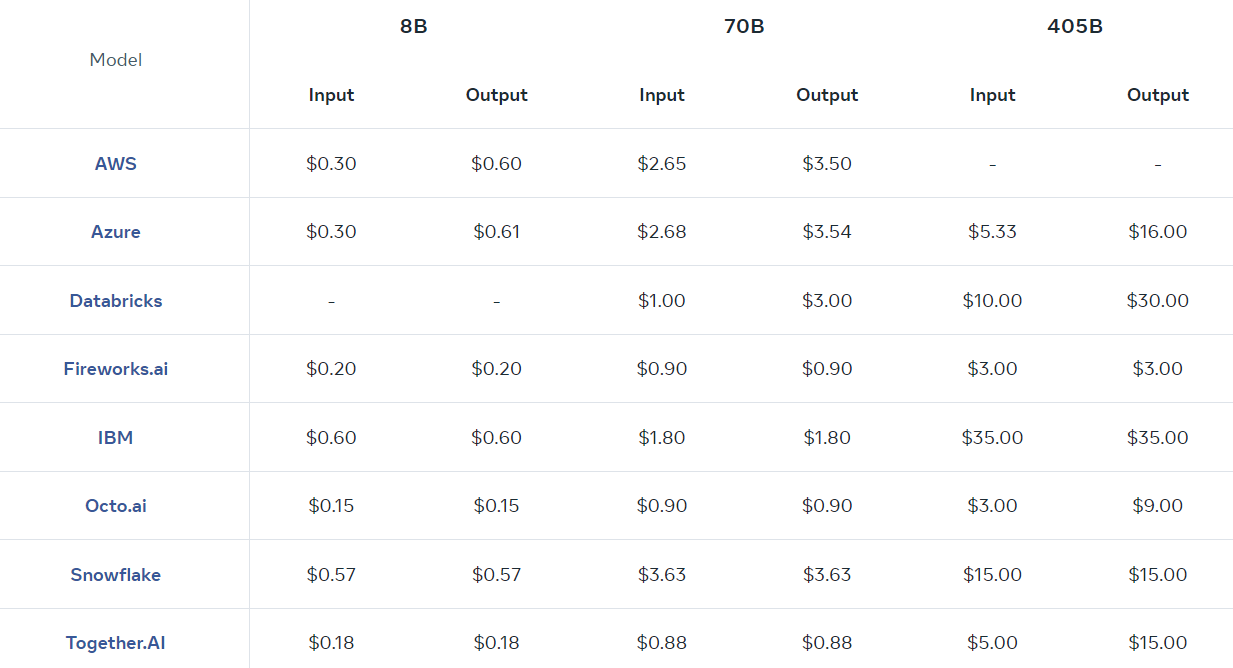

Pricing Guide for Llama 3.1 API

Pricing for the Llama 3.1 API varies by model size (8B, 70B, 405B) and by service provider. Providers include AWS, Azure, Databricks, Fireworks.ai, IBM, Octo.ai, Snowflake, and Together.AI. Developers and businesses should compare these rates to understand the financial implications of integrating Llama 3.1 into their applications.
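To compare providers, a small calculator can turn a provider's published rates into an estimated bill. The sketch below assumes that the provider charges per million tokens with separate input and output rates, which should be confirmed on each provider's pricing page. The function name is hypothetical and the prices in the example are placeholders, not real quotes.

```python
def estimate_cost_usd(input_tokens, output_tokens,
                      input_price_per_million, output_price_per_million):
    """Estimate the cost of a workload from per-million-token prices."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_million
    output_cost = (output_tokens / 1_000_000) * output_price_per_million
    return input_cost + output_cost


# Placeholder prices for illustration only; substitute the provider's real rates.
example = estimate_cost_usd(
    input_tokens=2_000_000,
    output_tokens=500_000,
    input_price_per_million=1.00,
    output_price_per_million=2.00,
)
print(f"Estimated cost: ${example:.2f}")
```

Running the same workload through the rates for each model size makes the trade-off between the 8B, 70B, and 405B variants concrete.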

Frequently Asked Questions

1、What are the key features of Llama 3.1 405B?

Llama 3.1 405B is the flagship model in the Llama 3.1 series, with 405 billion parameters. It excels in tasks requiring extensive context, multilingual capabilities, and high precision. It is designed for advanced applications such as natural language understanding, coding, and complex reasoning.

2、How does Meta Llama 3.1 perform in multilingual tasks?

Meta Llama 3.1 is optimized for multilingual tasks, supporting a wide range of languages with high accuracy. It is particularly effective in natural language processing tasks like translation and multilingual dialogue, outperforming many other models in these areas.

3、Can Llama 3.1 be used as an alternative to Mistral NeMo?

While Mistral NeMo is a capable model, Llama 3.1 offers more advanced features, particularly in terms of multilingual support and flexibility. However, the choice between the two models depends on specific project needs, such as language support and computational resources.

4、What are the system requirements for running Llama 3.1 locally?

Running Llama 3.1 locally, especially the 405B variant, requires substantial hardware resources: at least 1TB of RAM, multiple high-end GPUs (preferably NVIDIA A100 or H100 series) with at least 640GB of VRAM in total, and approximately 820GB of storage. For those with limited resources, the Llama 3.1 70B or 8B models are more practical alternatives.

5、How does GPT-4o mini compare to Llama 3.1 in terms of performance?

GPT-4o mini is a compact model in the GPT-4 series, offering decent performance in a smaller footprint. However, Llama 3.1 generally outperforms it in benchmarks related to general knowledge, coding, and multilingual tasks, making Llama 3.1 a more versatile option for diverse applications.

6、How can developers leverage Llama 3.1 for AI projects?

Developers can leverage Llama 3.1 by integrating it into their applications through platforms like Hugging Face, which provide documentation and community support for the integration process. The model supports a range of AI tasks, including language understanding and dialogue systems.