Paper:

VGG-16 Convolutional Neural Network-Oriented Detection of Filling Flow Status of Viscous Food

Changfan Zhang, Dezhi Meng, and Jing He†

College of Electrical and Information Engineering, Hunan University of Technology

No.88 Taishan Road, Tianyuan District, Zhuzhou, Hunan 412007, China

†Corresponding author

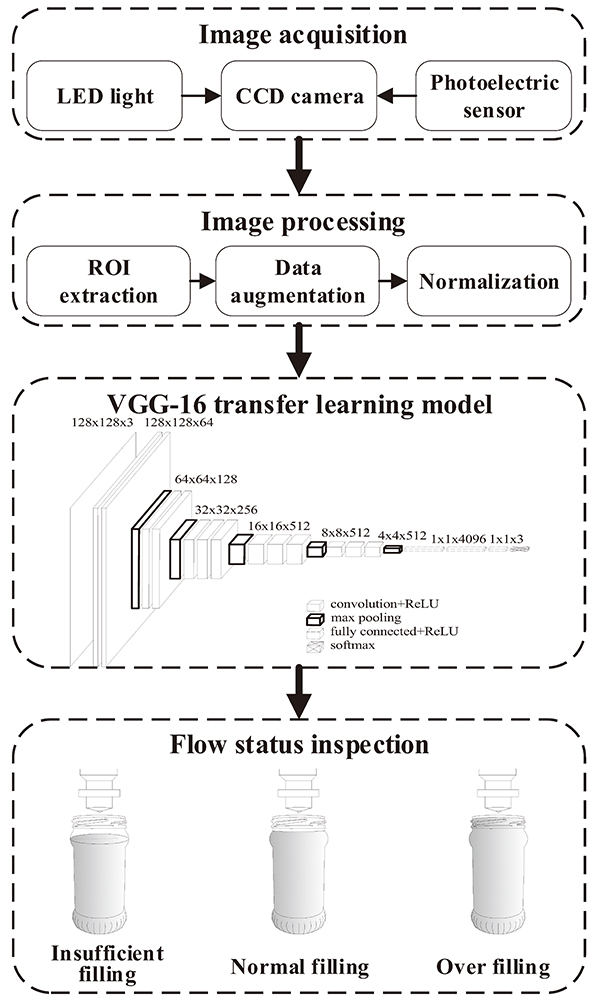

A method is proposed for detecting the filling flow status during automatic filling of viscous liquid food. The method is based on a convolutional neural network and addresses the poor accuracy of traditional flow detection devices. First, an adaptive threshold segmentation algorithm extracts the region of interest from the acquired liquid-level image. Next, the extracted images are normalized and augmented to construct a flow status dataset. A VGG-16 network pretrained on the ImageNet dataset is then used for feature transfer on isomorphic data and for parameter fine-tuning, so that features are extracted automatically and the model is trained. The identification accuracy and error rate of the network were verified, and the advantages and disadvantages of the proposed method were compared with those of other methods. The experimental results demonstrate that the algorithm effectively detects multi-category flow status information and meets the requirements of actual production.
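The preprocessing steps named in the abstract (threshold-based region-of-interest extraction, normalization, augmentation) can be illustrated with a minimal sketch. The abstract does not state which adaptive threshold algorithm is used; this sketch assumes Otsu's method, assumes the region of interest is brighter than the background, and uses horizontal mirroring as the only augmentation. All function names are hypothetical, and images are plain nested lists of 8-bit gray levels.

```python
def otsu_threshold(img):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value > t are treated as foreground (assumption: bright ROI).
    """
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * n for level, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue  # no background pixels yet
        w_fg = total - w_bg
        if w_fg == 0:
            break  # no foreground pixels left
        mu_bg = sum_bg / w_bg
        mu_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mu_bg - mu_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def extract_roi(img):
    """Crop the image to the bounding box of all above-threshold pixels."""
    t = otsu_threshold(img)
    rows = [y for y, row in enumerate(img) if any(v > t for v in row)]
    cols = [x for x in range(len(img[0])) if any(row[x] > t for row in img)]
    if not rows:
        return img  # nothing segmented: fall back to the full image
    return [row[cols[0]:cols[-1] + 1] for row in img[rows[0]:rows[-1] + 1]]


def normalize(img):
    """Scale 8-bit gray levels to the range [0, 1]."""
    return [[v / 255.0 for v in row] for row in img]


def augment(img):
    """Return the image together with its horizontal mirror."""
    return [img, [row[::-1] for row in img]]


# Toy 5x6 image: dark background (10) with a bright 3x3 patch.
demo = [[10] * 6 for _ in range(5)]
for y in range(1, 4):
    demo[y][2:5] = [200, 200, 240]

dataset = augment(normalize(extract_roi(demo)))
print(len(dataset), "images of size", len(dataset[0]), "x", len(dataset[0][0]))
```

In practice an image library would replace the nested-list loops; the sketch only shows how the three steps chain together before the images reach the network.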

Structural block diagram of filling flow status detection based on the VGG-16 transfer learning algorithm
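The transfer-learning idea named in the caption, reusing pretrained layers and training only a new classifier, can be sketched without any deep-learning library. This is not the authors' network: `frozen_features` is a hypothetical stand-in for the pretrained VGG-16 layers (a fixed ReLU mapping plus a bias term), the 2-D inputs are toy data, and the three classes are illustrative. Only the softmax head is updated, by gradient descent on the cross-entropy loss.

```python
import math


def frozen_features(x):
    """Hypothetical stand-in for frozen pretrained layers: fixed, never updated."""
    return [max(0.0, x[0]), max(0.0, x[1]), 1.0]  # ReLU features plus bias term


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [v / s for v in exps]


def scores(W, f):
    """Linear class scores for feature vector f."""
    return [sum(w * v for w, v in zip(row, f)) for row in W]


def train_head(samples, labels, n_classes, lr=0.5, epochs=200):
    """Train only the new classifier head on top of the frozen features."""
    feats = [frozen_features(x) for x in samples]  # backbone output, computed once
    dim = len(feats[0])
    W = [[0.0] * dim for _ in range(n_classes)]
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = softmax(scores(W, f))
            for k in range(n_classes):
                grad = p[k] - (1.0 if k == y else 0.0)  # d(cross-entropy)/d(logit_k)
                for j in range(dim):
                    W[k][j] -= lr * grad * f[j]
    return W


def predict(W, x):
    """Return the index of the highest-scoring class."""
    s = scores(W, frozen_features(x))
    return max(range(len(s)), key=s.__getitem__)


# Toy data: three well-separated classes standing in for flow status categories.
samples = [(0.0, 0.0), (0.1, 0.1), (1.0, 0.0), (0.9, 0.1), (0.0, 1.0), (0.1, 0.9)]
labels = [0, 0, 1, 1, 2, 2]
head = train_head(samples, labels, n_classes=3)
print([predict(head, s) for s in samples])
```

With a real pretrained network the same division applies: the convolutional layers are loaded with ImageNet weights and kept fixed or lightly tuned, while the final classification layers are replaced and trained on the flow status dataset.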

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
