diff --git a/.cargo/config.toml b/.cargo/config.toml

deleted file mode 100644

index af777ef..0000000

--- a/.cargo/config.toml

+++ /dev/null

@@ -1,7 +0,0 @@

-[build]

-target="wasm32-wasi"

-rustflags = ["--cfg", "wasmedge", "--cfg", "tokio_unstable"]

-

-

-[target.wasm32-wasi]

-runner = "wasmedge"

diff --git a/.gitignore b/.gitignore

index 648dadb..aa07357 100644

--- a/.gitignore

+++ b/.gitignore

@@ -2,3 +2,4 @@

.env

Cargo.lock

diff.json

+details.md

\ No newline at end of file

diff --git a/Cargo.toml b/Cargo.toml

index 8a6e8a6..e290b83 100644

--- a/Cargo.toml

+++ b/Cargo.toml

@@ -3,26 +3,20 @@ name = "github-pr-summary"

version = "0.1.0"

edition = "2021"

-[patch.crates-io]

-tokio = { git = "https://github.com/second-state/wasi_tokio.git", branch = "v1.36.x" }

-socket2 = { git = "https://github.com/second-state/socket2.git", branch = "v0.5.x" }

-hyper = { git = "https://github.com/second-state/wasi_hyper.git", branch = "v0.14.x" }

-reqwest = { git = "https://github.com/second-state/wasi_reqwest.git", branch = "0.11.x" }

-

[lib]

path = "src/github-pr-summary.rs"

crate-type = ["cdylib"]

[dependencies]

dotenv = "0.15.0"

-github-flows = "0.8"

+github-flows = "0.5"

serde = { version = "1.0", features = ["derive"] }

serde_json = "1.0.93"

+tokio_wasi = { version = "1.25.1", features = ["macros", "rt"] }

anyhow = "1"

flowsnet-platform-sdk = "0.1"

lazy_static = "1.4.0"

regex = "1.7.1"

-llmservice-flows = "0.3.0"

+openai-flows = "0.8.2"

words-count = "0.1.4"

log = "0.4"

-tokio = { version = "1", features = ["rt", "macros", "net", "time"] }

diff --git a/README.md b/README.md

index 72250d6..d897c21 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,10 @@

+ [中文文档](README-zh.md)

+

-# Agent to summarize Github PRs

+# ChatGPT/4 code reviewer for Github PR

@@ -16,85 +18,85 @@

-[Deploy this function on flows.network](https://flows.network/flow/createByTemplate/github-pr-summary-llm), and you will get an AI agent to review and summarize GitHub Pull Requests. It helps busy open source contributors understand and make decisions on PRs faster! Here are some examples. Notice how the code review bot provides code snippets to show you how to improve the code!

-

-> We recommend you to use a [Gaia node](https://github.com/GaiaNet-AI/gaianet-node) running an open source coding LLM as the backend to perform PR reviews and summarizations. You can use [a community node](https://docs.gaianet.ai/user-guide/nodes#codestral) or run a node [on your own computer](https://github.com/GaiaNet-AI/node-configs/tree/main/codestral-0.1-22b)!

+[Deploy this function on flows.network](#deploy-your-own-code-review-bot-in-3-simple-steps), and you will get a GitHub 🤖 to review and summarize Pull Requests. It helps busy open source contributors understand and make decisions on PRs faster! A few examples below!

* [[Rust] Improve support for host functions in the WasmEdge Rust SDK](https://github.com/WasmEdge/WasmEdge/pull/2394#issuecomment-1497819842)

* [[bash] Support ARM architecture in the WasmEdge installer](https://github.com/WasmEdge/WasmEdge/pull/1084#issuecomment-1497830324)

* [[C++] Add an eBPF plugin for WasmEdge](https://github.com/WasmEdge/WasmEdge/pull/2314#issuecomment-1497861516)

* [[Haskell] Improve the CLI utility for WasmEdge Component Model tooling](https://github.com/second-state/witc/pull/73#issuecomment-1507539260)

-> Still not convinced? [See "potential problems 1" in this review](https://github.com/second-state/wasmedge-quickjs/pull/82#issuecomment-1498299630), it identified an inefficient Rust implementation of an algorithm.

+> Still not convinced? [See "potential problems 1" in this review](https://github.com/second-state/wasmedge-quickjs/pull/82#issuecomment-1498299630), it identified an inefficient Rust implementation of an algorithm. 🤯

This bot **summarizes commits in the PR**. Alternatively, you can use [this bot](https://github.com/flows-network/github-pr-review) to review changed files in the PR.

## How it works

-This flow function will be triggered when a new PR is raised in the designated GitHub repo. The flow function collects the content in the PR, and asks ChatGPT/4 to review and summarize it. The result is then posted back to the PR as a comment. The flow functions are written in Rust and run in hosted [WasmEdge Runtimes](https://github.com/wasmedge) on [flows.network](https://flows.network/).

+This flow function (or 🤖) will be triggered when a new PR is raised in the designated GitHub repo. The flow function collects the content in the PR, and asks ChatGPT/4 to review and summarize it. The result is then posted back to the PR as a comment. The flow functions are written in Rust and run in hosted [WasmEdge Runtimes](https://github.com/wasmedge) on [flows.network](https://flows.network/).

-* The PR summary comment is updated automatically every time a new commit is pushed to this PR.

-* A new summary could be triggered when someone says a magic *trigger phrase* in the PR's comments section. The default trigger phrase is "flows summarize".

+* The code review comment is updated automatically every time a new commit is pushed to this PR.

+* A new code review could be triggered when someone says a magic *trigger phrase* in the PR's comments section. The default trigger phrase is "flows summarize".

## Deploy your own code review bot in 3 simple steps

-1. Create a bot from template

-2. Connect to an LLM

-3. Connect to GitHub for access to the target repo

+1. Create a bot from a template

+2. Add your OpenAI API key

+3. Configure the bot to review PRs on a specified GitHub repo

### 0 Prerequisites

+You will need to bring your own [OpenAI API key](https://openai.com/blog/openai-api). If you do not already have one, [sign up here](https://platform.openai.com/signup).

+

You will also need to sign into [flows.network](https://flows.network/) from your GitHub account. It is free.

-### 1 Create a bot from template

+### 1 Create a bot from a template

-Create a flow function from [this template](https://flows.network/flow/createByTemplate/github-pr-summary-llm).

-It will fork a repo into your personal GitHub account. Your flow function will be compiled from the source code

-in your forked repo. You can configure how it is summoned from the GitHub PR.

+[**Just click here**](https://flows.network/flow/createByTemplate/Summarize-Pull-Request)

-* `trigger_phrase` : The magic words to write in a PR comment to summon the bot. It defaults to "flows summarize".

+Review the `trigger_phrase` variable. It holds the magic words you type in a PR comment to manually summon the review bot.

Click on the **Create and Build** button.

-> Alternatively, fork this repo to your own GitHub account. Then, from [flows.network](https://flows.network/), you can **Create a Flow** and select your forked repo. It will create a flow function based on the code in your forked repo. Click on the **Advanced** button to see configuration options for the flow function.

-

-[ ](create.png)

-

-### 2 Connect to an LLM

+### 2 Add your OpenAI API key

-Configure the LLM API service you want to use to summarize the PRs.

+You will now set up OpenAI integration. Click on **Connect**, enter your key and give it a name.

-* `llm_api_endpoint` : The OpenAI compatible API service endpoint for the LLM to conduct code reviews. We recommend the [Codestral Gaia node](https://github.com/GaiaNet-AI/node-configs/tree/main/codestral-0.1-22b): `https://codestral.us.gaianet.network/v1`

-* `llm_model_name` : The model name required by the API service. We recommend the following model name for the above public Gaia node: `codestral`

-* `llm_ctx_size` : The context window size of the selected model. The Codestral model has a 32k context window, which is `32768`.

-* `llm_api_key` : Optional: The API key if required by the LLM service provider. It is not required for the Gaia node.

+[ ](https://user-images.githubusercontent.com/45785633/222973214-ecd052dc-72c2-4711-90ec-db1ec9d5f24e.png)

-Click on the **Continue** button.

+Close the tab and go back to the flows.network page once you are done. Click on **Continue**.

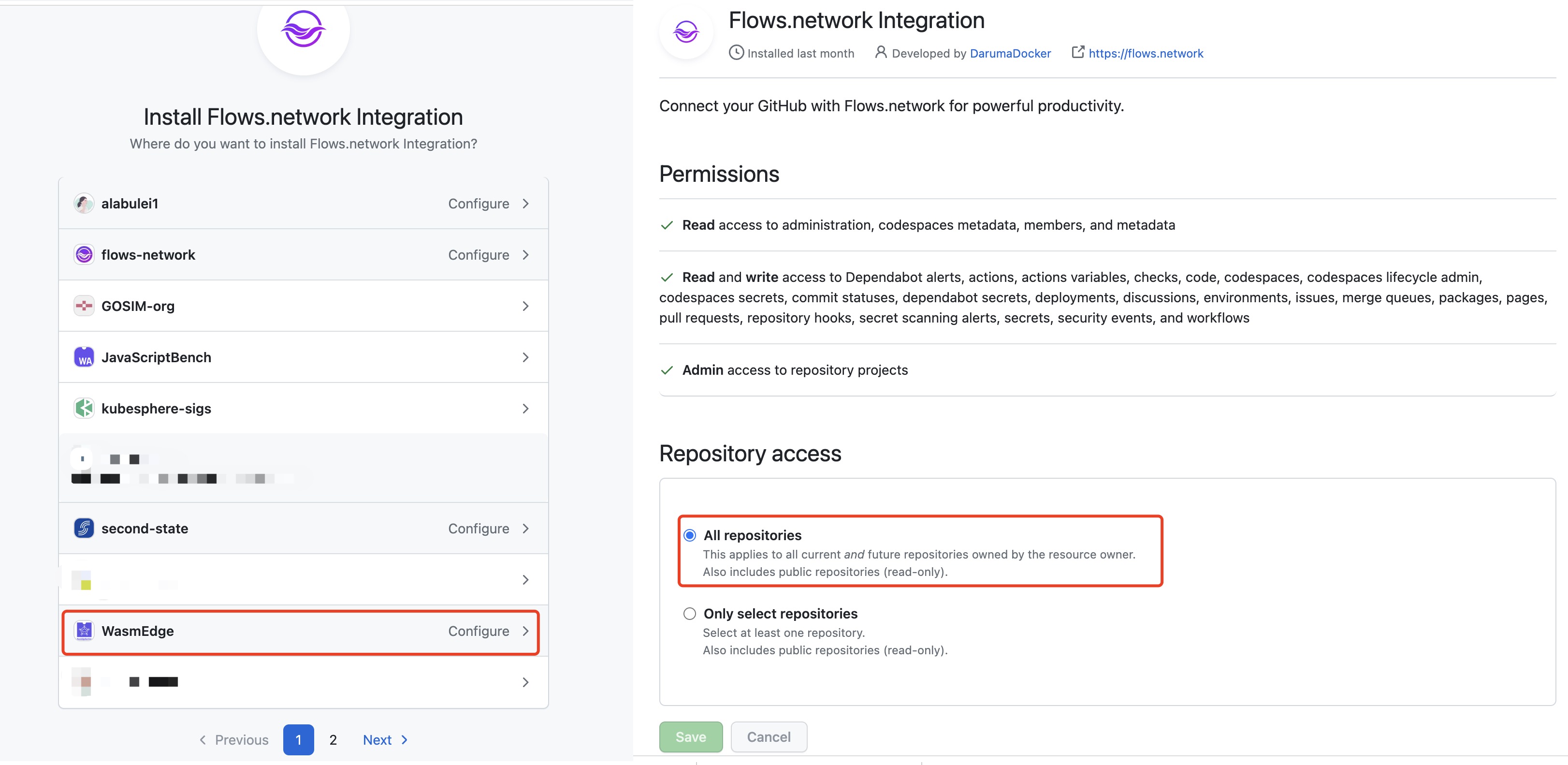

-### 3 Connect to GitHub for access to the target repo

+### 3 Configure the bot to access GitHub

-Next, you will tell the bot which GitHub repo it needs to monitor for upcoming PRs to summarize.

+Next, you will tell the bot which GitHub repo it needs to monitor for upcoming PRs to review.

-* `github_owner`: GitHub org for the repo you want to summarize PRs

-* `github_repo` : GitHub repo you want to summarize PRs

+* `github_owner`: GitHub org for the repo *you want to deploy the 🤖 on*.

+* `github_repo` : GitHub repo *you want to deploy the 🤖 on*.

-> Let's see an example. You would like to deploy the bot to summarize PRs on `WasmEdge/wasmedge_hyper_demo` repo. Here `github_owner = WasmEdge` and `github_repo = wasmedge_hyper_demo`.

+> Let's see an example. You would like to deploy the bot to review code in PRs on `WasmEdge/wasmedge_hyper_demo` repo. Here `github_owner = WasmEdge` and `github_repo = wasmedge_hyper_demo`.

-Finally, the GitHub repo will need to give you access so that the flow function can access and summarize its PRs!

-Click on the **Connect** or **+ Add new authentication** button to give the function access to the GitHub repo. You'll be redirected to a new page where you must grant flows.network permission to the repo.

+Click on the **Connect** or **+ Add new authentication** button to give the function access to the GitHub repo to deploy the 🤖. You'll be redirected to a new page where you must grant [flows.network](https://flows.network/) permission to the repo.

-[ ](llm.png)

+[ ](https://github.com/flows-network/github-pr-summary/assets/45785633/6cefff19-9eeb-4533-a20b-03c6a9c89473)

-Click on **Deploy**.

+Close the tab and go back to the flows.network page once you are done. Click on **Deploy**.

### Wait for the magic!

This is it! You are now on the flow details page waiting for the flow function to build. As soon as the flow's status became `running`, the bot is ready to give code reviews! The bot is summoned by every new PR, every new commit, as well as magic words (i.e., `trigger_phrase`) in PR comments.

-[ ](target.png)

+[ ](https://user-images.githubusercontent.com/45785633/229329247-16273aec-f89b-4375-bf2b-4ffce5e35a33.png)

## FAQ

+### Customize the bot

+

+The bot's source code is available in the GitHub repo you cloned from the template. Feel free to make changes to the source code (e.g., model, context length, API key and prompts) to fit your own needs. If you need help, [ask in Discord](https://discord.gg/ccZn9ZMfFf)!

+

+### Use GPT4

+

+By default, the bot uses GPT3.5 for code review. If your OpenAI API key has access to GPT4, you can open the `src/github-pr-summary.rs` file

+in your cloned source code repo, and change `ChatModel::GPT35Turbo16K` to `ChatModel::GPT4` in the `MODEL` static. Commit and push the change back to GitHub.

+The flows.network platform will automatically detect and rebuild the bot from your updated source code.

+

### Use the bot on multiple repos

You can [manually create a new flow](https://flows.network/flow/new) and import the source code repo for the bot (i.e., the repo you cloned from the template). Then, you can use the flow config to specify the `github_owner` and `github_repo` to point to the target repo you need to deploy the bot on. Deploy and authorize access to that target repo.
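The trigger-phrase behaviour the README describes is implemented in `src/github-pr-summary.rs`, where this diff switches the check from a prefix match (`starts_with`) to a case-insensitive substring match (`contains`) while still skipping the bot's own comments. Below is a minimal, std-only sketch of the two checks side by side; `should_trigger`, `should_trigger_prefix_only` and `BOT_PREFIX` are hypothetical names chosen for illustration, not functions in the repo.

```rust
/// Prefix of the bot's own placeholder comment (text taken from the diff).
/// Hypothetical constant name; the repo inlines this string.
const BOT_PREFIX: &str = "Hello, I am a [code review bot]";

/// Patched behaviour (sketch): ignore the bot's own comments, then accept the
/// comment if it contains the trigger phrase anywhere, ignoring case.
fn should_trigger(body: &str, trigger_phrase: &str) -> bool {
    if body.starts_with(BOT_PREFIX) {
        return false; // never react to comments the bot posted itself
    }
    body.to_lowercase().contains(&trigger_phrase.to_lowercase())
}

/// Pre-patch behaviour (sketch): the phrase had to open the comment.
fn should_trigger_prefix_only(body: &str, trigger_phrase: &str) -> bool {
    if body.starts_with(BOT_PREFIX) {
        return false;
    }
    body.to_lowercase().starts_with(&trigger_phrase.to_lowercase())
}

fn main() {
    let phrase = "flows summarize";
    let comment = "Thanks! Flows Summarize please.";
    // The substring check matches; the prefix check does not.
    println!("{}", should_trigger(comment, phrase));
    println!("{}", should_trigger_prefix_only(comment, phrase));
}
```

One consequence of using `contains`: any comment that merely mentions the phrase inside a longer sentence now summons the bot.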

diff --git a/create.png b/create.png

deleted file mode 100644

index 1cc98bb..0000000

Binary files a/create.png and /dev/null differ

diff --git a/llm.png b/llm.png

deleted file mode 100644

index 9ce98e4..0000000

Binary files a/llm.png and /dev/null differ
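The `src/github-pr-summary.rs` changes split the output of `pulls.get_patch` into per-commit chunks, cap each chunk with a soft size limit, take the hash with `&commit[5..45]`, and send `truncate(commit, CHAR_SOFT_LIMIT)` to the model. The `truncate` helper and the commit-boundary test are not shown in this diff, so the sketch below rests on assumptions: it presumes each commit begins with a `From <40-hex-hash>` line (as in `git format-patch` mbox output, which is consistent with the `[5..45]` slice), and `split_commits`, `is_commit_header`, `commit_hash` and `SOFT_LIMIT` are hypothetical names.

```rust
/// Hypothetical soft limit; the diff uses `CHAR_SOFT_LIMIT = 30000`.
const SOFT_LIMIT: usize = 30_000;

/// Assumed commit boundary: `From ` followed by a 40-character hex hash.
fn is_commit_header(line: &str) -> bool {
    match line.strip_prefix("From ").and_then(|rest| rest.get(..40)) {
        Some(hash) => hash.chars().all(|c| c.is_ascii_hexdigit()),
        None => false,
    }
}

/// Split patch text into one string per commit. A line is appended only while
/// the current chunk is under `limit` bytes, so the cap is "soft": the last
/// appended line may push the chunk past the limit.
fn split_commits(patch: &str, limit: usize) -> Vec<String> {
    let mut commits = Vec::new();
    let mut current = String::new();
    for line in patch.lines() {
        if is_commit_header(line) && !current.is_empty() {
            commits.push(std::mem::take(&mut current)); // start a new commit
        }
        if current.len() < limit {
            current.push_str(line);
            current.push('\n');
        }
    }
    if !current.is_empty() {
        commits.push(current);
    }
    commits
}

/// Hash of a chunk, mirroring `&commit[5..45]` but returning None instead of panicking.
fn commit_hash(commit: &str) -> Option<&str> {
    commit.get(5..45)
}

/// Return at most `max_chars` characters of `s` without splitting a UTF-8 character.
fn truncate(s: &str, max_chars: usize) -> &str {
    match s.char_indices().nth(max_chars) {
        Some((idx, _)) => &s[..idx],
        None => s,
    }
}

fn main() {
    let hash_a = "a".repeat(40);
    let hash_b = "b".repeat(40);
    let patch = format!(
        "From {hash_a} Mon Sep 17 00:00:00 2001\nSubject: first\n+line one\nFrom {hash_b} Mon Sep 17 00:00:00 2001\nSubject: second\n+line two\n"
    );
    for c in &split_commits(&patch, SOFT_LIMIT) {
        println!("{} -> {:?}", commit_hash(c).unwrap_or("?"), truncate(c, 20));
    }
}
```

`String::len` counts bytes, so a limit compared against `len()` (as in the diff) is effectively a byte budget; the `truncate` sketch counts characters instead, which keeps multi-byte text intact at the cut point.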

diff --git a/src/github-pr-summary.rs b/src/github-pr-summary.rs

index 9d9920e..ee10e8f 100644

--- a/src/github-pr-summary.rs

+++ b/src/github-pr-summary.rs

@@ -1,56 +1,51 @@

use dotenv::dotenv;

use flowsnet_platform_sdk::logger;

use github_flows::{

- event_handler, get_octo, listen_to_event,

+ get_octo, listen_to_event,

+ octocrab::models::events::payload::{IssueCommentEventAction, PullRequestEventAction},

octocrab::models::CommentId,

- octocrab::models::webhook_events::{WebhookEvent, WebhookEventPayload},

- octocrab::models::webhook_events::payload::{IssueCommentWebhookEventAction, PullRequestWebhookEventAction},

- GithubLogin,

+ EventPayload, GithubLogin,

};

-use llmservice_flows::{

- chat::{ChatOptions},

- LLMServiceFlows,

+use openai_flows::{

+ chat::{ChatModel, ChatOptions},

+ OpenAIFlows,

};

use std::env;

+// The soft character limit of the input context size

+// the max context size for GPT4 is 8192 tokens

+// the max context size for GPT35Turbo16K is 16384 tokens

+static CHAR_SOFT_LIMIT: usize = 30000;

+static MODEL: ChatModel = ChatModel::GPT35Turbo16K;

+// static MODEL : ChatModel = ChatModel::GPT4;

+

#[no_mangle]

#[tokio::main(flavor = "current_thread")]

-pub async fn on_deploy() {

+pub async fn run() -> anyhow::Result<()> {

dotenv().ok();

logger::init();

log::debug!("Running github-pr-summary/main");

let owner = env::var("github_owner").unwrap_or("juntao".to_string());

let repo = env::var("github_repo").unwrap_or("test".to_string());

+ let trigger_phrase = env::var("trigger_phrase").unwrap_or("flows summarize".to_string());

- listen_to_event(&GithubLogin::Default, &owner, &repo, vec!["pull_request", "issue_comment"]).await;

-}

+ let events = vec!["pull_request", "issue_comment"];

+ listen_to_event(&GithubLogin::Default, &owner, &repo, events, |payload| {

+ handler(&owner, &repo, &trigger_phrase, payload)

+ })

+ .await;

-#[event_handler]

-async fn handler(event: Result) {

- dotenv().ok();

- logger::init();

- log::debug!("Running github-pr-summary/main handler()");

+ Ok(())

+}

- let owner = env::var("github_owner").unwrap_or("juntao".to_string());

- let repo = env::var("github_repo").unwrap_or("test".to_string());

- let trigger_phrase = env::var("trigger_phrase").unwrap_or("flows summarize".to_string());

- let llm_api_endpoint = env::var("llm_api_endpoint").unwrap_or("https://api.openai.com/v1".to_string());

- let llm_model_name = env::var("llm_model_name").unwrap_or("gpt-4o".to_string());

- let llm_ctx_size = env::var("llm_ctx_size").unwrap_or("16384".to_string()).parse::().unwrap_or(0);

- let llm_api_key = env::var("llm_api_key").unwrap_or("LLAMAEDGE".to_string());

-

- // The soft character limit of the input context size

- // This is measured in chars. We set it to be 2x llm_ctx_size, which is measured in tokens.

- let ctx_size_char : usize = (2 * llm_ctx_size).try_into().unwrap_or(0);

-

- let payload = event.unwrap();

- let mut new_commit : bool = false;

- let (title, pull_number, _contributor) = match payload.specific {

- WebhookEventPayload::PullRequest(e) => {

- if e.action == PullRequestWebhookEventAction::Opened {

+async fn handler(owner: &str, repo: &str, trigger_phrase: &str, payload: EventPayload) {

+ let mut new_commit: bool = false;

+ let (title, pull_number, _contributor) = match payload {

+ EventPayload::PullRequestEvent(e) => {

+ if e.action == PullRequestEventAction::Opened {

log::debug!("Received payload: PR Opened");

- } else if e.action == PullRequestWebhookEventAction::Synchronize {

+ } else if e.action == PullRequestEventAction::Synchronize {

new_commit = true;

log::debug!("Received payload: PR Synced");

} else {

@@ -64,8 +59,8 @@ async fn handler(event: Result) {

p.user.unwrap().login,

)

}

- WebhookEventPayload::IssueComment(e) => {

- if e.action == IssueCommentWebhookEventAction::Deleted {

+ EventPayload::IssueCommentEvent(e) => {

+ if e.action == IssueCommentEventAction::Deleted {

log::debug!("Deleted issue comment");

return;

}

@@ -77,12 +72,12 @@ async fn handler(event: Result) {

// return;

// }

// TODO: Makeshift but operational

- if body.starts_with("Hello, I am a [PR summary agent]") {

+ if body.starts_with("Hello, I am a [code review bot]") {

log::info!("Ignore comment via bot");

return;

};

- if !body.to_lowercase().starts_with(&trigger_phrase.to_lowercase()) {

+ if !body.to_lowercase().contains(&trigger_phrase.to_lowercase()) {

log::info!("Ignore the comment without the magic words");

return;

}

@@ -93,14 +88,17 @@ async fn handler(event: Result) {

};

let octo = get_octo(&GithubLogin::Default);

- let issues = octo.issues(owner.clone(), repo.clone());

+ let issues = octo.issues(owner, repo);

let mut comment_id: CommentId = 0u64.into();

if new_commit {

- // Find the first "Hello, I am a [PR summary agent]" comment to update

+ // Find the first "Hello, I am a [code review bot]" comment to update

match issues.list_comments(pull_number).send().await {

Ok(comments) => {

for c in comments.items {

- if c.body.unwrap_or_default().starts_with("Hello, I am a [PR summary agent]") {

+ if c.body

+ .unwrap_or_default()

+ .starts_with("Hello, I am a [code review bot]")

+ {

comment_id = c.id;

break;

}

@@ -113,7 +111,7 @@ async fn handler(event: Result) {

}

} else {

// PR OPEN or Trigger phrase: create a new comment

- match issues.create_comment(pull_number, "Hello, I am a [PR summary agent](https://github.com/flows-network/github-pr-summary/) on [flows.network](https://flows.network/).\n\nIt could take a few minutes for me to analyze this PR. Relax, grab a cup of coffee and check back later. Thanks!").await {

+ match issues.create_comment(pull_number, "Hello, I am a [code review bot](https://github.com/flows-network/github-pr-summary/) on [flows.network](https://flows.network/).\n\nIt could take a few minutes for me to analyze this PR. Relax, grab a cup of coffee and check back later. Thanks!").await {

Ok(comment) => {

comment_id = comment.id;

}

@@ -123,9 +121,11 @@ async fn handler(event: Result) {

}

}

}

- if comment_id == 0u64.into() { return; }

+ if comment_id == 0u64.into() {

+ return;

+ }

- let pulls = octo.pulls(owner.clone(), repo.clone());

+ let pulls = octo.pulls(owner, repo);

let patch_as_text = pulls.get_patch(pull_number).await.unwrap();

let mut current_commit = String::new();

let mut commits: Vec<String> = Vec::new();

@@ -139,8 +139,8 @@ async fn handler(event: Result) {

// Start a new commit

current_commit.clear();

}

- // Append the line to the current commit if the current commit is less than ctx_size_char

- if current_commit.len() < ctx_size_char {

+ // Append the line to the current commit if the current commit is less than CHAR_SOFT_LIMIT

+ if current_commit.len() < CHAR_SOFT_LIMIT {

current_commit.push_str(line);

current_commit.push('\n');

}

@@ -156,68 +156,75 @@ async fn handler(event: Result) {

}

let chat_id = format!("PR#{pull_number}");

- let system = &format!("You are an experienced software developer. You will act as a reviewer for a GitHub Pull Request titled \"{}\". Please be as concise as possible while being accurate.", title);

- let mut lf = LLMServiceFlows::new(&llm_api_endpoint);

- lf.set_api_key(&llm_api_key);

- // lf.set_retry_times(3);

+ let system = &format!("You are an experienced software developer. You will act as a reviewer for a GitHub Pull Request titled \"{}\".", title);

+ let mut openai = OpenAIFlows::new();

+ openai.set_retry_times(3);

let mut reviews: Vec<String> = Vec::new();

+ let mut reviews_md_str: Vec<String> = Vec::new();

let mut reviews_text = String::new();

for (_i, commit) in commits.iter().enumerate() {

let commit_hash = &commit[5..45];

- log::debug!("Sending patch to LLM: {}", commit_hash);

+ log::debug!("Sending patch to OpenAI: {}", commit_hash);

let co = ChatOptions {

- model: Some(&llm_model_name),

- token_limit: llm_ctx_size,

+ model: MODEL,

restart: true,

system_prompt: Some(system),

+ max_tokens: Some(200),

..Default::default()

};

- let question = "The following is a GitHub patch. Please summarize the key changes in concise points. Start with the most important findings.\n\n".to_string() + truncate(commit, ctx_size_char);

- match lf.chat_completion(&chat_id, &question, &co).await {

+ let question = "The following is a GitHub patch. Please summarize the key changes and identify potential problems. Start with the most important findings.\n\n".to_string() + truncate(commit, CHAR_SOFT_LIMIT);

+ match openai.chat_completion(&chat_id, &question, &co).await {

Ok(r) => {

- if reviews_text.len() < ctx_size_char {

+ if reviews_text.len() < CHAR_SOFT_LIMIT {

reviews_text.push_str("------\n");

reviews_text.push_str(&r.choice);

reviews_text.push_str("\n");

}

let mut review = String::new();

- review.push_str(&format!("### [Commit {commit_hash}](https://github.com/{owner}/{repo}/pull/{pull_number}/commits/{commit_hash})\n"));

+ // review.push_str(&format!("### [Commit {commit_hash}](https://github.com/WasmEdge/WasmEdge/pull/{pull_number}/commits/{commit_hash})\n"));

review.push_str(&r.choice);

review.push_str("\n\n");

- reviews.push(review);

- log::debug!("Received LLM resp for patch: {}", commit_hash);

+ reviews.push(review.clone());

+ let formatted_review = format!(

+ r#"

](https://user-images.githubusercontent.com/45785633/229329247-16273aec-f89b-4375-bf2b-4ffce5e35a33.png)

## FAQ

+### Customize the bot

+

+The bot's source code is available in the GitHub repo you cloned from the template. Feel free to make changes to the source code (e.g., model, context length, API key and prompts) to fit your own needs. If you need help, [ask in Discord](https://discord.gg/ccZn9ZMfFf)!

+

+### Use GPT4

+

+By default, the bot uses GPT3.5 for code review. If your OpenAI API key has access to GPT4, you can open the `src/github-pr-review.rs` file

+in your cloned source code repo, and change `GPT35Turbo` to `GPT4` in the source code. Commit and push the change back to GitHub.

+The flows.network platform will automatically detect and rebuild the bot from your updated source code.

+

### Use the bot on multiple repos

You can [manually create a new flow](https://flows.network/flow/new) and import the source code repo for the bot (i.e., the repo you cloned from the template). Then, you can use the flow config to specify the `github_owner` and `github_repo` to point to the target repo you need to deploy the bot on. Deploy and authorize access to that target repo.

diff --git a/create.png b/create.png

deleted file mode 100644

index 1cc98bb..0000000

Binary files a/create.png and /dev/null differ

diff --git a/llm.png b/llm.png

deleted file mode 100644

index 9ce98e4..0000000

Binary files a/llm.png and /dev/null differ

diff --git a/src/github-pr-summary.rs b/src/github-pr-summary.rs

index 9d9920e..ee10e8f 100644

--- a/src/github-pr-summary.rs

+++ b/src/github-pr-summary.rs

@@ -1,56 +1,51 @@

use dotenv::dotenv;

use flowsnet_platform_sdk::logger;

use github_flows::{

- event_handler, get_octo, listen_to_event,

+ get_octo, listen_to_event,

+ octocrab::models::events::payload::{IssueCommentEventAction, PullRequestEventAction},

octocrab::models::CommentId,

- octocrab::models::webhook_events::{WebhookEvent, WebhookEventPayload},

- octocrab::models::webhook_events::payload::{IssueCommentWebhookEventAction, PullRequestWebhookEventAction},

- GithubLogin,

+ EventPayload, GithubLogin,

};

-use llmservice_flows::{

- chat::{ChatOptions},

- LLMServiceFlows,

+use openai_flows::{

+ chat::{ChatModel, ChatOptions},

+ OpenAIFlows,

};

use std::env;

+// The soft character limit of the input context size

+// the max token size or word count for GPT4 is 8192

+// the max token size or word count for GPT35Turbo16K is 16384

+static CHAR_SOFT_LIMIT: usize = 30000;

+static MODEL: ChatModel = ChatModel::GPT35Turbo16K;

+// static MODEL : ChatModel = ChatModel::GPT4;

+

#[no_mangle]

#[tokio::main(flavor = "current_thread")]

-pub async fn on_deploy() {

+pub async fn run() -> anyhow::Result<()> {

dotenv().ok();

logger::init();

log::debug!("Running github-pr-summary/main");

let owner = env::var("github_owner").unwrap_or("juntao".to_string());

let repo = env::var("github_repo").unwrap_or("test".to_string());

+ let trigger_phrase = env::var("trigger_phrase").unwrap_or("flows summarize".to_string());

- listen_to_event(&GithubLogin::Default, &owner, &repo, vec!["pull_request", "issue_comment"]).await;

-}

+ let events = vec!["pull_request", "issue_comment"];

+ listen_to_event(&GithubLogin::Default, &owner, &repo, events, |payload| {

+ handler(&owner, &repo, &trigger_phrase, payload)

+ })

+ .await;

-#[event_handler]

-async fn handler(event: Result) {

- dotenv().ok();

- logger::init();

- log::debug!("Running github-pr-summary/main handler()");

+ Ok(())

+}

- let owner = env::var("github_owner").unwrap_or("juntao".to_string());

- let repo = env::var("github_repo").unwrap_or("test".to_string());

- let trigger_phrase = env::var("trigger_phrase").unwrap_or("flows summarize".to_string());

- let llm_api_endpoint = env::var("llm_api_endpoint").unwrap_or("https://api.openai.com/v1".to_string());

- let llm_model_name = env::var("llm_model_name").unwrap_or("gpt-4o".to_string());

- let llm_ctx_size = env::var("llm_ctx_size").unwrap_or("16384".to_string()).parse::().unwrap_or(0);

- let llm_api_key = env::var("llm_api_key").unwrap_or("LLAMAEDGE".to_string());

-

- // The soft character limit of the input context size

- // This is measured in chars. We set it to be 2x llm_ctx_size, which is measured in tokens.

- let ctx_size_char : usize = (2 * llm_ctx_size).try_into().unwrap_or(0);

-

- let payload = event.unwrap();

- let mut new_commit : bool = false;

- let (title, pull_number, _contributor) = match payload.specific {

- WebhookEventPayload::PullRequest(e) => {

- if e.action == PullRequestWebhookEventAction::Opened {

+async fn handler(owner: &str, repo: &str, trigger_phrase: &str, payload: EventPayload) {

+ let mut new_commit: bool = false;

+ let (title, pull_number, _contributor) = match payload {

+ EventPayload::PullRequestEvent(e) => {

+ if e.action == PullRequestEventAction::Opened {

log::debug!("Received payload: PR Opened");

- } else if e.action == PullRequestWebhookEventAction::Synchronize {

+ } else if e.action == PullRequestEventAction::Synchronize {

new_commit = true;

log::debug!("Received payload: PR Synced");

} else {

@@ -64,8 +59,8 @@ async fn handler(event: Result) {

p.user.unwrap().login,

)

}

- WebhookEventPayload::IssueComment(e) => {

- if e.action == IssueCommentWebhookEventAction::Deleted {

+ EventPayload::IssueCommentEvent(e) => {

+ if e.action == IssueCommentEventAction::Deleted {

log::debug!("Deleted issue comment");

return;

}

@@ -77,12 +72,12 @@ async fn handler(event: Result) {

// return;

// }

// TODO: Makeshift but operational

- if body.starts_with("Hello, I am a [PR summary agent]") {

+ if body.starts_with("Hello, I am a [code review bot]") {

log::info!("Ignore comment via bot");

return;

};

- if !body.to_lowercase().starts_with(&trigger_phrase.to_lowercase()) {

+ if !body.to_lowercase().contains(&trigger_phrase.to_lowercase()) {

log::info!("Ignore the comment without the magic words");

return;

}

@@ -93,14 +88,17 @@ async fn handler(event: Result) {

};

let octo = get_octo(&GithubLogin::Default);

- let issues = octo.issues(owner.clone(), repo.clone());

+ let issues = octo.issues(owner, repo);

let mut comment_id: CommentId = 0u64.into();

if new_commit {

- // Find the first "Hello, I am a [PR summary agent]" comment to update

+ // Find the first "Hello, I am a [code review bot]" comment to update

match issues.list_comments(pull_number).send().await {

Ok(comments) => {

for c in comments.items {

- if c.body.unwrap_or_default().starts_with("Hello, I am a [PR summary agent]") {

+ if c.body

+ .unwrap_or_default()

+ .starts_with("Hello, I am a [code review bot]")

+ {

comment_id = c.id;

break;

}

@@ -113,7 +111,7 @@ async fn handler(event: Result) {

}

} else {

// PR OPEN or Trigger phrase: create a new comment

- match issues.create_comment(pull_number, "Hello, I am a [PR summary agent](https://github.com/flows-network/github-pr-summary/) on [flows.network](https://flows.network/).\n\nIt could take a few minutes for me to analyze this PR. Relax, grab a cup of coffee and check back later. Thanks!").await {

+ match issues.create_comment(pull_number, "Hello, I am a [code review bot](https://github.com/flows-network/github-pr-summary/) on [flows.network](https://flows.network/).\n\nIt could take a few minutes for me to analyze this PR. Relax, grab a cup of coffee and check back later. Thanks!").await {

Ok(comment) => {

comment_id = comment.id;

}

@@ -123,9 +121,11 @@ async fn handler(event: Result) {

}

}

}

- if comment_id == 0u64.into() { return; }

+ if comment_id == 0u64.into() {

+ return;

+ }

- let pulls = octo.pulls(owner.clone(), repo.clone());

+ let pulls = octo.pulls(owner, repo);

let patch_as_text = pulls.get_patch(pull_number).await.unwrap();

let mut current_commit = String::new();

let mut commits: Vec<String> = Vec::new();

@@ -139,8 +139,8 @@ async fn handler(event: Result) {

// Start a new commit

current_commit.clear();

}

- // Append the line to the current commit if the current commit is less than ctx_size_char

- if current_commit.len() < ctx_size_char {

+ // Append the line to the current commit if the current commit is less than CHAR_SOFT_LIMIT

+ if current_commit.len() < CHAR_SOFT_LIMIT {

current_commit.push_str(line);

current_commit.push('\n');

}

@@ -156,68 +156,75 @@ async fn handler(event: Result) {

}

let chat_id = format!("PR#{pull_number}");

- let system = &format!("You are an experienced software developer. You will act as a reviewer for a GitHub Pull Request titled \"{}\". Please be as concise as possible while being accurate.", title);

- let mut lf = LLMServiceFlows::new(&llm_api_endpoint);

- lf.set_api_key(&llm_api_key);

- // lf.set_retry_times(3);

+ let system = &format!("You are an experienced software developer. You will act as a reviewer for a GitHub Pull Request titled \"{}\".", title);

+ let mut openai = OpenAIFlows::new();

+ openai.set_retry_times(3);

let mut reviews: Vec<String> = Vec::new();

+ let mut reviews_md_str: Vec<String> = Vec::new();

let mut reviews_text = String::new();

for (_i, commit) in commits.iter().enumerate() {

let commit_hash = &commit[5..45];

- log::debug!("Sending patch to LLM: {}", commit_hash);

+ log::debug!("Sending patch to OpenAI: {}", commit_hash);

let co = ChatOptions {

- model: Some(&llm_model_name),

- token_limit: llm_ctx_size,

+ model: MODEL,

restart: true,

system_prompt: Some(system),

+ max_tokens: Some(200),

..Default::default()

};

- let question = "The following is a GitHub patch. Please summarize the key changes in concise points. Start with the most important findings.\n\n".to_string() + truncate(commit, ctx_size_char);

- match lf.chat_completion(&chat_id, &question, &co).await {

+ let question = "The following is a GitHub patch. Please summarize the key changes and identify potential problems. Start with the most important findings.\n\n".to_string() + truncate(commit, CHAR_SOFT_LIMIT);

+ match openai.chat_completion(&chat_id, &question, &co).await {

Ok(r) => {

- if reviews_text.len() < ctx_size_char {

+ if reviews_text.len() < CHAR_SOFT_LIMIT {

reviews_text.push_str("------\n");

reviews_text.push_str(&r.choice);

reviews_text.push_str("\n");

}

let mut review = String::new();

- review.push_str(&format!("### [Commit {commit_hash}](https://github.com/{owner}/{repo}/pull/{pull_number}/commits/{commit_hash})\n"));

+ // review.push_str(&format!("### [Commit {commit_hash}](https://github.com/WasmEdge/WasmEdge/pull/{pull_number}/commits/{commit_hash})\n"));

review.push_str(&r.choice);

review.push_str("\n\n");

- reviews.push(review);

- log::debug!("Received LLM resp for patch: {}", commit_hash);

+ reviews.push(review.clone());

+ let formatted_review = format!(

+ r#"<details>

+ <summary><a href="https://github.com/{}/{}/pull/{}/commits/{}">Commit {}</a></summary>

+ {} </details>"#,

+ owner, repo, pull_number, commit_hash, commit_hash, review

+ );

+ reviews_md_str.push(formatted_review);

+ log::debug!("Received OpenAI resp for patch: {}", commit_hash);

}

Err(e) => {

- log::error!("LLM returned an error for commit {commit_hash}: {}", e);

+ log::error!("OpenAI returned an error for commit {commit_hash}: {}", e);

}

}

}

let mut resp = String::new();

- resp.push_str("Hello, I am a [PR summary agent](https://github.com/flows-network/github-pr-summary/) on [flows.network](https://flows.network/). Here are my reviews of code commits in this PR.\n\n------\n\n");

+ resp.push_str("Hello, I am a [code review bot](https://github.com/flows-network/github-pr-summary/) on [flows.network](https://flows.network/). Here are my reviews of code commits in this PR.\n\n------\n\n");

if reviews.len() > 1 {

- log::debug!("Sending all reviews to LLM for summarization");

+ log::debug!("Sending all reviews to OpenAI for summarization");

let co = ChatOptions {

- model: Some(&llm_model_name),

- token_limit: llm_ctx_size,

+ model: MODEL,

restart: true,

system_prompt: Some(system),

+ max_tokens: Some(4000),

..Default::default()

};

- let question = "Here is a set of summaries for source code patches in this PR. Each summary starts with a ------ line. Write an overall summary. Present the potential issues and errors first, following by the most important findings, in your summary.\n\n".to_string() + &reviews_text;

- match lf.chat_completion(&chat_id, &question, &co).await {

+ let question = "Here is a set of summaries for software source code patches. Each summary starts with a ------ line. Please write an overall summary considering all the individual summary. Please present the potential issues and errors first, following by the most important findings, in your summary.\n\n".to_string() + &reviews_text;

+ match openai.chat_completion(&chat_id, &question, &co).await {

Ok(r) => {

resp.push_str(&r.choice);

resp.push_str("\n\n## Details\n\n");

log::debug!("Received the overall summary");

}

Err(e) => {

- log::error!("LLM returned an error for the overall summary: {}", e);

+ log::error!("OpenAI returned an error for the overall summary: {}", e);

}

}

}

- for (_i, review) in reviews.iter().enumerate() {

+ for (_i, review) in reviews_md_str.iter().enumerate() {

resp.push_str(review);

}
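The handler walks the text returned by `pulls.get_patch` line by line. It starts a new chunk at each commit and only appends lines while the chunk is shorter than `CHAR_SOFT_LIMIT`. It then reads the hash with `&commit[5..45]`. Part of that loop is elided from the hunks above, so the following is only a sketch. It assumes the patch uses `git format-patch` mbox form, where each commit begins with a line `From <40-hex-sha> Mon Sep 17 00:00:00 2001`. `split_commits`, `is_commit_header`, `commit_hash` and `truncate_at_char_boundary` are hypothetical names. The last one stands in for the diff's `truncate` helper, whose body is not shown.

```rust
/// Assumed commit header shape: "From " followed by a 40-char hex SHA.
fn is_commit_header(line: &str) -> bool {
    line.starts_with("From ")
        && line
            .get(5..45)
            .map_or(false, |h| h.chars().all(|c| c.is_ascii_hexdigit()))
}

/// Split a format-patch style mbox into one String per commit.
/// Lines are appended only while the chunk is under `soft_limit` bytes,
/// so a chunk can exceed the limit by at most one line.
fn split_commits(patch: &str, soft_limit: usize) -> Vec<String> {
    let mut commits: Vec<String> = Vec::new();
    let mut current = String::new();
    for line in patch.lines() {
        if is_commit_header(line) {
            // New commit: flush the previous chunk, if any.
            if !current.is_empty() {
                commits.push(current.clone());
            }
            current.clear();
        }
        if current.len() < soft_limit {
            current.push_str(line);
            current.push('\n');
        }
    }
    if !current.is_empty() {
        commits.push(current);
    }
    commits
}

/// Same bytes as `&commit[5..45]`, but returns None instead of panicking
/// on a chunk that is too short or not split on a char boundary.
fn commit_hash(commit: &str) -> Option<&str> {
    commit.get(5..45)
}

/// Cut `s` to at most `max_bytes` without splitting a UTF-8 character,
/// which a plain `&s[..max_bytes]` slice would panic on.
fn truncate_at_char_boundary(s: &str, max_bytes: usize) -> &str {
    if s.len() <= max_bytes {
        return s;
    }
    let mut end = max_bytes;
    while !s.is_char_boundary(end) {
        end -= 1; // index 0 is always a boundary, so this terminates
    }
    &s[..end]
}

fn main() {
    let sha1 = "a".repeat(40);
    let sha2 = "b".repeat(40);
    let patch = format!(
        "From {sha1} Mon Sep 17 00:00:00 2001\nSubject: [PATCH 1/2] first\n+line one\n\
         From {sha2} Mon Sep 17 00:00:00 2001\nSubject: [PATCH 2/2] second\n+line two\n"
    );
    let commits = split_commits(&patch, 30_000);
    assert_eq!(commits.len(), 2);
    assert_eq!(commit_hash(&commits[0]), Some(sha1.as_str()));
    assert_eq!(commit_hash(&commits[1]), Some(sha2.as_str()));
    println!("{}", truncate_at_char_boundary(&commits[0], 20));
}
```

The soft limit caps the size of each request. Very large commits are cut short rather than rejected by the model's context window.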

diff --git a/target.png b/target.png

deleted file mode 100644

index d035a79..0000000

Binary files a/target.png and /dev/null differ

](create.png)

-

-### 2 Connect to an LLM

+### 2 Add your OpenAI API key

-Configure the LLM API service you want to use to summarize the PRs.

+You will now set up OpenAI integration. Click on **Connect**, enter your key and give it a name.

-* `llm_api_endpoint` : The OpenAI compatible API service endpoint for the LLM to conduct code reviews. We recommend the [Codestral Gaia node](https://github.com/GaiaNet-AI/node-configs/tree/main/codestral-0.1-22b): `https://codestral.us.gaianet.network/v1`

-* `llm_model_name` : The model name required by the API service. We recommend the following model name for the above public Gaia node: `codestral`

-* `llm_ctx_size` : The context window size of the selected model. The Codestral model has a 32k context window, which is `32768`.

-* `llm_api_key` : Optional: The API key if required by the LLM service provider. It is not required for the Gaia node.


+[ ](https://user-images.githubusercontent.com/45785633/222973214-ecd052dc-72c2-4711-90ec-db1ec9d5f24e.png)

-Click on the **Continue** button.

+Close the tab and go back to the flows.network page once you are done. Click on **Continue**.

-### 3 Connect to GitHub for access to the target repo

+### 3 Configure the bot to access GitHub

-Next, you will tell the bot which GitHub repo it needs to monitor for upcoming PRs to summarize.

+Next, you will tell the bot which GitHub repo it needs to monitor for upcoming PRs to review.

-* `github_owner`: GitHub org for the repo you want to summarize PRs

-* `github_repo` : GitHub repo you want to summarize PRs

+* `github_owner`: GitHub org for the repo *you want to deploy the 🤖 on*.

+* `github_repo` : GitHub repo *you want to deploy the 🤖 on*.

-> Let's see an example. You would like to deploy the bot to summarize PRs on `WasmEdge/wasmedge_hyper_demo` repo. Here `github_owner = WasmEdge` and `github_repo = wasmedge_hyper_demo`.

+> Let's see an example. You would like to deploy the bot to review code in PRs on `WasmEdge/wasmedge_hyper_demo` repo. Here `github_owner = WasmEdge` and `github_repo = wasmedge_hyper_demo`.

-Finally, the GitHub repo will need to give you access so that the flow function can access and summarize its PRs!

-Click on the **Connect** or **+ Add new authentication** button to give the function access to the GitHub repo. You'll be redirected to a new page where you must grant flows.network permission to the repo.

+Click on the **Connect** or **+ Add new authentication** button to give the function access to the GitHub repo to deploy the 🤖. You'll be redirected to a new page where you must grant [flows.network](https://flows.network/) permission to the repo.


-[ ](llm.png)

+[

](llm.png)

+[ ](target.png)

+[

](target.png)

+[ ](https://user-images.githubusercontent.com/45785633/229329247-16273aec-f89b-4375-bf2b-4ffce5e35a33.png)

## FAQ

+### Customize the bot

+

+The bot's source code is available in the GitHub repo you cloned from the template. Feel free to make changes to the source code (e.g., model, context length, API key and prompts) to fit your own needs. If you need help, [ask in Discord](https://discord.gg/ccZn9ZMfFf)!

+

+### Use GPT4

+

+By default, the bot uses GPT3.5 for code review. If your OpenAI API key has access to GPT4, you can open the `src/github-pr-summary.rs` file

+in your cloned source code repo, and change `GPT35Turbo16K` to `GPT4` in the source code. Commit and push the change back to GitHub.

+The flows.network platform will automatically detect and rebuild the bot from your updated source code.
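`CHAR_SOFT_LIMIT` counts characters, but the model limits noted next to it are in tokens. When switching models, you could estimate a new limit with OpenAI's rough rule of thumb that one token is about four characters of English text. Source code often packs fewer characters per token, so treat the ratio as an assumption. The sketch below also reserves the reply budget (`max_tokens` is 200 per commit and 4000 for the overall summary in the diff) plus an assumed 200 tokens of prompt overhead. `soft_char_limit` is a hypothetical helper.

```rust
/// Hypothetical helper: estimate how many prompt characters fit in a model's
/// context window after reserving room for the reply and fixed prompt text.
/// Assumes ~4 characters per token, a rough heuristic for English text.
fn soft_char_limit(context_tokens: usize, reply_tokens: usize, overhead_tokens: usize) -> usize {
    const CHARS_PER_TOKEN: usize = 4;
    context_tokens
        .saturating_sub(reply_tokens)
        .saturating_sub(overhead_tokens)
        * CHARS_PER_TOKEN
}

fn main() {
    // Per-commit call: max_tokens = 200; 200 tokens of overhead is an assumption.
    let per_commit_16k = soft_char_limit(16_384, 200, 200);
    let per_commit_gpt4 = soft_char_limit(8_192, 200, 200);
    // Overall summary call: max_tokens = 4000.
    let summary_16k = soft_char_limit(16_384, 4_000, 200);
    let summary_gpt4 = soft_char_limit(8_192, 4_000, 200);
    println!("per-commit: GPT35Turbo16K={per_commit_16k}, GPT4={per_commit_gpt4}");
    println!("summary:    GPT35Turbo16K={summary_16k}, GPT4={summary_gpt4}");
}
```

Under these assumptions, the overall summary call on an 8192-token GPT4 model leaves about 15968 characters for `reviews_text`. That is roughly half of the current `CHAR_SOFT_LIMIT` of 30000, so lowering the limit may be needed after switching to GPT4.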

+

### Use the bot on multiple repos

You can [manually create a new flow](https://flows.network/flow/new) and import the source code repo for the bot (i.e., the repo you cloned from the template). Then, you can use the flow config to specify the `github_owner` and `github_repo` to point to the target repo you need to deploy the bot on. Deploy and authorize access to that target repo.

diff --git a/create.png b/create.png

deleted file mode 100644

index 1cc98bb..0000000

Binary files a/create.png and /dev/null differ

diff --git a/llm.png b/llm.png

deleted file mode 100644

index 9ce98e4..0000000

Binary files a/llm.png and /dev/null differ

diff --git a/src/github-pr-summary.rs b/src/github-pr-summary.rs

index 9d9920e..ee10e8f 100644

--- a/src/github-pr-summary.rs

+++ b/src/github-pr-summary.rs

@@ -1,56 +1,51 @@

use dotenv::dotenv;

use flowsnet_platform_sdk::logger;

use github_flows::{

- event_handler, get_octo, listen_to_event,

+ get_octo, listen_to_event,

+ octocrab::models::events::payload::{IssueCommentEventAction, PullRequestEventAction},

octocrab::models::CommentId,

- octocrab::models::webhook_events::{WebhookEvent, WebhookEventPayload},

- octocrab::models::webhook_events::payload::{IssueCommentWebhookEventAction, PullRequestWebhookEventAction},

- GithubLogin,

+ EventPayload, GithubLogin,

};

-use llmservice_flows::{

- chat::{ChatOptions},

- LLMServiceFlows,

+use openai_flows::{

+ chat::{ChatModel, ChatOptions},

+ OpenAIFlows,

};

use std::env;

+// The soft character limit of the input context size

+// the max context length for GPT4 is 8192 tokens

+// the max context length for GPT35Turbo16K is 16384 tokens

+static CHAR_SOFT_LIMIT: usize = 30000;

+static MODEL: ChatModel = ChatModel::GPT35Turbo16K;

+// static MODEL : ChatModel = ChatModel::GPT4;

+

#[no_mangle]

#[tokio::main(flavor = "current_thread")]

-pub async fn on_deploy() {

+pub async fn run() -> anyhow::Result<()> {

dotenv().ok();

logger::init();

log::debug!("Running github-pr-summary/main");

let owner = env::var("github_owner").unwrap_or("juntao".to_string());

let repo = env::var("github_repo").unwrap_or("test".to_string());

+ let trigger_phrase = env::var("trigger_phrase").unwrap_or("flows summarize".to_string());

- listen_to_event(&GithubLogin::Default, &owner, &repo, vec!["pull_request", "issue_comment"]).await;

-}

+ let events = vec!["pull_request", "issue_comment"];

+ listen_to_event(&GithubLogin::Default, &owner, &repo, events, |payload| {

+ handler(&owner, &repo, &trigger_phrase, payload)

+ })

+ .await;

-#[event_handler]

-async fn handler(event: Result
