# StarCoder2-Instruct: Fully Transparent and Permissive Self-Alignment for Code Generation

<p align="left">
    ⭐️ <a href="#about">About</a>
    | 🚀 <a href="#quick-start">Quick start</a>
    | 📚 <a href="#data-generation-pipeline">Data generation</a>
    | 🧑‍💻 <a href="#training-details">Training</a>
    | 📊 <a href="#evaluation-on-evalplus-livecodebench-and-ds-1000">Evaluation</a>
    | ⚠️ <a href="#bias-risks-and-limitations">Limitations</a>
</p>

<!--
> [!WARNING]
> This documentation is still WIP. -->

| 18 | +## About |

| 19 | + |

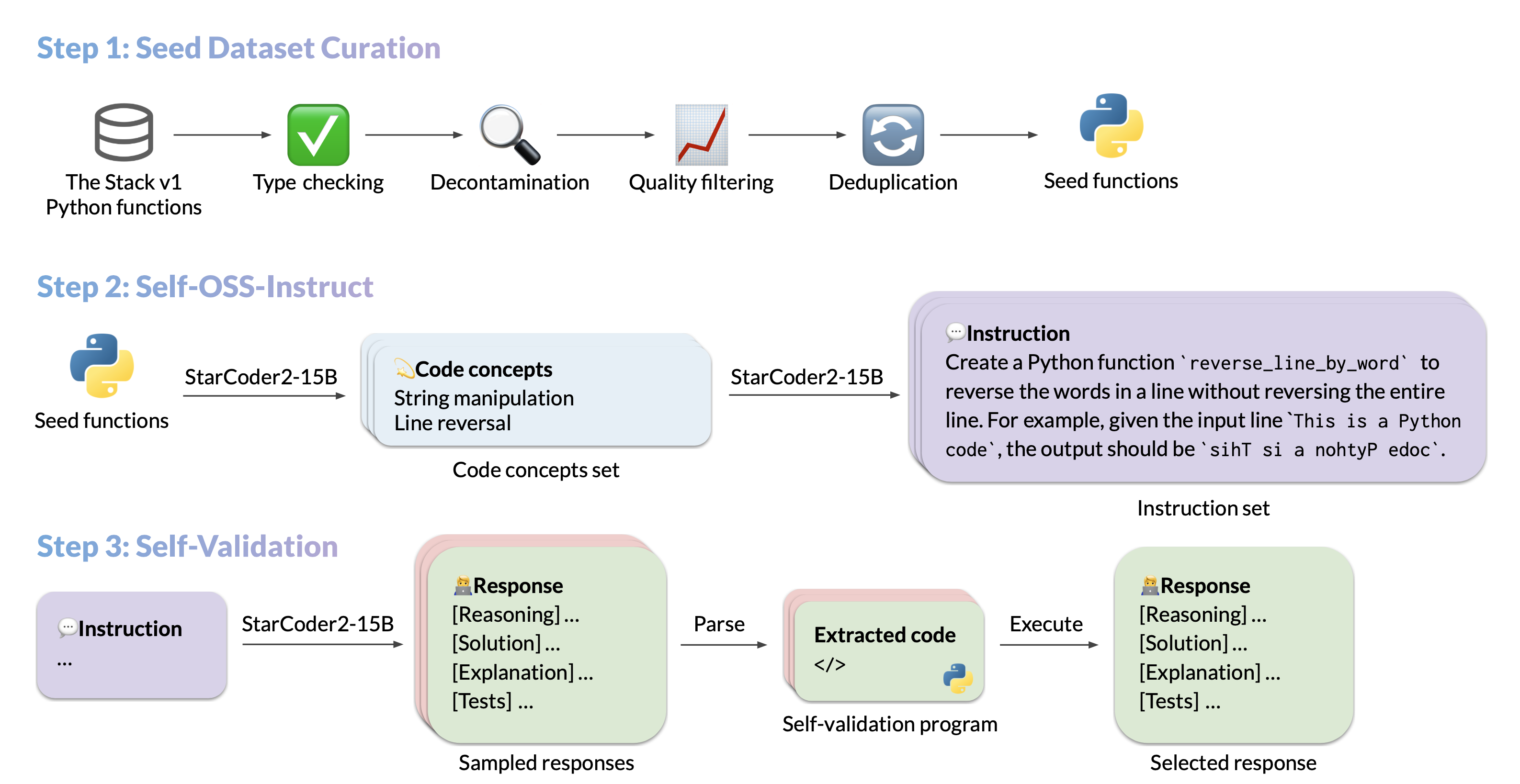

| 20 | +We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs. |

| 21 | + |

- **Model:** [bigcode/starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-15b-instruct-v0.1)
- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)
- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)
- **Authors:**
[Yuxiang Wei](https://yuxiang.cs.illinois.edu),
[Federico Cassano](https://federico.codes/),
[Jiawei Liu](https://jw-liu.xyz),
[Yifeng Ding](https://yifeng-ding.com),
[Naman Jain](https://naman-ntc.github.io),
[Harm de Vries](https://www.harmdevries.com),
[Leandro von Werra](https://twitter.com/lvwerra),
[Arjun Guha](https://www.khoury.northeastern.edu/home/arjunguha/main/home/),
[Lingming Zhang](https://lingming.cs.illinois.edu).

## Quick start

Here is an example to get started with StarCoder2-15B-Instruct-v0.1 using the [transformers](https://huggingface.co/docs/transformers/index) library:

```python
import transformers
import torch

pipeline = transformers.pipeline(
    model="bigcode/starcoder2-15b-instruct-v0.1",
    task="text-generation",
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

def respond(instruction: str, response_prefix: str) -> str:
    messages = [{"role": "user", "content": instruction}]
    prompt = pipeline.tokenizer.apply_chat_template(messages, tokenize=False)
    # An optional prefix steers the start of the model's answer.
    prompt += response_prefix

    # Stop at EOS or when the model starts a new "###" section.
    terminators = [
        pipeline.tokenizer.eos_token_id,
        pipeline.tokenizer.convert_tokens_to_ids("###"),
    ]

    result = pipeline(
        prompt,
        max_length=256,
        num_return_sequences=1,
        do_sample=False,
        eos_token_id=terminators,
        pad_token_id=pipeline.tokenizer.eos_token_id,
        truncation=True,
    )
    # Drop the echoed prompt, cut at the first "###", and re-attach the prefix.
    response = response_prefix + result[0]["generated_text"][len(prompt) :].split("###")[0].rstrip()
    return response


instruction = "Write a quicksort function in Python with type hints and a 'less_than' parameter for custom sorting criteria."
response_prefix = ""

print(respond(instruction, response_prefix))
```
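
The post-processing at the end of `respond` (drop the echoed prompt, cut at the first `###`, strip trailing whitespace, re-attach the prefix) can be checked without loading the 15B model. The sketch below factors it into a pure function; `extract_response` is a hypothetical helper name, and it assumes, as `respond` does, that `generated_text` begins with the full prompt (which already ends with the response prefix).

```python
def extract_response(generated_text: str, prompt: str, response_prefix: str = "") -> str:
    """Reproduce the post-processing step of `respond` (hypothetical helper).

    Assumes `generated_text` starts with `prompt`, and that `prompt` already
    ends with `response_prefix`, as in `respond`.
    """
    # Drop the echoed prompt so only newly generated text remains.
    completion = generated_text[len(prompt):]
    # The model may start a new "###" section; keep only the first answer.
    answer = completion.split("###")[0].rstrip()
    # Re-attach the prefix that was fed to the model as part of the prompt.
    return response_prefix + answer


if __name__ == "__main__":
    prefix = "def quicksort("
    prompt = "<chat template output>" + prefix
    generated = prompt + "arr):\n    return sorted(arr)\n\n### Instruction\nignored"
    print(extract_response(generated, prompt, prefix))
```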

## Data generation pipeline

> Run `pip install -e .` first to install the package locally. Check [seed_gathering](seed_gathering/) for details on how we collected the seeds.

By default, we use an in-memory vLLM engine for data generation, but we also provide an option to use vLLM's [OpenAI compatible server](https://docs.vllm.ai/en/latest/serving/openai_compatible_server.html) instead.

Set `CUDA_VISIBLE_DEVICES=...` to specify the GPU devices to use for the vLLM engine.

To maximize data generation efficiency, we recommend invoking the script multiple times with different `seed_code_start_index` and `max_new_data` values, each with a vLLM engine running on a separate GPU set. For example, for a 100k seed dataset on a 2-GPU machine, you can run 2 processes that each generate 50k samples: one with `CUDA_VISIBLE_DEVICES=0` and `--seed_code_start_index 0 --max_new_data 50000`, the other with `CUDA_VISIBLE_DEVICES=1` and `--seed_code_start_index 50000 --max_new_data 50000`.
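
The sharding arithmetic in the example above generalizes to any seed count and GPU list. This is a minimal sketch, assuming one generated sample per seed (as with `--num_sample_per_request 1`) and contiguous, non-overlapping seed ranges; `plan_shards` is a hypothetical helper, and the remaining `self_ossinstruct.py` flags are left as `...`.

```python
import math


def plan_shards(num_seeds: int, gpu_ids: list[int]) -> list[tuple[int, int, int]]:
    """Split seeds [0, num_seeds) into contiguous chunks, one per GPU.

    Returns (gpu_id, seed_code_start_index, max_new_data) triples.
    """
    if not gpu_ids:
        raise ValueError("need at least one GPU")
    chunk = math.ceil(num_seeds / len(gpu_ids))
    shards = []
    for i, gpu in enumerate(gpu_ids):
        start = i * chunk
        if start >= num_seeds:
            break  # more GPUs than chunks: leave the rest idle
        shards.append((gpu, start, min(chunk, num_seeds - start)))
    return shards


if __name__ == "__main__":
    for gpu, start, count in plan_shards(100_000, [0, 1]):
        print(
            f"CUDA_VISIBLE_DEVICES={gpu} python src/star_align/self_ossinstruct.py "
            f"--seed_code_start_index {start} --max_new_data {count} ..."
        )
```

Each printed line is meant to run as its own process, so that every vLLM engine gets a disjoint GPU set.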

<details>

<summary>Click to see how to run with vLLM's OpenAI compatible API</summary>

To do so, make sure the vLLM server is running and the associated `openai` environment variables are set.

For example, you can start a vLLM server with `docker`:

```shell
docker run --gpus '"device=0"' \
    -v $HF_HOME:/root/.cache/huggingface \
    -p 10000:8000 \
    --ipc=host \
    vllm/vllm-openai:v0.3.3 \
    --model bigcode/starcoder2-15b \
    --tensor-parallel-size 1 --dtype bfloat16
```

Then set the environment variables as follows:

```shell
export OPENAI_API_KEY="EMPTY"
export OPENAI_BASE_URL="http://localhost:10000/v1/"
```

You will also need to set `--use_vllm_server True` in the following commands.
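
Before launching a long generation run, it can help to confirm that the server answers. The sketch below uses only the Python standard library to build a request for the `/completions` route of the OpenAI Completions API, which vLLM's server implements, reading the two environment variables set above. `build_completion_request` is a hypothetical helper; this is not how `self_ossinstruct.py` issues its own requests.

```python
import json
import os
import urllib.request


def build_completion_request(
    prompt: str,
    model: str = "bigcode/starcoder2-15b",
    max_tokens: int = 256,
    temperature: float = 0.7,
) -> urllib.request.Request:
    """Build (but do not send) a POST to the server's /completions endpoint."""
    base_url = os.environ.get("OPENAI_BASE_URL", "http://localhost:10000/v1/")
    api_key = os.environ.get("OPENAI_API_KEY", "EMPTY")
    payload = {
        "model": model,  # must match the --model the server was started with
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
```

With the server running, `urllib.request.urlopen(build_completion_request("def add(a, b):"))` sends the request, and the generated text is in `json.load(response)["choices"][0]["text"]`.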

</details>

<details>

<summary>Snippet to concepts generation</summary>

```shell
MODEL=bigcode/starcoder2-15b
MAX_NEW_DATA=1000000
python src/star_align/self_ossinstruct.py \
    --use_vllm_server False \
    --instruct_mode "S->C" \
    --seed_data_files /path/to/seeds.jsonl \
    --max_new_data $MAX_NEW_DATA \
    --tag concept_gen \
    --temperature 0.7 \
    --seed_code_start_index 0 \
    --model $MODEL \
    --num_fewshots 8 \
    --num_batched_requests 2000 \
    --num_sample_per_request 1
```

</details>

<details>

<summary>Concepts to instruction generation</summary>

```shell
MODEL=bigcode/starcoder2-15b
MAX_NEW_DATA=1000000
python src/star_align/self_ossinstruct.py \
    --instruct_mode "C->I" \
    --seed_data_files /path/to/concepts.jsonl \
    --max_new_data $MAX_NEW_DATA \
    --tag instruction_gen \
    --temperature 0.7 \
    --seed_code_start_index 0 \
    --model $MODEL \
    --num_fewshots 8 \
    --num_sample_per_request 1 \
    --num_batched_requests 2000
```

</details>

<details>

<summary>Instruction to response (with self-validation code) generation</summary>

```shell
MODEL=bigcode/starcoder2-15b
MAX_NEW_DATA=1000000
python src/star_align/self_ossinstruct.py \
    --instruct_mode "I->R" \
    --seed_data_files /path/to/instructions.jsonl \
    --max_new_data $MAX_NEW_DATA \
    --tag response_gen \
    --seed_code_start_index 0 \
    --model $MODEL \
    --num_fewshots 1 \
    --num_batched_requests 500 \
    --num_sample_per_request 10 \
    --temperature 0.7
```

</details>
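
If you drive the three stages from Python rather than copying the shell snippets, the per-stage settings can be kept in one place. This sketch only assembles argument lists from the flags shown in the three commands above; `STAGES` and `stage_command` are hypothetical names, and each stage's input file is passed explicitly because the output paths of earlier stages are not specified here.

```python
import shlex

SCRIPT = "src/star_align/self_ossinstruct.py"

# Per-stage settings copied from the three commands above.
STAGES = {
    "S->C": {"tag": "concept_gen", "num_fewshots": 8, "num_batched_requests": 2000, "num_sample_per_request": 1},
    "C->I": {"tag": "instruction_gen", "num_fewshots": 8, "num_batched_requests": 2000, "num_sample_per_request": 1},
    "I->R": {"tag": "response_gen", "num_fewshots": 1, "num_batched_requests": 500, "num_sample_per_request": 10},
}


def stage_command(
    mode: str,
    seed_file: str,
    model: str = "bigcode/starcoder2-15b",
    max_new_data: int = 1_000_000,
    start_index: int = 0,
) -> list[str]:
    """Return the argv list for one generation stage (suitable for subprocess.run)."""
    cfg = STAGES[mode]
    return [
        "python", SCRIPT,
        "--instruct_mode", mode,
        "--seed_data_files", seed_file,
        "--max_new_data", str(max_new_data),
        "--tag", cfg["tag"],
        "--temperature", "0.7",
        "--seed_code_start_index", str(start_index),
        "--model", model,
        "--num_fewshots", str(cfg["num_fewshots"]),
        "--num_batched_requests", str(cfg["num_batched_requests"]),
        "--num_sample_per_request", str(cfg["num_sample_per_request"]),
    ]


if __name__ == "__main__":
    print(shlex.join(stage_command("S->C", "/path/to/seeds.jsonl")))
```

`subprocess.run(stage_command(...), check=True)` would execute a stage without a shell. When printing for a shell, `shlex.join` quotes `'S->C'`, since an unquoted `>` would be treated as a redirection.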

<details>

<summary>Execution filter</summary>

> **Warning:** Although we implemented reliability guards, we strongly recommend running execution inside the sandbox environment we provide.

<!--
```shell
python src/star_align/execution_filter.py --response_path /path/to/response.jsonl --result_path /path/to/filtered.jsonl
# The current implementation may cause deadlock.
# If you encounter deadlock, manually do `ps -ef | grep execution_filter` and kill the stuck process.
# Note that filtered.jsonl may contain multiple passing samples for the same instruction which needs further selection.
``` -->

To use the Docker container for executing code, you will first need to run `git submodule update --init --recursive` to clone the server. Then run:

```shell
pushd ./src/star_align/code_exec_server
./pull_and_run.sh
popd
python src/star_align/execution_filter.py \
    --response_paths /path/to/response.jsonl \
    --result_path /path/to/filtered.jsonl \
    --max_batched_tasks 10000 \
    --container_server http://127.0.0.1:8000
```

The execution filter produces a flattened list of JSONL entries with a `pass` field indicating whether execution passed. **It also dumps results incrementally and can load a cached partial data file.** You can recover an interrupted execution with:

```shell
python src/star_align/execution_filter.py \
    --response_paths /path/to/response.jsonl* \
    --cache_paths /path/to/filtered.jsonl* \
    --result_path /path/to/filtered-1.jsonl \
    --max_batched_tasks 10000 \
    --container_server http://127.0.0.1:8000
```
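
Because the output is flattened, one instruction can appear several times (the response stage above samples 10 responses per request), and more than one of those samples may pass. Below is a minimal selection sketch that keeps the first passing sample per instruction. It assumes each entry stores its instruction under a key named `instruction` (a hypothetical field name; adjust `key` to the real one); the repository's own selection happens in the sanitization step and may use a different policy.

```python
import json
from typing import Iterable


def select_passing(lines: Iterable[str], key: str = "instruction") -> list[dict]:
    """Keep the first entry with a truthy `pass` field for each distinct `key` value."""
    seen = set()
    selected = []
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines, e.g. a trailing newline
        entry = json.loads(line)
        if not entry.get("pass"):
            continue  # failed execution
        if entry[key] in seen:
            continue  # this instruction already has a passing sample
        seen.add(entry[key])
        selected.append(entry)
    return selected
```

Usage: `with open("/path/to/filtered.jsonl") as f: kept = select_passing(f)`.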

Note that execution can sometimes cause significant slowdowns due to excessive resource consumption. To alleviate this, you can limit the Docker container's CPU usage (e.g., `docker run --cpuset-cpus="0-31"`). You can also do:

```shell
# For example, you can set the command to be `sudo pkill -f '/tmp/codeexec'`
export CLEANUP_COMMAND="the command to execute after each batch"
python src/star_align/execution_filter.py ...
```

The container connection may also be lost during execution. In this case, you can simply use the caching mechanism described above to re-run the script.

</details>

<details>

<summary>Data sanitization and selection</summary>

```shell
# Uncomment to do decontamination
# export MBPP_PATH="/path/to/mbpp.jsonl"
# export DS1000_PATH="/path/to/ds1000_data"
# export DECONTAMINATION=1
./sanitize.sh /path/to/exec-filtered.jsonl /path/to/sanitized.jsonl
```

</details>
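
The decontamination switch above compares generated data against benchmark problems such as MBPP and DS-1000. To illustrate the general idea only, here is a word n-gram overlap check; `sanitize.sh` may use a different method, and `n = 10`, lowercase whitespace tokenization, and the helper names `ngrams`, `build_index`, and `is_contaminated` are assumptions.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lowercased, whitespace-tokenized word n-grams of `text`."""
    tokens = text.lower().split()
    # Texts shorter than n tokens produce no n-grams.
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_index(benchmark_texts: list[str], n: int = 10) -> set[tuple[str, ...]]:
    """Union of all n-grams across benchmark problems, computed once."""
    index: set[tuple[str, ...]] = set()
    for text in benchmark_texts:
        index |= ngrams(text, n)
    return index


def is_contaminated(sample: str, index: set[tuple[str, ...]], n: int = 10) -> bool:
    """True if `sample` shares at least one n-gram with the benchmark index."""
    return not ngrams(sample, n).isdisjoint(index)
```

Building the index once keeps each per-sample check to a single set intersection test.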

## Training Details

> Run `pip install -e .` first to install the package locally. Also install [Flash Attention](https://github.com/Dao-AILab/flash-attention) to speed up training.

### Hyperparameters

- **Optimizer:** Adafactor
- **Learning rate:** 1e-5
- **Epochs:** 4
- **Batch size:** 64
- **Warmup ratio:** 0.05
- **Scheduler:** Linear
- **Sequence length:** 1280
- **Dropout:** Not applied
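
To see what the warmup ratio and linear scheduler mean in optimizer steps, here is a back-of-the-envelope calculation. It assumes exactly 50,000 examples (the dataset is described as 50k), a batch size of 64 reached as 1 example per device times 64 gradient-accumulation steps on one GPU (as in the training script), and step counting that approximates recent Hugging Face `Trainer` versions; exact numbers may differ slightly by version.

```python
import math


def training_steps(num_examples: int, epochs: int, per_device_batch: int = 1,
                   grad_accum: int = 64, num_gpus: int = 1) -> int:
    """Approximate number of optimizer updates over the whole run."""
    micro_batches_per_epoch = math.ceil(num_examples / (per_device_batch * num_gpus))
    # Floor division: a partial accumulation window is not counted as a full step.
    updates_per_epoch = max(micro_batches_per_epoch // grad_accum, 1)
    return updates_per_epoch * epochs


def linear_lr(step: int, total_steps: int, warmup_steps: int, peak_lr: float = 1e-5) -> float:
    """Linear warmup from 0 to peak_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


total = training_steps(50_000, epochs=4)
warmup = math.ceil(total * 0.05)  # warmup ratio 0.05
print(total, warmup)
```

Under these assumptions the run has roughly 3.1k optimizer steps, of which the first ~5% ramp the learning rate up to 1e-5.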

### Hardware

1 x NVIDIA A100 80GB. Yes, you just need one A100 to finetune StarCoder2-15B!

### Script

The following script finetunes StarCoder2-15B-Instruct-v0.1 from the base StarCoder2-15B model. `/path/to/50k-dataset.jsonl` is the JSONL version of the [50k dataset](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k) we generated; you can dump the dataset to JSONL to fit the training script.
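
One way to produce that JSONL file is sketched below. It assumes the `datasets` library is installed, that the rows should be written unchanged, and that the data lives in a `train` split; `to_jsonl_lines`, `write_jsonl`, and `export_hf_dataset` are hypothetical helpers. Calling `Dataset.to_json(path)` from `datasets`, which writes JSON Lines by default, is a shorter alternative.

```python
import json
from typing import Iterable, Iterator


def to_jsonl_lines(rows: Iterable[dict]) -> Iterator[str]:
    """Serialize each row as one JSON object per line."""
    for row in rows:
        yield json.dumps(row, ensure_ascii=False) + "\n"


def write_jsonl(rows: Iterable[dict], path: str) -> int:
    """Write rows to `path` in JSON Lines format and return the row count."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for line in to_jsonl_lines(rows):
            f.write(line)
            count += 1
    return count


def export_hf_dataset(path: str = "/path/to/50k-dataset.jsonl") -> int:
    """Download the 50k dataset from the Hugging Face Hub and dump it to JSONL."""
    from datasets import load_dataset  # requires `pip install datasets`

    ds = load_dataset("bigcode/self-oss-instruct-sc2-exec-filter-50k", split="train")
    return write_jsonl(ds, path)
```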

<details>

<summary>Click to see the training script</summary>

NOTE: StarCoder2-15B sets dropout values to 0.1 by default. We did not apply dropout in finetuning and thus set them to 0.0.

```shell
MODEL_KEY=bigcode/starcoder2-15b
LR=1e-5
EPOCH=4
SEQ_LEN=1280
WARMUP_RATIO=0.05
OUTPUT_DIR=/path/to/output_model
DATASET_FILE=/path/to/50k-dataset.jsonl
accelerate launch -m star_align.train \
    --model_key $MODEL_KEY \
    --model_name_or_path $MODEL_KEY \
    --use_flash_attention True \
    --datafile_paths $DATASET_FILE \
    --output_dir $OUTPUT_DIR \
    --bf16 True \
    --num_train_epochs $EPOCH \
    --max_training_seq_length $SEQ_LEN \
    --pad_to_max_length False \
    --per_device_train_batch_size 1 \
    --gradient_accumulation_steps 64 \
    --group_by_length False \
    --ddp_find_unused_parameters False \
    --logging_steps 1 \
    --log_level info \
    --optim adafactor \
    --max_grad_norm -1 \
    --warmup_ratio $WARMUP_RATIO \
    --learning_rate $LR \
    --lr_scheduler_type linear \
    --attention_dropout 0.0 \
    --residual_dropout 0.0 \
    --embedding_dropout 0.0
```

</details>

| 315 | + |

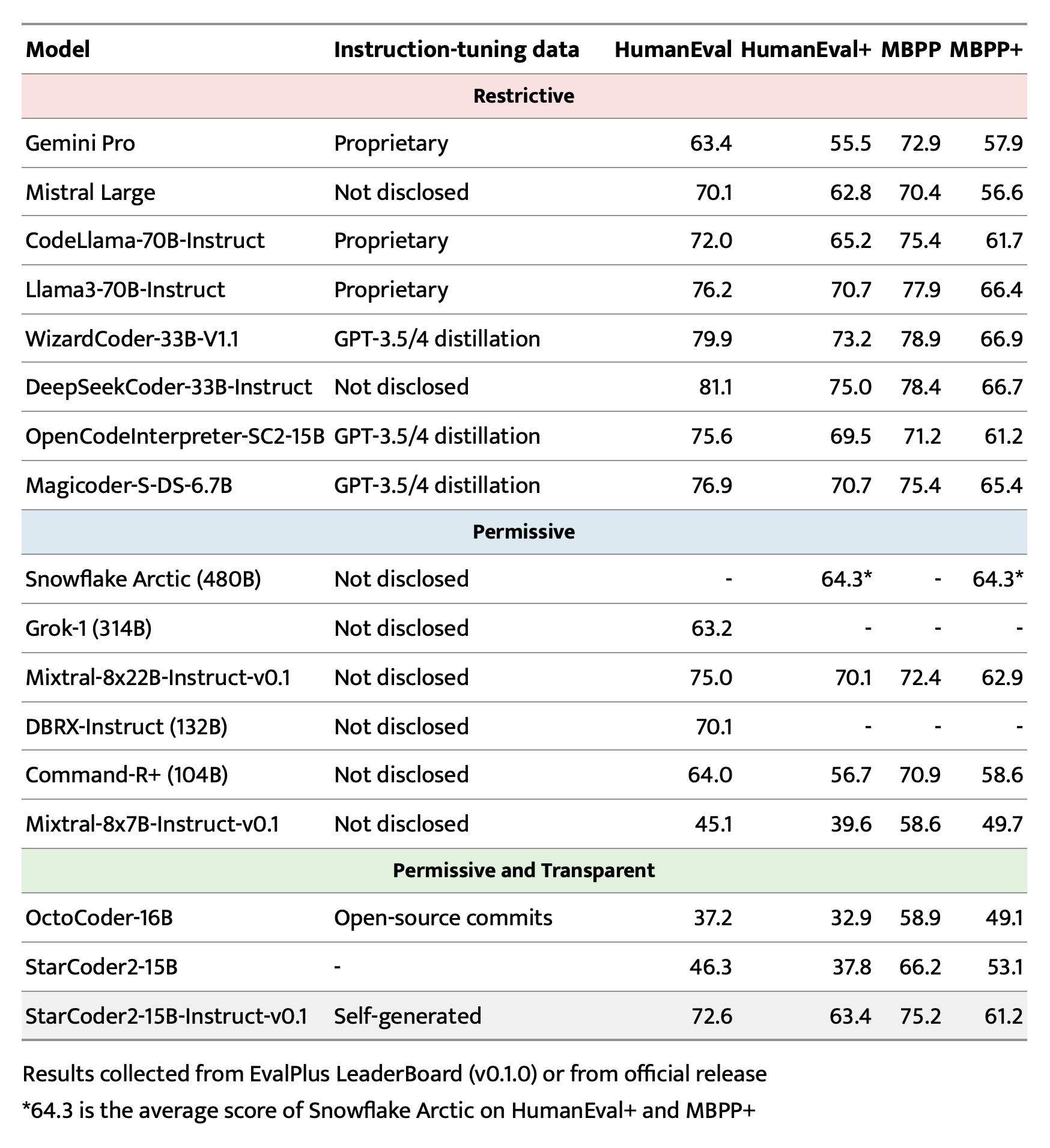

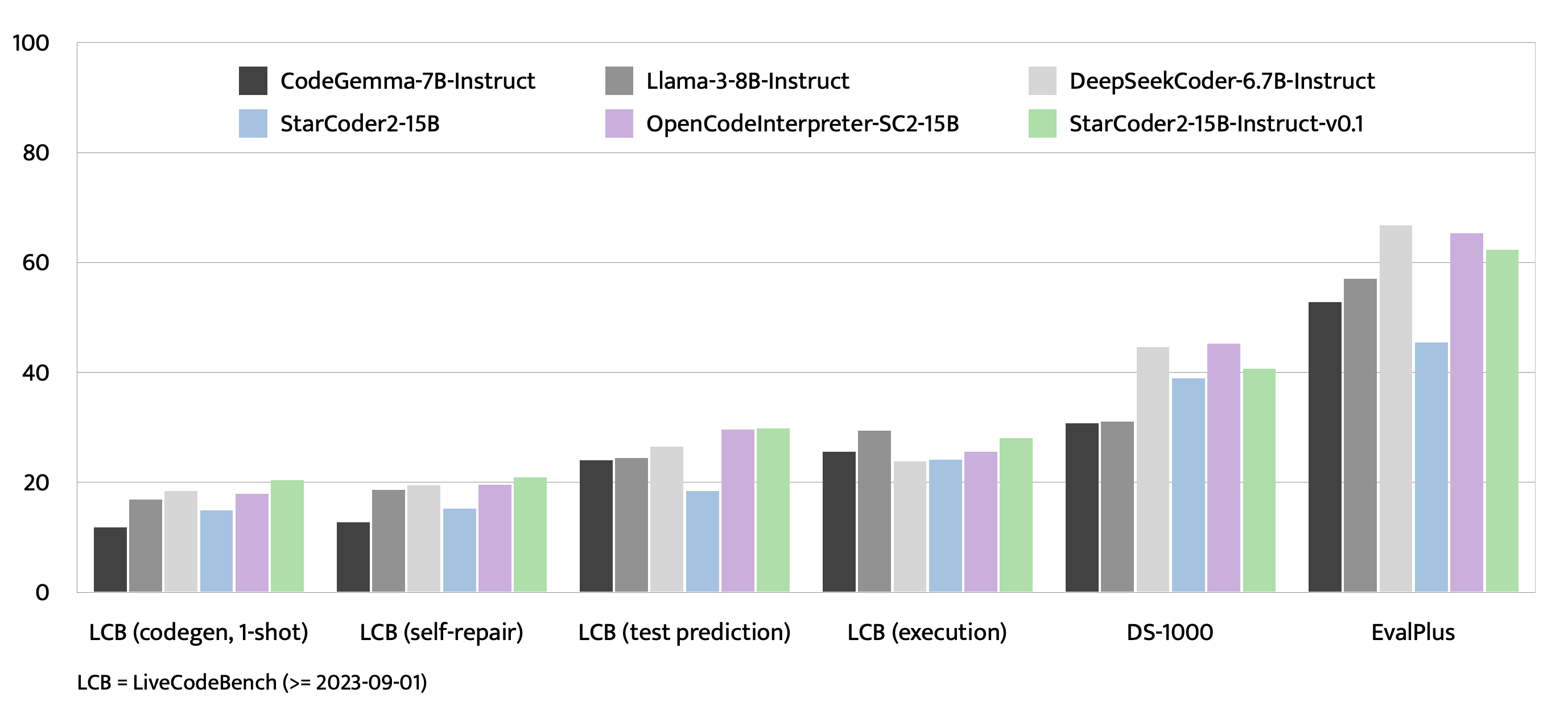

| 316 | +## Evaluation on EvalPlus, LiveCodeBench, and DS-1000 |

| 317 | + |

| 318 | +> Check [evaluation](evaluation/) for more details. |

| 319 | +

|

| 320 | + |

| 321 | + |

| 322 | + |

| 323 | + |

## Bias, Risks, and Limitations

StarCoder2-15B-Instruct-v0.1 is primarily finetuned for Python code generation tasks that can be verified through execution, which may lead to certain biases and limitations. For example, the model might not adhere strictly to instructions that dictate the output format. In these situations, it's beneficial to provide a **response prefix** or a **one-shot example** to steer the model’s output. Additionally, the model may have limitations with other programming languages and out-of-domain coding tasks.

The model also inherits the biases, risks, and limitations of its base StarCoder2-15B model. For more information, please refer to the [StarCoder2-15B model card](https://huggingface.co/bigcode/starcoder2-15b).
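
The response-prefix and one-shot strategies mentioned above can be combined with the Quick start code. The sketch builds the `messages` list with an optional worked example as a prior user/assistant exchange. It assumes the model's chat template accepts alternating `user` and `assistant` turns, and `build_messages` is a hypothetical helper.

```python
from typing import Optional, Tuple


def build_messages(instruction: str, example: Optional[Tuple[str, str]] = None) -> list[dict[str, str]]:
    """Chat messages for apply_chat_template, optionally preceded by one worked example."""
    messages = []
    if example is not None:
        example_instruction, example_response = example
        # The one-shot example is presented as an earlier completed exchange.
        messages.append({"role": "user", "content": example_instruction})
        messages.append({"role": "assistant", "content": example_response})
    messages.append({"role": "user", "content": instruction})
    return messages
```

Pass the result to `pipeline.tokenizer.apply_chat_template(messages, tokenize=False)` in place of the single-message list in `respond`, then append a response prefix as before.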
