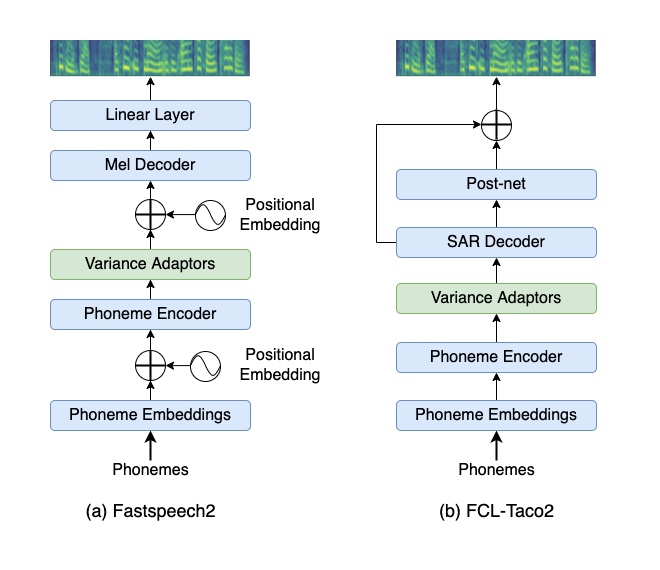

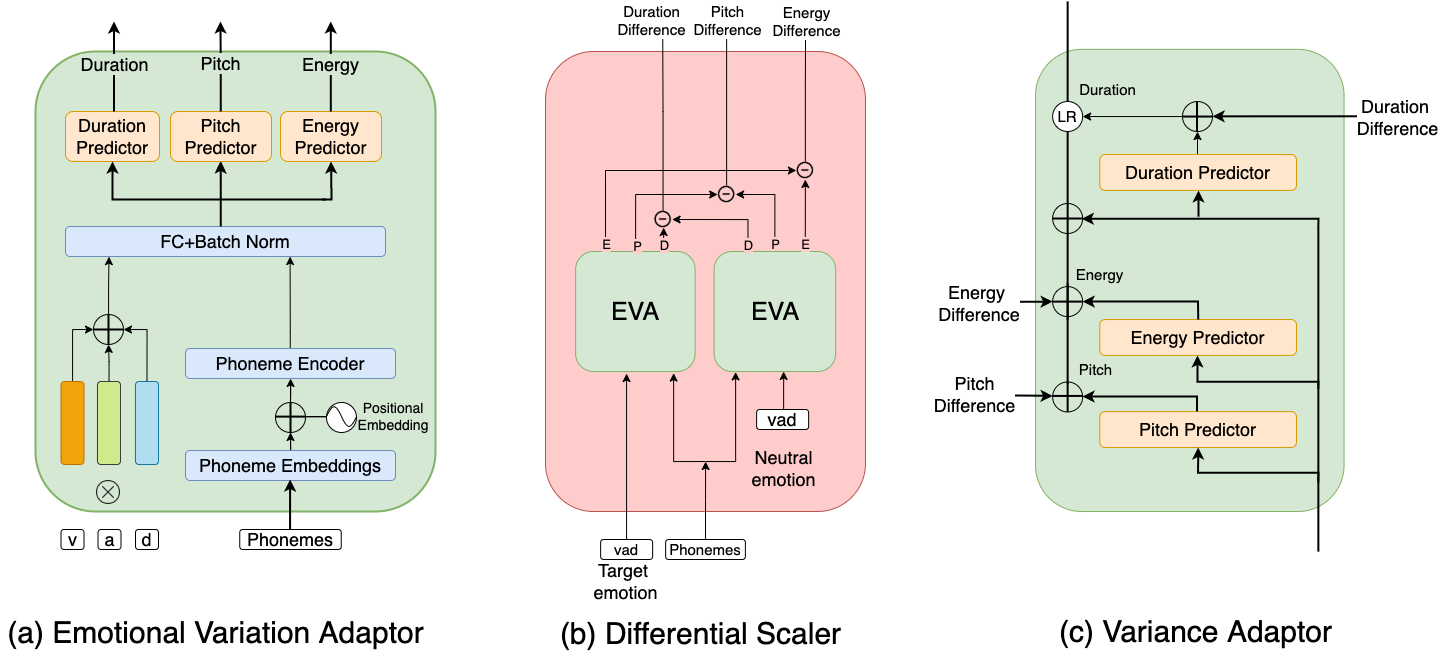

The audio samples shown here are generated with various models. Columns are various models, each row corresponds to single text. We compare with the following prioir art- FastSpeech2, Fcl-Taco2, Cai et al (TTS1) - "Emotion controllable speech synthesis using emotion-unlabeled dataset with the assistance of cross-domain speech emotion recognition." and Sivaprasad et al. (TTS2) - Emotional prosody control for speech generation. These are for just evaluating the naturalness of synthesized speech. For emotive models the samples are shown with randomly picked emotion.Samples FastSpeech2 Fcl-Taco2 FastSpeech2π TTS1 TTS2 Fcl-Taco2 + DS(Our model) FastSpeech2π + DS(Our model) "A friendly waiter taught me a few words of Italian." "I visited museums and sat in public gardens." "Shattered windows and the sound of drums." "He thought it was time to present the present."

Some of the famous dialogues from the movies generated with FastSpeech2π (Without emotion) and FastSpeech2π + DS (With Emotion)

Without emotion

With emotion

Without emotion

With emotion

Without emotion

With emotion

Without emotion

With emotion

1. Remember what she said in my last letter.-30-2321-11-123-2-32. There is no place like home.-3-2321-11-123-2-3

Comparing the emotion control of our models to existing models.Emotions TTS1 TTS2 Fcl-Taco2 + DS FastSpeech2π + DS Angry Fear Sad Happy Neutral

We present the threatre conversations where one of the actor(female) is replaced with a TTS system as shown in section 5.2. We use FastSpeech2π as our baseline. We compare the conversations generated with FastSpeech2π, our model with emotions predicted from "Emotion Recognition in Conversations (ERC)" model and our model with hand picked emotions by senior theatre director.Dialogues FastSpeech2π Our model + predicted emotions Our model + Hand picked emotions Death of a salesman Hell Speed the plow